Redis instance installation

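Installation notes: the script extracts the installation package, compiles and installs it into the specified path, copies the configuration file template into the Redis instance's data directory, and fills in the port number in the template.

Three files are required: (1) the configuration file template, saved as redis.conf in basedir, (2) this shell script, and (3) the installation package (<installfilename>.tar.gz, also in basedir).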

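Argument 1: basedir, the directory containing the Redis installation package

Argument 2: installdir, the installation path of the instance

Argument 3: installfilename, the name of the installation package (without the .tar.gz suffix)

Argument 4: port, the port number of the instance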

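```bash
#!/bin/bash
# Usage: redis_install.sh <basedir> <installdir> <installfilename> <port>
set -e

if [ $# -lt 4 ]; then
    echo "$(basename "$0"): Missing script argument"
    echo "Usage: $(basename "$0") [basedir] [installdir] [installfilename] [port]"
    exit 9
fi

basedir=$1
installdir=$2
installfilename=$3
port=$4

# Match the exact port at the end of the local-address column
# (a bare "grep $port" would also match e.g. 19008 when checking 9008)
PortInUse=$(netstat -anp | awk '{print $4}' | grep ":${port}\$" | wc -l)
if [ "$PortInUse" -gt 0 ]; then
    echo "ERROR: port $port is used by another process!"
    exit 9
fi

# Extract in the foreground so the source tree exists before we cd into it
cd "$basedir"
tar -zxf "$installfilename.tar.gz"
cd "$installfilename"

# Compile and install into the instance directory (binaries land in $installdir/bin)
mkdir -p "$installdir"
make PREFIX="$installdir" install

# Copy the configuration template and substitute the port placeholder
cp "$basedir/redis.conf" "$installdir"
sed -i "s/instance_port/$port/g" "$installdir/redis.conf"

# Start the instance; "daemonize yes" in the template puts it in the background
cd "$installdir"
./bin/redis-server redis.conf >/dev/null 2>&1
```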
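In cluster mode an instance needs two free ports: the client port and the cluster bus port, which by default is the client port plus 10000 (19008 for 9008). The script only checks the client port. The following is a minimal Python sketch of an exact-match check on both ports. It assumes the same `netstat -anp` column layout that the script's `awk '{print $4}'` relies on. `port_in_use` and `busy_ports` are hypothetical helper names.

```python
CLUSTER_BUS_OFFSET = 10000  # default Redis cluster bus port = client port + 10000


def port_in_use(netstat_lines, port):
    """True if some line's 4th column (local address) ends in exactly ':<port>'."""
    suffix = ':' + str(port)
    for line in netstat_lines:
        fields = line.split()
        # Column 4 of `netstat -anp` output is the local address, e.g. 0.0.0.0:9008
        if len(fields) > 3 and fields[3].endswith(suffix):
            return True
    return False


def busy_ports(netstat_lines, port, cluster=True):
    """Return the ports this instance needs that are already taken."""
    needed = [port, port + CLUSTER_BUS_OFFSET] if cluster else [port]
    return [p for p in needed if port_in_use(netstat_lines, p)]
```

On a real host the input could come from `subprocess.run(['netstat', '-anp'], capture_output=True, text=True).stdout.splitlines()`. Comparing the address suffix `:9008` avoids the substring match, where `9008` would also match `0.0.0.0:19008`.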

Configuration file template

```conf
################################## INCLUDES ###################################
# include /path/to/local.conf
# include /path/to/other.conf

################################## MODULES #####################################
# loadmodule /path/to/my_module.so
# loadmodule /path/to/other_module.so

################################## NETWORK #####################################
# Replace your_ip with this host's IP address
bind 127.0.0.1 your_ip
port instance_port
tcp-backlog 511
timeout 0
tcp-keepalive 300

################################# GENERAL #####################################
daemonize yes
supervised no
pidfile ./redis_instance_port.pid
loglevel notice
logfile ./redis_log.log
databases 16
always-show-logo yes

################################ SNAPSHOTTING ################################
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error yes
rdbcompression yes
rdbchecksum yes
dbfilename dump.rdb
dir ./

################################# REPLICATION #################################
# With requirepass set, slaves must authenticate to their master,
# so masterauth must match requirepass
masterauth your_password
replica-serve-stale-data yes
replica-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5
repl-disable-tcp-nodelay no
replica-priority 100

################################## SECURITY ###################################
requirepass your_password

################################### CLIENTS ####################################
# maxclients 10000

############################## MEMORY MANAGEMENT ################################
# maxmemory <bytes>
# maxmemory-policy noeviction
# maxmemory-samples 5
# replica-ignore-maxmemory yes

############################# LAZY FREEING ####################################
lazyfree-lazy-eviction no
lazyfree-lazy-expire no
lazyfree-lazy-server-del no
replica-lazy-flush no

############################## APPEND ONLY MODE ###############################
appendonly no
appendfilename "appendonly.aof"
# appendfsync always
appendfsync everysec
# appendfsync no
no-appendfsync-on-rewrite no
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
aof-load-truncated yes
aof-use-rdb-preamble yes

################################ LUA SCRIPTING ###############################
lua-time-limit 5000

################################ REDIS CLUSTER ###############################
cluster-enabled yes
# cluster-replica-validity-factor 10
# cluster-require-full-coverage yes
# cluster-replica-no-failover no

########################## CLUSTER DOCKER/NAT support ########################

################################## SLOW LOG ###################################
slowlog-log-slower-than 10000
slowlog-max-len 128

################################ LATENCY MONITOR ##############################
latency-monitor-threshold 0

############################# EVENT NOTIFICATION ##############################
notify-keyspace-events ""

############################### ADVANCED CONFIG ###############################
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
stream-node-max-bytes 4096
stream-node-max-entries 100
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit replica 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
# client-query-buffer-limit 1gb
# proto-max-bulk-len 512mb
hz 10
dynamic-hz yes
aof-rewrite-incremental-fsync yes
rdb-save-incremental-fsync yes

########################### ACTIVE DEFRAGMENTATION #######################
# Enabled active defragmentation
# activedefrag yes
# Minimum amount of fragmentation waste to start active defrag
# active-defrag-ignore-bytes 100mb
# Minimum percentage of fragmentation to start active defrag
# active-defrag-threshold-lower 10
# Maximum percentage of fragmentation at which we use maximum effort
# active-defrag-threshold-upper 100
# Minimal effort for defrag in CPU percentage
# active-defrag-cycle-min 5
# Maximal effort for defrag in CPU percentage
# active-defrag-cycle-max 75
# Maximum number of set/hash/zset/list fields that will be processed from
# the main dictionary scan
# active-defrag-max-scan-fields 1000
```
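The template relies on a single placeholder, `instance_port`. The script's `sed -i "s/instance_port/$port/g"` replaces it everywhere, including inside the `pidfile` name. The following Python sketch performs the same substitution, then checks that the rendered file has no leftover placeholder and has cluster mode switched on. `render_conf` and `directives` are hypothetical names, and `TEMPLATE` is a short excerpt of the template rather than the whole file.

```python
PLACEHOLDER = 'instance_port'

# Excerpt of the template (not the full file)
TEMPLATE = """# NETWORK
port instance_port
pidfile ./redis_instance_port.pid
cluster-enabled yes
"""


def render_conf(template_text, port):
    """Same effect as: sed -i "s/instance_port/$port/g" redis.conf"""
    return template_text.replace(PLACEHOLDER, str(port))


def directives(conf_text):
    """Map directive name -> value; skips blank and comment lines, last occurrence wins."""
    result = {}
    for line in conf_text.splitlines():
        line = line.strip()
        if not line or line.startswith('#'):
            continue
        name, _, value = line.partition(' ')
        result[name] = value.strip()
    return result


conf = render_conf(TEMPLATE, 9008)
settings = directives(conf)
assert PLACEHOLDER not in conf, 'placeholder left in config'
assert settings.get('cluster-enabled') == 'yes', 'instance must run in cluster mode'
print(settings['port'], settings['pidfile'])  # 9008 ./redis_9008.pid
```

Because repeated directives such as `save` collapse to their last value, this parser is only suitable for sanity checks, not for round-tripping the file.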

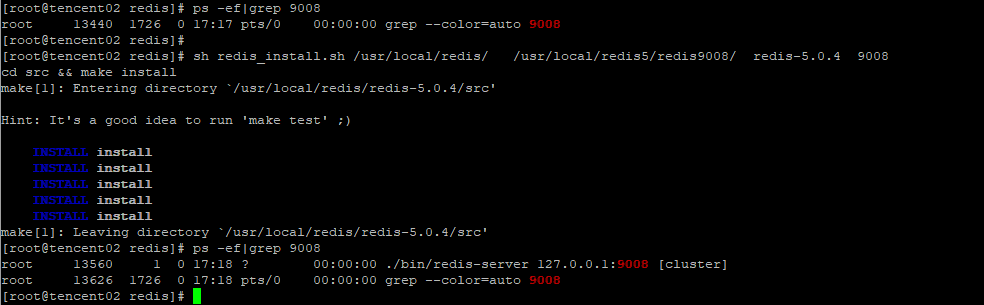

Installation example

```bash
sh redis_install.sh /usr/local/redis/ /usr/local/redis5/redis9008/ redis-5.0.4 9008
```
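The cluster section that follows expects six instances, on ports 9001 to 9006. This sketch builds the six install commands. It assumes the same layout as this example, with one `redis<port>` directory per instance under `/usr/local/redis5/`. `install_commands` is a hypothetical helper, and its defaults simply copy the example's arguments.

```python
def install_commands(ports, basedir='/usr/local/redis/',
                     root='/usr/local/redis5/', package='redis-5.0.4'):
    """Build one redis_install.sh invocation per port, mirroring the example command."""
    return ['sh redis_install.sh {0} {1}redis{2}/ {3} {2}'.format(basedir, root, port, package)
            for port in ports]


for cmd in install_commands(range(9001, 9007)):
    print(cmd)
```

Each string can be run with `subprocess.run(cmd, shell=True, check=True)`. Because `check=True` raises on a non-zero exit status, the loop stops at the first failed install, for example when the script exits with status 9 because the port is taken.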

Redis instance directory structure

Automating Redis cluster setup with Python

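The Python-based automation starts from the initial nodes node_1 to node_6. Every instance must run in cluster mode (cluster-enabled yes). It builds a cluster of three masters and three slaves: it introduces the nodes to each other, assigns the slots, and attaches the slaves, finishing within a few seconds.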
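Assigning the slots means covering all 16384 hash slots with contiguous ranges, one per master. The script hardcodes three ranges: 0-5461, 5462-10922 and 10923-16383. This sketch computes such ranges for any number of masters. For three masters it reproduces the script's boundaries. Other tools may place the remainder differently. `split_slots` is a hypothetical name.

```python
CLUSTER_SLOTS = 16384  # Redis Cluster always has 16384 hash slots


def split_slots(masters, total=CLUSTER_SLOTS):
    """Split slots 0..total-1 into `masters` contiguous, inclusive (start, end) ranges.

    Boundaries are ceil(i * total / masters), so earlier ranges absorb the remainder.
    """
    bounds = [(i * total + masters - 1) // masters for i in range(masters + 1)]
    return [(bounds[i], bounds[i + 1] - 1) for i in range(masters)]


print(split_slots(3))  # [(0, 5461), (5462, 10922), (10923, 16383)]
```

Each range maps onto a single `CLUSTER ADDSLOTS` call, such as `conn.execute_command('CLUSTER ADDSLOTS', *range(start, end + 1))`, rather than one round trip per slot.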

```python
import time

import redis

# master nodes
node_1 = {'host': '127.0.0.1', 'port': 9001, 'password': '***'}
node_2 = {'host': '127.0.0.1', 'port': 9002, 'password': '***'}
node_3 = {'host': '127.0.0.1', 'port': 9003, 'password': '***'}
# slave nodes
node_4 = {'host': '127.0.0.1', 'port': 9004, 'password': '***'}
node_5 = {'host': '127.0.0.1', 'port': 9005, 'password': '***'}
node_6 = {'host': '127.0.0.1', 'port': 9006, 'password': '***'}

# slave_node[i] becomes the slave of master_node[i]
master_node = [node_1, node_2, node_3]
slave_node = [node_4, node_5, node_6]


def connect(node, **kwargs):
    return redis.StrictRedis(host=node['host'], port=node['port'],
                             password=node['password'], **kwargs)


def addr(node):
    return '{0}:{1}'.format(node['host'], node['port'])


master_conns = [connect(n) for n in master_node]
redis_conn_1 = master_conns[0]

# cluster meet: node_1 introduces the other two masters
redis_conn_1.execute_command('CLUSTER MEET', node_2['host'], node_2['port'])
redis_conn_1.execute_command('CLUSTER MEET', node_3['host'], node_3['port'])

print('#################flush slots #################')
for conn in master_conns:
    conn.execute_command('CLUSTER FLUSHSLOTS')

print('#################add slots#################')
# 0-5461 -> node_1, 5462-10922 -> node_2, 10923-16383 -> node_3
slot_ranges = [(0, 5461), (5462, 10922), (10923, 16383)]
for conn, (start, end) in zip(master_conns, slot_ranges):
    try:
        # CLUSTER ADDSLOTS accepts many slots, so one call per master is enough
        conn.execute_command('CLUSTER ADDSLOTS', *range(start, end + 1))
    except redis.exceptions.ResponseError as e:
        print('cluster addslots {0}-{1} error: {2}'.format(start, end, e))

print()
print('#################cluster status#################')
print()
for node, conn in zip(master_node, master_conns):
    print('##################' + addr(node) + '##################')
    # redis-py 3.x and later parse CLUSTER INFO into a dict
    print('cluster_state:' + conn.cluster('info')['cluster_state'])

############################ add slave in cluster ############################
print('################# cluster meet slave #################')
# each master introduces its own slave: node_1 -> node_4, node_2 -> node_5, node_3 -> node_6
for conn, s_node in zip(master_conns, slave_node):
    conn.execute_command('CLUSTER MEET', s_node['host'], s_node['port'])
# give the gossip protocol time to spread the new nodes
time.sleep(5)

print('################# add slave in cluster #################')
# Get the masters' node IDs from node_1's view of the cluster;
# decode_responses=True lets redis-py parse CLUSTER NODES into a dict
redis_conn_1 = connect(node_1, decode_responses=True)
dict_cluster_nodes = redis_conn_1.cluster('nodes')
print(dict_cluster_nodes)

dict_master_node_id = {}
for m_node in master_node:
    for key, values in dict_cluster_nodes.items():
        # keys are 'ip:port@cport' (newer redis-py versions drop '@cport')
        if key.split('@')[0] == addr(m_node):
            dict_master_node_id[addr(m_node)] = values['node_id']
print(dict_master_node_id)
# Example output:
# {
#     '127.0.0.1:9001': '84f0c3a21ab6dd6965923915434cc62fc0f5cc2b',
#     '127.0.0.1:9002': 'b584f695eb9c1552c25f92e28a50c9ce62ad9ee9',
#     '127.0.0.1:9003': 'b95898c17761b448ea88bb9682bac9b69b045adc'
# }

# Add the slave nodes in order. How is each slave paired with the right master?
# dict_master_node_id is keyed by master address, and master_node and slave_node
# are walked in parallel, so slave_node[i] always replicates master_node[i].
for m_node, s_node in zip(master_node, slave_node):
    slave_redis_conn = connect(s_node)
    print(addr(s_node) + ' slave of-----> ' + addr(m_node))
    master_id = dict_master_node_id[addr(m_node)]
    print('cluster replicate ' + master_id)
    slave_redis_conn.execute_command('CLUSTER REPLICATE', master_id)
# Example output:
# 127.0.0.1:9004 slave of-----> 127.0.0.1:9001
# cluster replicate 84f0c3a21ab6dd6965923915434cc62fc0f5cc2b
# 127.0.0.1:9005 slave of-----> 127.0.0.1:9002
# cluster replicate b584f695eb9c1552c25f92e28a50c9ce62ad9ee9
# 127.0.0.1:9006 slave of-----> 127.0.0.1:9003
# cluster replicate b95898c17761b448ea88bb9682bac9b69b045adc
```
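The pairing step depends on how redis-py parses `CLUSTER NODES`, and the dictionary key format differs between redis-py releases. A version-independent alternative is to parse the raw text yourself, i.e. what `redis-cli -p 9001 -a <password> cluster nodes` prints. Each line starts with `<id> <ip:port@cport> <flags> <master> <ping-sent> <pong-recv> <config-epoch> <link-state>`. The following is a sketch under that assumption. `parse_cluster_nodes` and `replicate_commands` are hypothetical helpers. `SAMPLE` is hand-written illustrative input that reuses the node IDs from the example output. It is not captured server output.

```python
def parse_cluster_nodes(raw):
    """Map 'ip:port' -> (node_id, flags) from raw CLUSTER NODES text."""
    nodes = {}
    for line in raw.splitlines():
        fields = line.split()
        if len(fields) < 8:  # id, addr, flags, master, ping, pong, epoch, link-state
            continue
        node_id, address, flags = fields[0], fields[1], fields[2]
        # Redis 4+ writes 'ip:port@cport'; keep only 'ip:port'
        nodes[address.split('@')[0]] = (node_id, flags.split(','))
    return nodes


def replicate_commands(raw, masters, slaves):
    """Pair slaves[i] with masters[i]; return (slave address, command) tuples."""
    nodes = parse_cluster_nodes(raw)
    commands = []
    for m_addr, s_addr in zip(masters, slaves):
        node_id, flags = nodes[m_addr]  # KeyError if this master is not known yet
        if 'master' not in flags:
            raise ValueError(m_addr + ' is not a master')
        commands.append((s_addr, 'CLUSTER REPLICATE ' + node_id))
    return commands


# Illustrative input only (hand-written, not real server output)
SAMPLE = """\
84f0c3a21ab6dd6965923915434cc62fc0f5cc2b 127.0.0.1:9001@19001 myself,master - 0 0 1 connected 0-5461
b584f695eb9c1552c25f92e28a50c9ce62ad9ee9 127.0.0.1:9002@19002 master - 0 0 2 connected 5462-10922
b95898c17761b448ea88bb9682bac9b69b045adc 127.0.0.1:9003@19003 master - 0 0 3 connected 10923-16383
"""

for slave, command in replicate_commands(
        SAMPLE,
        ['127.0.0.1:9001', '127.0.0.1:9002', '127.0.0.1:9003'],
        ['127.0.0.1:9004', '127.0.0.1:9005', '127.0.0.1:9006']):
    print(slave, '->', command)
```

The `master` flag is checked up front, so the sketch fails fast with a clear message if a slave's address accidentally ends up in the `masters` list.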

Example

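With this approach, a Redis cluster can be built in under a minute, from installing the instances to setting up the cluster, provided the environment's dependencies are in place.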