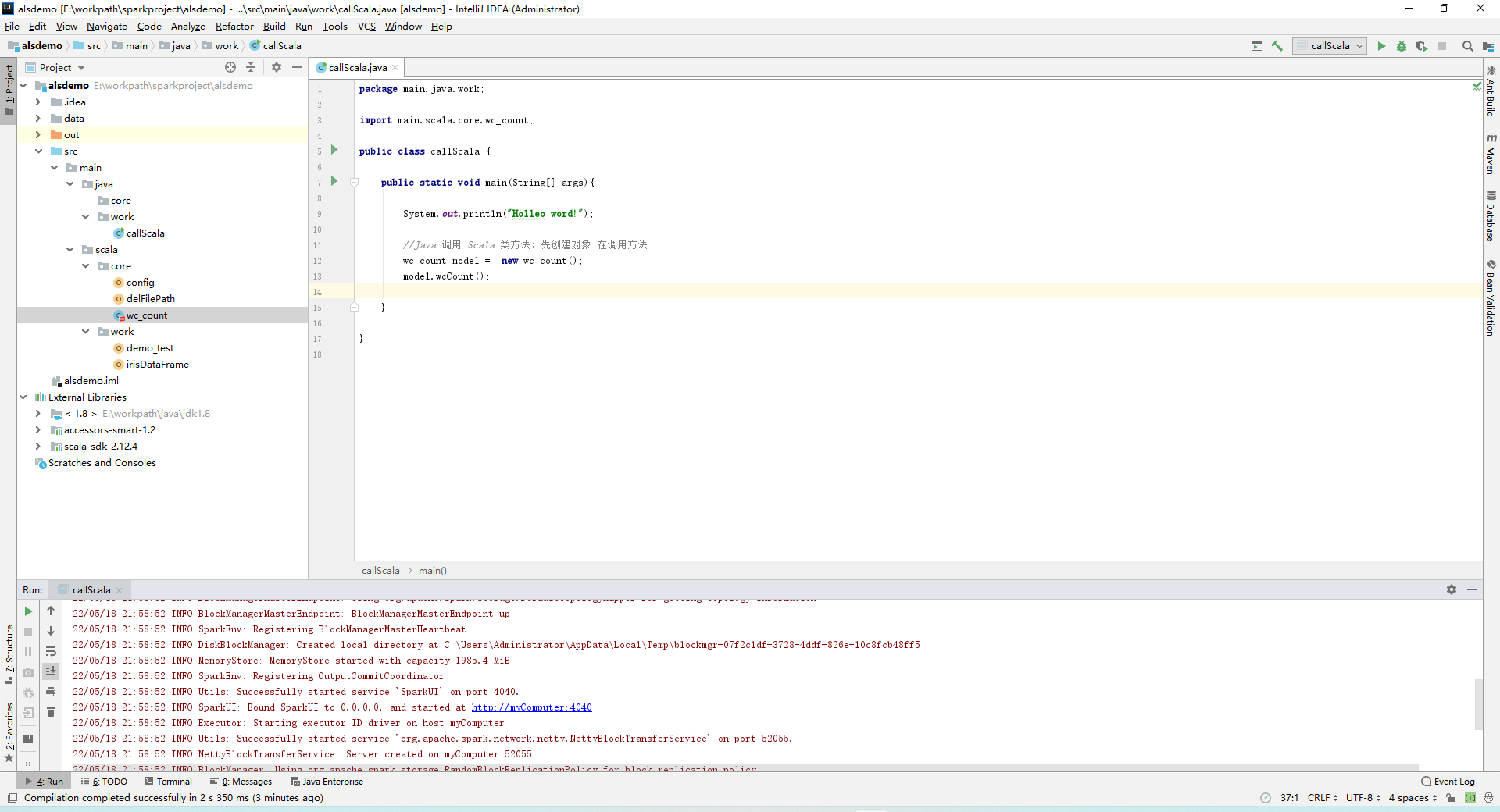

1、目录结构如图

2、Java代码

package main.java.work;

import main.scala.core.wc_count;

public class callScala {

public static void main(String[] args){

System.out.println("Holleo word!");

//Java 调用 Scala 类方法:先创建对象 在调用方法

wc_count model = new wc_count();

model.wcCount();

}

}

3、Scala代码

package main.scala.core

import main.scala.core.config.sc

import main.scala.core.delFilePath.delFPath

import org.apache.spark.rdd.RDD

class wc_count {

def delete(master:String,path:String): Unit ={

println("Begin delete!--" + master+path)

val output = new org.apache.hadoop.fs.Path(master+path)

val hdfs = org.apache.hadoop.fs.FileSystem.get(

new java.net.URI(master), new org.apache.hadoop.conf.Configuration())

// 删除输出目录

if (hdfs.exists(output)) {

hdfs.delete(output, true)

println("delete!--" + master+path)

}

}

def wcCount(): Unit = {

// hdfs dfs -put words.txt /user/root/

val worddata: RDD[String] = sc.textFile("data/wc.txt")

val worddata1: RDD[String] = worddata.flatMap(x=>x.split(" "))

val worddata2: RDD[(String, Int)] = worddata1.map(x=>(x,1))

val worddata3: RDD[(String, Int)] = worddata2.reduceByKey((x, y)=>x+y)

delFPath("data/out")

worddata3.repartition(1).saveAsTextFile("data/out")

// 统计出现次数大于 3 的单词

val worddata4: RDD[String] =worddata3.filter(x=>x._2>3).map(x=>x._1)

delFPath("data/out1")

worddata4.repartition(1).saveAsTextFile("data/out1")

val cunt: Long = worddata4.count()

println(s"出现次数大于3的字母个数:$cunt")

sc.stop()

}

}