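My graduation project has finally wrapped up. Doing computer vision with traditional (non-deep-learning) methods nearly drove me to a breakdown, but it's over, and I can finally read some of the papers I'd been putting off for ages. *sniff*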

Densely Connected Convolutional Networks actually came out quite a while ago; it won the CVPR 2017 Best Paper award.

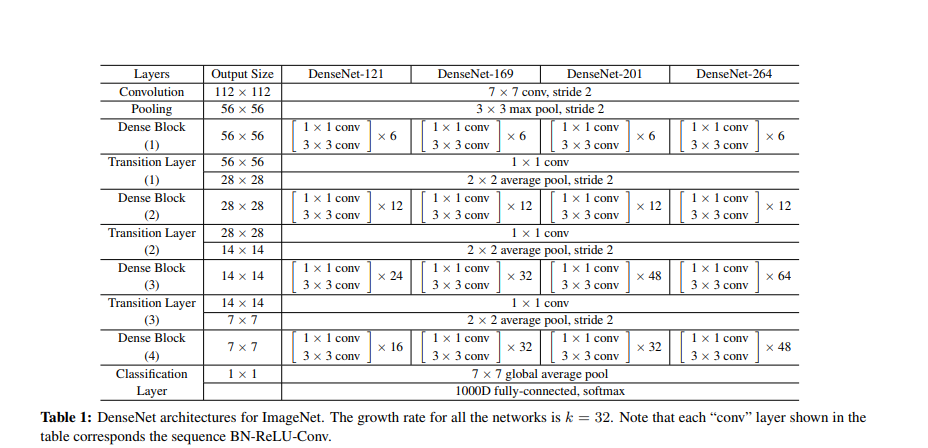

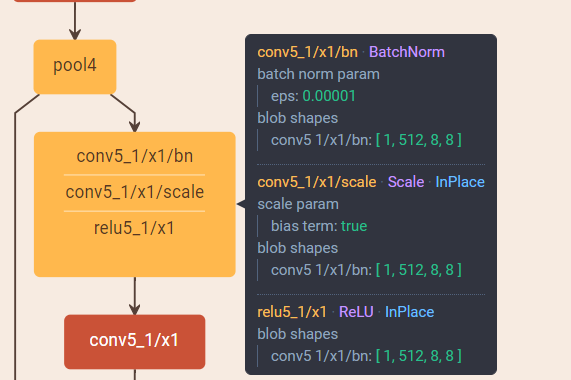

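Before reading the paper, I'd suggest loading the full DenseNet network definition (a Caffe prototxt) into http://ethereon.github.io/netscope/#/editor and visualizing it; that makes the architecture much easier to follow. Overall, the paper is quite easy to understand.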

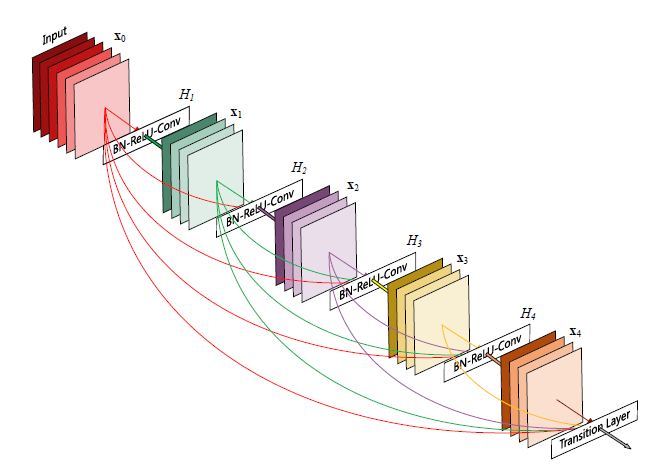

The figure above shows a 5-layer dense block with a growth rate of k = 4.

The paper points out several advantages of DenseNet:

1. Our proposed DenseNet architecture explicitly differentiates between information that is added to the network and information that is preserved. DenseNet layers are very narrow (e.g., 12 filters per layer), adding only a small set of feature-maps to the "collective knowledge" of the network and keep the remaining feature-maps unchanged, and the final classifier makes a decision based on all feature-maps in the network.

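DenseNet has few parameters, and each layer inside a block has few filters. In AlexNet the convolution layers have hundreds of filters, whereas each DenseNet layer has only k of them, where k is the growth rate (e.g., 12, 24 or 32 in the paper). That is why the paper calls the layers very narrow.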

2. One big advantage of DenseNets is their improved flow of information and gradients throughout the network, which makes them easy to train.

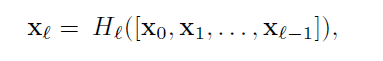

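As the figure above shows, every layer is connected to all subsequent layers, which helps information and gradients flow through the whole network. Note that this overall-network figure does not draw the connections between the layers inside each dense block, so it should be read together with the earlier 5-layer dense-block figure: inside a block, the input to each layer is the concatenation of the outputs of all preceding layers. This is most obvious in the visualization tool, which also shows the actual size of every layer.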
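The dense connectivity inside a block can be sketched in a few lines of NumPy. This is a toy, not the paper's implementation: each layer H_ℓ is assumed to be a random 1×1 convolution followed by ReLU (the paper's H_ℓ is BN-ReLU-3×3 conv), and the input channel count `k0 = 3` is a hypothetical value. Four composite layers with k = 4 mirror the 5-layer block in the earlier figure (the input plus H1 to H4); the helper names `make_dense_block` and `dense_block_forward` are made up for this sketch.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def make_dense_block(k0, growth_rate, num_layers, seed=0):
    """Random weights for a toy dense block (hypothetical H_l = 1x1 conv + ReLU)."""
    rng = np.random.default_rng(seed)
    weights = []
    for i in range(num_layers):
        # Layer i (0-based) sees the block input plus the outputs of i earlier layers.
        in_channels = k0 + i * growth_rate
        weights.append(0.1 * rng.standard_normal((growth_rate, in_channels)))
    return weights


def dense_block_forward(x, weights):
    """x has shape (C, H, W); returns the concatenation of the input and all layer outputs."""
    features = [x]
    for w in weights:
        inp = np.concatenate(features, axis=0)  # concatenate along the channel axis
        out = relu(np.einsum("oc,chw->ohw", w, inp))  # toy 1x1 conv + ReLU
        features.append(out)  # k new feature maps join the "collective knowledge"
    return np.concatenate(features, axis=0)


x = np.ones((3, 8, 8))
weights = make_dense_block(k0=3, growth_rate=4, num_layers=4)
y = dense_block_forward(x, weights)
print([w.shape[1] for w in weights])  # [3, 7, 11, 15]
print(y.shape)  # (19, 8, 8)
```

Because the block output is a concatenation, the first 3 channels of `y` are exactly the block input: nothing earlier is overwritten, each layer only appends k maps.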

3. We also observe that dense connections have a regularizing effect, which reduces overfitting on tasks with smaller training set sizes.

The dense connections also act as a regularizer, reducing the risk of overfitting when training on smaller datasets.

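DenseNet combines the feature maps of preceding layers by concatenation, whereas ResNet combines them by summation; the paper argues that this summation may impede the flow of information through the network.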
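The difference between the two combination rules is easy to see on tiny arrays. The sketch below (toy values, hypothetical function names) only illustrates shapes and what is preserved; it does not reproduce the paper's argument about information flow.

```python
import numpy as np


def resnet_combine(x, fx):
    # ResNet: element-wise sum; shapes must match and the channel count stays fixed.
    return x + fx


def densenet_combine(x, fx):
    # DenseNet: channel-wise concatenation; x and fx stay side by side.
    return np.concatenate([x, fx], axis=0)


x = np.arange(4, dtype=float).reshape(4, 1, 1)  # 4 "preserved" channels
fx = np.ones((4, 1, 1))  # 4 new channels from the current layer

summed = resnet_combine(x, fx)
stacked = densenet_combine(x, fx)
print(summed.shape, stacked.shape)  # (4, 1, 1) (8, 1, 1)
print(np.array_equal(stacked[:4], x))  # True: x survives unchanged
print(np.array_equal(summed, x))  # False: x is mixed with fx
```

With summation, later layers only ever see `x + fx`; with concatenation they can read `x` and `fx` separately, at the cost of a channel count that grows with depth.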

Transition layers:

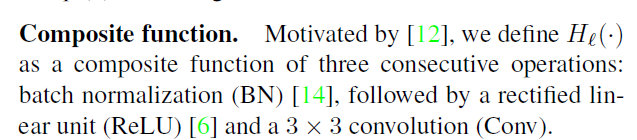

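A transition layer sits between two adjacent dense blocks. It consists of a BN layer, a 1×1 convolution layer and a 2×2 average pooling layer, as shown in the figure above. It is needed because concatenation only works when all feature maps have the same spatial size, so down-sampling happens between blocks rather than inside them.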
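A minimal NumPy sketch of a transition layer, following the BN, 1×1 conv, 2×2 average pooling order described above. Assumptions: the batch norm here is simplified to per-channel statistics of a single (C, H, W) sample with no learned scale/shift, spatial sizes are even, and all function names are hypothetical.

```python
import numpy as np


def batch_norm(x, eps=1e-5):
    # Simplified: per-channel stats of a single (C, H, W) sample, no learned gamma/beta.
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def conv1x1(x, w):
    # w has shape (out_channels, in_channels): a per-pixel mix of channels.
    return np.einsum("oc,chw->ohw", w, x)


def avg_pool_2x2(x):
    # Non-overlapping 2x2 average pooling with stride 2 (assumes even H and W).
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def transition(x, w):
    # BN -> 1x1 conv -> 2x2 avg pool, following the paper's description.
    return avg_pool_2x2(conv1x1(batch_norm(x), w))


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 8, 8))
w = rng.standard_normal((8, 16))
print(transition(x, w).shape)  # (8, 4, 4)
```

The 1×1 convolution controls the channel count (here 16 to 8) and the pooling halves the spatial size. Common implementations such as torchvision's also insert a ReLU between the BN and the 1×1 convolution.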

Growth rate:

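The growth rate is not the number of layers in a block: it is the number of feature maps k that each layer H_ℓ produces. In the 5-layer dense-block figure every layer outputs 4 feature maps, so k = 4 (that the block also has four H_ℓ layers is a coincidence). Since each layer receives the block input plus the outputs of all earlier layers, the ℓ-th layer has $k_0 + k(\ell - 1)$ input feature maps, where $k_0$ is the number of channels entering the block.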
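The channel arithmetic implied by the growth rate can be checked directly. A small sketch with hypothetical helper names; `k0 = 3` for the toy block is an assumed value, and the second example uses a DenseNet-121-style first block (64 input maps, k = 32, 6 layers, as in the torchvision configuration).

```python
def layer_input_channels(k0, k, num_layers):
    """Input feature maps of layers 1..num_layers: k0 + k * (l - 1)."""
    return [k0 + k * (l - 1) for l in range(1, num_layers + 1)]


def block_output_channels(k0, k, num_layers):
    """The block output is the input plus k new maps from every layer."""
    return k0 + k * num_layers


# Toy block like the earlier figure: hypothetical k0 = 3, k = 4, four layers H1..H4.
print(layer_input_channels(3, 4, 4))  # [3, 7, 11, 15]
print(block_output_channels(3, 4, 4))  # 19

# First block of a DenseNet-121-style model: 64 input maps, k = 32, 6 layers.
print(block_output_channels(64, 32, 6))  # 256
```

The input width grows linearly with depth, which is why k can stay small: each layer adds little, but it can read everything before it.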

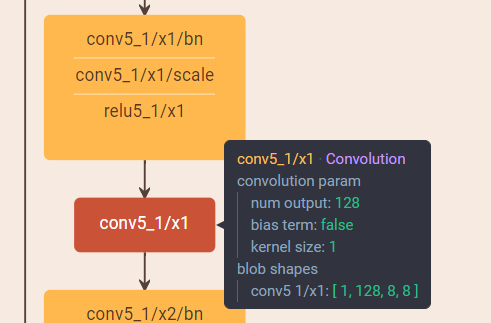

Bottleneck layers:

It has been noted in [36, 11] that a 1×1 convolution can be introduced as bottleneck layer before each 3×3 convolution to reduce the number of input feature-maps, and thus to improve computational efficiency.

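That is, inside each layer a 1×1 convolution is placed before the 3×3 convolution to reduce the number of input feature maps; in the figure above, 512 feature maps are reduced to 128. In the paper's DenseNet-B variant the 1×1 convolution produces 4k feature maps, so 128 corresponds to k = 32.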
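To see why the bottleneck saves computation, compare weight counts for one layer with 512 input maps and k = 32. This sketch assumes bias-free convolutions and uses hypothetical helper names; per-pixel multiply-adds scale the same way as these weight counts.

```python
def conv_weights(in_channels, out_channels, kernel_size):
    """Weight count of a convolution, ignoring bias (assumed absent here)."""
    return in_channels * out_channels * kernel_size * kernel_size


def plain_layer(in_channels, k):
    # 3x3 conv applied directly to all incoming feature maps.
    return conv_weights(in_channels, k, 3)


def bottleneck_layer(in_channels, k, width=4):
    # 1x1 conv down to width * k maps (4k in DenseNet-B), then 3x3 conv to k maps.
    mid = width * k
    return conv_weights(in_channels, mid, 1) + conv_weights(mid, k, 3)


print(plain_layer(512, 32))  # 147456
print(bottleneck_layer(512, 32))  # 102400
```

The gap widens for deeper layers: with the bottleneck, the 3×3 term is fixed at 128 × 32 × 9 weights, and only the cheap 1×1 term grows with the number of input maps.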

Compression:

If a dense block contains $m$ feature-maps, we let the following transition layer generate $\lfloor \theta m \rfloor$ output feature-maps, where $0 < \theta \le 1$ is referred to as the compression factor.

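In other words, the transition layer compresses the number of feature maps coming out of a block. With θ = 1 the count is unchanged; the paper calls models with θ < 1 DenseNet-C and uses θ = 0.5, and models that combine bottleneck layers with θ < 1 are called DenseNet-BC.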
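Putting growth rate and compression together, the sketch below traces the channel count through a whole network. The configuration (64 initial maps, k = 32, blocks of 6, 12, 24 and 16 layers, θ = 0.5) is assumed to match a DenseNet-121-style model as configured in torchvision; the helper names are hypothetical.

```python
import math


def transition_out_channels(m, theta):
    """Feature maps after a transition layer: floor(theta * m), with 0 < theta <= 1."""
    if not 0 < theta <= 1:
        raise ValueError("compression factor must satisfy 0 < theta <= 1")
    return math.floor(theta * m)


def trace_channels(k0, k, block_config, theta):
    """Channel count after each dense block and transition layer."""
    channels = k0
    trace = []
    for i, num_layers in enumerate(block_config, start=1):
        channels += k * num_layers  # each layer adds k maps
        trace.append(("block", i, channels))
        if i < len(block_config):  # no transition after the last block
            channels = transition_out_channels(channels, theta)
            trace.append(("transition", i, channels))
    return trace


for step in trace_channels(64, 32, (6, 12, 24, 16), theta=0.5):
    print(step)
# ('block', 1, 256)
# ('transition', 1, 128)
# ('block', 2, 512)
# ('transition', 2, 256)
# ('block', 3, 1024)
# ('transition', 3, 512)
# ('block', 4, 1024)
```

Without compression (θ = 1) the channel count would keep accumulating across blocks; halving it at every transition keeps each block's input width in check.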