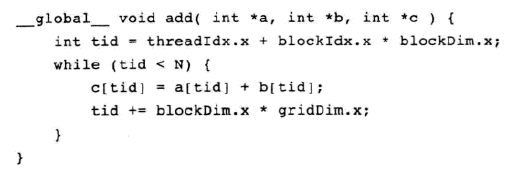

blockDim.x*gridDim.x 跳过一个grid

int <<<参数1,参数2>>>(int *a,int * b,int * c);

如果是一维的,参数1表示一个grid里面有多少个block块,参数2表示一个block块里面有多少个thread线程

namespace caffe {

template <typename Dtype>

__global__ void LogwxlForward(const float scale_,const int n,const Dtype * in,Dtype * out){

CUDA_KERNEL_LOOP(index,n){

out[index]=scale_*in[index];

}

}

template <typename Dtype>

__global__ void LogwxlBackward(const float scale_,const int n,const Dtype *in_diff,Dtype* out_diff){

CUDA_KERNEL_LOOP(index,n) {

out_diff[index]=in_diff[index]*scale_;

}

}

template <typename Dtype>

void LogwxlLayer<Dtype>::Forward_gpu(

const vector<Blob<Dtype>*>& bottom, const vector<Blob<Dtype>*>& top) {

//this->Forward_cpu(bottom, top);

const Dtype* bottom_data=bottom[0]->gpu_data();

Dtype * top_data=top[0]->mutable_gpu_data();

const int count=bottom[0]->count();

LogwxlForward<Dtype><<<CAFFE_GET_BLOCKS(count),CAFFE_CUDA_NUM_THREADS>>>(scale_,count,bottom_data,top_data);

CUDA_POST_KERNEL_CHECK;

}

template <typename Dtype>

void LogwxlLayer<Dtype>::Backward_gpu(

const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down,

const vector<Blob<Dtype>*>& bottom) {

//this->Backward_cpu(top, propagate_down, bottom);

if(propagate_down[0]){

const Dtype *top_diff=top[0]->gpu_diff();

Dtype * bottom_diff=bottom[0]->mutable_gpu_diff();

const int count=bottom[0]->count();

LogwxlBackward<Dtype><<<CAFFE_GET_BLOCKS(count),CAFFE_CUDA_NUM_THREADS>>>(scale_,count,top_diff,bottom_diff);

CUDA_POST_KERNEL_CHECK;

}

}

INSTANTIATE_LAYER_GPU_FUNCS(LogwxlLayer);

} // namespace caffe