1.HDFS上数据准备

2019-03-24 09:21:57.347,869454021315519,8,1

2019-03-24 22:07:15.513,867789020387791,8,1

2019-03-24 21:43:34.81,357008082359524,8,1

2019-03-24 16:05:32.227,860201045831206,8,1

2019-03-24 18:11:18.167,866676040163198,8,1

2019-03-24 22:01:24.877,868897026713230,8,1

2019-03-24 12:34:23.377,863119033590062,8,1

2019-03-24 20:16:32.53,862505041870010,8,1

2019-03-24 09:10:55.18,864765037658468,8,1

2019-03-24 16:18:41.503,869609023903469,8,1

2019-03-24 10:44:52.027,869982033593376,8,1

2019-03-24 20:00:08.007,866798025149107,8,1

2019-03-24 10:25:18.1,863291034398181,2,3

2019-03-24 10:33:48.56,867557030361332,8,1

2019-03-24 16:42:15.057,869841022390535,8,1

2019-03-24 10:08:00.277,867574031105048,8,1

注意: 分隔符是‘,’

2. HBase上创建表

create 'ALLUSER','INFO';

3. 在Phoenix中建立相同的表名以实现与hbase表的映射

create table if not exists ALLUSER(

firsttime varchar primary key,

INFO.IMEI varchar,

INFO.COID varchar,

INFO.NCOID varchar

)

注意:

- 除主键外,Phoenix表的表名和字段字段名要和HBase表中相同,包括大小写

- Phoneix中的column必须以HBase的columnFamily开头

4. 通过importtsv.separator指定分隔符,否则默认的分隔符是tab键

hbase org.apache.hadoop.hbase.mapreduce.ImportTsv

-Dimporttsv.columns=HBASE_ROW_KEY,INFO:IMEI,INFO:IMEI,INFO:NCOID

-Dimporttsv.separator=, -Dimporttsv.bulk.output=/warehouse/temp/alluser ALLUSER /user/hive/warehouse/toutiaofeedback.db/newuser/000001_0

5. 将生成的HFlie导入到HBase

hbase org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles /warehouse/temp/alluser ALLUSER

6. 查看HBase,Phoenix

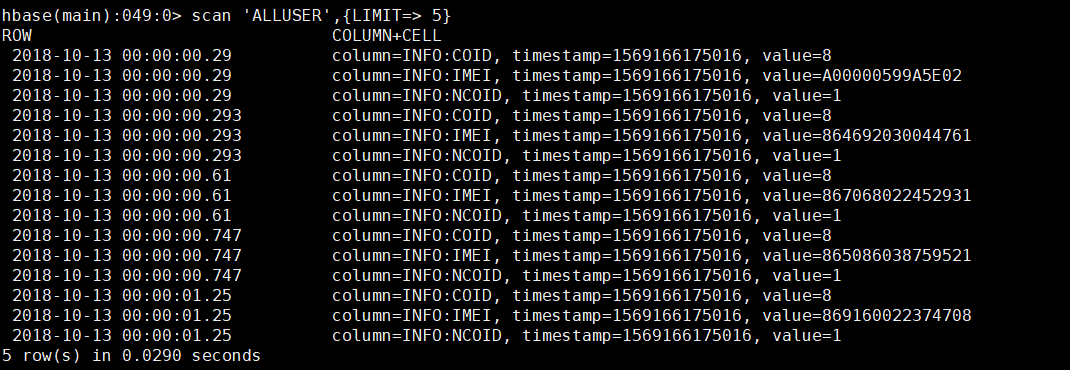

查看HBase

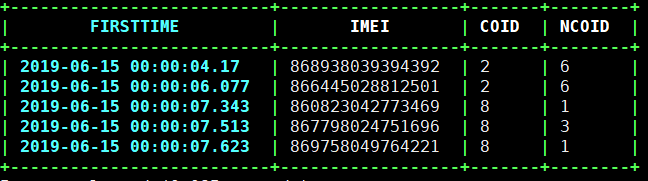

查看Phoenix