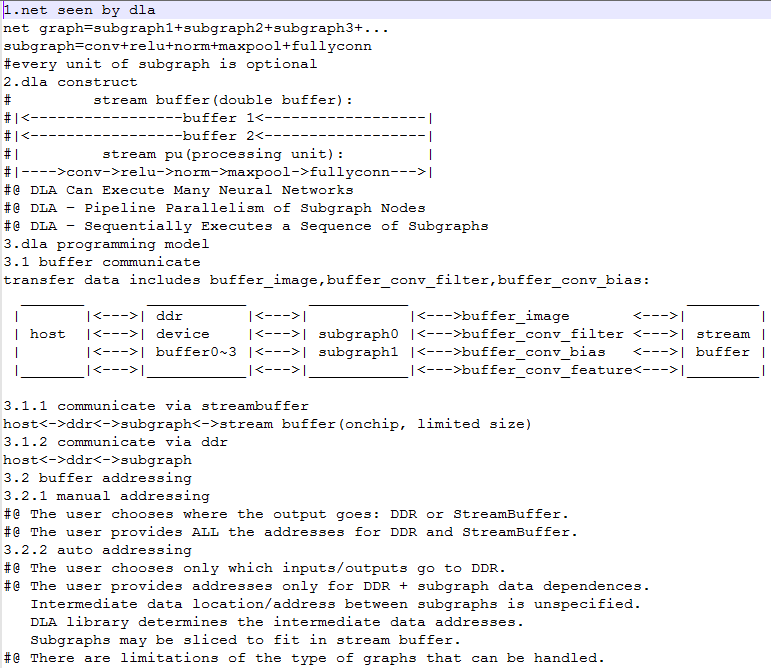

1. Network as seen by the DLA

net graph=subgraph1+subgraph2+subgraph3+...

subgraph=conv+relu+norm+maxpool+fullyconn

# Every unit of a subgraph is optional.
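As an illustration of the composition rule above, here is a minimal sketch that models a net as a list of subgraphs, each with the five optional units in the fixed order conv, relu, norm, maxpool, fullyconn. The class name `Subgraph`, the helper `describe`, and the per-unit parameter dicts are hypothetical and only show the structure, not a real DLA API.

```python
from dataclasses import dataclass
from typing import List, Optional

# Fixed order of the units inside one subgraph, as listed in the notes.
UNIT_ORDER = ["conv", "relu", "norm", "maxpool", "fullyconn"]


@dataclass
class Subgraph:
    # Every unit is optional: None means the unit is bypassed.
    # The parameter dicts are hypothetical placeholders.
    conv: Optional[dict] = None
    relu: Optional[dict] = None
    norm: Optional[dict] = None
    maxpool: Optional[dict] = None
    fullyconn: Optional[dict] = None

    def active_units(self) -> List[str]:
        """Return the enabled units in their fixed pipeline order."""
        return [name for name in UNIT_ORDER if getattr(self, name) is not None]


def describe(net: List[Subgraph]) -> List[str]:
    """Render net graph = subgraph1 + subgraph2 + ... as readable strings."""
    return [f"subgraph{i}: " + "->".join(sg.active_units())
            for i, sg in enumerate(net, start=1)]


# Example net with two subgraphs (unit parameters are illustrative).
net = [
    Subgraph(conv={"k": 3}, relu={}, maxpool={"k": 2}),
    Subgraph(fullyconn={"out": 10}),
]
for line in describe(net):
    print(line)
```

Because units are looked up in `UNIT_ORDER`, the order of keyword arguments does not matter; a subgraph always runs its enabled units in the same sequence.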

2. DLA structure

# Stream buffer (double buffer):
#|<-----------------buffer 1<------------------|
#|<-----------------buffer 2<------------------|
#| Stream PU (processing unit):                |
#|---->conv->relu->norm->maxpool->fullyconn--->|
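One plausible reading of the double-buffer diagram is a ping-pong scheme: the PU reads a subgraph's input from one half of the stream buffer and writes its output into the other half, and the two halves swap roles before the next subgraph runs. The sketch below assumes exactly that; each "subgraph" is a plain Python function standing in for a conv->relu->... chain, and `run_double_buffered` is a hypothetical name.

```python
from typing import Callable, List, Sequence

Stage = Callable[[List[float]], List[float]]


def run_double_buffered(subgraphs: Sequence[Stage], data: List[float]) -> List[float]:
    """Run subgraphs back to back on a two-slot (ping-pong) stream buffer.

    Assumption: subgraph i reads from buffers[src] and writes to buffers[dst];
    the slots swap roles before subgraph i+1 starts.
    """
    buffers: List[List[float]] = [list(data), []]  # buffer 1, buffer 2
    src, dst = 0, 1
    for pu in subgraphs:
        buffers[dst] = pu(buffers[src])  # PU output lands in the other slot
        src, dst = dst, src              # last output becomes the next input
    return buffers[src]


# Toy stand-ins for two subgraphs.
def scale(xs: List[float]) -> List[float]:
    return [2.0 * x for x in xs]


def relu(xs: List[float]) -> List[float]:
    return [max(0.0, x) for x in xs]


print(run_double_buffered([scale, relu], [-1.0, 0.5, 3.0]))
```

The point of the swap is that no copy is needed between subgraphs: the output slot of one subgraph is simply reused as the input slot of the next.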

#@ The DLA can execute many different neural networks.

#@ DLA: pipeline parallelism across the nodes (units) of a subgraph.

#@ DLA: executes a sequence of subgraphs one after another.
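To make the difference between the last two points concrete, the sketch below models an idealized pipeline inside one subgraph (every unit takes one step per data item, no stalls, so item i is in stage s at step i + s) and sequential execution across subgraphs (their step counts add up). The unit-cost timing model and the function names are assumptions for illustration, not measured DLA behaviour.

```python
from typing import List, Optional


def pipeline_schedule(stages: List[str], n_items: int) -> List[List[Optional[int]]]:
    """Return schedule[t][s] = item handled by stage s at step t, or None.

    Idealized model: one step per stage per item, no stalls,
    so item i occupies stage s at step t = i + s.
    """
    n_steps = len(stages) + n_items - 1
    schedule = []
    for t in range(n_steps):
        row = []
        for s in range(len(stages)):
            i = t - s
            row.append(i if 0 <= i < n_items else None)
        schedule.append(row)
    return schedule


def sequential_steps(stage_counts: List[int], n_items: int) -> int:
    """Subgraphs run one after another, so their pipelined step counts add up."""
    return sum(s + n_items - 1 for s in stage_counts)


stages = ["conv", "relu", "norm", "maxpool", "fullyconn"]
sched = pipeline_schedule(stages, n_items=4)
print(len(sched), "pipelined steps vs", len(stages) * 4, "unpipelined steps")
for t, row in enumerate(sched):
    print(t, ["-" if i is None else i for i in row])
```

Under this model a subgraph with S units processes N items in S + N - 1 steps instead of S * N, while a chain of subgraphs gains nothing from overlap because each one starts only after the previous one finishes.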

3. DLA programming model

3.1 Buffer communication

The transferred data includes buffer_image, buffer_conv_filter, buffer_conv_bias and buffer_conv_feature:

 _______       ___________       ___________                                ________
|       |<--->|    ddr    |<--->|           |<--->buffer_image        <--->|        |
| host  |<--->|  device   |<--->| subgraph0 |<--->buffer_conv_filter  <--->| stream |
|       |<--->| buffer0~3 |<--->| subgraph1 |<--->buffer_conv_bias    <--->| buffer |
|_______|<--->|___________|<--->|___________|<--->buffer_conv_feature <--->|________|
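To get a feel for how large these four buffers can be, the sketch below computes their byte sizes for a single conv layer. The layer shape (56 x 56 x 64 in, 64 out, 3 x 3 filter), stride 1 with "same" padding, batch size 1, and 2-byte (fp16) elements are all assumptions chosen for illustration; the actual DLA data type and layout may differ.

```python
from typing import Dict


def conv_buffer_bytes(h: int, w: int, c_in: int, c_out: int, k: int,
                      bytes_per_elem: int = 2) -> Dict[str, int]:
    """Byte sizes of the four buffers for one conv layer (batch of 1).

    Assumptions: stride 1 with "same" padding (output keeps h x w),
    square k x k filters, and bytes_per_elem bytes per element (fp16 = 2).
    """
    elems = {
        "buffer_image": h * w * c_in,                # input activations
        "buffer_conv_filter": k * k * c_in * c_out,  # weights
        "buffer_conv_bias": c_out,                   # one bias per output channel
        "buffer_conv_feature": h * w * c_out,        # output activations
    }
    return {name: n * bytes_per_elem for name, n in elems.items()}


sizes = conv_buffer_bytes(h=56, w=56, c_in=64, c_out=64, k=3)
for name, nbytes in sizes.items():
    print(f"{name:20s} {nbytes:>8d} bytes")
```

Activations (image and feature) dominate at this layer shape, which is why where they live (on-chip stream buffer or DDR) matters most.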

3.1.1 Communication via the stream buffer

host <-> DDR <-> subgraph <-> stream buffer (on-chip, limited size)

3.1.2 Communication via DDR

host <-> DDR <-> subgraph
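The choice between the two paths above can be sketched as a simple size check: keep a subgraph's output on chip when it fits in the stream buffer, otherwise go through DDR. The 1 MiB capacity and the rule that double buffering leaves only half of the stream buffer for one output are hypothetical assumptions, as is the function name `communication_path`.

```python
from typing import List


def communication_path(intermediate_bytes: int, stream_buffer_bytes: int,
                       double_buffered: bool = True) -> List[str]:
    """Pick the 3.1.1 path if the data fits on chip, else the 3.1.2 path.

    Assumption: with double buffering only half of the stream buffer
    is available to hold one subgraph's output.
    """
    usable = stream_buffer_bytes // 2 if double_buffered else stream_buffer_bytes
    if intermediate_bytes <= usable:
        return ["host", "DDR", "subgraph", "stream buffer"]  # 3.1.1
    return ["host", "DDR", "subgraph"]                        # 3.1.2


SB = 1 << 20  # hypothetical 1 MiB stream buffer, for illustration only
print(" <-> ".join(communication_path(401_408, SB)))
print(" <-> ".join(communication_path(800_000, SB)))
```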

3.2 Buffer addressing

3.2.1 Manual addressing

#@ The user chooses where each output goes: DDR or StreamBuffer.

#@ The user provides ALL the addresses for DDR and StreamBuffer.
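With manual addressing, every buffer placement comes from the user, so a host-side check for out-of-bounds and overlapping regions is useful. The sketch below is a hypothetical helper, not part of any DLA library: `Placement`, `check_manual_placements`, the capacities, and the example offsets are all assumptions; the bias placement deliberately overlaps the filter to show an error.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Placement:
    region: str  # "DDR" or "StreamBuffer"
    offset: int  # byte offset chosen by the user
    size: int    # bytes


def check_manual_placements(placements: Dict[str, Placement],
                            capacities: Dict[str, int]) -> List[str]:
    """Return problems with user-supplied addresses (empty list if OK)."""
    errors = []
    by_region: Dict[str, List[Tuple[int, int, str]]] = {}
    for name, p in placements.items():
        if p.region not in capacities:
            errors.append(f"{name}: unknown region {p.region}")
            continue
        if p.offset < 0 or p.offset + p.size > capacities[p.region]:
            errors.append(f"{name}: out of bounds in {p.region}")
        by_region.setdefault(p.region, []).append((p.offset, p.offset + p.size, name))
    # Check every pair of half-open spans [start, end) in the same region.
    for region, spans in by_region.items():
        for (s0, e0, n0), (s1, e1, n1) in combinations(sorted(spans), 2):
            if s0 < e1 and s1 < e0:
                errors.append(f"{n0} and {n1} overlap in {region}")
    return errors


caps = {"DDR": 1 << 30, "StreamBuffer": 1 << 20}  # hypothetical capacities
placements = {
    "buffer_image": Placement("DDR", 0, 401_408),
    "buffer_conv_filter": Placement("DDR", 401_408, 73_728),
    "buffer_conv_bias": Placement("DDR", 475_000, 128),  # overlaps the filter
    "buffer_conv_feature": Placement("StreamBuffer", 0, 401_408),
}
print(check_manual_placements(placements, caps))
```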

3.2.2 Automatic addressing

#@ The user chooses only which inputs/outputs go to DDR.

#@ The user provides addresses only for DDR and for data dependences between subgraphs.

The location and address of intermediate data passed between subgraphs are not specified by the user.

The DLA library determines the addresses of the intermediate data.

Subgraphs may be sliced so that they fit in the stream buffer.

#@ There are limitations on the types of graphs that can be handled.
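The sketch below shows one way a library could fill in intermediate addresses automatically and slice subgraphs whose output is too large. The policy (ping-pong halves of the stream buffer, equal-size slices) and the name `auto_address` are hypothetical; the actual DLA library's algorithm is not described in these notes, and details such as halo regions between slices are ignored.

```python
from typing import Dict, List


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for non-negative a and positive b."""
    return -(-a // b)


def auto_address(intermediate_bytes: List[int],
                 stream_buffer_bytes: int) -> List[Dict[str, int]]:
    """Assign stream-buffer offsets to the outputs of consecutive subgraphs.

    Hypothetical policy:
      * the stream buffer is split into two halves used as a ping-pong pair,
        so subgraph k writes into half k % 2;
      * if an output does not fit in one half, the subgraph is sliced into
        the smallest number of equal-size slices that do fit.
    """
    half = stream_buffer_bytes // 2
    plan = []
    for k, size in enumerate(intermediate_bytes):
        slices = max(1, ceil_div(size, half))
        plan.append({
            "subgraph": k,
            "offset": (k % 2) * half,  # ping-pong half for this output
            "slices": slices,
            "slice_bytes": ceil_div(size, slices),
        })
    return plan


for entry in auto_address([401_408, 1_605_632, 100_352], 1 << 20):
    print(entry)
```

Here the user would still supply the DDR addresses (network inputs, outputs and cross-subgraph dependences); only the on-chip intermediates are placed by the helper.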
