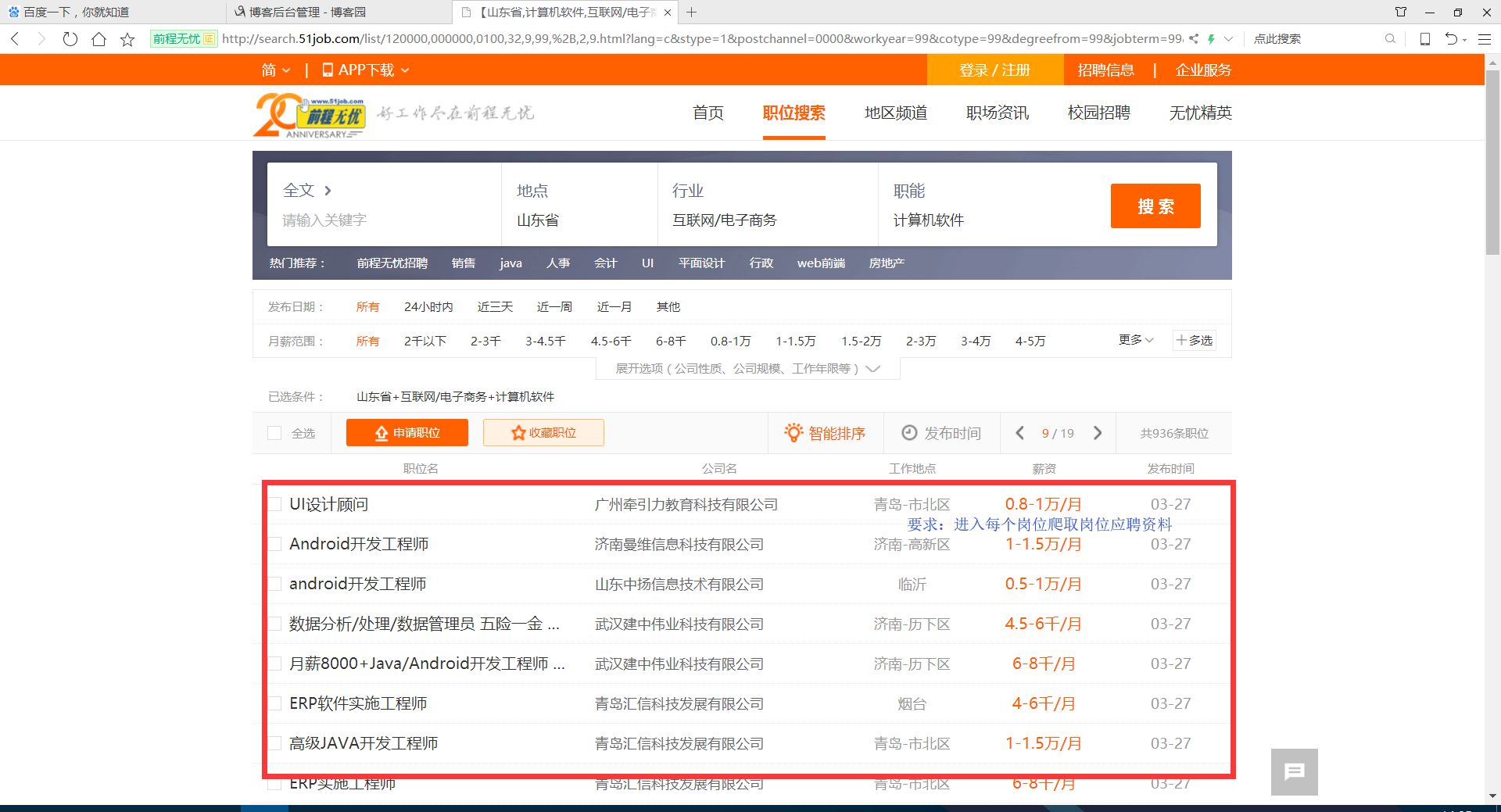

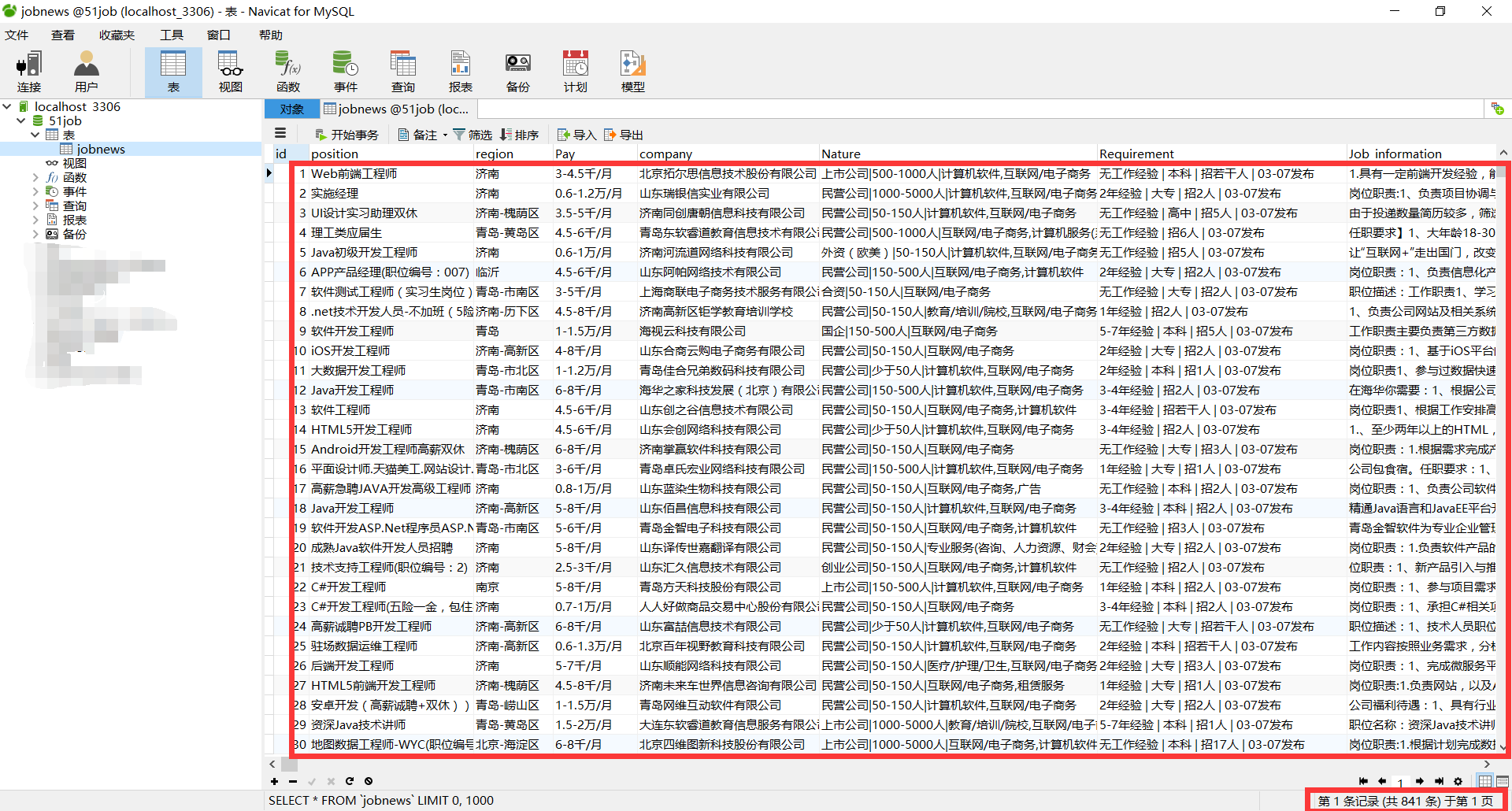

利用Python,爬取 51job 上面有关于 IT行业 的招聘信息

版权声明:未经博主授权,内容严禁分享转载

案例代码:

# __author : "J" # date : 2018-03-07 import urllib.request import re import pymysql connection = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='******', db='51job', charset='utf8') cursor = connection.cursor() num = 0 textnum = 1 while num < 18: num += 1 # 51job IT行业招聘网址 需要翻页,大约800多条数据 request = urllib.request.Request( "http://search.51job.com/list/120000,000000,0100,32,9,99,%2B,2," + str( num) + ".html?lang=c&stype=1&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=1&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=") response = urllib.request.urlopen(request) my_html = response.read().decode('gbk') # print(my_html) my_re = re.compile(r'href="(.*?)" onmousedown="">') second_html_list = re.findall(my_re, my_html) for i in second_html_list: second_request = urllib.request.Request(i) second_response = urllib.request.urlopen(second_request) second_my_html = second_response.read().decode('gbk') # 职位 地区 工资 公司名称 公司简介 # 工作经验 学历 招聘人数 发布时间 # 职位信息 联系方式 公司信息 second_my_re = re.compile('<h1 title=.*?">(.*?)<input value=.*?' + '<span class="lname">(.*?)</span>.*?' + '<strong>(.*?)</strong>.*?' + 'target="_blank" title=".*?">(.*?)<em class="icon_b i_link"></em></a>.*?' + '<p class="msg ltype">(.*?)</p>.*?</div>' , re.S | re.M | re.I) second_html_news = re.findall(second_my_re, second_my_html)[0] zhiwei = second_html_news[0].replace(" | | | ", '').replace(" ", '').replace(" ", '').replace( " ", '') diqu = second_html_news[1].replace(" | | | ", '').replace(" ", '').replace(" ", '').replace( " ", '') gongzi = second_html_news[2].replace(" | | | ", '').replace(" ", '').replace(" ", '').replace( " ", '') gongsimingcheng = second_html_news[3].replace(" | | | ", '').replace(" ", '').replace(" ", '').replace( " ", '') gongsijianjie = second_html_news[4].replace(" | | | ", '').replace(" ", '').replace(" ", '').replace( " ", '') # print(zhiwei,diqu,gongzi,gongsimingcheng,gongsijianjie) try: second_my_re = re.compile('<span class="sp4"><em class="i1"></em>(.*?)</span>' , re.S | re.M | re.I) yaoqiu = re.findall(second_my_re, second_my_html)[0] except Exception as e: pass try: second_my_re = re.compile('<span class="sp4"><em class="i2"></em>(.*?)</span>' , re.S | re.M | re.I) yaoqiu += ' | ' + re.findall(second_my_re, second_my_html)[0] except Exception as e: pass try: second_my_re = re.compile('<span class="sp4"><em class="i3"></em>(.*?)</span>' , re.S | re.M | re.I) yaoqiu += ' | ' + re.findall(second_my_re, second_my_html)[0] except Exception as e: pass try: second_my_re = re.compile('<span class="sp4"><em class="i4"></em>(.*?)</span>' , re.S | re.M | re.I) yaoqiu += ' | ' + re.findall(second_my_re, second_my_html)[0] except Exception as e: pass # print(yaoqiu) second_my_re = re.compile('<div class="bmsg job_msg inbox">(.*?)<div class="mt10">' , re.S | re.M | re.I) gangweizhize = re.findall(second_my_re, second_my_html)[0].replace(" | | | ", '').replace(" ", '').replace( " ", '').replace(" ", '') dr = re.compile(r'<[^>]+>', re.S) gangweizhize = dr.sub('', gangweizhize) second_my_re = re.compile('<span class="bname">联系方式</span>(.*?)<div class="tBorderTop_box">' , re.S | re.M | re.I) lianxifangshi = re.findall(second_my_re, second_my_html)[0].replace(" | | | ", '').replace(" ", '') dr = re.compile(r'<[^>]+>', re.S) lianxifangshi = dr.sub('', lianxifangshi) lianxifangshi = re.sub('s', '', lianxifangshi) second_my_re = re.compile('<span class="bname">公司信息</span>(.*?)<div class="tCompany_sidebar">' , re.S | re.M | re.I) gongsixinxi = re.findall(second_my_re, second_my_html)[0].replace(" ", '') dr = re.compile(r'<[^>]+>', re.S) gongsixinxi = dr.sub('', gongsixinxi) gongsixinxi = re.sub('s', '', gongsixinxi) print('第 '+str(textnum) + ' 条数据 **********************************************') print(zhiwei, diqu, gongzi, gongsimingcheng, gongsijianjie, yaoqiu, gangweizhize, lianxifangshi, gongsixinxi) textnum += 1 # try: # sql = "INSERT INTO `jobNews` (`position`,`region`,`Pay`,`company`,`Nature`,`Requirement`,`Job_information`,`Contact_information`,`Company_information`) VALUES ('" + zhiwei + "','" + diqu + "','" + gongzi + "','" + gongsimingcheng + "','" + gongsijianjie + "','" + yaoqiu + "','" + gangweizhize + "','" + lianxifangshi + "','" + gongsixinxi + "')" # cursor.execute(sql) # connection.commit() # print('存储成功!') # except Exception as e: # pass cursor.close() connection.close()

效果:

我正则表达式用的不好,所以写的很麻烦,接受建议~