English Proficiency Assessment for Test Engineers

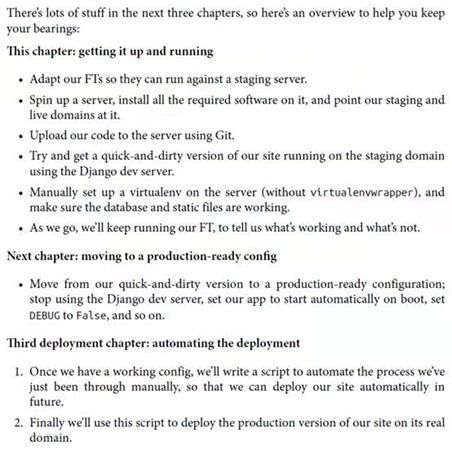

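1. English Test 1: IT Industry Testing Vocabulary

Requirement: Translate the passage into Chinese within 10 minutes, without using any internet tools or websites.

Evaluation criterion: the candidate passes if the technical terms and phrases are translated accurately.

The passage above is actually taken from IT programming books.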

2. Dialogue About a QA Problem and Proposed Solution

CTO: Hey guys. I just got back from our monthly meeting with Dave the CEO. Boy is he furious. I actually thought at one point his moustache would catch on fire.

Lead Developer: Wow. What happened? Did you guys lose the backups again?

CTO: No, worse! During a recent demo our new UI broke again. Dave was irate because we, and by that of course I mean you, assured him that the software was ready for production. Now we might lose a major new client. And of course he is blaming me. I thought we had fixed all those bugs on staging? I am sick and tired of every single release we have being a huge bag of bugs. I can't even sleep at night.

Lead Developer: Wow that’s terrible. I'm sorry. You know, I think we should reconsider that decision we took at the kickoff not to hire a QA expert. And likewise, by we of course, I mean you and the CEO.

CTO: What? Again with this QA expert? Hire more people? We already have like 5 developers on staff. Why do we need to hire someone else? Why can't you guys just start writing more unit tests like they said at that developer conference last May? These problems are killing the company morale and worrying the stakeholders. Everyone hates us right now. They think we are incompetent morons with no attention to detail.

Lead Developer: Well, our recent move towards TDD and full test coverage has really helped our backend code, but it’s not always feasible on the front end for UI testing. To help with that we started doing automated testing with Selenium. We thought that would be enough. But I guess we still need more test cases to reach 100% coverage. But even then it still feels like we are missing something. We don't do any manual testing or even have an overall system testing strategy in place. I think we may need to bring in extra competence for that.

CTO: Really? Okay. I’ll go talk to the boss. You guys are doing a good job on most stuff, but these bugs have just got to be stopped. Now please get working on a new build without those nasty UI bugs! Maybe we can still salvage this client if we get a patch out soon enough.

Lead Developer: Okay, I hear you, and rest assured that we're on it. I won't go home until these new bugs are fixed. But please get us a QA person. Even a part-time consultant will do for now.

One month later, there is a new meeting with an external QA expert consultant.

QA Expert: Hi! My name is Mary Jenkins and I am a QA specialist from Bugfree Enterprises. I heard you guys have had some problems with your Quality Assurance and testing strategy? Is that true?

CTO: Yes, well we don’t even really have an overall QA strategy in place. But we are interested in hearing about best practices and possibly implementing any reasonable proposals you can give us.

QA Expert: Okay, I see. That's not so rare. Most managers view QA as simply a “final additional task” to give the developers and not as a holistic process from start to finish.

CTO: Yes exactly. Holistic. We need some more holistic thinking!

Lead Developer: Yes I love holistic thinking. Should I get out the candles and the incense?

QA Expert: No, that won't be necessary. We don’t need candles and incense to be holistic in this case. We just need to change our preconceptions a little and implement a few basic new processes.

CTO: Okay. Will this be expensive?

QA Expert: No. Quite the contrary. QA should actually save everyone time and money in the medium to long term. And QA gives everyone more peace of mind and pride in their work. You won’t necessarily even have to hire any additional staff members.

CTO: Thank God! The CEO will love that. Can you give us a quick overview of what we need to do? We can discuss the details later.

QA Expert: Sure. Basically, I like to divide my overall testing strategy into two categories: white box testing and black box testing. The developers will be in charge of white box testing, which includes unit testing, integration testing, regression testing and production error monitoring.

Lead Developer: Great, we are already doing most of that.

QA Expert: Cool. Now you need to do the rest. I can help educate your team on what's missing.

Lead Developer: Thank you. I knew it was a good idea to bring in an expert.

CTO: Okay. So what about the black box testing? Can the developers do that part too?

QA Expert: Uh, no sorry. The black box testing should be done by non-developers. Mostly they will focus on smoke testing and functional testing. And then select customers with early access would be ideal to perform the acceptance testing. That's the basics right there. Just implementing those steps should really improve things.

CTO: Wait… you want non-developers and even the customers to test the software? Is that what you mean by a holistic approach?

QA Expert: Yes, quality is everyone’s responsibility. It’s easy to blame developers for all the problems, but software is so complex and important nowadays that it’s impossible for one division to be solely responsible for building and testing it.

Lead Developer: Wow, I get it now. Holistic. Even the customers get involved. It's brilliant! This is really eye-opening. I feel like this is all starting to make sense now.

CTO: Yeah I must admit it's a bit of a revelation to me as well. I can't wait to tell the CEO. This might be just what we need. Get the customers involved, wow!

QA Expert: Awesome. Great that you guys get it. Now let's spend the rest of our time today going into more detail and dividing up tasks. Let's grab a meeting room with a whiteboard so we can start brainstorming.

Lead Developer: Okay great, but wait! I think we should honor this zen moment by splurging on some incense and candles for the meeting room. What harm could that do?

CTO: None at all I suppose! Go for it.

Lead Developer: Great. I'll be back in 15 minutes, I will get some donuts as well while I am out. They are my holistic pastry of choice.

-- EVERYONE LAUGHS --

English listening audio for the dialogue above:

Link: https://pan.baidu.com/s/1WvV2WCoq5oWMompQWzX9Og

Extraction code: dxbu

Reading Comprehension Questions

Dave the CTO is angry because the backend of the application broke during a demo.

A. True

B. False

The Lead Developer was one of the first people to realize that the company needed a QA Expert.

A. True

B. False

Black box testing should only be performed by developers who are familiar with the code.

A. True

B. False

3. The following professional testing terms can also be used as interview questions:

A

A/B testing

A/B testing involves comparing two (or more) different UI options and finding which one works best for users. “Best” may be defined in many ways, e.g. the button layout that generates the most interactions, or the wording that best engages a user’s interest. The key to good A/B testing is good instrumentation of your application, which allows you to record and analyze user interactions properly.
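
To make the comparison concrete, here is a minimal Python sketch. It rests on two assumptions that are not in the text above. First, users are assigned to a variant by hashing their ID, so each user always sees the same option. Second, “best” means the higher click-through rate, compared with a standard two-proportion z-test. The function names and the counts in the usage example are hypothetical.

```python
import hashlib
import math


def assign_variant(user_id: str, variants=("A", "B")) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """z-statistic for the difference between two conversion rates (B minus A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if A and B were equal
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se


if __name__ == "__main__":
    # Illustrative, made-up counts: clicks on two button layouts.
    print(assign_variant("user-42"))
    z = two_proportion_z(conv_a=120, n_a=1000, conv_b=160, n_b=1000)
    print(f"z = {z:.2f}")
```

As a rule of thumb, a |z| above about 1.96 suggests that the difference is unlikely to be due to chance at the 5% level. Real experiments usually also fix the sample size in advance.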

Acceptance testing

Acceptance testing is the final stage of a testing cycle. This is when the customer or end-user of the software verifies that it is working as expected. There are various forms of acceptance testing including user acceptance testing, beta testing, and operational acceptance testing.

Ad hoc testing

Ad hoc testing is unplanned, random testing that tries to break your application. Sometimes, ad hoc testing is referred to as “monkey testing” since it is likened to monkeys randomly pressing buttons. However, this is unfair. While ad hoc testing is unplanned, it is focussed. A skilled ad hoc tester will know how to trip systems up and will try all these tricks to cause the system to fail.

Assertion

An assertion is a check in an automated test that compares the actual result with the expected result. The assertion fails if the actual result is different from what you expected it to be. This is a key concept in functional testing. Assertions are most commonly used in Unit testing, but the same concept applies to other forms of automated testing.
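
Below is a minimal Python sketch that uses the standard unittest module. `apply_discount` is a hypothetical function under test. Each `assertEqual` call is an assertion: the test fails if the actual value differs from the expected one.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    return round(price * (1 - percent / 100), 2)


class DiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        # The assertion compares the actual result with the expected result.
        self.assertEqual(apply_discount(50.0, 10), 45.0)

    def test_no_discount(self):
        self.assertEqual(apply_discount(19.99, 0), 19.99)


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the tests finish.
    unittest.main(argv=["discount-tests"], exit=False)
```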

Automated testing

Automated testing describes any form of testing where a computer runs the tests rather than a human. Typically, this means automated UI testing. The aim is to get the computer to replicate the test steps that a human tester would perform.

Autonomous testing

Autonomous testing is a term for any test automation system that is able to operate without human assistance. As with most things, autonomy is a spectrum.

B

Behavior-driven development

Behavior-driven development or BDD focuses on the needs of the end-user, looking at how the software behaves, not whether it passes the tests. BDD was developed as a reaction to Test-driven development (see below). BDD is often closely linked with Gherkin, a domain-specific language created to describe tests.

Beta testing

Beta testing is a common form of acceptance testing. It involves releasing a version of your software to a limited number of real users. They are free to use the software as they wish and are encouraged to give feedback. The software and backend are usually instrumented to allow you to see which features are being used and to record crash reports, etc. if there are any bugs.

Black-box testing

In black-box testing, the tester makes no assumptions about how the system under test works. All she knows is how it is meant to behave. That is, for a given input, she knows what the output should be. See also White-box testing.

Bug

A bug is a generic description for any issue with a piece of software that stops it from functioning as intended. Bugs can range from minor UI problems up to issues that cause the software to crash. The aim of software testing is to minimize (or preferably eliminate) bugs in your software. See also Defect and Regression.

C

Canary testing

In canary testing, a small percentage of users (around 5-10%) is moved to your new code. Your aim is to compare their user experience with that of users still on the old version of the code. This way, your new code is tested on a wide range of devices while running on your production servers under real-world conditions. By comparing the new code with the existing code, you can spot any unexpected behaviors and record any changes or improvements in performance.
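
Here is a minimal Python sketch of one way to split users. Each user ID is hashed into one of 100 stable buckets, and buckets below a threshold go to the canary. The 5% figure, the hashing scheme and the function names are assumptions. In practice, this kind of routing often lives in a load balancer or feature-flag service.

```python
import hashlib

CANARY_PERCENT = 5  # assumption: route roughly 5% of users to the new code


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route(user_id: str) -> str:
    """Return which release should serve this user."""
    return "canary" if bucket(user_id) < CANARY_PERCENT else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    canary = sum(route(u) == "canary" for u in users)
    print(f"{canary / len(users):.1%} of users routed to the canary")
```

Hashing rather than random choice means a user stays on the same version across requests. This keeps their experience consistent and makes before/after comparisons meaningful.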

Checkpoint

In testing, a checkpoint is an intermediate verification step (Assertion) used to confirm that the test is proceeding correctly. Ideally, you should use frequent checkpoints in a complex test. They allow you to “fail fast”, that is, identify that there is a problem in a test without needing to complete every step. They also help to make your tests more robust.

Code coverage

This is related to Unit testing. Code coverage measures the proportion of your code (for example lines, branches, or functions) that is actually executed when your tests run. Typically, this is given as a percentage. Many CI tools measure this automatically, and it may be used as a criterion for accepting a pull request.
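
The following hedged Python sketch works through the arithmetic. Real coverage tools collect the executed lines by tracing the test run. Here, both sets of line numbers are made up, and the 80% threshold for the pull-request gate is an assumption.

```python
def line_coverage(executable: set[int], executed: set[int]) -> float:
    """Percentage of executable lines that ran at least once during the tests."""
    if not executable:
        return 100.0  # design choice: nothing to cover counts as fully covered
    return 100.0 * len(executable & executed) / len(executable)


def meets_threshold(percent: float, minimum: float = 80.0) -> bool:
    """Hypothetical CI gate: accept a pull request only at or above a minimum."""
    return percent >= minimum


if __name__ == "__main__":
    # Illustrative line numbers: 10 executable lines, 8 of them hit by tests.
    executable = set(range(1, 11))
    executed = {1, 2, 3, 4, 5, 6, 7, 8}
    pct = line_coverage(executable, executed)
    print(f"{pct:.0f}% covered, gate passed: {meets_threshold(pct)}")
    # prints "80% covered, gate passed: True"
```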

Component testing

A component consists of several software functions combined together. Typically, these are tested using the White-box approach. However, you can use Black-box testing where you only make limited assumptions about how the code works. The aim is to check that the component functions correctly. To do this, the tests need to provide suitable input values.

CSS selector

A CSS selector is usually used to determine what style to apply to a given element on a web page. In Selenium and other forms of Test script, a CSS selector can be reused to actually choose an element on the page. See Selectors.

D

Data-driven testing

Data-driven testing is a form of Automated testing. In data-driven testing, you run multiple versions of a test using different data each time. For each set of data, you know the expected outcome. Data can come from a CSV file, an XML document, or a database. This form of testing is really useful when you need to run many variations of the same test, for instance, if you have a site that needs to work in multiple regions with different postal address formats.
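
Below is a hedged Python sketch of the postal-code example, in which one test function runs once per data row read from CSV. The three regional patterns are simplified, hypothetical rules rather than complete postal formats. The CSV is inlined as a string so that the example is self-contained.

```python
import csv
import io
import re

# Hypothetical, simplified postal-code rules; real formats have more edge cases.
POSTAL_PATTERNS = {
    "US": re.compile(r"\d{5}(-\d{4})?"),
    "NL": re.compile(r"\d{4} ?[A-Z]{2}"),
    "CA": re.compile(r"[A-Z]\d[A-Z] ?\d[A-Z]\d"),
}


def is_valid_postal_code(region: str, code: str) -> bool:
    """Function under test (an illustrative stand-in for real address logic)."""
    pattern = POSTAL_PATTERNS.get(region)
    return bool(pattern and pattern.fullmatch(code))


# In practice this data would live in a CSV file, an XML document, or a database.
TEST_DATA = """region,code,expected
US,94105,true
US,94105-1234,true
US,9410,false
NL,1012 AB,true
NL,1012AB,true
CA,K1A 0B1,true
CA,12345,false
"""


def run_data_driven_tests(data: str) -> list[str]:
    """Run the same check once per data row and collect any failures."""
    failures = []
    for row in csv.DictReader(io.StringIO(data)):
        expected = row["expected"] == "true"
        actual = is_valid_postal_code(row["region"], row["code"])
        if actual != expected:
            failures.append(f"{row['region']} {row['code']!r}: expected {expected}, got {actual}")
    return failures


if __name__ == "__main__":
    failures = run_data_driven_tests(TEST_DATA)
    print("all rows passed" if not failures else "\n".join(failures))
```

Adding a new region or edge case only requires a new data row, not a new test function.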

Defect

A defect is a form of software Bug. Defects occur when the software fails to conform to the specification. Sometimes, this will cause the software to fail in some way, but more often a defect may be visual or may have limited functional impact.

Distributed testing

In distributed testing, your tests run across multiple systems in parallel. There are various ways that distributed testing can help with test automation. It may involve multiple end systems accessing one backend to conduct more accurate stress testing. Or it might be multiple versions of the test running in parallel on different systems. It can even just involve dividing your tests across different servers, each running a given browser.

E

End-to-end testing

End-to-end testing involves testing the complete set of user flows to ensure that your software behaves as intended. The aim is to test the software from the perspective of a user. Typically, these tests should start from installing the software from scratch on a clean system and should include registering a new user, etc.

Expected result

The expected result of a test is the correct output based on the inputs given in the test. Every test plan should define the expected result at each step of the test.

Exploratory testing

In exploratory testing, there are no formal test cases. Instead, the tester designs and runs tests on the fly, guided by their experience and by what they learn about the system as they go. A common use is to track down bugs that you know exist but cannot replicate. The aim is then to reliably reproduce the bug so that the developers can identify what is triggering it, with the tester using their experience to work out likely causes. See also Ad hoc testing.

F

Failure

A test failure happens when a test doesn’t complete, or when it doesn’t produce the expected result. Some test failures are spurious and happen because of issues with the test itself. See False-positive result.

False-negative result

In software testing, a false-negative is a test that incorrectly passes despite the presence of an issue. Typically, false-negative results happen when the expected result hasn’t been correctly defined. This can be a common problem in UI testing, where tests fail to check the whole UI for errors.

False-positive result

In software testing, a false-positive test is one where the test fails incorrectly. Typically, this is because of an error in the test itself, rather than a failure in the system. In automated testing, such failures are common and result in the need for Test maintenance.

Functional testing

Functional tests verify that your application does what it’s designed to do. More specifically, you are aiming to test each functional element of your software to verify that the output is correct. Functional testing covers Unit testing, Component testing, and UI testing among others.

G

Gherkin

Gherkin is a domain-specific language created for Behavior-driven development. It describes the expected behavior of software as scenarios written in structured, near-natural language, using keywords such as Feature, Scenario, Given, When, and Then. This format makes it easier to automatically test the resulting software.

I

Integration testing

Integration testing involves testing complete systems formed of multiple components. The specific aim is to check that the components function correctly together. Each component is treated as a black box, and some components may be replaced with fake inputs. Integration testing is vital when you have several teams working on a large piece of software.
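
Here is a hedged Python sketch. `CheckoutService` is a hypothetical component that is wired to a fake payment gateway, which stands in for an external service, and to a simple in-memory order store. The test checks that these components work together, rather than checking each one in isolation.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    amount: float


class FakePaymentGateway:
    """Fake replacing a real payment component that is unavailable in tests."""

    def __init__(self, decline_over: float = 1000.0):
        self.decline_over = decline_over
        self.charges: list[tuple[str, float]] = []

    def charge(self, order_id: str, amount: float) -> bool:
        self.charges.append((order_id, amount))
        return amount <= self.decline_over


class InMemoryOrderStore:
    """Simple stand-in for the persistence component."""

    def __init__(self):
        self.status: dict[str, str] = {}

    def save(self, order_id: str, status: str) -> None:
        self.status[order_id] = status


class CheckoutService:
    """Component that coordinates the gateway and the store."""

    def __init__(self, gateway, store):
        self.gateway = gateway
        self.store = store

    def checkout(self, order: Order) -> str:
        paid = self.gateway.charge(order.order_id, order.amount)
        status = "paid" if paid else "declined"
        self.store.save(order.order_id, status)
        return status


def test_checkout_integration() -> None:
    gateway, store = FakePaymentGateway(), InMemoryOrderStore()
    service = CheckoutService(gateway, store)
    assert service.checkout(Order("o-1", 25.0)) == "paid"
    assert service.checkout(Order("o-2", 5000.0)) == "declined"
    # Verify the interaction between components, not just each return value.
    assert gateway.charges == [("o-1", 25.0), ("o-2", 5000.0)]
    assert store.status == {"o-1": "paid", "o-2": "declined"}


if __name__ == "__main__":
    test_checkout_integration()
    print("integration test passed")
```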

Intelligent test agent

An intelligent test agent uses machine learning and other forms of artificial intelligence to help create, execute, assess, and maintain automated tests. In effect, it acts as a test engineer that never needs sleep, holidays or days off. One example of such a system is Functionize.

K

Keyword-driven framework

Keyword-driven testing frameworks are a popular approach for simplifying test creation. They use simple natural language processing coupled with fixed keywords to define tests, which can help non-technical users create tests. The Functionize ALP™ engine understands certain keywords, but it is also able to understand unstructured text, making it a more powerful approach.
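
The keyword-lookup part of such a framework can be sketched in a few lines of Python. This sketch does not show how Functionize or any other specific product works, and it does no natural language processing. The keywords, the `|` separator and the simulated login page are all assumptions made for illustration.

```python
from typing import Callable


def open_page(state: dict, name: str) -> None:
    state["page"] = name


def enter_text(state: dict, field: str, value: str) -> None:
    state["fields"][field] = value


def click(state: dict, button: str) -> None:
    # Hypothetical app behavior: the right password logs the user in.
    if button == "login" and state["fields"].get("password") == "secret":
        state["page"] = "dashboard"


def verify_page(state: dict, name: str) -> None:
    if state["page"] != name:
        raise AssertionError(f"expected page {name!r}, got {state['page']!r}")


# Keyword table: each fixed keyword maps to the function that implements it.
KEYWORDS: dict[str, Callable[..., None]] = {
    "Open Page": open_page,
    "Enter Text": enter_text,
    "Click": click,
    "Verify Page": verify_page,
}

# A test a non-programmer could write: a keyword, then its arguments, per line.
LOGIN_TEST = """
Open Page | login
Enter Text | username | alice
Enter Text | password | secret
Click | login
Verify Page | dashboard
"""


def run_keyword_test(text: str) -> dict:
    """Execute each line by looking up its keyword; return the final state."""
    state = {"page": None, "fields": {}}
    for line in text.strip().splitlines():
        keyword, *args = [part.strip() for part in line.split("|")]
        KEYWORDS[keyword](state, *args)
    return state


if __name__ == "__main__":
    print(run_keyword_test(LOGIN_TEST)["page"])
```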

L

Load testing

Load testing is a form of Performance testing. In load testing, you are evaluating how your backend behaves when it is subjected to high load. The aim is not to break the system; rather, you want to check how performance changes as the load increases towards the highest load you expect. This allows you to ensure your backend systems are able to scale to cope with this load. If you sustain this high load for a long period, you are doing endurance testing.

M

Maintainability

Maintainability is an abstract concept that refers to how easily a piece of software can be maintained. Typically, this means providing good documentation, following known coding conventions, using meaningful variable and function names, etc. In software testing, maintainability refers to how easily tests can be updated when you make changes to your system. For Test plans, this means adding comments to explain the aims of the plan. For Test scripts, it means documenting and commenting them properly.

Manual testing

This form of testing involves a human performing all the test steps, recording the outcome, and analyzing the results. Manual testing can be quite challenging because it often involves repeating the same set of steps many times. It is essential to always perform each Test step correctly to ensure consistency between different rounds of testing.

ML Engine

Functionize describes its ML engine as the brains of its system: AI technology for test automation. It combines multiple forms of machine learning with computer vision, natural language processing, and more traditional data science techniques. This allows the engine to accurately model how your UI is meant to work, which ensures that you need minimal test maintenance.

Mutation testing

This is a form of Unit testing designed to measure how well your tests catch errors. To achieve this, the source code is changed in small, controlled ways (each changed version is called a mutant), and you check whether your tests fail on the changed code. A mutant that your tests do not catch points to a gap in your test suite.
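
Below is a minimal Python sketch of a single mutation, built with the standard ast module. The function `is_adult`, the `>=` to `>` mutation operator and both test suites are hypothetical. Dedicated mutation-testing tools apply many such operators automatically.

```python
import ast

SOURCE = '''
def is_adult(age):
    return age >= 18
'''


class SwapGtE(ast.NodeTransformer):
    """Mutation operator: replace every `>=` comparison with `>`."""

    def visit_Compare(self, node: ast.Compare) -> ast.Compare:
        self.generic_visit(node)
        node.ops = [ast.Gt() if isinstance(op, ast.GtE) else op for op in node.ops]
        return node


def load(tree: ast.Module):
    """Compile a module AST and return the is_adult function it defines."""
    namespace: dict = {}
    exec(compile(ast.fix_missing_locations(tree), "<mutant>", "exec"), namespace)
    return namespace["is_adult"]


def weak_suite(fn) -> bool:
    """Only tests values far from the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn) -> bool:
    """Also tests the boundary values 17 and 18."""
    return weak_suite(fn) and fn(18) is True and fn(17) is False


def mutant_killed(suite) -> bool:
    """True if the suite passes on the original code but fails on the mutant."""
    original = load(ast.parse(SOURCE))
    assert suite(original), "the suite must pass on the original code"
    mutant = load(SwapGtE().visit(ast.parse(SOURCE)))
    return not suite(mutant)


if __name__ == "__main__":
    print("weak suite kills mutant:", mutant_killed(weak_suite))      # False
    print("strong suite kills mutant:", mutant_killed(strong_suite))  # True
```

The weak suite passes on both versions, so the mutant survives and exposes the missing boundary test. The strong suite kills it.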

N

NLP

Functionize uses Natural Language Processing to create tests from test plans written in plain English. Its version of NLP is purpose-built for testing, which allows it to parse and comprehend both structured and unstructured test plans. The resulting tests are able to run cross-browser and cross-platform.

O

Object Recognition

Object recognition is a key part of computer vision. It involves a computer learning how to recognize objects within an image by object localization, classification, and semantic segmentation. This can be used in testing to help identify objects on the screen without the use of CSS-selectors. See also Visual testing.

Operational acceptance testing

Operational acceptance testing involves final checks on your system to ensure it is ready for your software to go live. This will include Performance testing, checking that your systems fail over correctly, verifying that your load balancers are working, etc. See Acceptance testing.

Orchestration

Orchestration is a key part of Automated testing. It involves combining multiple tests to create complex test suites while ensuring the tests don’t interfere with one another. This includes aspects like making sure different tests don’t try to use the same test user simultaneously; ensuring that the system is in the correct state at the start of each test; etc.

P

Peak testing

A peak test is a form of Performance test where you check how well your system handles large transient spikes in load. These flash crowds are often the hardest thing for any system to cope with. Any public-facing system should be tested like this alongside Stress testing.

Penetration testing

Penetration testing is a form of Security testing where you employ an external agent to try and attack your system and penetrate or circumvent your security controls. This is an essential part of Acceptance testing for any modern software system.

Performance testing

Performance testing is interested in how well your system behaves under real-life conditions. This includes aspects such as application responsiveness and latency, server load, and database performance. See also Load testing and Stress testing.

Q

Quality / Quality assurance

Quality assurance is the process of ensuring all your software meets a suitable level of quality. That is, ensuring that it is tested and documented well, fixing all bugs and defects, and verifying that it meets the user requirements. Many companies will adopt quality management frameworks such as ISO 9001.

R

Regression testing

Regression testing is the process of verifying that any code changes you have made haven’t broken your existing software. Typically, this is done by verifying that no known bugs have recurred (this is known as a regression). However, it also involves checking that the overall application still works properly.

S

Security testing

As the name suggests, this is about verifying that your application is secure. That means checking that your AAA (authentication, authorization, and accounting) system is working, verifying firewall settings, etc. A key element of this is Penetration testing, but there are other aspects too.

Selectors

A selector is used by test scripts to identify and choose objects on the screen. These are then used to perform some action such as clicking, entering text, etc. There are various forms of selectors that are commonly used. These include simple HTML IDs, CSS-selectors and XPath queries.

Selenium

Selenium is the original general-purpose framework for automated testing of UIs. It was developed in 2004 by Jason Huggins while he was working at ThoughtWorks. Selenium is an open-source project incorporating a number of modules designed to automate the testing of web and mobile applications. Core modules include Selenium WebDriver, Selenium Server, and Selenium Grid.

Smoke testing

Smoke testing is a form of Regression testing. In a smoke test, you aim to test all the major user journeys in your application. It is designed to be a quick check that nothing major is broken. However, it cannot guarantee that there are no bugs, defects or regressions.

Stress testing

Stress testing is a form of Performance testing. Here, you are explicitly trying to push your application beyond its expected operating conditions. This is typically done by applying large and increasing load to the backend. You want to measure three things. Firstly, how gracefully does it fail? Secondly, how much simultaneous load can it cope with before failing? Thirdly, how many new sessions can it cope with arriving at once? See also Peak testing.

System testing

System testing involves testing your complete software once all the components have been integrated. It is the penultimate stage of testing, coming just before Acceptance testing. The aim is to verify that everything works properly. System testing should be performed against the requirements set out by the product team during the design phase and against any subsequent design changes.

T

Test

A test is the specific set of steps designed to verify a particular feature. Tests exist in both manual and automated testing. Some tests are extremely simple, consisting of just a few steps. Others are more complex and may even include branches. At Functionize, tests are produced by our ALP™ engine. See also Test plan and Test step.

Test automation

See Automated testing.

Test case

A test case is the complete set of prerequisites, required data, and expected outcomes for a given instance of a Test. A test case may be designed to pass or to fail. Often this depends on the data passed to the Test. Some test cases may include a set of different data (see Data-driven testing).

Test-driven development

A development approach in which you write a failing test before you write the code it verifies. You then write just enough code to make the test pass. Finally, you refine and refactor the code, for example to make it more performant, while keeping all the tests passing. The cycle is repeated in small increments.
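
Below is a small Python illustration of the cycle, using unittest and a hypothetical `slugify` function. The tests are written first and fail until the implementation below them exists. The implementation is kept as simple as the tests allow.

```python
import unittest


# Step 1 (red): write the tests first. Before `slugify` exists, they fail.
class SlugifyTest(unittest.TestCase):
    def test_lowercases_and_joins_words(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_strips_punctuation(self):
        self.assertEqual(slugify("QA: Best Practices!"), "qa-best-practices")


# Step 2 (green): write just enough code to make the tests pass.
# Step 3 (refactor): tidy the implementation while the tests stay green.
def slugify(title: str) -> str:
    """Hypothetical example function: turn a title into a URL slug."""
    words = "".join(ch if ch.isalnum() else " " for ch in title).split()
    return "-".join(word.lower() for word in words)


if __name__ == "__main__":
    unittest.main(argv=["tdd-example"], exit=False)
```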

Test maintenance

Test maintenance is the necessary process of updating Test plans and Test scripts to reflect changes in the UI and behavior of your software. Test maintenance is a particular issue in Automated testing. Often, with Selenium, test maintenance can require more resources than creating new test scripts. As a result, it is a source of inefficiency.

Test plan

A test plan is the detailed set of Test steps that are needed to test a given user journey. Typically, test plans will be generated from test management tools. However, they can also be written manually. Test plans usually require input from the product team to ensure you are testing all aspects of the software. However, some test plans are developed following Exploratory testing to replicate known bugs. Note that for regression testing, you will often reuse test plans from earlier rounds of testing.

Test script

Test scripts are used for automated testing. Typically, test scripts are written for Selenium. They can be written in any of the languages Selenium supports, including Java, C#, JavaScript, Python, and Ruby. The Selenium client library sends each command in the script to a browser-specific driver using the WebDriver protocol, and the driver executes it on a real browser.

Test step

A test plan is made up of individual test steps. Each step will have a set of starting conditions, a set of required actions, and an expected outcome. A step may be as simple as “Verify that the login button is shown at the top right of the page”. Or it may be more complex: “Locate the Nike Air Force 1 trainers on the screen. Select size 10 ½, then click ‘add to basket’. You should be taken to the shopping basket page with the correct pair of trainers in the basket.”

Test suite

A test suite is a set of tests and test cases designed to work together. Typical projects have several test suites for things like regression testing, smoke testing, and performance testing.

U

UI testing

UI testing involves checking that all elements of your application UI are working. This can include testing all user journeys and application flows, checking the actual UI elements, and ensuring the overall UX works. For many applications, UI testing is vital. Typically, when people talk about automated testing, they mean automated UI testing.

Unit testing

Unit tests are used by developers to test individual functions. This is a form of White-box testing. That is, the developer knows exactly what the function should do. Good unit tests will check both valid and invalid inputs. One way to check the quality of your unit tests is Mutation testing.
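
Here is a hedged Python sketch of a unit test that covers both valid and invalid inputs. `parse_quantity` and its range of 1 to 99 are hypothetical. `subTest` reports each failing input separately instead of stopping at the first one.

```python
import unittest


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parse a basket quantity from 1 to 99."""
    value = int(text)  # raises ValueError for non-numeric input
    if not 1 <= value <= 99:
        raise ValueError(f"quantity out of range: {value}")
    return value


class ParseQuantityTest(unittest.TestCase):
    def test_valid_inputs(self):
        for text, expected in [("1", 1), ("42", 42), ("99", 99)]:
            with self.subTest(text=text):
                self.assertEqual(parse_quantity(text), expected)

    def test_invalid_inputs(self):
        # Boundaries just outside the range, a negative, and non-numeric input.
        for text in ["0", "100", "-3", "abc", ""]:
            with self.subTest(text=text):
                with self.assertRaises(ValueError):
                    parse_quantity(text)


if __name__ == "__main__":
    unittest.main(argv=["unit-tests"], exit=False)
```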

Usability testing

This is the process of checking how easy it is to actually use a piece of software. It measures the user experience (UX). One common approach is A/B testing.

User acceptance testing

User acceptance testing is one of the two main types of Acceptance testing. Here, the aim is to check that end-users are able to use the software as expected. This may be done using focussed user panels, but more often is done using approaches such as Beta testing.

V

V-model

The V-model for testing associates each stage of the classic waterfall development model with an equivalent stage of testing. It originated in hardware testing. Despite the link with waterfall, it also works for agile development.

Validation

Validation is a key aspect of Acceptance testing. It involves verifying that you are building a system that does what it is expected to. If you are building software for a client, then validation involves checking with them that you have delivered what they wanted. If you are building software for general release, then the Product Owner or Head of Product becomes the final arbiter. Contrast this with Verification.

Verification

Verification is the process of checking that software is working correctly. That is, checking that it is bug- and defect-free and that the backend works. This covers all stages of testing up to System testing, along with Load and Stress testing.

Visual testing

Visual testing relies on Object recognition, but couples it with other forms of artificial intelligence. In visual testing, screenshots are used to check whether a test step has succeeded or not. Functionize uses screenshots taken before, during, and after each test step. It compares these against all previous test runs. Any anomalies are identified and highlighted on the screen. The system ignores elements that are expected to change, such as the date, and can cope with UI design changes (e.g. styling changes).

W

White-box testing

In white-box testing, the tester is aware of exactly what the module under test does, and how it does it. In other words, they know the inner workings of the module. The aim is to explicitly test every part of the code. This is different from Black-box testing.

X

XPath query

An XPath query is an expression used to locate a specific node within an XML document. Because browsers expose a web page as a DOM tree, XPath can also be used to locate any element within a page very precisely, even deeply nested ones. However, constructing XPath queries is quite complex, so other Selectors, such as CSS selectors, are usually used instead.
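
Below is a small Python sketch that uses the standard library's xml.etree.ElementTree. This module supports only a limited subset of XPath and parses only well-formed XML, not arbitrary HTML. The page fragment and its attributes are made up. In a browser, Selenium would evaluate a full XPath expression passed with `By.XPATH`.

```python
import xml.etree.ElementTree as ET

# A small, well-formed page fragment (ElementTree needs XML, not arbitrary HTML).
PAGE = """
<html>
  <body>
    <div id="header"><button id="login">Log in</button></div>
    <div class="product" data-sku="AF1-10.5">
      <span class="name">Air Force 1</span>
      <button class="add">Add to basket</button>
    </div>
    <div class="product" data-sku="XYZ-9">
      <span class="name">Other trainer</span>
      <button class="add">Add to basket</button>
    </div>
  </body>
</html>
"""

root = ET.fromstring(PAGE.strip())

# Attribute predicates select one element out of many similar ones.
login = root.find(".//button[@id='login']")
product = root.find(".//div[@data-sku='AF1-10.5']")
add_button = product.find("button[@class='add']")
names = [s.text for s in root.findall(".//div[@class='product']/span[@class='name']")]

if __name__ == "__main__":
    print(login.text, "|", product.find("span").text, "|", add_button.text)
    print(names)
```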

Other references:

http://www.aptest.com/glossary.html

https://www.mstb.org/Downloadfile/ISTQB%20Glossary%20of%20Testing%20Terms%202.1.pdf

https://www.iso.org/obp/ui/#iso:std:iso-iec-ieee:24765:ed-2:v1:en

https://ieeexplore.ieee.org/document/159342/definitions#definitions


Author: Petter Liu

Source: http://www.cnblogs.com/wintersun/

The copyright of this article is shared by the author and cnblogs. Reposting is welcome, but unless the author agrees otherwise, this statement must be retained and a link to the original article must be given in a prominent position on the page; otherwise, the author reserves the right to pursue legal liability.

This article is also published on my independent blog, Petter Liu Blog.