The previous post, "docker-compose ELK+Filebeat: viewing Docker and container logs", showed how to create containers with docker-compose and collect all Docker logs into ELK, using Filebeat to read the container log files.

Video source: [ ElasticSearch 3 ] How to install EFK stack using Docker with Fluentd

Reference code: https://github.com/justmeandopensource/elk/tree/master/docker-efk

This time the container logs are read with a docker-compose EFK stack (Elasticsearch, Fluentd, Kibana).

The docker-compose.yml file looks like this:

    version: '2.2'
    services:
      fluentd:
        build: ./fluentd
        container_name: fluentd
        volumes:
          - ./fluentd/conf:/fluentd/etc
        ports:
          - "24224:24224"
          - "24224:24224/udp"

      # Elasticsearch requires your vm.max_map_count set to 262144
      # Default will be 65530
      # sysctl -w vm.max_map_count=262144
      # Add this to /etc/sysctl.conf for making it permanent
      elasticsearch:
        image: docker.elastic.co/elasticsearch/elasticsearch:6.5.4
        container_name: elasticsearch
        environment:
          - bootstrap.memory_lock=true
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
        ulimits:
          memlock:
            soft: -1
            hard: -1
        volumes:
          - esdata1:/usr/share/elasticsearch/data
        ports:
          - 9200:9200

      kibana:
        image: docker.elastic.co/kibana/kibana:6.5.4
        container_name: kibana
        environment:
          ELASTICSEARCH_URL: "http://elasticsearch:9200"
        ports:
          - 5601:5601
        depends_on:
          - elasticsearch

    volumes:
      esdata1:
        driver: local
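
The comment in the compose file says Elasticsearch needs `vm.max_map_count` set to 262144. The following is a minimal sketch for checking that on the Docker host before starting the stack. It assumes a Linux host that exposes the setting at `/proc/sys/vm/max_map_count`; the function names are made up for this illustration.

```python
from pathlib import Path

# Value taken from the comment in docker-compose.yml.
REQUIRED_MAX_MAP_COUNT = 262144
# Assumption: a Linux host exposing the kernel setting under /proc.
PROC_PATH = Path("/proc/sys/vm/max_map_count")


def parse_max_map_count(text: str) -> int:
    """Parse the raw contents of the proc file into an integer."""
    return int(text.strip())


def is_sufficient(current: int, required: int = REQUIRED_MAX_MAP_COUNT) -> bool:
    """Return True when the current setting meets the required minimum."""
    return current >= required


if __name__ == "__main__":
    if not PROC_PATH.exists():
        print(f"{PROC_PATH} not found; this check only applies to Linux hosts")
    else:
        current = parse_max_map_count(PROC_PATH.read_text())
        if is_sufficient(current):
            print(f"vm.max_map_count={current}: OK")
        else:
            print(
                f"vm.max_map_count={current} is too low; "
                f"run: sysctl -w vm.max_map_count={REQUIRED_MAX_MAP_COUNT}"
            )
```

If the check reports a value that is too low, the `sysctl -w` command from the compose file comment raises it until the next reboot.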

fluentd => Dockerfile

    FROM fluent/fluentd
    RUN ["gem", "install", "fluent-plugin-elasticsearch", "--no-rdoc", "--no-ri"]

fluentd => fluent.conf

    <source>
      @type forward
      port 24224
    </source>

    # Store Data in Elasticsearch
    <match *.**>
      @type copy
      <store>
        @type elasticsearch
        host elasticsearch
        port 9200
        include_tag_key true
        tag_key @log_name
        logstash_format true
        flush_interval 10s
      </store>
    </match>

misc => clients-td-agent.conf

    <source>
      @type syslog
      @id input_syslog
      port 42185
      tag centosvm01.system
    </source>

    <match *.**>
      @type forward
      @id forward_syslog
      <server>
        host <fluentd-ip-address>
      </server>
    </match>
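
To try out the client configuration, one option is to send a test syslog message to the port it listens on. The sketch below builds an RFC 3164 style line and sends it over UDP to port 42185. It rests on several assumptions: that the agent accepts UDP and parses RFC 3164 (which, as far as I know, are the syslog input's defaults), and that the agent runs on `127.0.0.1`. The hostname, ident, and function names are placeholders made up for this illustration.

```python
import socket
from datetime import datetime

# Fixed English month names, so the output does not depend on the locale.
MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def build_rfc3164_message(message: str, ts: datetime,
                          hostname: str = "centosvm01",  # placeholder host name
                          ident: str = "demo",           # placeholder program name
                          facility: int = 1,             # 1 = user
                          severity: int = 6) -> bytes:   # 6 = info
    """Build '<PRI>Mmm dd hh:mm:ss host ident: message' as bytes."""
    pri = facility * 8 + severity
    # RFC 3164 pads single-digit days with a space, e.g. 'Jan  5'.
    timestamp = f"{MONTHS[ts.month - 1]} {ts.day:2d} {ts:%H:%M:%S}"
    return f"<{pri}>{timestamp} {hostname} {ident}: {message}".encode("utf-8")


def send_syslog_udp(payload: bytes, host: str = "127.0.0.1",
                    port: int = 42185) -> None:
    """Send one datagram to the syslog input (port from clients-td-agent.conf)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


if __name__ == "__main__":
    # Assumption: the client agent is reachable on localhost.
    send_syslog_udp(build_rfc3164_message("hello from the EFK test", datetime.now()))
```

If the forwarding works, the message should show up in Kibana a little after the 10s flush interval configured in fluent.conf.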

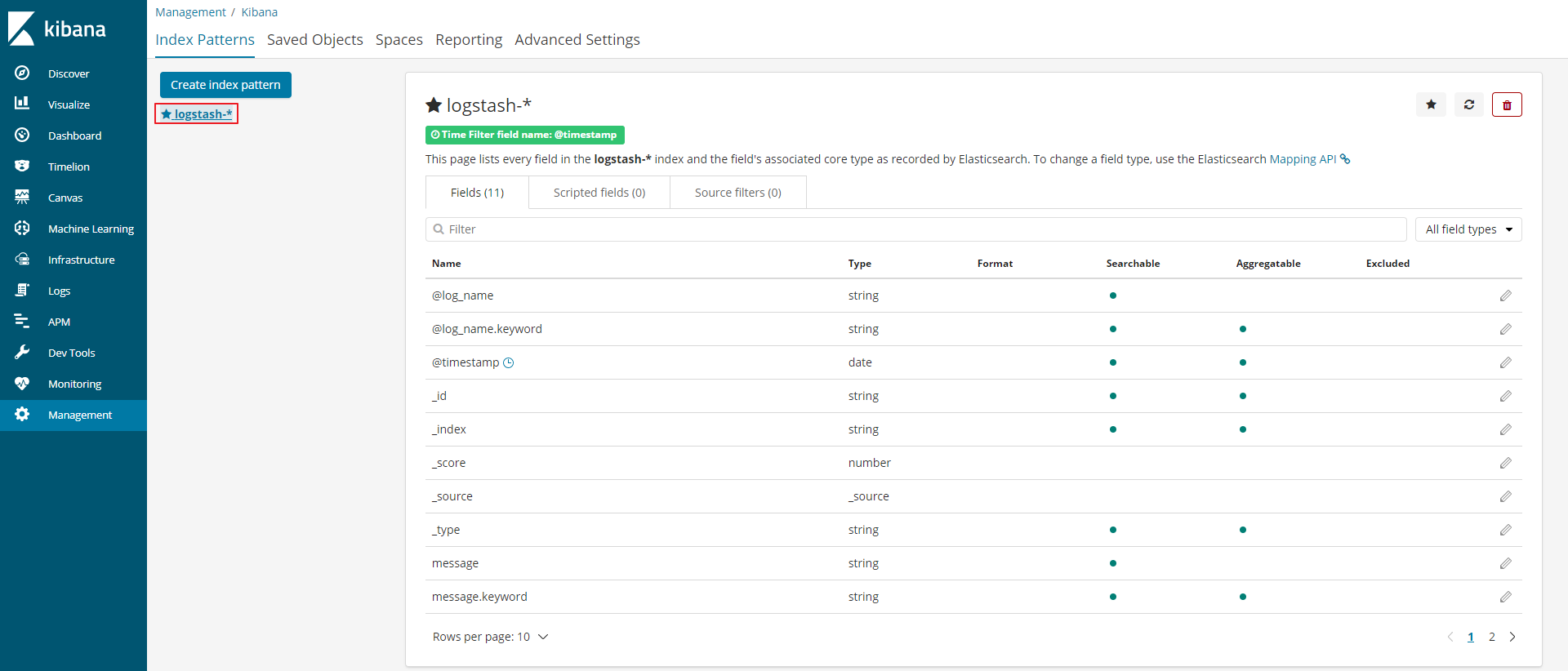

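Getting the whole stack running is quite simple. After opening [HostIP:5601], you can see that Kibana is already up. An index pattern can be created as before, but this time its name differs from the one used with ELK: it is now logstash-*, because fluent.conf sets logstash_format true, which makes the Elasticsearch output write to logstash-prefixed daily indices. The logs can be read as well.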
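
To make the logstash-* pattern concrete, here is a small sketch of how the daily index names come out with `logstash_format true`. It assumes the plugin's default settings as I understand them (prefix `logstash`, date format `%Y.%m.%d`, dates in UTC); the function name is made up for this illustration and is not part of any library.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch


def logstash_index_name(ts: datetime, prefix: str = "logstash") -> str:
    """Return the daily index name for an event timestamp.

    Assumes the default prefix and date format, with the date taken in UTC.
    Pass a timezone-aware datetime; a naive one is treated as local time.
    """
    utc = ts.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


if __name__ == "__main__":
    name = logstash_index_name(datetime(2019, 1, 15, 12, 0, tzinfo=timezone.utc))
    print(name)
    # The Kibana index pattern works like a wildcard over these names.
    print(fnmatch(name, "logstash-*"))
```

Every day's index matches the same logstash-* pattern, which is why one index pattern in Kibana covers all of the collected logs.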