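Why use containers?

1. The release process is cumbersome

Development -> testing -> resource request -> approval -> deployment -> testing, and so on.

2. Low resource utilization

Server utilization is generally low, so a lot of capacity is wasted.

3. Scaling out and in is slow

Scaling out at peak times goes through a lengthy process, so capacity arrives too late.

4. Bloated server environments

Servers accumulate more and more software over time, which makes maintenance and migration difficult.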

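Docker design goals:

- Provide a simple tool for packaging applications

- Separate the responsibilities of developers and operators

- Keep multiple environments consistent

Kubernetes design goals:

- Manage all containers centrally

- Resource orchestration

- Resource scheduling

- Elastic scaling

- Resource isolation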

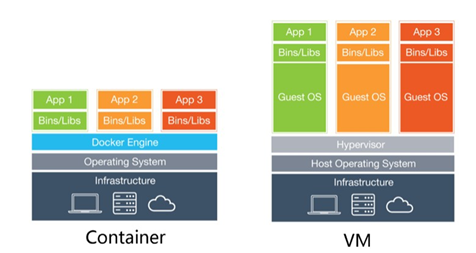

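Containers vs. virtual machines

Advantages:

1. Environment governance

2. Higher server resource utilization

3. New technology stacks can be set up quickly, without learning a complex deployment procedure

4. Lightweight

5. Covers business scenarios that virtualization cannot satisfy

6. Well suited to building microservice deployment environments

7. Build once, deploy anywhere

8. Fast deployment, migration, and rollback, independent of the underlying environment

9. Keeps multiple environments highly consistent

Disadvantages:

1. Weaker security than a VM

2. Weaker isolation than a VM, because containers share the host kernel

3. Hard to manage at scale, which is the problem Kubernetes (K8s) emerged to solve

4. Stateful applications are harder to deploy

5. Troubleshooting is harder

6. Linux containers need a Linux kernel, so they do not run natively on Windows (Docker Desktop runs them inside a Linux VM; Windows containers are a separate image type)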

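How it works

cgroups: resource limits, for example CPU and memory

namespaces: resource isolation for processes, file systems, users, and so on

UnionFS (union file system): images are stored as incremental layers, which improves disk utilization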
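On a Linux host, the kernel exposes the namespaces and cgroup membership of every process under /proc. The sketch below only reads those files for the current shell. It assumes a Linux /proc layout and prints a notice on other systems; the variable name ns_count is illustrative.

```shell
#!/bin/sh
# Count the namespace types the current process belongs to (Linux only).
ns_count=0
if [ -d /proc/self/ns ]; then
    # Each entry (pid, mnt, net, uts, ipc, user, ...) is one namespace type.
    for ns in /proc/self/ns/*; do
        [ -L "$ns" ] || continue
        ns_count=$((ns_count + 1))
        echo "namespace: $(basename "$ns")"
    done
    # The cgroup file lists the control groups that apply to this process.
    cat /proc/self/cgroup 2>/dev/null || true
else
    echo "no /proc/self/ns here; this sketch needs a Linux host"
fi
echo "namespace types visible: $ns_count"
```

Running the same script on the host and inside a container should show different namespace identifiers if you also print the link targets with ls -l /proc/self/ns.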

Docker basic usage

Notes on installing Docker:

1. Switch to a domestic (China) yum mirror

2. Keep the server time zone and clock set to local (China) time

3. Disable SELinux and firewalld

    # Download the Aliyun repo files for Docker CE and for the CentOS 7 base packages
    curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

    # Configure a registry mirror
    sudo mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json <<-'EOF'
    {
      "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
    }
    EOF
    sudo systemctl daemon-reload
    sudo systemctl restart docker

    # Inspect the nginx image
    docker image inspect nginx

Installing Docker on CentOS 7.x

    # Install dependencies
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Add the Docker package repository
    yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    # Install Docker CE
    yum install -y docker-ce
    # Start the Docker service and enable it at boot
    systemctl start docker
    systemctl enable docker

Official documentation: https://docs.docker.com

Aliyun mirror: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

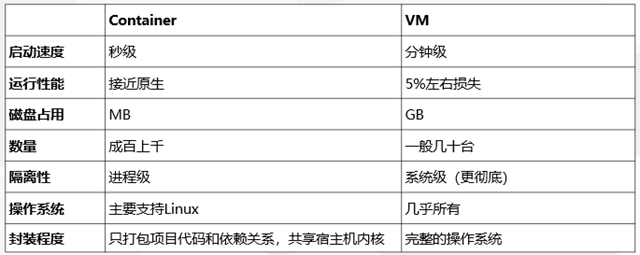

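Understanding container images

What is an image?

- A file stored in layers

- The environment for a piece of software

- One image can create any number of containers

- A standardized unit of delivery

- A minimal Linux operating system that does not include the Linux kernel

An image is not a single file; it is made up of multiple layers. docker history <ID/NAME> shows the content and size of each layer, and each layer corresponds to one instruction in the Dockerfile. By default, Docker stores images under /var/lib/docker/<storage-driver>.

Where do images come from?

Docker Hub is the public registry maintained by Docker, Inc. It hosts a large number of container images, and the Docker tool pulls from it by default. Address: https://hub.docker.com

Configure a registry mirror (accelerator): https://www.daocloud.io/mirror

    curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io

As the figure shows, a container is the image with a read-write layer added on top. When a file is modified in a running container, the file is first copied from the image into the container's own file system (the read-write layer) and modified there.

When the container is deleted, the read-write layer is deleted with it and the changes are lost. No matter how many containers share an image, every write operates on a copy taken from the image's file system and never modifies the image itself, which improves disk utilization.

To persist such changes, use docker commit to save the container as a new image.

- One image can create multiple containers

- Images are stored incrementally

- Changes made inside a container do not affect the image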
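The copy-on-write behavior described above can be imitated with two plain directories. This is a toy model, not how Docker's storage drivers are implemented: image_layer stands for the read-only image, container_layer for the container's read-write layer, and the helper names cow_read and cow_write are made up for illustration.

```shell
#!/bin/sh
# Toy copy-on-write model using two directories (not a real union mount).
work=$(mktemp -d)
image_layer="$work/image"          # stands for the read-only image content
container_layer="$work/container"  # stands for the container's read-write layer
mkdir -p "$image_layer" "$container_layer"
echo "from image" > "$image_layer/index.html"

# Read: prefer the container layer, fall back to the image layer.
cow_read() {
    if [ -f "$container_layer/$1" ]; then
        cat "$container_layer/$1"
    else
        cat "$image_layer/$1"
    fi
}

# Write: copy the file up from the image first, then modify the copy.
cow_write() {
    if [ ! -f "$container_layer/$1" ] && [ -f "$image_layer/$1" ]; then
        cp "$image_layer/$1" "$container_layer/$1"
    fi
    echo "$2" >> "$container_layer/$1"
}

cow_write index.html "changed in container"
after_write=$(cow_read index.html)              # image line plus the appended line
image_content=$(cat "$image_layer/index.html")  # the image copy is untouched

# Deleting the container removes its layer, so the change is lost.
rm -rf "$container_layer"
mkdir -p "$container_layer"
after_delete=$(cow_read index.html)

echo "$after_write"
echo "image: $image_content"
echo "after delete: $after_delete"
rm -rf "$work"
```

With a real container, docker diff lists the files that ended up in the read-write layer.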

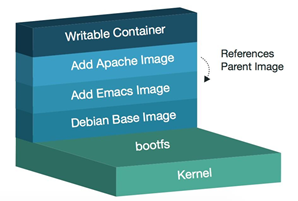

Common image management commands

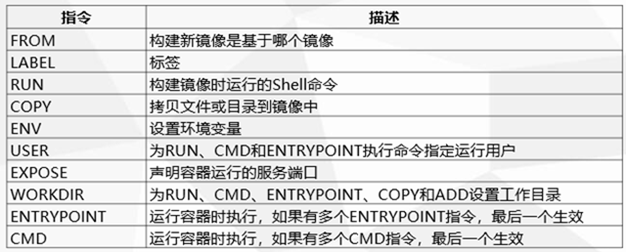

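Tips for writing a Dockerfile:

1. For the smallest image, choose alpine as the base

2. Put shell commands into as few RUN instructions as possible to reduce the number of image layers

3. Clean up caches and files left behind during the build

    FROM centos:7
    RUN yum install -y gcc gcc-c++ make openssl-devel pcre-devel
    RUN ./configure --prefix=/usr/local/nginx --with-http_ssl_module --with-http_stub_status_module && make -j 4 && make install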

创建应用容器并做资源限制

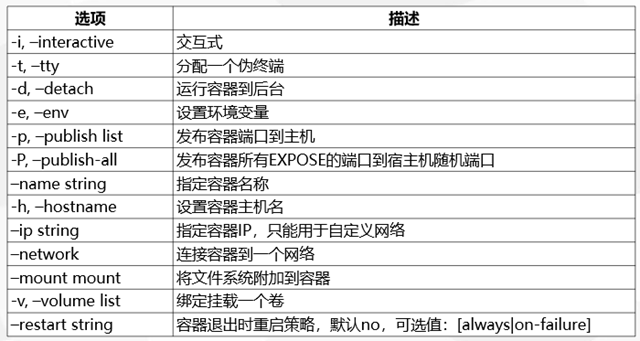

创建容器常用选项

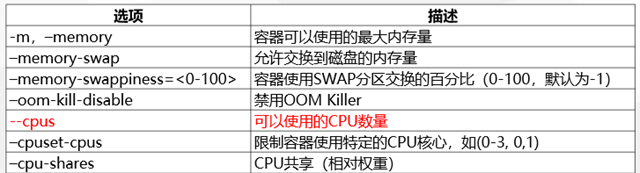

容器资源限制参数表

示例:

内存限额: 允许容器最多使用500M内存和100M的Swap,并禁用 OOM Killer: docker run -d --name nginx03 --memory="500m" --memory-swap="600m" --oom-kill-disable nginx CPU限额: 允许容器最多使用一个半的CPU: docker run -d --name nginx04 --cpus="1.5" nginx 允许容器最多使用50%的CPU: docker run -d --name nginx05 --cpus=".5" nginx
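The arithmetic behind these flags can be checked in a few lines. The sketch below computes the swap allowance from the two memory flags and the value Docker stores for --cpus, then, only if a container named nginx03 from the example exists, prints the limits Docker recorded. The helper swap_allowance_mb is hypothetical; HostConfig.Memory and HostConfig.MemorySwap are fields of the docker inspect output.

```shell
#!/bin/sh
# Swap allowance = --memory-swap minus --memory (both given in MB here).
swap_allowance_mb() {
    echo $(( $2 - $1 ))
}
swap_mb=$(swap_allowance_mb 500 600)
echo "swap allowance: ${swap_mb} MB"

# Docker stores --cpus in units of one billionth of a CPU (NanoCpus).
nano_cpus=$(( 15 * 1000000000 / 10 ))
echo "expected NanoCpus for --cpus=1.5: $nano_cpus"

# Query Docker only when the CLI and the example container are present.
if command -v docker >/dev/null 2>&1 && docker inspect nginx03 >/dev/null 2>&1; then
    # Memory and MemorySwap are reported in bytes.
    docker inspect --format '{{.HostConfig.Memory}} {{.HostConfig.MemorySwap}}' nginx03
fi
```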

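Common container management commands

Create a container that:

1. is named hello

2. exposes port 88 for external access

3. is passed the variable test=123456

4. restarts automatically, including after the host reboots

    docker run -d --name hello -e test=123456 -p 88:80 --restart=always nginx

General syntax and common invocations:

    docker run [OPTIONS] IMAGE [COMMAND] [ARG...]
    docker exec -it nginx bash
    docker run -it centos bash
    nginx -g "daemon off;"

Resource limits cover: memory, CPU, disk, network

Data that needs to be persisted:

1. Logs, usually for log collection and troubleshooting

2. Configuration files, such as the nginx configuration

3. Business data, such as MySQL data and website code

4. Temporary cache data, such as the nginx proxy cache

-v <volume name or source directory>:<target path in the container>

Points to note for bind mounts:

1. With --mount, the host file or directory must already exist for the mount to succeed; with -v, a missing host path is created as a directory

2. The host file or directory hides whatever already exists at the target path in the container

Image categories:

1. Base images, for example centos (yum), ubuntu (apt), alpine (apk)

2. Runtime images, for example php, jdk, python

3. Project images: packaged, deployable images

Building an image:

1. Choose a base image that matches the production operating system

2. Create a container from the base image and manually run through deploying your application in it once

3. Work out the command that starts the application in the foreground

Installing from source:

0. Install dependencies, for example gcc and make

1. ./configure

2. make

3. make install

PHP image:

1. PHP environment, able to run any PHP script

2. PHP-FPM

    java -jar xxx.jar

docker-compose: a single-host container orchestration tool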

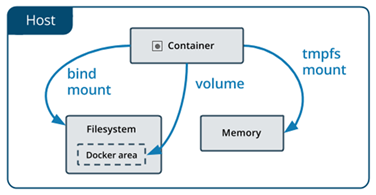

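Persisting application data in containers

Docker offers three ways to mount data from the host into a container:

- volumes: a part of the host file system managed by Docker (/var/lib/docker/volumes). The preferred way to persist data.

- bind mounts: mount a file or directory from any location on the host into the container.

- tmpfs: the mount is stored in host memory and is never written to the host file system. Use tmpfs when the data should not be persisted anywhere; it also improves performance by avoiding writes to the container's writable layer.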
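The three mount types map onto the --mount flag as sketched below. The script is a dry run by default: it prints each command and executes it only when DRY_RUN=0 is set. The volume name nginx-vol, the container names, and the host path /app/wwwroot are illustrative assumptions, not values from these notes.

```shell
#!/bin/sh
# Print each command; execute it only when the caller sets DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

# 1. Named volume, stored by Docker under /var/lib/docker/volumes
run docker volume create nginx-vol
run docker run -d --name web-volume \
    --mount type=volume,src=nginx-vol,dst=/usr/share/nginx/html nginx

# 2. Bind mount of a host directory (with --mount the host path must exist)
run docker run -d --name web-bind \
    --mount type=bind,src=/app/wwwroot,dst=/usr/share/nginx/html nginx

# 3. tmpfs mount, kept in host memory only
run docker run -d --name web-tmpfs --mount type=tmpfs,dst=/tmp nginx
```

Once the containers are running, docker volume inspect nginx-vol shows where the volume lives on the host.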

Building common base images with Dockerfiles

    [root@mysql dockerfile]# tree
    .
    ├── java
    │   └── Dockerfile
    ├── nginx
    │   ├── Dockerfile
    │   ├── nginx-1.15.5.tar.gz
    │   └── nginx.conf
    ├── php
    │   ├── Dockerfile
    │   ├── php-5.6.36.tar.gz
    │   ├── php-fpm.conf
    │   └── php.ini
    └── tomcat
        ├── apache-tomcat-8.5.43.tar.gz
        └── Dockerfile

Building the Nginx base image

    FROM centos:7
    LABEL maintainer="www.ctnrs.com"
    # Install build dependencies and basic tools, then clean the yum cache
    RUN yum install -y gcc gcc-c++ make \
        openssl-devel pcre-devel gd-devel \
        iproute net-tools telnet wget curl && \
        yum clean all && \
        rm -rf /var/cache/yum/*
    COPY nginx-1.15.5.tar.gz /
    # Compile nginx from source, add a status page, remove the sources, set the time zone
    RUN tar zxf nginx-1.15.5.tar.gz && \
        cd nginx-1.15.5 && \
        ./configure --prefix=/usr/local/nginx \
        --with-http_ssl_module \
        --with-http_stub_status_module && \
        make -j 4 && make install && \
        rm -rf /usr/local/nginx/html/* && \
        echo "ok" >> /usr/local/nginx/html/status.html && \
        cd / && rm -rf nginx* && \
        ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    ENV PATH=$PATH:/usr/local/nginx/sbin
    COPY nginx.conf /usr/local/nginx/conf/nginx.conf
    WORKDIR /usr/local/nginx
    EXPOSE 80
    # Run nginx in the foreground so the container stays alive
    CMD ["nginx", "-g", "daemon off;"]
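Building and smoke-testing this image could look like the following sketch. It is a dry run by default and executes only with DRY_RUN=0. It assumes the directory layout from the tree listing (a ./nginx directory holding the Dockerfile, the tarball, and nginx.conf); the container name nginx01 and host port 88 are illustrative, and whether /status.html is actually served depends on the nginx.conf, which is not shown in these notes.

```shell
#!/bin/sh
# Print each command; execute it only when the caller sets DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

image="nginx:v1"

# Build from the directory that holds the Dockerfile and its files.
run docker build -t "$image" ./nginx
# Publish container port 80 on host port 88.
run docker run -d --name nginx01 -p 88:80 "$image"
# The Dockerfile writes "ok" into html/status.html.
run curl -s http://127.0.0.1:88/status.html
```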

Building the PHP base image

    FROM centos:7
    LABEL maintainer="www.ctnrs.com"
    # Install build dependencies and basic tools, then clean the yum cache
    RUN yum install epel-release -y && \
        yum install -y gcc gcc-c++ make gd-devel libxml2-devel \
        libcurl-devel libjpeg-devel libpng-devel openssl-devel \
        libmcrypt-devel libxslt-devel libtidy-devel autoconf \
        iproute net-tools telnet wget curl && \
        yum clean all && \
        rm -rf /var/cache/yum/*
    COPY php-5.6.36.tar.gz /
    # Compile PHP with FPM, install default configs, keep FPM in the foreground
    RUN tar zxf php-5.6.36.tar.gz && \
        cd php-5.6.36 && \
        ./configure --prefix=/usr/local/php \
        --with-config-file-path=/usr/local/php/etc \
        --enable-fpm --enable-opcache \
        --with-mysql --with-mysqli --with-pdo-mysql \
        --with-openssl --with-zlib --with-curl --with-gd \
        --with-jpeg-dir --with-png-dir --with-freetype-dir \
        --enable-mbstring --with-mcrypt --enable-hash && \
        make -j 4 && make install && \
        cp php.ini-production /usr/local/php/etc/php.ini && \
        cp sapi/fpm/php-fpm.conf /usr/local/php/etc/php-fpm.conf && \
        sed -i "90a daemonize = no" /usr/local/php/etc/php-fpm.conf && \
        mkdir /usr/local/php/log && \
        cd / && rm -rf php* && \
        ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    ENV PATH=$PATH:/usr/local/php/sbin
    # Override the default configs with the ones kept next to the Dockerfile
    COPY php.ini /usr/local/php/etc/
    COPY php-fpm.conf /usr/local/php/etc/
    WORKDIR /usr/local/php
    EXPOSE 9000
    CMD ["php-fpm"]

The php-fpm.conf copied into the image:

;;;;;;;;;;;;;;;;;;;;;

; FPM Configuration ;

;;;;;;;;;;;;;;;;;;;;;

; All relative paths in this configuration file are relative to PHP's install

; prefix (/usr/local/php). This prefix can be dynamically changed by using the

; '-p' argument from the command line.

; Include one or more files. If glob(3) exists, it is used to include a bunch of

; files from a glob(3) pattern. This directive can be used everywhere in the

; file.

; Relative path can also be used. They will be prefixed by:

; - the global prefix if it's been set (-p argument)

; - /usr/local/php otherwise

;include=etc/fpm.d/*.conf

;;;;;;;;;;;;;;;;;;

; Global Options ;

;;;;;;;;;;;;;;;;;;

[global]

; Pid file

; Note: the default prefix is /usr/local/php/var

; Default Value: none

pid = /var/run/php-fpm.pid

; Error log file

; If it's set to "syslog", log is sent to syslogd instead of being written

; in a local file.

; Note: the default prefix is /usr/local/php/var

; Default Value: log/php-fpm.log

error_log = /usr/local/php/log/php-fpm.log

; syslog_facility is used to specify what type of program is logging the

; message. This lets syslogd specify that messages from different facilities

; will be handled differently.

; See syslog(3) for possible values (ex daemon equiv LOG_DAEMON)

; Default Value: daemon

;syslog.facility = daemon

; syslog_ident is prepended to every message. If you have multiple FPM

; instances running on the same server, you can change the default value

; which must suit common needs.

; Default Value: php-fpm

;syslog.ident = php-fpm

; Log level

; Possible Values: alert, error, warning, notice, debug

; Default Value: notice

log_level = warning

; If this number of child processes exit with SIGSEGV or SIGBUS within the time

; interval set by emergency_restart_interval then FPM will restart. A value

; of '0' means 'Off'.

; Default Value: 0

;emergency_restart_threshold = 0

; Interval of time used by emergency_restart_interval to determine when

; a graceful restart will be initiated. This can be useful to work around

; accidental corruptions in an accelerator's shared memory.

; Available Units: s(econds), m(inutes), h(ours), or d(ays)

; Default Unit: seconds

; Default Value: 0

emergency_restart_interval = 24h

; Time limit for child processes to wait for a reaction on signals from master.

; Available units: s(econds), m(inutes), h(ours), or d(ays)

; Default Unit: seconds

; Default Value: 0

process_control_timeout = 5s

; The maximum number of processes FPM will fork. This has been design to control

; the global number of processes when using dynamic PM within a lot of pools.

; Use it with caution.

; Note: A value of 0 indicates no limit

; Default Value: 0

; process.max = 128

; Specify the nice(2) priority to apply to the master process (only if set)

; The value can vary from -19 (highest priority) to 20 (lower priority)

; Note: - It will only work if the FPM master process is launched as root

; - The pool process will inherit the master process priority

; unless it specified otherwise

; Default Value: no set

; process.priority = -19

; Send FPM to background. Set to 'no' to keep FPM in foreground for debugging.

; Default Value: yes

daemonize = no

; Set open file descriptor rlimit for the master process.

; Default Value: system defined value

rlimit_files = 10240

; Set max core size rlimit for the master process.

; Possible Values: 'unlimited' or an integer greater or equal to 0

; Default Value: system defined value

;rlimit_core = 0

; Specify the event mechanism FPM will use. The following is available:

; - select (any POSIX os)

; - poll (any POSIX os)

; - epoll (linux >= 2.5.44)

; - kqueue (FreeBSD >= 4.1, OpenBSD >= 2.9, NetBSD >= 2.0)

; - /dev/poll (Solaris >= 7)

; - port (Solaris >= 10)

; Default Value: not set (auto detection)

;events.mechanism = epoll

; When FPM is build with systemd integration, specify the interval,

; in second, between health report notification to systemd.

; Set to 0 to disable.

; Available Units: s(econds), m(inutes), h(ours)

; Default Unit: seconds

; Default value: 10

;systemd_interval = 10

;;;;;;;;;;;;;;;;;;;;

; Pool Definitions ;

;;;;;;;;;;;;;;;;;;;;

; Multiple pools of child processes may be started with different listening

; ports and different management options. The name of the pool will be

; used in logs and stats. There is no limitation on the number of pools which

; FPM can handle. Your system will tell you anyway :)

; Start a new pool named 'www'.

; the variable $pool can we used in any directive and will be replaced by the

; pool name ('www' here)

[www]

; Per pool prefix

; It only applies on the following directives:

; - 'access.log'

; - 'slowlog'

; - 'listen' (unixsocket)

; - 'chroot'

; - 'chdir'

; - 'php_values'

; - 'php_admin_values'

; When not set, the global prefix (or /usr/local/php) applies instead.

; Note: This directive can also be relative to the global prefix.

; Default Value: none

;prefix = /path/to/pools/$pool

; Unix user/group of processes

; Note: The user is mandatory. If the group is not set, the default user's group

; will be used.

user = nobody

group = nobody

; The address on which to accept FastCGI requests.

; Valid syntaxes are:

; 'ip.add.re.ss:port' - to listen on a TCP socket to a specific IPv4 address on

; a specific port;

; '[ip:6:addr:ess]:port' - to listen on a TCP socket to a specific IPv6 address on

; a specific port;

; 'port' - to listen on a TCP socket to all IPv4 addresses on a

; specific port;

; '[::]:port' - to listen on a TCP socket to all addresses

; (IPv6 and IPv4-mapped) on a specific port;

; '/path/to/unix/socket' - to listen on a unix socket.

; Note: This value is mandatory.

;listen = 127.0.0.1:9000

listen = 0.0.0.0:9000

; Set listen(2) backlog.

; Default Value: 65535 (-1 on FreeBSD and OpenBSD)

;listen.backlog = 65535

; Set permissions for unix socket, if one is used. In Linux, read/write

; permissions must be set in order to allow connections from a web server. Many

; BSD-derived systems allow connections regardless of permissions.

; Default Values: user and group are set as the running user

; mode is set to 0660

listen.owner = nobody

listen.group = nobody

;listen.mode = 0660

; When POSIX Access Control Lists are supported you can set them using

; these options, value is a comma separated list of user/group names.

; When set, listen.owner and listen.group are ignored

;listen.acl_users =

;listen.acl_groups =

; List of addresses (IPv4/IPv6) of FastCGI clients which are allowed to connect.

; Equivalent to the FCGI_WEB_SERVER_ADDRS environment variable in the original

; PHP FCGI (5.2.2+). Makes sense only with a tcp listening socket. Each address

; must be separated by a comma. If this value is left blank, connections will be

; accepted from any ip address.

; Default Value: any

; listen.allowed_clients = 127.0.0.1

; Specify the nice(2) priority to apply to the pool processes (only if set)

; The value can vary from -19 (highest priority) to 20 (lower priority)

; Note: - It will only work if the FPM master process is launched as root

; - The pool processes will inherit the master process priority

; unless it specified otherwise

; Default Value: no set

; process.priority = -19

; Set the process dumpable flag (PR_SET_DUMPABLE prctl) even if the process user

; or group is differrent than the master process user. It allows to create process

; core dump and ptrace the process for the pool user.

; Default Value: no

; process.dumpable = yes

; Choose how the process manager will control the number of child processes.

; Possible Values:

; static - a fixed number (pm.max_children) of child processes;

; dynamic - the number of child processes are set dynamically based on the

; following directives. With this process management, there will be

; always at least 1 children.

; pm.max_children - the maximum number of children that can

; be alive at the same time.

; pm.start_servers - the number of children created on startup.

; pm.min_spare_servers - the minimum number of children in 'idle'

; state (waiting to process). If the number

; of 'idle' processes is less than this

; number then some children will be created.

; pm.max_spare_servers - the maximum number of children in 'idle'

; state (waiting to process). If the number

; of 'idle' processes is greater than this

; number then some children will be killed.

; ondemand - no children are created at startup. Children will be forked when

; new requests will connect. The following parameter are used:

; pm.max_children - the maximum number of children that

; can be alive at the same time.

; pm.process_idle_timeout - The number of seconds after which

; an idle process will be killed.

; Note: This value is mandatory.

pm = dynamic

; The number of child processes to be created when pm is set to 'static' and the

; maximum number of child processes when pm is set to 'dynamic' or 'ondemand'.

; This value sets the limit on the number of simultaneous requests that will be

; served. Equivalent to the ApacheMaxClients directive with mpm_prefork.

; Equivalent to the PHP_FCGI_CHILDREN environment variable in the original PHP

; CGI. The below defaults are based on a server without much resources. Don't

; forget to tweak pm.* to fit your needs.

; Note: Used when pm is set to 'static', 'dynamic' or 'ondemand'

; Note: This value is mandatory.

pm.max_children = 200

; The number of child processes created on startup.

; Note: Used only when pm is set to 'dynamic'

; Default Value: min_spare_servers + (max_spare_servers - min_spare_servers) / 2

pm.start_servers = 50

; The desired minimum number of idle server processes.

; Note: Used only when pm is set to 'dynamic'

; Note: Mandatory when pm is set to 'dynamic'

pm.min_spare_servers = 50

; The desired maximum number of idle server processes.

; Note: Used only when pm is set to 'dynamic'

; Note: Mandatory when pm is set to 'dynamic'

pm.max_spare_servers = 100

; The number of seconds after which an idle process will be killed.

; Note: Used only when pm is set to 'ondemand'

; Default Value: 10s

;pm.process_idle_timeout = 10s;

; The number of requests each child process should execute before respawning.

; This can be useful to work around memory leaks in 3rd party libraries. For

; endless request processing specify '0'. Equivalent to PHP_FCGI_MAX_REQUESTS.

; Default Value: 0

pm.max_requests = 51200

; The URI to view the FPM status page. If this value is not set, no URI will be

; recognized as a status page. It shows the following informations:

; pool - the name of the pool;

; process manager - static, dynamic or ondemand;

; start time - the date and time FPM has started;

; start since - number of seconds since FPM has started;

; accepted conn - the number of request accepted by the pool;

; listen queue - the number of request in the queue of pending

; connections (see backlog in listen(2));

; max listen queue - the maximum number of requests in the queue

; of pending connections since FPM has started;

; listen queue len - the size of the socket queue of pending connections;

; idle processes - the number of idle processes;

; active processes - the number of active processes;

; total processes - the number of idle + active processes;

; max active processes - the maximum number of active processes since FPM

; has started;

; max children reached - number of times, the process limit has been reached,

; when pm tries to start more children (works only for

; pm 'dynamic' and 'ondemand');

; Value are updated in real time.

; Example output:

; pool: www

; process manager: static

; start time: 01/Jul/2011:17:53:49 +0200

; start since: 62636

; accepted conn: 190460

; listen queue: 0

; max listen queue: 1

; listen queue len: 42

; idle processes: 4

; active processes: 11

; total processes: 15

; max active processes: 12

; max children reached: 0

;

; By default the status page output is formatted as text/plain. Passing either

; 'html', 'xml' or 'json' in the query string will return the corresponding

; output syntax. Example:

; http://www.foo.bar/status

; http://www.foo.bar/status?json

; http://www.foo.bar/status?html

; http://www.foo.bar/status?xml

;

; By default the status page only outputs short status. Passing 'full' in the

; query string will also return status for each pool process.

; Example:

; http://www.foo.bar/status?full

; http://www.foo.bar/status?json&full

; http://www.foo.bar/status?html&full

; http://www.foo.bar/status?xml&full

; The Full status returns for each process:

; pid - the PID of the process;

; state - the state of the process (Idle, Running, ...);

; start time - the date and time the process has started;

; start since - the number of seconds since the process has started;

; requests - the number of requests the process has served;

; request duration - the duration in µs of the requests;

; request method - the request method (GET, POST, ...);

; request URI - the request URI with the query string;

; content length - the content length of the request (only with POST);

; user - the user (PHP_AUTH_USER) (or '-' if not set);

; script - the main script called (or '-' if not set);

; last request cpu - the %cpu the last request consumed

; it's always 0 if the process is not in Idle state

; because CPU calculation is done when the request

; processing has terminated;

; last request memory - the max amount of memory the last request consumed

; it's always 0 if the process is not in Idle state

; because memory calculation is done when the request

; processing has terminated;

; If the process is in Idle state, then informations are related to the

; last request the process has served. Otherwise informations are related to

; the current request being served.

; Example output:

; ************************

; pid: 31330

; state: Running

; start time: 01/Jul/2011:17:53:49 +0200

; start since: 63087

; requests: 12808

; request duration: 1250261

; request method: GET

; request URI: /test_mem.php?N=10000

; content length: 0

; user: -

; script: /home/fat/web/docs/php/test_mem.php

; last request cpu: 0.00

; last request memory: 0

;

; Note: There is a real-time FPM status monitoring sample web page available

; It's available in: /usr/local/php/share/php/fpm/status.html

;

; Note: The value must start with a leading slash (/). The value can be

; anything, but it may not be a good idea to use the .php extension or it

; may conflict with a real PHP file.

; Default Value: not set

pm.status_path = /status

; The ping URI to call the monitoring page of FPM. If this value is not set, no

; URI will be recognized as a ping page. This could be used to test from outside

; that FPM is alive and responding, or to

; - create a graph of FPM availability (rrd or such);

; - remove a server from a group if it is not responding (load balancing);

; - trigger alerts for the operating team (24/7).

; Note: The value must start with a leading slash (/). The value can be

; anything, but it may not be a good idea to use the .php extension or it

; may conflict with a real PHP file.

; Default Value: not set

;ping.path = /ping

; This directive may be used to customize the response of a ping request. The

; response is formatted as text/plain with a 200 response code.

; Default Value: pong

;ping.response = pong

; The access log file

; Default: not set

;access.log = log/$pool.access.log

; The access log format.

; The following syntax is allowed

; %%: the '%' character

; %C: %CPU used by the request

; it can accept the following format:

; - %{user}C for user CPU only

; - %{system}C for system CPU only

; - %{total}C for user + system CPU (default)

; %d: time taken to serve the request

; it can accept the following format:

; - %{seconds}d (default)

; - %{miliseconds}d

; - %{mili}d

; - %{microseconds}d

; - %{micro}d

; %e: an environment variable (same as $_ENV or $_SERVER)

; it must be associated with embraces to specify the name of the env

; variable. Some exemples:

; - server specifics like: %{REQUEST_METHOD}e or %{SERVER_PROTOCOL}e

; - HTTP headers like: %{HTTP_HOST}e or %{HTTP_USER_AGENT}e

; %f: script filename

; %l: content-length of the request (for POST request only)

; %m: request method

; %M: peak of memory allocated by PHP

; it can accept the following format:

; - %{bytes}M (default)

; - %{kilobytes}M

; - %{kilo}M

; - %{megabytes}M

; - %{mega}M

; %n: pool name

; %o: output header

; it must be associated with embraces to specify the name of the header:

; - %{Content-Type}o

; - %{X-Powered-By}o

; - %{Transfert-Encoding}o

; - ....

; %p: PID of the child that serviced the request

; %P: PID of the parent of the child that serviced the request

; %q: the query string

; %Q: the '?' character if query string exists

; %r: the request URI (without the query string, see %q and %Q)

; %R: remote IP address

; %s: status (response code)

; %t: server time the request was received

; it can accept a strftime(3) format:

; %d/%b/%Y:%H:%M:%S %z (default)

; %T: time the log has been written (the request has finished)

; it can accept a strftime(3) format:

; %d/%b/%Y:%H:%M:%S %z (default)

; %u: remote user

;

; Default: "%R - %u %t "%m %r" %s"

;access.format = "%R - %u %t "%m %r%Q%q" %s %f %{mili}d %{kilo}M %C%%"

; The log file for slow requests

; Default Value: not set

; Note: slowlog is mandatory if request_slowlog_timeout is set

slowlog = log/$pool.log.slow

; The timeout for serving a single request after which a PHP backtrace will be

; dumped to the 'slowlog' file. A value of '0s' means 'off'.

; Available units: s(econds)(default), m(inutes), h(ours), or d(ays)

; Default Value: 0

request_slowlog_timeout = 10

; The timeout for serving a single request after which the worker process will

; be killed. This option should be used when the 'max_execution_time' ini option

; does not stop script execution for some reason. A value of '0' means 'off'.

; Available units: s(econds)(default), m(inutes), h(ours), or d(ays)

; Default Value: 0

request_terminate_timeout = 600

; Set open file descriptor rlimit.

; Default Value: system defined value

rlimit_files = 10240

; Set max core size rlimit.

; Possible Values: 'unlimited' or an integer greater or equal to 0

; Default Value: system defined value

;rlimit_core = 0

; Chroot to this directory at the start. This value must be defined as an

; absolute path. When this value is not set, chroot is not used.

; Note: you can prefix with '$prefix' to chroot to the pool prefix or one

; of its subdirectories. If the pool prefix is not set, the global prefix

; will be used instead.

; Note: chrooting is a great security feature and should be used whenever

; possible. However, all PHP paths will be relative to the chroot

; (error_log, sessions.save_path, ...).

; Default Value: not set

;chroot =

; Chdir to this directory at the start.

; Note: relative path can be used.

; Default Value: current directory or / when chroot

;chdir = /var/www

; Redirect worker stdout and stderr into main error log. If not set, stdout and

; stderr will be redirected to /dev/null according to FastCGI specs.

; Note: on highloaded environement, this can cause some delay in the page

; process time (several ms).

; Default Value: no

;catch_workers_output = yes

; Clear environment in FPM workers

; Prevents arbitrary environment variables from reaching FPM worker processes

; by clearing the environment in workers before env vars specified in this

; pool configuration are added.

; Setting to "no" will make all environment variables available to PHP code

; via getenv(), $_ENV and $_SERVER.

; Default Value: yes

;clear_env = no

; Limits the extensions of the main script FPM will allow to parse. This can

; prevent configuration mistakes on the web server side. You should only limit

; FPM to .php extensions to prevent malicious users to use other extensions to

; exectute php code.

; Note: set an empty value to allow all extensions.

; Default Value: .php

;security.limit_extensions = .php .php3 .php4 .php5

; Pass environment variables like LD_LIBRARY_PATH. All $VARIABLEs are taken from

; the current environment.

; Default Value: clean env

;env[HOSTNAME] = $HOSTNAME

;env[PATH] = /usr/local/bin:/usr/bin:/bin

;env[TMP] = /tmp

;env[TMPDIR] = /tmp

;env[TEMP] = /tmp

; Additional php.ini defines, specific to this pool of workers. These settings

; overwrite the values previously defined in the php.ini. The directives are the

; same as the PHP SAPI:

; php_value/php_flag - you can set classic ini defines which can

; be overwritten from PHP call 'ini_set'.

; php_admin_value/php_admin_flag - these directives won't be overwritten by

; PHP call 'ini_set'

; For php_*flag, valid values are on, off, 1, 0, true, false, yes or no.

; Defining 'extension' will load the corresponding shared extension from

; extension_dir. Defining 'disable_functions' or 'disable_classes' will not

; overwrite previously defined php.ini values, but will append the new value

; instead.

; Note: path INI options can be relative and will be expanded with the prefix

; (pool, global or /usr/local/php)

; Default Value: nothing is defined by default except the values in php.ini and

; specified at startup with the -d argument

;php_admin_value[sendmail_path] = /usr/sbin/sendmail -t -i -f www@my.domain.com

;php_flag[display_errors] = off

;php_admin_value[error_log] = /var/log/fpm-php.www.log

;php_admin_flag[log_errors] = on

;php_admin_value[memory_limit] = 32M

Building the Tomcat base image

    FROM centos:7
    LABEL maintainer="www.ctnrs.com"
    ENV VERSION=8.5.43
    # Install the JDK and basic tools, then clean the yum cache
    RUN yum install java-1.8.0-openjdk wget curl unzip iproute net-tools -y && \
        yum clean all && \
        rm -rf /var/cache/yum/*
    COPY apache-tomcat-${VERSION}.tar.gz /
    # Unpack Tomcat, replace the default webapps with a status page, set the time zone
    RUN tar zxf apache-tomcat-${VERSION}.tar.gz && \
        mv apache-tomcat-${VERSION} /usr/local/tomcat && \
        rm -rf apache-tomcat-${VERSION}.tar.gz /usr/local/tomcat/webapps/* && \
        mkdir /usr/local/tomcat/webapps/test && \
        echo "ok" > /usr/local/tomcat/webapps/test/status.html && \
        sed -i '1a JAVA_OPTS="-Djava.security.egd=file:/dev/./urandom"' /usr/local/tomcat/bin/catalina.sh && \
        ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    ENV PATH=$PATH:/usr/local/tomcat/bin
    WORKDIR /usr/local/tomcat
    EXPOSE 8080
    # Run Tomcat in the foreground
    CMD ["catalina.sh", "run"]

Building the Java base image

    FROM java:8-jdk-alpine
    LABEL maintainer="www.ctnrs.com"
    ENV JAVA_OPTS="$JAVA_OPTS -Dfile.encoding=UTF8 -Duser.timezone=GMT+08"
    # Install time zone data and set the local time zone
    RUN apk add -U tzdata && \
        ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    COPY ./target/eureka-service.jar ./
    EXPOSE 8888
    # Shell form so that $JAVA_OPTS is expanded at start-up
    CMD java -jar $JAVA_OPTS /eureka-service.jar

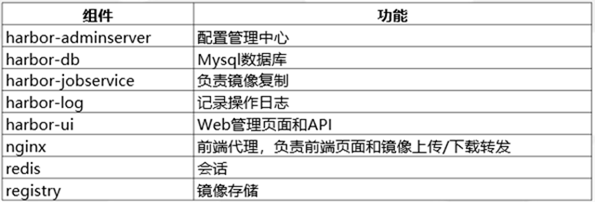

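Enterprise Harbor image registry

Harbor is a container image registry open-sourced by VMware. It extends Docker Registry with enterprise features, which has earned it wide adoption. These features include a management UI, role-based access control, AD/LDAP integration, and audit logging, enough to meet basic enterprise needs. Official site: https://vmware.github.io/harbor/cn/

1. Install Docker and docker-compose (docker-compose depends on a Python environment)

    wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
    yum install docker-ce -y
    systemctl start docker
    systemctl enable docker

2. Unpack the offline installer and deploy

    tar zxvf harbor-offline-installer-v1.9.1.tgz
    cd harbor
    # In harbor.yml, set: hostname: 10.0.0.70
    vi harbor.yml
    ./prepare
    ./install.sh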

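Configure Docker on the Jenkins host to trust the registry

Because Harbor is not configured for HTTPS, Docker must also be told to trust it as an insecure registry.

    # cat /etc/docker/daemon.json
    {
      "registry-mirrors": ["http://f1361db2.m.daocloud.io"],
      "insecure-registries": ["10.0.0.70"]
    }
    # systemctl restart docker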

    [root@mysql harbor]# docker-compose ps
    Name                Command                           State          Ports
    ---------------------------------------------------------------------------------------------
    harbor-core         /harbor/harbor_core               Up (healthy)
    harbor-db           /docker-entrypoint.sh             Up (healthy)   5432/tcp
    harbor-jobservice   /harbor/harbor_jobservice ...     Up (healthy)
    harbor-log          /bin/sh -c /usr/local/bin/ ...    Up (healthy)   127.0.0.1:1514->10514/tcp
    harbor-portal       nginx -g daemon off;              Up (healthy)   8080/tcp
    nginx               nginx -g daemon off;              Up (healthy)   0.0.0.0:80->8080/tcp
    redis               redis-server /etc/redis.conf      Up (healthy)   6379/tcp
    registry            /entrypoint.sh /etc/regist ...    Up (healthy)   5000/tcp
    registryctl         /harbor/start.sh                  Up (healthy)

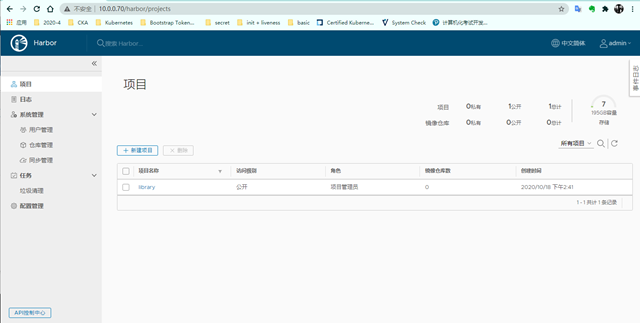

Default password for admin: Harbor12345

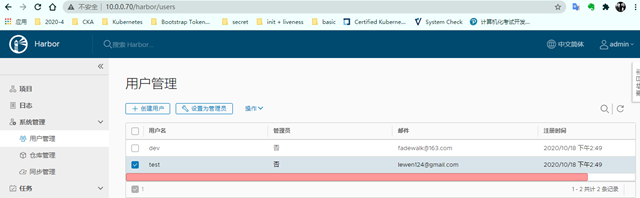

Create a user

Log in to Harbor from a terminal

    # Error
    [root@mysql harbor]# docker login 10.0.0.70
    Username: admin
    Password:
    Error response from daemon: Get http://10.0.0.70/v2/: dial tcp 10.0.0.70:80: connect: connection refused

    # Restart Harbor
    docker-compose down -v
    docker-compose up -d

Configure and push images

1. Trust the HTTP registry

    [root@mysql harbor]# cat /etc/docker/daemon.json
    {
      "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],
      "insecure-registries": ["10.0.0.70"]
    }
    systemctl restart docker

2. Tag the image

    docker tag tomcat:v1 10.0.0.70/library/tomcat:v1

3. Push

    for i in nginx php; do docker push 10.0.0.70/library/${i}:v1; done

4. Pull

    # docker pull 10.0.0.70/library/nginx:v1

After the push completes, check the result.
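Every image has to be tagged with the full registry path before it can be pushed, so tagging and pushing are usually done in the same loop. The sketch below only composes and prints the references; the helper name harbor_ref is hypothetical, and the docker commands are left commented out because they need a running daemon and a prior docker login.

```shell
#!/bin/sh
registry="10.0.0.70"
project="library"

# Compose the full reference: <registry>/<project>/<name>:<tag>
harbor_ref() {
    echo "${registry}/${project}/$1:$2"
}

for name in nginx php tomcat; do
    ref=$(harbor_ref "$name" v1)
    echo "would tag ${name}:v1 as ${ref} and push it"
    # With Docker available and after docker login 10.0.0.70:
    # docker tag "${name}:v1" "$ref" && docker push "$ref"
done
```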

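Building an enterprise Jenkins CI platform on Docker

Continuous Integration (CI): code merging, building, deployment, and testing are done together in one pipeline that runs repeatedly and reports its results.

Continuous Delivery (CD): every change that passes the pipeline is deployed to the test and pre-production environments and kept ready for release; the release to production is a manual decision.

Continuous Deployment (CD): changes that pass the pipeline are released to production automatically, where users can use them.

An efficient CI/CD environment brings:

- Problems are found early

- A much lower failure rate

- Faster iteration

- Lower time cost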

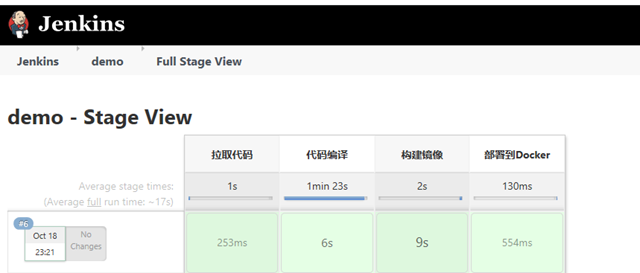

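CI workflow

CI flow:

1. Pull the code

2. Compile the code (Java project) and produce a WAR package

3. Build the project image and push it to the image registry

4. Deploy the image for testing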
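The four steps above can be sketched as a shell script. It is a dry run by default and executes only with DRY_RUN=0. The repository URL is the one used in these notes; the image name, the container name, port 8080, and the assumption that the repository has a Dockerfile and pom.xml at its root are illustrative.

```shell
#!/bin/sh
# Print each command; execute it only when the caller sets DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

repo="http://10.0.0.70:9999/root/java-demo.git"
image="10.0.0.70/library/java-demo:v1"   # hypothetical image name

ci_pipeline() {
    run git clone "$repo" java-demo                      # 1. pull the code
    run mvn -f java-demo/pom.xml clean package           # 2. compile and package
    run docker build -t "$image" java-demo               # 3. build the image ...
    run docker push "$image"                             #    ... and push it
    run docker run -d --name java-demo-test -p 8080:8080 "$image"  # 4. deploy for testing
}

steps=$(ci_pipeline)
echo "$steps"
```

In Jenkins, the same commands would typically sit in the stages of a pipeline job rather than in one script.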

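Deploy GitLab

    docker run -d \
      --name gitlab \
      -p 8443:443 \
      -p 9999:80 \
      -p 9998:22 \
      -v $PWD/config:/etc/gitlab \
      -v $PWD/logs:/var/log/gitlab \
      -v $PWD/data:/var/opt/gitlab \
      -v /etc/localtime:/etc/localtime \
      gitlab/gitlab-ce:latest

Pass exactly one image to docker run: gitlab/gitlab-ce:latest as shown, or lizhenliang/gitlab-ce-zh:latest (judging by its name, a Chinese-localized build) in its place.

Access URL: http://IP:9999

On first access you set the administrator password and then log in. The default administrator user name is root, and the password is the one you just set.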

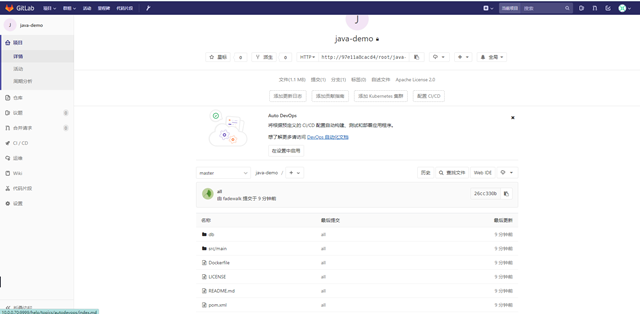

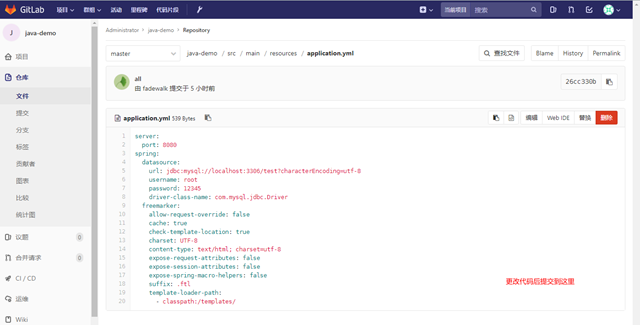

Create a project and push test code

Once logged in, create a project and push code to it for the later tests.

git remote add pb http://10.0.0.70:9999/root/java-demo.git

[root@mysql tomcat-java-demo-master]# cat .git/config
[core]
	repositoryformatversion = 0
	filemode = true
	bare = false
	logallrefupdates = true
[remote "pb"]
	url = http://10.0.0.70:9999/root/java-demo.git
	fetch = +refs/heads/*:refs/remotes/pb/*

git config --global user.email "fadewalk@163.com"

git config --global user.name "fadewalk"

[root@mysql tomcat-java-demo-master]# git push pb master
Username for 'http://10.0.0.70:9999': root
Password for 'http://root@10.0.0.70:9999':
Counting objects: 179, done.
Delta compression using up to 4 threads.
Compressing objects: 100% (166/166), done.
Writing objects: 100% (179/179), 1.12 MiB | 0 bytes/s, done.
Total 179 (delta 4), reused 0 (delta 0)
remote: Resolving deltas: 100% (4/4), done.
To http://10.0.0.70:9999/root/java-demo.git
 * [new branch]      master -> master

Prepare the JDK and Maven environment

Unpack the packages into directories on the host:

tar zxvf jdk-8u45-linux-x64.tar.gz
mv jdk1.8.0_45 /usr/local/jdk
tar zxf apache-maven-3.5.0-bin.tar.gz
mv apache-maven-3.5.0 /usr/local/maven

Start the Jenkins container

docker run -d \
  --name jenkins \
  -p 8099:8080 \
  -p 50000:50000 \
  -u root \
  -v /opt/jenkins_home:/var/jenkins_home \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v /usr/bin/docker:/usr/bin/docker \
  -v /usr/local/maven:/usr/local/maven \
  -v /usr/local/jdk:/usr/local/jdk \
  -v /etc/localtime:/etc/localtime \
  jenkins/jenkins:lts

The host's tool directories are mounted directly into the Jenkins container, so builds inside the container reuse the host's JDK, Maven, and Docker.

Read the initial administrator password:

[root@mysql tools]# docker exec -it jenkins bash
root@d413a8199d28:/# cat /var/jenkins_home/secrets/initialAdminPassword
813c4d8fc29f45c7b898e73a79f03283

Accessing GitLab with the private key in /root/.ssh is more convenient and more secure. Configure that private key in Jenkins as the credential for the GitLab code repository.

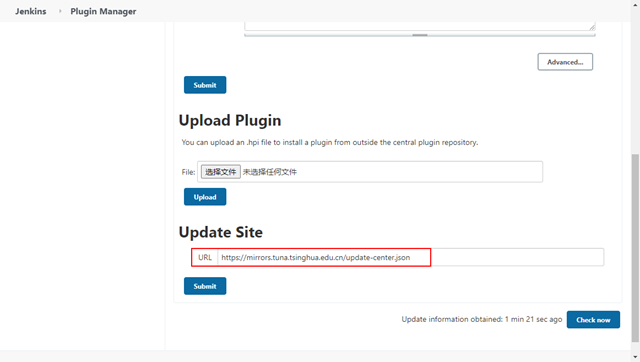

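Install plugins

Manage Jenkins --> Manage Plugins --> Installed

Search for git/pipeline and click install. If online installation fails, install the plugins offline, or install them all on the Jenkins initialization page, which only takes a few more minutes.

Changing the update site on the plugin page kept producing errors and did not help.

Switch to a mirror in China instead. The URLs contain "/", so the sed expressions use "#" as the delimiter:

cd /opt/jenkins_home/updates
sed -i 's#http://updates.jenkins-ci.org/download#https://mirrors.tuna.tsinghua.edu.cn/jenkins#g' default.json
sed -i 's#http://www.google.com#https://www.baidu.com#g' default.json

Then restart the Jenkins container for the change to take effect.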
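Because both the search and the replacement strings are URLs full of `/`, a sed expression that also uses `/` as its delimiter fails to parse; any other delimiter such as `#` avoids this. A minimal sketch for trying the substitution on a throwaway file first: `switch_update_mirror` and the sample JSON line are hypothetical and only stand in for the real default.json.

```shell
# Rewrite the update-center URLs in the given file.
# '#' is the sed delimiter because the URLs themselves contain '/'.
switch_update_mirror() {
  file="$1"
  tmp="${file}.tmp"
  sed -e 's#http://updates.jenkins-ci.org/download#https://mirrors.tuna.tsinghua.edu.cn/jenkins#g' \
      -e 's#http://www.google.com#https://www.baidu.com#g' \
      "$file" > "$tmp" && mv "$tmp" "$file"
}

# Made-up sample content, only to exercise the two substitutions.
sample="$(mktemp)"
printf '%s\n' '{"url":"http://updates.jenkins-ci.org/download/plugins/git/latest/git.hpi","connectionCheckUrl":"http://www.google.com/"}' > "$sample"

switch_update_mirror "$sample"
cat "$sample"
```

Once the output looks right, the same function can be pointed at /opt/jenkins_home/updates/default.json.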

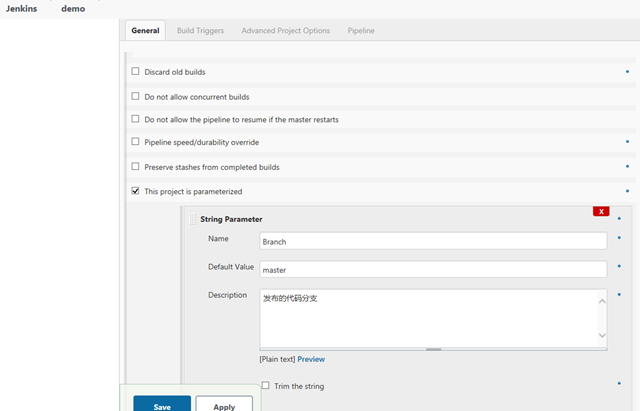

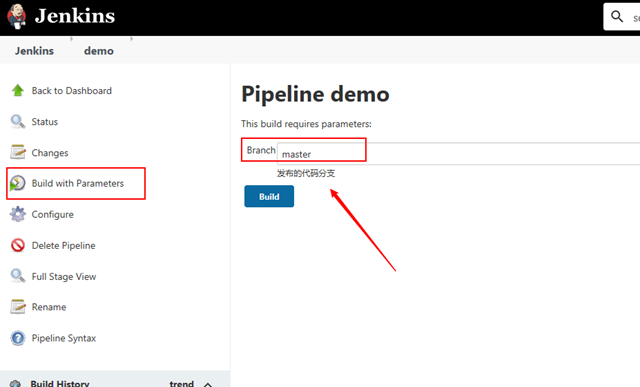

Add a parameterized build

This project is parameterized -> String Parameter

Name: Branch # variable name, referenced in the pipeline script

Default Value: master # default branch

Description: code branch to release # description

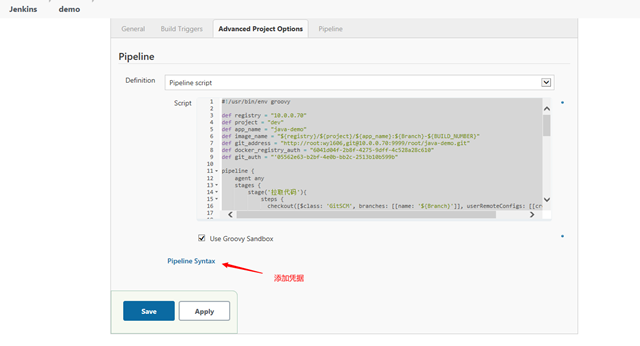

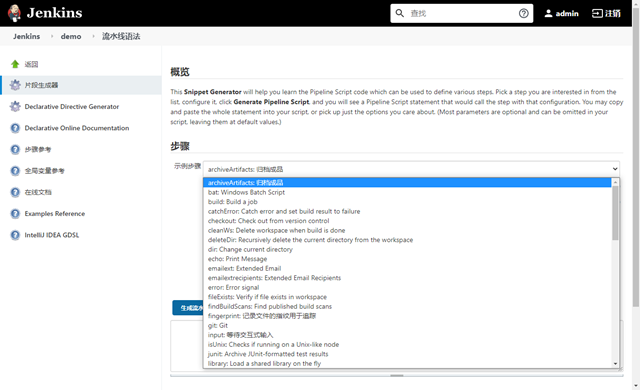

Pipeline script

#!/usr/bin/env groovy

def registry = "10.0.0.70"
def project = "dev"
def app_name = "java-demo"
def image_name = "${registry}/${project}/${app_name}:${Branch}-${BUILD_NUMBER}"
def git_address = "http://root:wyl606,git@10.0.0.70:9999/root/java-demo.git"
def docker_registry_auth = "6041d04f-2b8f-4275-9dff-4c528a28c610"
def git_auth = "05562e63-b2bf-4e0b-bb2c-2513b10b599b"

pipeline {
    agent any
    stages {
        stage('Checkout code') {
            steps {
                checkout([$class: 'GitSCM', branches: [[name: '${Branch}']], userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_address}"]]])
            }
        }
        stage('Compile') {
            steps {
                // \$ leaves the variable to the shell instead of Groovy interpolation
                sh """
                export JAVA_HOME=/usr/local/jdk
                export PATH=\$JAVA_HOME/bin:/usr/local/maven/bin:\$PATH
                mvn clean package -Dmaven.test.skip=true
                """
            }
        }
        stage('Build image') {
            steps {
                withCredentials([usernamePassword(credentialsId: "${docker_registry_auth}", passwordVariable: 'password', usernameVariable: 'username')]) {
                    // The credentials are read by the shell from the environment, not interpolated by Groovy
                    sh """
echo '
FROM ${registry}/library/tomcat:v1
LABEL maintainer=lizhenliang
RUN rm -rf /usr/local/tomcat/webapps/*
ADD target/*.war /usr/local/tomcat/webapps/ROOT.war
' > Dockerfile
                    docker build -t ${image_name} .
                    echo "\$password" | docker login -u "\$username" --password-stdin ${registry}
                    docker push ${image_name}
                    """
                }
            }
        }
        stage('Deploy to Docker') {
            steps {
                sh """
                REPOSITORY=${image_name}
                docker rm -f tomcat-java-demo || true
                docker container run -d --name tomcat-java-demo -p 88:8080 ${image_name}
                """
            }
        }
    }
}
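The pipeline builds the image tag as `${Branch}-${BUILD_NUMBER}`. That works for `master`, but a branch name such as `feature/login` contains a `/`, which is not a valid character in a Docker tag. One possible way to handle this, sketched under the assumption that replacing invalid characters with `-` is acceptable: `image_ref` is a hypothetical helper, and it does not handle the remaining tag rules (a tag must not start with `.` or `-` and is limited to 128 characters).

```shell
# Usage: image_ref REGISTRY PROJECT APP BRANCH BUILD_NUMBER
# Prints REGISTRY/PROJECT/APP:TAG, where TAG is BRANCH-BUILD_NUMBER with every
# character other than letters, digits, '_', '.' and '-' replaced by '-'.
image_ref() {
  tag="$(printf '%s' "$4-$5" | tr -c 'A-Za-z0-9_.-' '-')"
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$tag"
}

image_ref 10.0.0.70 dev java-demo master 12
image_ref 10.0.0.70 dev java-demo feature/login 13
```

Inside the pipeline, the same substitution would have to be applied before `image_name` is used in `docker build` and `docker push`.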

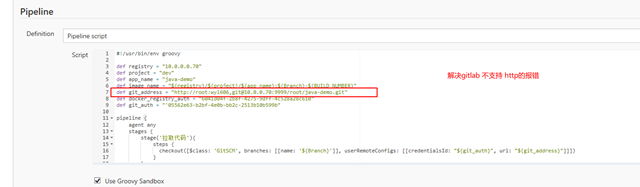

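Add credentials

In this setup, the credential ID that Jenkins generated from the GitLab username and password could not connect over HTTP, so as a workaround the username and password were written directly into the repository URL.

1. Add a credential for pulling the Git code, and put its ID into the git_auth variable above.

2. Add a credential for pulling images from Harbor, and put its ID into the docker_registry_auth variable above.

The credential IDs must match the variables in the script.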

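Click to start the build

For the build, a Maven mirror in China is recommended because it is faster. Add the mirror to the Maven configuration file (settings.xml):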

[root@k8s-m1 conf]# grep ali settings.xml

<name>aliyun maven</name>

<url>https://maven.aliyun.com/repository/public</url>
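The grep output shows only the `<name>` and `<url>` lines of the mirror entry. A complete entry might look like the one printed by the sketch below. The `<id>` value `aliyun` and the `<mirrorOf>` value `central` are assumptions, since neither appears in the output above, and `print_aliyun_mirror` is a hypothetical helper.

```shell
# Print a <mirror> entry for Maven's settings.xml.
# name and url are taken from the grep output; id and mirrorOf are assumed.
print_aliyun_mirror() {
  cat <<'EOF'
<mirror>
  <id>aliyun</id>
  <mirrorOf>central</mirrorOf>
  <name>aliyun maven</name>
  <url>https://maven.aliyun.com/repository/public</url>
</mirror>
EOF
}

print_aliyun_mirror
```

The printed block belongs inside the `<mirrors>` element of conf/settings.xml under the Maven installation directory.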

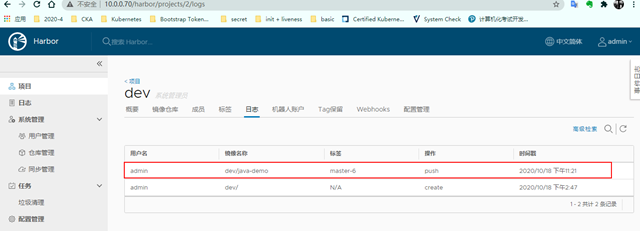

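After the pipeline finishes, check Harbor, the Docker image registry, for the pushed image.

Once the deployment succeeds, verify it on the host where the application is deployed.

When the code is changed or a new branch is pushed, master in the GitLab repository is updated, and a new build is triggered afterwards.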

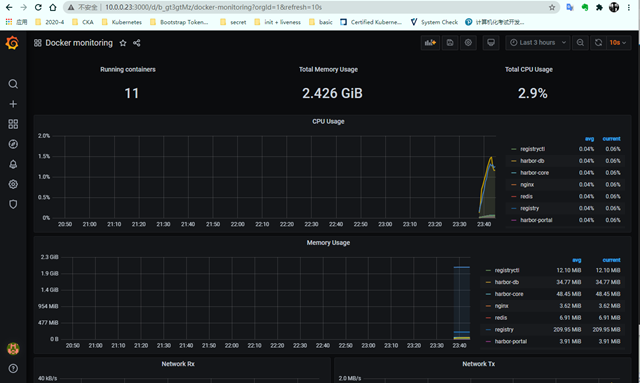

Monitoring Docker with Prometheus + Grafana

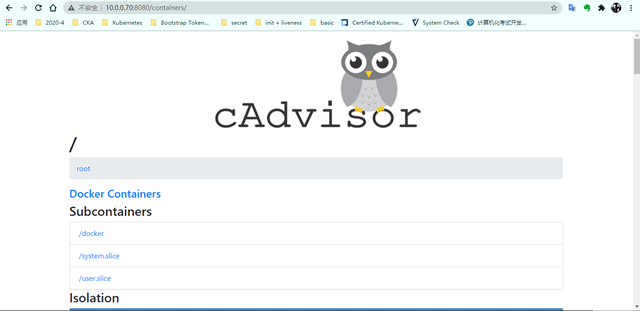

- cAdvisor (Container Advisor): collects resource usage and performance information from running containers. https://github.com/google/cadvisor

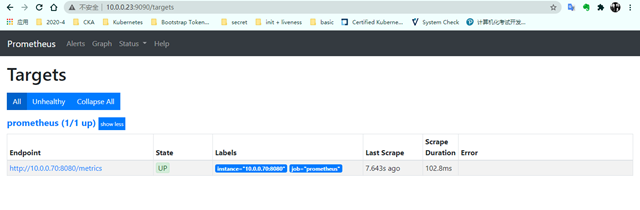

- Prometheus: an open-source monitoring system, used here to monitor containers. https://prometheus.io https://github.com/prometheus

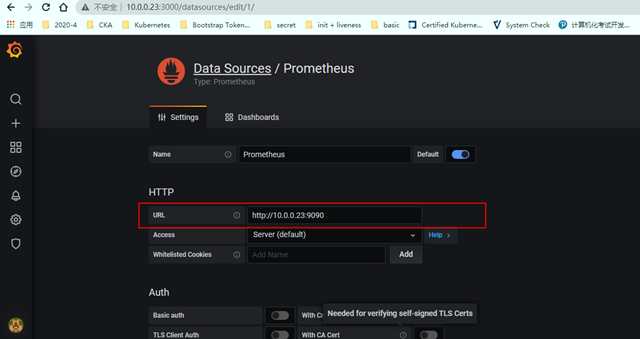

- Grafana: an open-source metrics analytics and visualization system. https://grafana.com/grafana/download https://grafana.com/dashboards/193 (dashboard template for monitoring Docker hosts)

Run on the monitored host (10.0.0.70)

Deploy cAdvisor with Docker:

docker run -d \
  --volume=/:/rootfs:ro \
  --volume=/var/run:/var/run:ro \
  --volume=/sys:/sys:ro \
  --volume=/var/lib/docker/:/var/lib/docker:ro \
  --volume=/dev/disk/:/dev/disk:ro \
  --publish=8080:8080 \
  --name=cadvisor \
  google/cadvisor:latest
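Before pointing Prometheus at cAdvisor, it can help to confirm that the `/metrics` endpoint on port 8080 actually returns container metrics. A minimal sketch: `count_container_metrics` is a hypothetical helper that counts the lines starting with `container_` in Prometheus text-format output read from stdin.

```shell
# Count metric samples whose name starts with "container_".
# grep -c exits non-zero when the count is 0, so "|| true" keeps the
# function's exit status at 0 while the count is still printed.
count_container_metrics() {
  grep -c '^container_' || true
}

# Against the running cAdvisor from the step above:
#   curl -s http://10.0.0.70:8080/metrics | count_container_metrics
```

A count of 0 suggests that cAdvisor is not reachable or has not collected any container data yet.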

Run on the monitoring host (10.0.0.23)

Deploy Grafana with Docker:

docker run -d --name=grafana -p 3000:3000 grafana/grafana

Deploy Prometheus with Docker:

docker run -d --name=prometheus -p 9090:9090 -v /tmp/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus

[root@k8s-m1 tmp]# cat prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's the cAdvisor endpoint on the monitored host.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ['10.0.0.70:8080']
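The configuration scrapes a single cAdvisor endpoint. To monitor more Docker hosts, each additional cAdvisor address goes into the same `targets` list. A small sketch that prints that part of the scrape job: `render_targets` is a hypothetical helper, and 10.0.0.71 is a made-up second host used only for illustration.

```shell
# Usage: render_targets HOST:PORT...
# Prints a static_configs block with one quoted target per argument,
# indented to sit under a "- job_name:" entry in scrape_configs.
render_targets() {
  list=""
  for t in "$@"; do
    list="${list:+${list}, }'${t}'"
  done
  printf '    static_configs:\n'
  printf "      - targets: [%s]\n" "$list"
}

render_targets 10.0.0.70:8080 10.0.0.71:8080
```

After editing /tmp/prometheus.yml, the Prometheus container has to be restarted to load the new target list.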

配置数据源

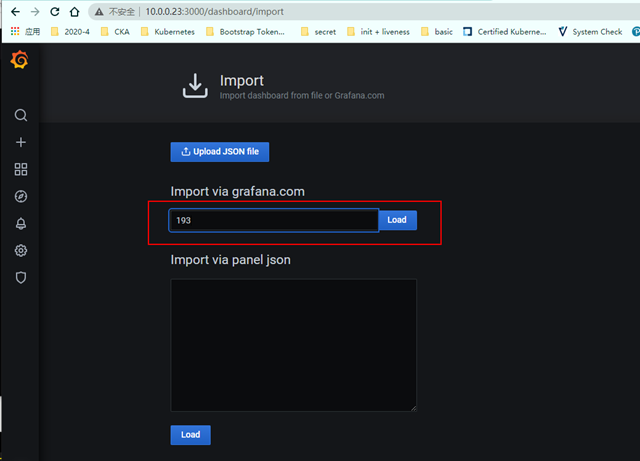

导入 官方的模板id

查看被监控的docker主机资源情况