Deploying a Complex Multi-Container Application

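Start from a Flask container and link it to a separate, external Redis container.

    docker pull redis
    sudo docker run -d --name redis redis   # no need to publish a port for redis

Build the Flask image. The `-t` value is the image name, and the final argument is the path of the directory that holds the Dockerfile (`.` is the current directory).

    docker build -t kvin/flask-redis .

Run the container and connect it to the database.

    docker run -d --name flask-redis --link redis -e REDIS_HOST=redis kvin/flask-redis
    docker exec -it flask-redis /bin/bash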

    FROM python:2.7
    LABEL maintainer="Peng Xiao xiaoquwl@gmail.com"
    COPY . /app
    WORKDIR /app
    RUN pip install flask redis
    EXPOSE 5000
    CMD [ "python", "app.py" ]

app.py

    from flask import Flask
    from redis import Redis
    import os
    import socket

    app = Flask(__name__)
    redis = Redis(host=os.environ.get('REDIS_HOST', '127.0.0.1'), port=6379)

    @app.route('/')
    def hello():
        redis.incr('hits')
        return 'Hello Container World! I have been seen %s times and my hostname is %s. ' % (redis.get('hits'),socket.gethostname())

    if __name__ == "__main__":
        app.run(host="0.0.0.0", port=5000, debug=True)
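
The counter logic in app.py can be tried without a running Redis server. The sketch below is only an illustration: `FakeRedis` is a hypothetical in-memory stand-in that mimics just `incr` and `get` (it is not part of the redis package), and `redis_host` mirrors the `os.environ.get('REDIS_HOST', '127.0.0.1')` lookup used above.

```python
import socket


class FakeRedis:
    """Hypothetical in-memory stand-in for redis.Redis (incr/get only)."""

    def __init__(self):
        self._data = {}

    def incr(self, key):
        # Like Redis INCR: a missing key counts as 0 before incrementing.
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]

    def get(self, key):
        return self._data.get(key)


def redis_host(environ):
    # Same lookup as app.py: fall back to localhost when REDIS_HOST is unset.
    return environ.get('REDIS_HOST', '127.0.0.1')


def hello(store):
    # Same body as the Flask view, without the routing decorator.
    store.incr('hits')
    return 'Hello Container World! I have been seen %s times and my hostname is %s. ' % (
        store.get('hits'), socket.gethostname())


if __name__ == "__main__":
    store = FakeRedis()
    print(redis_host({'REDIS_HOST': 'redis'}))  # value supplied with -e REDIS_HOST=redis
    print(redis_host({}))                       # default when the variable is missing
    hello(store)
    print(hello(store))
```

With `-e REDIS_HOST=redis`, the application connects to the host name `redis`, which `--link redis` makes resolvable inside the Flask container.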

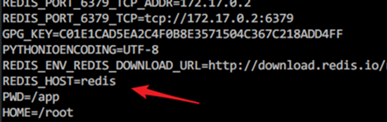

Environment variables inside the container

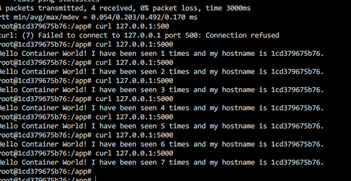

    root@1cd379675b76:/app# ping redis
    PING redis (172.17.0.2) 56(84) bytes of data.
    64 bytes from redis (172.17.0.2): icmp_seq=1 ttl=64 time=0.492 ms
    64 bytes from redis (172.17.0.2): icmp_seq=2 ttl=64 time=0.134 ms
    64 bytes from redis (172.17.0.2): icmp_seq=3 ttl=64 time=0.054 ms
    64 bytes from redis (172.17.0.2): icmp_seq=4 ttl=64 time=0.134 ms
    ^C
    --- redis ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 3000ms
    rtt min/avg/max/mdev = 0.054/0.203/0.492/0.170 ms

Without a port mapping, the service cannot be reached from the host

    [root@docker-node1 flask-redis]# curl 127.0.0.1:5000
    curl: (7) Failed connect to 127.0.0.1:5000; Connection refused

Start the container again with `-p 5000:5000`. Container names are unique, so the earlier `flask-redis` container has to be removed first.

    [root@docker-node1 flask-redis]# docker run -d -p 5000:5000 --name flask-redis --link redis -e REDIS_HOST=redis kvin/flask-redis
    28ea7f498f5b9084935aa0a4fa0332aa56701a4357346b215cae689842f41fb1
    [root@docker-node1 flask-redis]# curl 127.0.0.1:5000
    Hello Container World! I have been seen 8 times and my hostname is 28ea7f498f5b.
    [root@docker-node1 flask-redis]# curl 127.0.0.1:5000
    Hello Container World! I have been seen 9 times and my hostname is 28ea7f498f5b.
    [root@docker-node1 flask-redis]# curl 127.0.0.1:5000
    Hello Container World! I have been seen 10 times and my hostname is 28ea7f498f5b.
    [root@docker-node1 flask-redis]# curl 127.0.0.1:5000
    Hello Container World! I have been seen 11 times and my hostname is 28ea7f498f5b.
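
The same reachability check can be scripted. This is a minimal sketch using only the standard library; `port_open` is a hypothetical helper name, and port 5000 is the one published with `-p 5000:5000` above.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused" (what curl reported) as well as timeouts.
        return False


if __name__ == "__main__":
    # Expected to be False without a port mapping and True once -p 5000:5000 is used.
    print(port_open('127.0.0.1', 5000))
```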

    sudo docker run -d --name test4 -e DK_NAME=lewen busybox /bin/sh -c "while true;do sleep 3600;done"
    [root@docker-node1 flask-redis]# docker exec -it test4 /bin/sh
    / # env
    HOSTNAME=e72d1e6b396a
    SHLVL=1
    HOME=/root
    DK_NAME=lewen
    TERM=xterm
    PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
    PWD=/
    / #
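
A variable passed with `-e` appears in the container's environment like any other. As a small sketch, assuming the `env` output has been captured as text, it can be checked programmatically; `parse_env` is a hypothetical helper name.

```python
def parse_env(output):
    """Turn the lines printed by `env` into a dict."""
    variables = {}
    for line in output.splitlines():
        # Split on the first '=' only, because values may contain '=' too.
        name, sep, value = line.partition('=')
        if sep:
            variables[name] = value
    return variables


# Output copied from the test4 container above.
ENV_OUTPUT = """HOSTNAME=e72d1e6b396a
SHLVL=1
HOME=/root
DK_NAME=lewen
TERM=xterm
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
PWD=/"""

if __name__ == "__main__":
    env = parse_env(ENV_OUTPUT)
    print(env['DK_NAME'])
```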

Multi-host communication

overlay

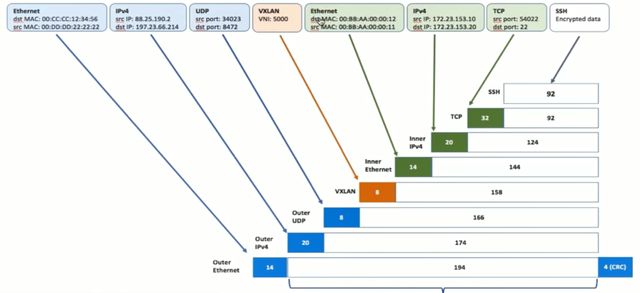

What is VXLAN and how it works?

https://www.evoila.de/2015/11/06/what-is-vxlan-and-how-it-works/

https://coreos.com/etcd/

Installing etcd

    vagrant@docker-node1:~$ wget https://github.com/coreos/etcd/releases/download/v3.0.12/etcd-v3.0.12-linux-amd64.tar.gz
    vagrant@docker-node1:~$ tar zxvf etcd-v3.0.12-linux-amd64.tar.gz
    vagrant@docker-node1:~$ cd etcd-v3.0.12-linux-amd64
    vagrant@docker-node1:~$ nohup ./etcd --name docker-node1 \
        --initial-advertise-peer-urls http://192.168.205.10:2380 \
        --listen-peer-urls http://192.168.205.10:2380 \
        --listen-client-urls http://192.168.205.10:2379,http://127.0.0.1:2379 \
        --advertise-client-urls http://192.168.205.10:2379 \
        --initial-cluster-token etcd-cluster \
        --initial-cluster docker-node1=http://192.168.205.10:2380,docker-node2=http://192.168.205.11:2380 \
        --initial-cluster-state new&

    vagrant@docker-node2:~$ wget https://github.com/coreos/etcd/releases/download/v3.0.12/etcd-v3.0.12-linux-amd64.tar.gz
    vagrant@docker-node2:~$ tar zxvf etcd-v3.0.12-linux-amd64.tar.gz
    vagrant@docker-node2:~$ cd etcd-v3.0.12-linux-amd64/
    vagrant@docker-node2:~$ nohup ./etcd --name docker-node2 \
        --initial-advertise-peer-urls http://192.168.205.11:2380 \
        --listen-peer-urls http://192.168.205.11:2380 \
        --listen-client-urls http://192.168.205.11:2379,http://127.0.0.1:2379 \
        --advertise-client-urls http://192.168.205.11:2379 \
        --initial-cluster-token etcd-cluster \
        --initial-cluster docker-node1=http://192.168.205.10:2380,docker-node2=http://192.168.205.11:2380 \
        --initial-cluster-state new&

    vagrant@docker-node2:~/etcd-v3.0.12-linux-amd64$ ./etcdctl cluster-health
    member 21eca106efe4caee is healthy: got healthy result from http://192.168.205.10:2379
    member 8614974c83d1cc6d is healthy: got healthy result from http://192.168.205.11:2379
    cluster is healthy
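
The two etcd invocations differ only in the member name and its own IP address; the `--initial-cluster` value is identical on both nodes. The sketch below makes that pattern explicit. `etcd_args` is a hypothetical helper, and the ports (2380 for peers, 2379 for clients) and the token are simply the ones used in the commands above.

```python
def etcd_args(name, nodes):
    """Build the etcd argument list for one member of a static cluster.

    nodes maps member name -> IP address.
    """
    ip = nodes[name]
    # Every member is started with the same, complete member list.
    cluster = ','.join(
        '%s=http://%s:2380' % (member, addr) for member, addr in sorted(nodes.items()))
    return [
        '--name', name,
        '--initial-advertise-peer-urls', 'http://%s:2380' % ip,
        '--listen-peer-urls', 'http://%s:2380' % ip,
        '--listen-client-urls', 'http://%s:2379,http://127.0.0.1:2379' % ip,
        '--advertise-client-urls', 'http://%s:2379' % ip,
        '--initial-cluster-token', 'etcd-cluster',
        '--initial-cluster', cluster,
        '--initial-cluster-state', 'new',
    ]


if __name__ == "__main__":
    nodes = {'docker-node1': '192.168.205.10', 'docker-node2': '192.168.205.11'}
    for member in sorted(nodes):
        print('./etcd ' + ' '.join(etcd_args(member, nodes)))
```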

On docker-node1:

    $ sudo service docker stop
    $ sudo /usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --cluster-store=etcd://192.168.205.10:2379 --cluster-advertise=192.168.205.10:2375&

On docker-node2:

    $ sudo service docker stop
    $ sudo /usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --cluster-store=etcd://192.168.205.11:2379 --cluster-advertise=192.168.205.11:2375&

On docker-node1, create an overlay network named demo:

    vagrant@docker-node1:~$ sudo docker network ls
    NETWORK ID          NAME                DRIVER              SCOPE
    0e7bef3f143a        bridge              bridge              local
    a5c7daf62325        host                host                local
    3198cae88ab4        none                null                local
    vagrant@docker-node1:~$ sudo docker network create -d overlay demo
    3d430f3338a2c3496e9edeccc880f0a7affa06522b4249497ef6c4cd6571eaa9
    vagrant@docker-node1:~$ sudo docker network ls
    NETWORK ID          NAME                DRIVER              SCOPE
    0e7bef3f143a        bridge              bridge              local
    3d430f3338a2        demo                overlay             global
    a5c7daf62325        host                host                local
    3198cae88ab4        none                null                local
    vagrant@docker-node1:~$ sudo docker network inspect demo
    [
        {
            "Name": "demo",
            "Id": "3d430f3338a2c3496e9edeccc880f0a7affa06522b4249497ef6c4cd6571eaa9",
            "Scope": "global",
            "Driver": "overlay",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "10.0.0.0/24",
                        "Gateway": "10.0.0.1/24"
                    }
                ]
            },
            "Internal": false,
            "Containers": {},
            "Options": {},
            "Labels": {}
        }
    ]

On node2, the demo overlay network shows up as well; it has been created there automatically.

    vagrant@docker-node2:~$ sudo docker network ls
    NETWORK ID          NAME                DRIVER              SCOPE
    c9947d4c3669        bridge              bridge              local
    3d430f3338a2        demo                overlay             global
    fa5168034de1        host                host                local
    c2ca34abec2a        none                null                local

Looking at the key-value pairs stored in etcd confirms that the demo network was synchronized from node1 to node2 through etcd.

    vagrant@docker-node2:~/etcd-v3.0.12-linux-amd64$ ./etcdctl ls /docker
    /docker/network
    /docker/nodes
    vagrant@docker-node2:~/etcd-v3.0.12-linux-amd64$ ./etcdctl ls /docker/nodes
    /docker/nodes/192.168.205.11:2375
    /docker/nodes/192.168.205.10:2375
    vagrant@docker-node2:~/etcd-v3.0.12-linux-amd64$ ./etcdctl ls /docker/network/v1.0/network
    /docker/network/v1.0/network/3d430f3338a2c3496e9edeccc880f0a7affa06522b4249497ef6c4cd6571eaa9
    vagrant@docker-node2:~/etcd-v3.0.12-linux-amd64$ ./etcdctl get /docker/network/v1.0/network/3d430f3338a2c3496e9edeccc880f0a7affa06522b4249497ef6c4cd6571eaa9 | jq .
    {
      "addrSpace": "GlobalDefault",
      "enableIPv6": false,
      "generic": {
        "com.docker.network.enable_ipv6": false,
        "com.docker.network.generic": {}
      },
      "id": "3d430f3338a2c3496e9edeccc880f0a7affa06522b4249497ef6c4cd6571eaa9",
      "inDelete": false,
      "ingress": false,
      "internal": false,
      "ipamOptions": {},
      "ipamType": "default",
      "ipamV4Config": "[{\"PreferredPool\":\"\",\"SubPool\":\"\",\"Gateway\":\"\",\"AuxAddresses\":null}]",
      "ipamV4Info": "[{\"IPAMData\":\"{\\\"AddressSpace\\\":\\\"GlobalDefault\\\",\\\"Gateway\\\":\\\"10.0.0.1/24\\\",\\\"Pool\\\":\\\"10.0.0.0/24\\\"}\",\"PoolID\":\"GlobalDefault/10.0.0.0/24\"}]",
      "labels": {},
      "name": "demo",
      "networkType": "overlay",
      "persist": true,
      "postIPv6": false,
      "scope": "global"
    }
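
In the record above, the `ipamV4Info` value is a JSON document stored as a string, and its `IPAMData` field is JSON encoded as a string once more. The sketch below shows one way to unwrap both levels. It assumes the record has the shape shown above; `ipam_pools` is a hypothetical helper, and the sample `record` keeps only the fields needed here.

```python
import json

# Trimmed copy of the record above, rebuilt with json.dumps so the nested
# string encoding is reproduced exactly.
record = {
    "name": "demo",
    "ipamV4Info": json.dumps([{
        "IPAMData": json.dumps({
            "AddressSpace": "GlobalDefault",
            "Gateway": "10.0.0.1/24",
            "Pool": "10.0.0.0/24",
        }),
        "PoolID": "GlobalDefault/10.0.0.0/24",
    }]),
}


def ipam_pools(network_record):
    """Return (pool, gateway) pairs from a network record."""
    pools = []
    for entry in json.loads(network_record["ipamV4Info"]):  # first level of encoding
        data = json.loads(entry["IPAMData"])                 # second level of encoding
        pools.append((data["Pool"], data["Gateway"]))
    return pools


if __name__ == "__main__":
    print(ipam_pools(record))
```

The pool and gateway recovered this way match the `Subnet` and `Gateway` reported by `docker network inspect demo`.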

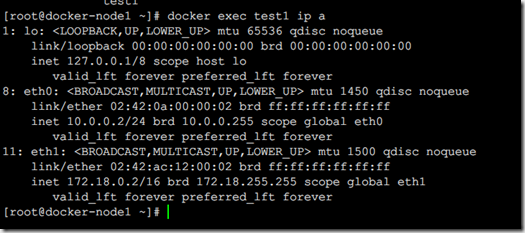

Creating containers attached to the demo network

    vagrant@docker-node1:~$ sudo docker run -d --name test1 --net demo busybox sh -c "while true; do sleep 3600; done"
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    56bec22e3559: Pull complete
    Digest: sha256:29f5d56d12684887bdfa50dcd29fc31eea4aaf4ad3bec43daf19026a7ce69912
    Status: Downloaded newer image for busybox:latest
    a95a9466331dd9305f9f3c30e7330b5a41aae64afda78f038fc9e04900fcac54
    vagrant@docker-node1:~$ sudo docker ps
    CONTAINER ID        IMAGE               COMMAND                  CREATED             STATUS              PORTS               NAMES
    a95a9466331d        busybox             "sh -c 'while true; d"   4 seconds ago       Up 3 seconds                            test1
    vagrant@docker-node1:~$ sudo docker exec test1 ifconfig
    eth0      Link encap:Ethernet  HWaddr 02:42:0A:00:00:02
              inet addr:10.0.0.2  Bcast:0.0.0.0  Mask:255.255.255.0
              inet6 addr: fe80::42:aff:fe00:2/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1450  Metric:1
              RX packets:15 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:1206 (1.1 KiB)  TX bytes:648 (648.0 B)

    eth1      Link encap:Ethernet  HWaddr 02:42:AC:12:00:02
              inet addr:172.18.0.2  Bcast:0.0.0.0  Mask:255.255.0.0
              inet6 addr: fe80::42:acff:fe12:2/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:8 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:648 (648.0 B)  TX bytes:648 (648.0 B)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

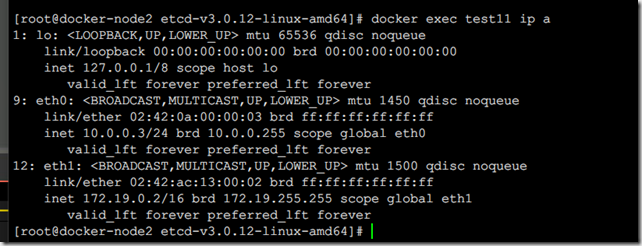

On docker-node2, the name test1 is already taken on the demo network, so the first attempt fails and the container is created as test2 instead:

    vagrant@docker-node2:~$ sudo docker run -d --name test1 --net demo busybox sh -c "while true; do sleep 3600; done"
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    56bec22e3559: Pull complete
    Digest: sha256:29f5d56d12684887bdfa50dcd29fc31eea4aaf4ad3bec43daf19026a7ce69912
    Status: Downloaded newer image for busybox:latest
    fad6dc6538a85d3dcc958e8ed7b1ec3810feee3e454c1d3f4e53ba25429b290b
    docker: Error response from daemon: service endpoint with name test1 already exists.
    vagrant@docker-node2:~$ sudo docker run -d --name test2 --net demo busybox sh -c "while true; do sleep 3600; done"
    9d494a2f66a69e6b861961d0c6af2446265bec9b1d273d7e70d0e46eb2e98d20

    vagrant@docker-node2:~$ sudo docker exec -it test2 ifconfig
    eth0      Link encap:Ethernet  HWaddr 02:42:0A:00:00:03
              inet addr:10.0.0.3  Bcast:0.0.0.0  Mask:255.255.255.0
              inet6 addr: fe80::42:aff:fe00:3/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1450  Metric:1
              RX packets:208 errors:0 dropped:0 overruns:0 frame:0
              TX packets:201 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:20008 (19.5 KiB)  TX bytes:19450 (18.9 KiB)

    eth1      Link encap:Ethernet  HWaddr 02:42:AC:12:00:02
              inet addr:172.18.0.2  Bcast:0.0.0.0  Mask:255.255.0.0
              inet6 addr: fe80::42:acff:fe12:2/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:8 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:648 (648.0 B)  TX bytes:648 (648.0 B)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
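
In the `ifconfig` output, eth0 on the overlay network reports MTU 1450 while eth1 reports 1500. One likely explanation, assuming the overlay driver encapsulates traffic with VXLAN over IPv4 as described in the article linked earlier, is the 50 bytes of encapsulation overhead. The sketch below only works through that arithmetic; `overlay_mtu` is a hypothetical helper name.

```python
# Header sizes in bytes for VXLAN over IPv4 (no VLAN tag, no IP options).
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14  # the whole inner Ethernet frame travels inside the tunnel


def overlay_mtu(underlay_mtu=1500):
    """Largest inner IP packet that fits once the frame is encapsulated."""
    overhead = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    return underlay_mtu - overhead


if __name__ == "__main__":
    print(overlay_mtu(1500))
```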

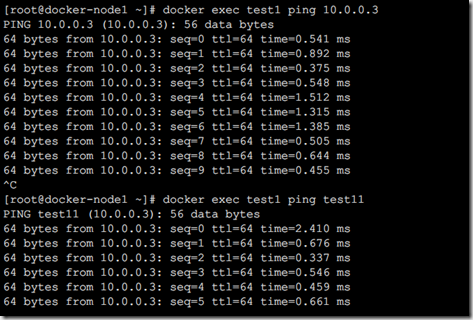

    vagrant@docker-node1:~$ sudo docker exec test1 sh -c "ping 10.0.0.3"
    PING 10.0.0.3 (10.0.0.3): 56 data bytes
    64 bytes from 10.0.0.3: seq=0 ttl=64 time=0.579 ms
    64 bytes from 10.0.0.3: seq=1 ttl=64 time=0.411 ms
    64 bytes from 10.0.0.3: seq=2 ttl=64 time=0.483 ms
    ^C
    vagrant@docker-node1:~$

[root@docker-node2 etcd-v3.0.12-linux-amd64]# docker run -d --name test1 --net demo busybox /bin/sh -c "while true;do sleep 3600;done"

At this point, Docker containers running on different machines can communicate with each other.

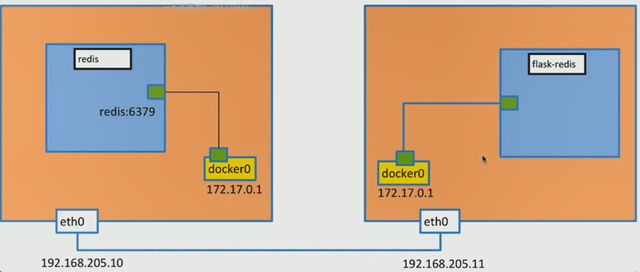

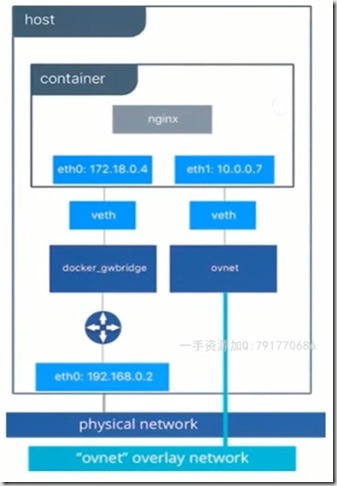

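Each container has two network interfaces. The address in 10.0.0.0/24 is its virtual IP on the demo network.

The 172.xx.xx.xx address is its IP on the Docker bridge of the host it runs on.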
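
The split between the two interfaces can be checked with Python's `ipaddress` module. In this sketch, 10.0.0.0/24 is the subnet reported for the demo network, and 172.18.0.0/16 is inferred from the eth1 address 172.18.0.2 with mask 255.255.0.0; `classify` is a hypothetical helper name.

```python
import ipaddress

OVERLAY = ipaddress.ip_network('10.0.0.0/24')   # subnet of the demo network
BRIDGE = ipaddress.ip_network('172.18.0.0/16')  # inferred from eth1's address and mask


def classify(addr):
    """Say which of the two networks an address belongs to."""
    ip = ipaddress.ip_address(addr)
    if ip in OVERLAY:
        return 'overlay'
    if ip in BRIDGE:
        return 'bridge'
    return 'other'


if __name__ == "__main__":
    for addr in ('10.0.0.2', '10.0.0.3', '172.18.0.2'):
        print(addr, classify(addr))
```

Both containers report 172.18.0.2 on eth1, which fits a bridge address that is local to each host, while the overlay addresses 10.0.0.2 and 10.0.0.3 are distinct across hosts.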

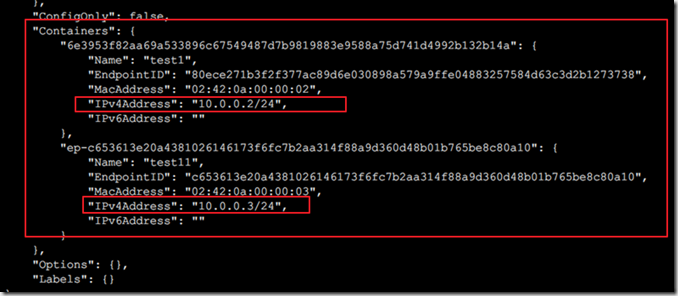

Viewing the containers on the network

![adc621df-7985-4e8a-9e8b-913b9048be13[5] adc621df-7985-4e8a-9e8b-913b9048be13[5]](https://img2018.cnblogs.com/blog/1165731/201811/1165731-20181126234557796-2008720356.png)