-------------------------------------------------------------------------------------------------------------------------------------------------------------

Run getdata.sh to download the VoxForge speech corpus.

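Edit cmd.sh and change queue.pl to run.pl, so that jobs run on the local machine instead of being submitted to a grid engine queue.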

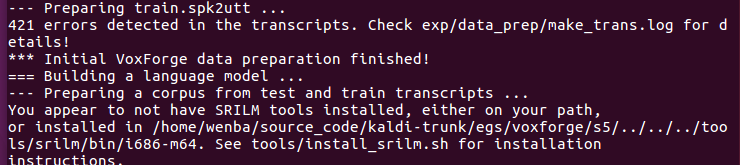
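The same edit can be scripted. The sketch below is my own: use_run_pl is a hypothetical helper, not part of Kaldi. It assumes the job commands in cmd.sh start with queue.pl, optionally followed by queue options inside double quotes. Check the file afterwards, since cmd.sh differs between Kaldi versions.

```bash
# Hypothetical helper: point every queue.pl job command in a Kaldi cmd.sh at
# run.pl, so jobs run as local processes instead of being sent to a grid.
# Queue-specific options after queue.pl (e.g. "-q all.q") are dropped, up to
# the closing double quote or the end of the line.
use_run_pl() {
  local cmd_file="${1:-cmd.sh}"
  sed -i 's/queue\.pl[^"]*/run.pl/g' "$cmd_file"
}

# Usage, from the recipe directory (e.g. egs/voxforge/s5):
#   use_run_pl cmd.sh && grep _cmd cmd.sh
```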

Run install_srilm.sh. It asks you to download srilm.tgz from the URL it prints. Download the archive, then run install_srilm.sh again.

You are then prompted to install Sequitur G2P as well:

sudo ./install_sequitur.sh

It needs SWIG, which can be installed with:

sudo apt-get install swig
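Before running install_sequitur.sh, a quick check can show which build tools are still missing. This is a small sketch of my own, not part of Kaldi. The helper name missing_cmds and the tool list in the usage line are assumptions.

```bash
# Hypothetical helper: print each named command that is not on PATH,
# one per line. Returns non-zero if anything is missing.
missing_cmds() {
  local cmd status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      status=1
    fi
  done
  return "$status"
}

# Usage: Sequitur G2P builds a C++ extension through SWIG, so check for it
# (and a compiler) first, then install whatever is reported:
#   missing_cmds swig g++ make || echo "install the tools listed above"
```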

Finally, edit run.sh and set njobs to the number of CPU cores (njobs=10 on this machine).

The recipe then ran successfully.
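Rather than hard-coding the core count, it can be read from nproc (GNU coreutils). A minimal sketch, assuming run.sh has a line starting with njobs=; set_njobs is a hypothetical helper name. Kaldi splits the data by speaker, so on a small data set a high njobs may need to be lowered.

```bash
# Hypothetical helper: set the "njobs=" line of a recipe's run.sh to the
# number of available CPU cores, as reported by nproc.
set_njobs() {
  local run_sh="${1:-run.sh}"
  local cores
  cores="$(nproc)"
  sed -i "s/^njobs=.*/njobs=${cores}/" "$run_sh"
}

# Usage, from the recipe directory:
#   set_njobs run.sh && grep '^njobs=' run.sh
```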

----------------------------------------------------------------------------------------------------------------------------------------------------------------------

Default mode, simulated online decoding of pre-recorded audio files: online_demo/run.sh

Live decoding from the microphone: online_demo/run.sh --test-mode live
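The --test-mode switch works because run.sh sources utils/parse_options.sh after setting its defaults (test_mode="simulated"), and that script overrides variables the caller has already defined. The function below is a simplified sketch of the idea, not the real parse_options.sh. It maps --test-mode to test_mode and rejects unknown options. Unlike the real script, which is sourced and also removes the parsed options from the positional parameters, this function only sets the variables.

```bash
# Simplified sketch (not Kaldi's utils/parse_options.sh): for each
# "--some-name value" pair, strip the leading "--", turn dashes into
# underscores, and assign the value only if that variable already exists.
parse_opts_sketch() {
  local _po_opt _po_var
  while [ $# -gt 0 ]; do
    case "$1" in
      --*)
        _po_opt="${1#--}"
        _po_var="${_po_opt//-/_}"
        if ! declare -p "$_po_var" >/dev/null 2>&1; then
          echo "Unknown option: $1" >&2
          return 1
        fi
        if [ $# -lt 2 ]; then
          echo "Missing value for $1" >&2
          return 1
        fi
        printf -v "$_po_var" '%s' "$2"
        shift 2
        ;;
      *) break ;;
    esac
  done
}

# Usage, mirroring online_demo/run.sh:
#   test_mode="simulated"
#   parse_opts_sketch "$@"    # e.g. ./run.sh --test-mode live
#   echo "$test_mode"         # prints live when called as above
```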

For live mode, install a sound recorder first and check that the recording device works.
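One way to script that check is to count the capture devices reported by arecord -l (from alsa-utils). The helper below is hypothetical. It counts the lines of the form "card N: ..." in that listing and reads from stdin, so it can be tried without audio hardware.

```bash
# Hypothetical helper: count capture devices in the output of "arecord -l".
# Each device is listed on a line starting with "card N:".
# grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps the
# function's exit status at 0 in that case.
count_capture_devices() {
  grep -c '^card [0-9][0-9]*:' || true
}

# Usage:
#   arecord -l | count_capture_devices    # 0 means no capture device found
#   arecord -d 10 test.wav && aplay test.wav    # record 10 s, play it back
```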

The online_demo/run.sh script, with my comments added:

```bash
#!/bin/bash

# Copyright 2012 Vassil Panayotov
# Apache 2.0

# Note: you have to do 'make ext' in ../../../src/ before running this.

# Set the paths to the binaries and scripts needed
KALDI_ROOT=`pwd`/../../..
export PATH=$PWD/../s5/utils/:$KALDI_ROOT/src/onlinebin:$KALDI_ROOT/src/bin:$PATH

data_file="online-data"
data_url="http://sourceforge.net/projects/kaldi/files/online-data.tar.bz2"

# Change this to "tri2a" if you like to test using a ML-trained model
ac_model_type=tri2b_mmi

# Alignments and decoding results are saved in this directory (simulated decoding only)
decode_dir="./work"

# Change this to "live" either here or using command line switch like:
# --test-mode live
test_mode="simulated"

. parse_options.sh

ac_model=${data_file}/models/$ac_model_type
trans_matrix=""
audio=${data_file}/audio

# Download the models and speech data used for the simulated test
if [ ! -s ${data_file}.tar.bz2 ]; then
    echo "Downloading test models and data ..."
    wget -T 10 -t 3 $data_url;

    if [ ! -s ${data_file}.tar.bz2 ]; then
        echo "Download of $data_file has failed!"
        exit 1
    fi
fi

# Extract the archive if the model directory does not exist yet
if [ ! -d $ac_model ]; then
    echo "Extracting the models and data ..."
    tar xf ${data_file}.tar.bz2
fi

# Use the feature transform (LDA) matrix if the model provides one
if [ -s $ac_model/matrix ]; then
    trans_matrix=$ac_model/matrix
fi

case $test_mode in
    live)
        # Live mode: real-time online decoding from the microphone
        echo
        echo -e "  LIVE DEMO MODE - you can use a microphone and say something\n"
        echo "  The (bigram) language model used to build the decoding graph was"
        echo "  estimated on an audio book's text. The text in question is"
        echo "  \"King Solomon's Mines\" (http://www.gutenberg.org/ebooks/2166)."
        echo "  You may want to read some sentences from this book first ..."
        echo
        online-gmm-decode-faster --rt-min=0.5 --rt-max=0.7 --max-active=4000 \
            --beam=12.0 --acoustic-scale=0.0769 $ac_model/model $ac_model/HCLG.fst \
            $ac_model/words.txt '1:2:3:4:5' $trans_matrix;;

    simulated)
        # Simulated mode: recognition of pre-recorded audio files
        echo
        echo -e "  SIMULATED ONLINE DECODING - pre-recorded audio is used\n"
        echo "  The (bigram) language model used to build the decoding graph was"
        echo "  estimated on an audio book's text. The text in question is"
        echo "  \"King Solomon's Mines\" (http://www.gutenberg.org/ebooks/2166)."
        echo "  The audio chunks to be decoded were taken from the audio book read"
        echo "  by John Nicholson(http://librivox.org/king-solomons-mines-by-haggard/)"
        echo
        echo "  NOTE: Using utterances from the book, on which the LM was estimated"
        echo "        is considered to be \"cheating\" and we are doing this only for"
        echo "        the purposes of the demo."
        echo
        echo "  You can type \"./run.sh --test-mode live\" to try it using your"
        echo "  own voice!"
        echo
        mkdir -p $decode_dir
        # make an input .scp file
        > $decode_dir/input.scp
        for f in $audio/*.wav; do
            bf=`basename $f`
            bf=${bf%.wav}
            echo $bf $f >> $decode_dir/input.scp
        done
        # ali.txt records the alignment between HMM states and frames;
        # trans.txt records the recognized words as integer word IDs
        online-wav-gmm-decode-faster --verbose=1 --rt-min=0.8 --rt-max=0.85 \
            --max-active=4000 --beam=12.0 --acoustic-scale=0.0769 \
            scp:$decode_dir/input.scp $ac_model/model $ac_model/HCLG.fst \
            $ac_model/words.txt '1:2:3:4:5' ark,t:$decode_dir/trans.txt \
            ark,t:$decode_dir/ali.txt $trans_matrix;;

    *)
        echo "Invalid test mode! Should be either \"live\" or \"simulated\"!";
        exit 1;;
esac

# Estimate the error rate for the simulated decoding
if [ $test_mode == "simulated" ]; then
    # Convert the reference transcripts from symbols to word IDs
    # (words.txt maps each word in $audio/trans.txt to its integer ID)
    sym2int.pl -f 2- $ac_model/words.txt < $audio/trans.txt > $decode_dir/ref.txt

    # Compact the hypotheses belonging to the same test utterance, so that
    # hyp.txt has the same per-utterance format as ref.txt
    cat $decode_dir/trans.txt |\
        sed -e 's/^\(test[0-9]\+\)\([^ ]\+\)\(.*\)/\1 \3/' |\
        gawk '{key=$1; $1=""; arr[key]=arr[key] " " $0; } END { for (k in arr) { print k " " arr[k]} }' > $decode_dir/hyp.txt

    # Finally compute WER by comparing ref.txt with hyp.txt
    compute-wer --mode=present ark,t:$decode_dir/ref.txt ark,t:$decode_dir/hyp.txt
fi
```
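The hypothesis-compaction pipeline near the end of run.sh is the least obvious step, so here is the same sed/awk logic wrapped in a function that can be fed sample lines. It is a sketch. Based on the sed pattern, I assume the keys in trans.txt have the form testN followed by a suffix (the sample keys test1-0 and test1-1 in the usage line are made up). I also substituted awk for gawk and added a sort for deterministic output.

```bash
# Sketch of the "compact the hypotheses" step from online_demo/run.sh.
# sed turns "testN<suffix> w1 w2" into "testN  w1 w2"; awk then appends the
# words of all lines sharing the key testN into a single line per key.
# Changes from run.sh: awk instead of gawk, and sorted output.
compact_hyps() {
  sed -e 's/^\(test[0-9]\+\)\([^ ]\+\)\(.*\)/\1 \3/' |
    awk '{ key = $1; $1 = ""; arr[key] = arr[key] " " $0 }
         END { for (k in arr) print k " " arr[k] }' |
    LC_ALL=C sort
}

# Usage (spacing in the output is irregular; compute-wer does not mind):
#   printf '%s\n' 'test1-0 12 34' 'test1-1 56' | compact_hyps
```

The extra spaces come from awk rebuilding the line after `$1=""`; since Kaldi's text archives are whitespace-separated, they do not affect the WER computation.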

The positional arguments of online-gmm-decode-faster are the acoustic model, the decoding graph FST (HCLG.fst), the word symbol table, the silence phones (a colon-separated list of phone IDs), and an optional LDA matrix. Its usage message and options:

```text
Usage: online-gmm-decode-faster [options] <model-in> <fst-in> <word-symbol-table> <silence-phones> [<lda-matrix-in>]
Example: online-gmm-decode-faster --rt-min=0.3 --rt-max=0.5 --max-active=4000 --beam=12.0 --acoustic-scale=0.0769 model HCLG.fst words.txt '1:2:3:4:5' lda-matrix

Options:
  --acoustic-scale  : Scaling factor for acoustic likelihoods (float, default = 0.1)
  --batch-size      : Number of feature vectors processed w/o interruption (int, default = 27)
  --beam            : Decoding beam. Larger->slower, more accurate. (float, default = 16)
  --beam-delta      : Increment used in decoder [obscure setting] (float, default = 0.5)
  --beam-update     : Beam update rate (float, default = 0.01)
  --cmn-window      : Number of feat. vectors used in the running average CMN calculation (int, default = 600)
  --delta-order     : Order of delta computation (int, default = 2)
  --delta-window    : Parameter controlling window for delta computation (actual window size for each delta order is 1 + 2*delta-window-size) (int, default = 2)
  --hash-ratio      : Setting used in decoder to control hash behavior (float, default = 2)
  --inter-utt-sil   : Maximum # of silence frames to trigger new utterance (int, default = 50)
  --left-context    : Number of frames of left context (int, default = 4)
  --max-active      : Decoder max active states. Larger->slower; more accurate (int, default = 2147483647)
  --max-beam-update : Max beam update rate (float, default = 0.05)
  --max-utt-length  : If the utterance becomes longer than this number of frames, shorter silence is acceptable as an utterance separator (int, default = 1500)
  --min-active      : Decoder min active states (don't prune if #active less than this). (int, default = 20)
  --min-cmn-window  : Minumum CMN window used at start of decoding (adds latency only at start) (int, default = 100)
  --num-tries       : Number of successive repetitions of timeout before we terminate stream (int, default = 5)
  --right-context   : Number of frames of right context (int, default = 4)
  --rt-max          : Approximate maximum decoding run time factor (float, default = 0.75)
  --rt-min          : Approximate minimum decoding run time factor (float, default = 0.7)
  --update-interval : Beam update interval in frames (int, default = 3)

Standard options:
  --config          : Configuration file to read (this option may be repeated) (string, default = "")
  --help            : Print out usage message (bool, default = false)
  --print-args      : Print the command line arguments (to stderr) (bool, default = true)
  --verbose         : Verbose level (higher->more logging) (int, default = 0)
```
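Since the tool accepts --config, the decoder settings used in the live branch of run.sh can be kept in a file instead of on the command line. A minimal sketch, assuming Kaldi's usual config format of one --option=value per line; write_decode_conf and decode.conf are hypothetical names.

```bash
# Hypothetical helper: write the decoder settings from the live branch of
# online_demo/run.sh into a Kaldi-style config file (one --option=value
# per line), to be passed with --config.
write_decode_conf() {
  local conf="${1:-decode.conf}"
  cat > "$conf" <<'EOF'
--rt-min=0.5
--rt-max=0.7
--max-active=4000
--beam=12.0
--acoustic-scale=0.0769
EOF
}

# Usage (paths as in run.sh):
#   write_decode_conf decode.conf
#   online-gmm-decode-faster --config=decode.conf $ac_model/model \
#     $ac_model/HCLG.fst $ac_model/words.txt '1:2:3:4:5' $trans_matrix
```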

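This machine has a server motherboard, so I connected a USB audio device.

However, PortAudio did not detect it.

So I reinstalled a newer version of PortAudio by changing the version in tools/install_portaudio.sh, after which the device was detected.

Then rerun make ext in src/.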

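1. First check that recording works on the Linux system itself with arecord, e.g. arecord -d 10 test.wav (records 10 seconds to test.wav). arecord -l lists the available capture devices; normally at least one is present.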

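2. Check that PortAudio installed successfully. It can be installed with tools/install_portaudio.sh. If it was installed before, first go into tools/portaudio and run make clean, otherwise reinstalling has no effect. Sometimes the installation completes even though some dependencies are missing, yet the resulting program does not work. In that case go into tools/portaudio and run ./configure: usually ALSA is reported as "no", which can be fixed by running sudo apt-get install libasound-dev and then reinstalling PortAudio.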
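PortAudio's ./configure only enables ALSA when the ALSA development files are present, and on Debian/Ubuntu libasound-dev provides the header alsa/asoundlib.h. The check can therefore be scripted as below. This is a sketch of my own; have_alsa_headers is a hypothetical name, and the script path follows this post (newer Kaldi versions may keep install_portaudio.sh under tools/extras/).

```bash
# Hypothetical helper: succeed if the ALSA development header exists under
# the given include directory (default /usr/include).
have_alsa_headers() {
  local include_dir="${1:-/usr/include}"
  [ -f "$include_dir/alsa/asoundlib.h" ]
}

# Usage, roughly following step 2 (run from Kaldi's tools/ directory):
#   have_alsa_headers || sudo apt-get install libasound-dev
#   (cd portaudio && make clean)
#   ./install_portaudio.sh
```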