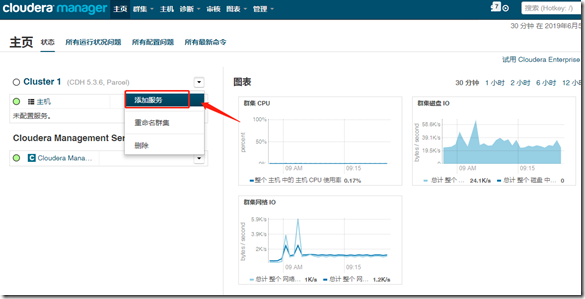

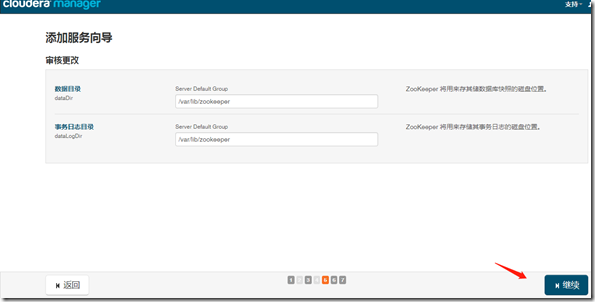

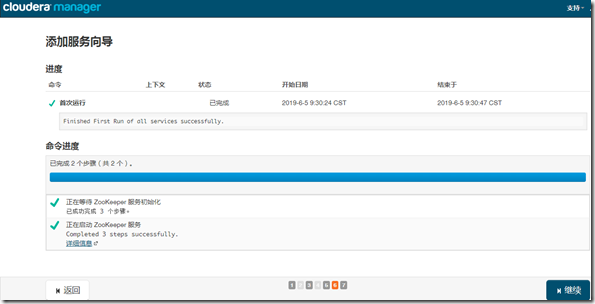

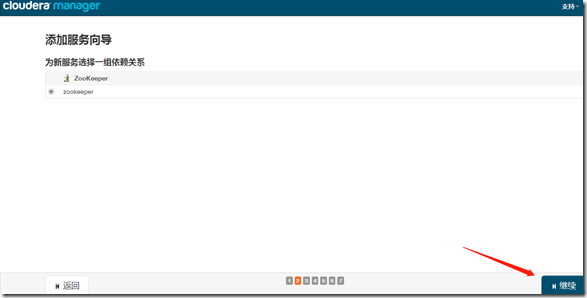

I. ZooKeeper

1. Installation

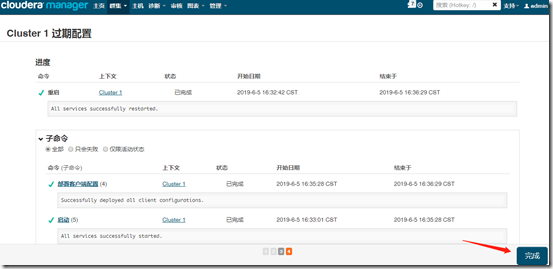

Click Continue, then Finish.

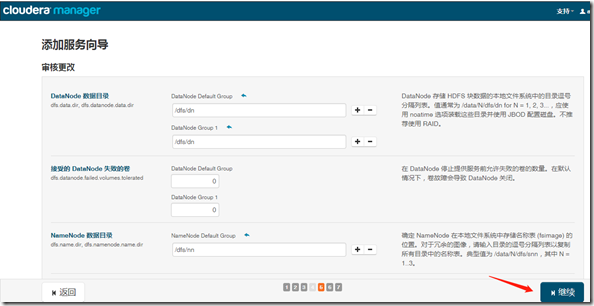
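Once the wizard finishes, one quick way to confirm that a ZooKeeper server is answering is its `ruok` four-letter command. A healthy server replies `imok`. The Python sketch below makes two assumptions: the default client port 2181 is in use, and four-letter commands are enabled. They are enabled by default in the ZooKeeper 3.4.x line shipped with CDH 5, but newer releases may require adding `ruok` to `4lw.commands.whitelist`. The function names and the host in the usage comment are illustrative only.

```python
import socket


def four_letter_word(host: str, port: int = 2181, cmd: str = "ruok",
                     timeout: float = 5.0) -> bytes:
    """Send a ZooKeeper four-letter command and return the raw reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii"))
        chunks = []
        # The server writes its reply and then closes the connection,
        # so read until recv() returns an empty bytes object.
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks)


def is_ok(reply: bytes) -> bool:
    """A healthy server answers 'ruok' with 'imok'."""
    return reply.strip() == b"imok"


# Usage (hypothetical host; replace it with a host running the ZooKeeper Server role):
#   print(is_ok(four_letter_word("bigdata-cdh03.ibeifeng.com")))
```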

II. HDFS

1. Installation

Click Continue, then Finish.

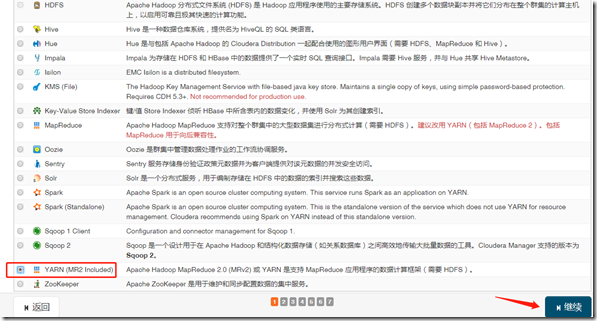

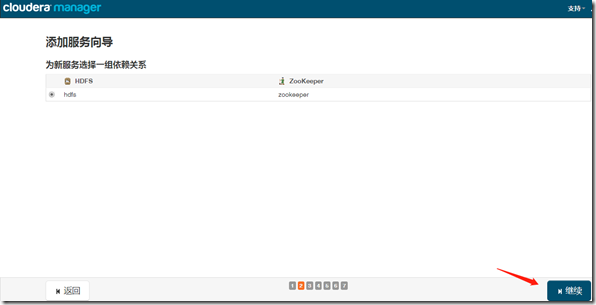

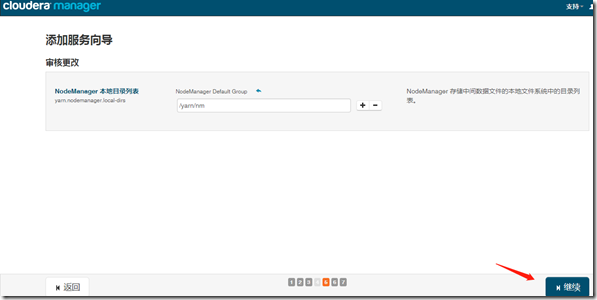

III. YARN and Hive

1. Install YARN

Click Continue, then Finish.

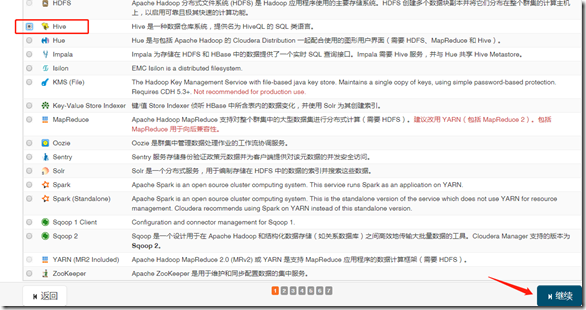

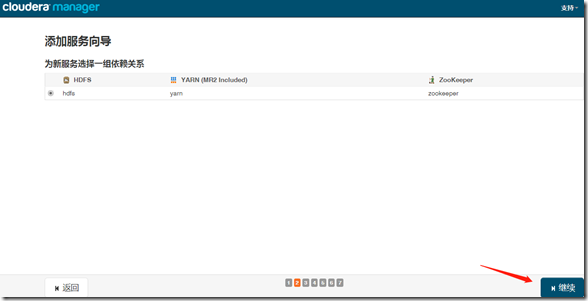

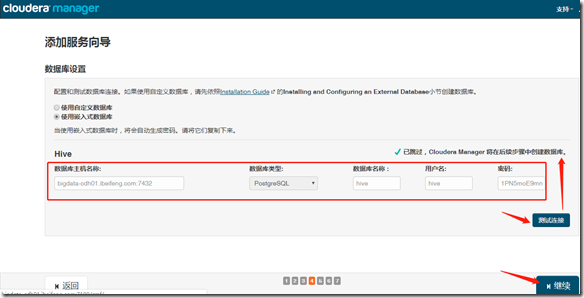

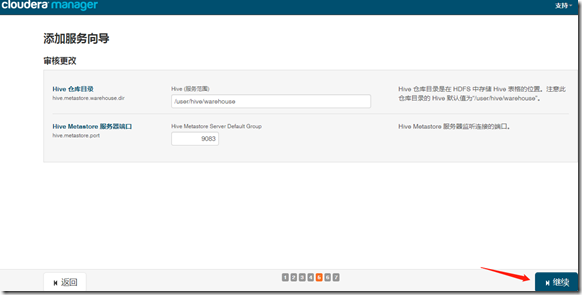

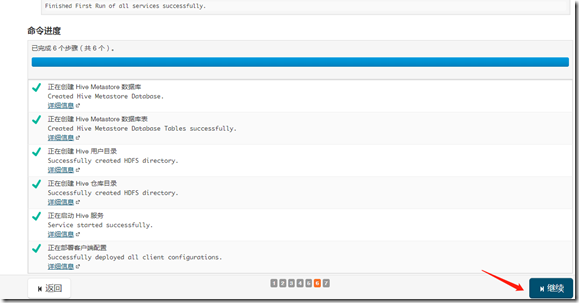

2. Install Hive

Click Continue, then Finish.

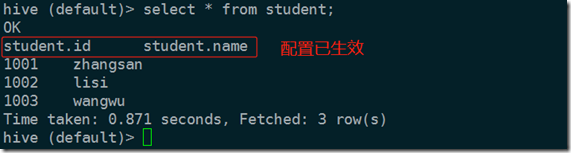

3. Test Hive

```
hive> show tables;
OK
Time taken: 0.41 seconds
hive> create table student(id int, name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ' ';
OK
Time taken: 0.168 seconds
hive> show tables;
OK
student
Time taken: 0.019 seconds, Fetched: 1 row(s)
hive> load data local inpath '/home/stu.txt' into table student;
Loading data to table default.student
Table default.student stats: [numFiles=1, numRows=0, totalSize=36, rawDataSize=0]
OK
Time taken: 0.471 seconds
hive> select * from student;
OK
1001 zhangsan
1002 lisi
1003 wangwu
```

Hive on YARN:

```
[root@bigdata-cdh03 ~]# su - hdfs
hive
19/06/05 13:33:23 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.
Logging initialized using configuration in jar:file:/opt/cloudera/parcels/CDH-5.3.6-1.cdh5.3.6.p0.11/jars/hive-common-0.13.1-cdh5.3.6.jar!/hive-log4j.properties
hive> select count(1) from student;
Total jobs = 1
Launching Job 1 out of 1
Number of reduce tasks determined at compile time: 1
In order to change the average load for a reducer (in bytes):
  set hive.exec.reducers.bytes.per.reducer=<number>
In order to limit the maximum number of reducers:
  set hive.exec.reducers.max=<number>
In order to set a constant number of reducers:
  set mapreduce.job.reduces=<number>
Starting Job = job_1559705875106_0001, Tracking URL = http://bigdata-cdh03.ibeifeng.com:8088/proxy/application_1559705875106_0001/
Kill Command = /opt/cloudera/parcels/CDH-5.3.6-1.cdh5.3.6.p0.11/lib/hadoop/bin/hadoop job -kill job_1559705875106_0001
Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1
2019-06-05 13:33:46,429 Stage-1 map = 0%, reduce = 0%
2019-06-05 13:33:51,610 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.86 sec
2019-06-05 13:33:57,788 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 2.16 sec
MapReduce Total cumulative CPU time: 2 seconds 160 msec
Ended Job = job_1559705875106_0001
MapReduce Jobs Launched:
Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 2.16 sec HDFS Read: 264 HDFS Write: 2 SUCCESS
Total MapReduce CPU Time Spent: 2 seconds 160 msec
OK
3
Time taken: 20.502 seconds, Fetched: 1 row(s)
```
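The session above loads `/home/stu.txt`, but the post never shows that file. The `select *` output, the `count(1)` result of 3, and `totalSize=36` in the load stats all point to the same contents: three lines of the form `id name`, separated by a single space and each ending in a newline. The Python sketch below rebuilds such a file from the query output under that assumption. It also splits each line on `' '` to mirror `FIELDS TERMINATED BY ' '`. The helper names are hypothetical, and the file is written to a temporary directory rather than `/home`.

```python
import os
import tempfile

# Rows as printed by `select * from student;` in the session above.
ROWS = [(1001, "zhangsan"), (1002, "lisi"), (1003, "wangwu")]


def write_student_file(path: str) -> int:
    """Write one 'id name' line per row and return the file size in bytes.

    A single space separates the fields, matching FIELDS TERMINATED BY ' '.
    """
    with open(path, "w", encoding="utf-8", newline="\n") as f:
        for sid, name in ROWS:
            f.write(f"{sid} {name}\n")
    return os.path.getsize(path)


def parse_student_file(path: str) -> list[tuple[int, str]]:
    """Split each line on ' ' into (id, name), following the two-column schema."""
    rows = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            fields = line.rstrip("\n").split(" ")
            # Keep only the first two fields, one per table column.
            rows.append((int(fields[0]), fields[1]))
    return rows


def demo() -> None:
    # Uses a temporary directory so the sketch runs without root privileges.
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "stu.txt")
        print(write_student_file(path), parse_student_file(path))
```

With these rows the file is 14 + 10 + 12 = 36 bytes, which agrees with the `totalSize` that Hive reported.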

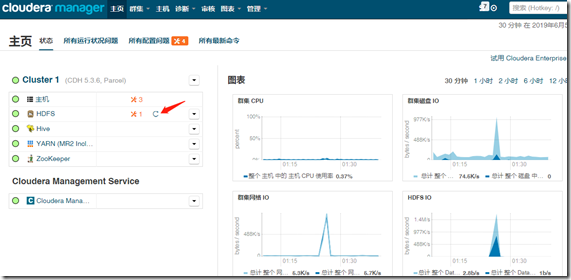

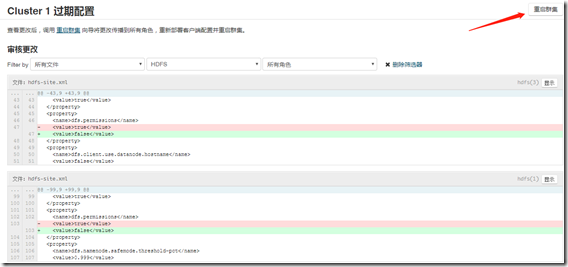

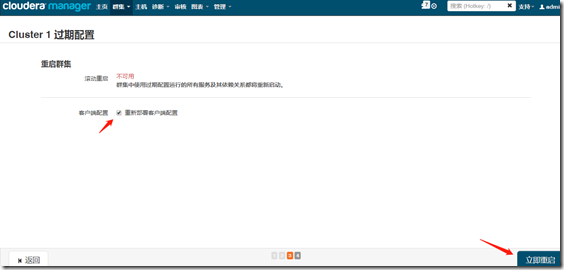

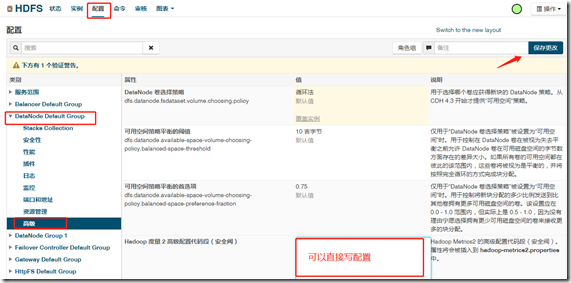

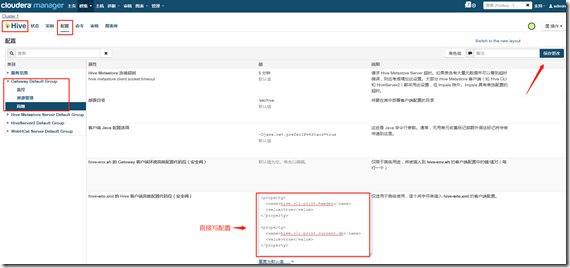

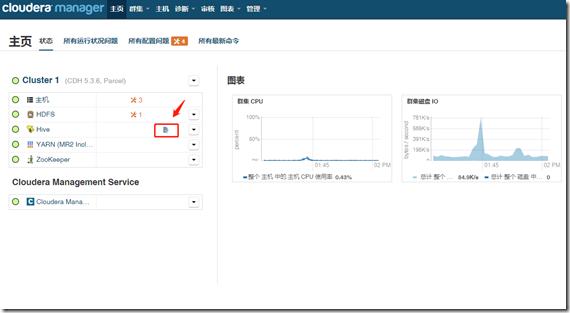

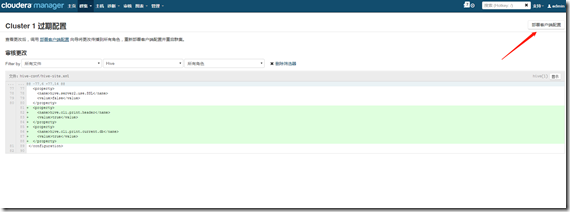

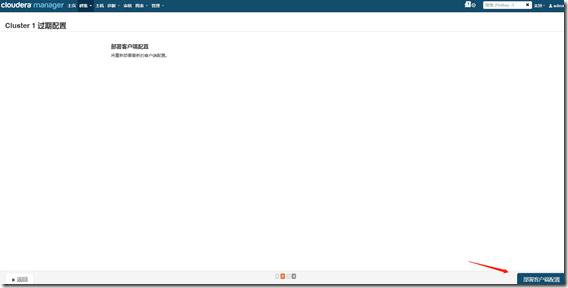

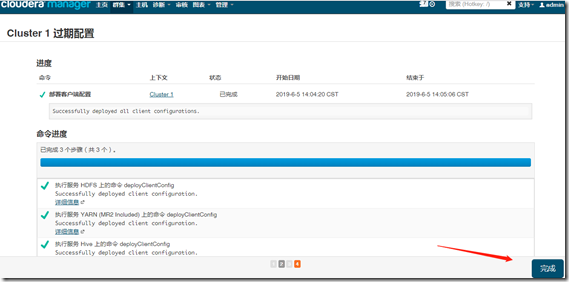
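In the Hive on YARN session, the MapReduce job ID `job_1559705875106_0001` and the `application_1559705875106_0001` in the Tracking URL share the same suffix. You can use that suffix to look up the run outside the web UI. The Python sketch below has two assumptions. First, the ResourceManager's standard REST endpoint `/ws/v1/cluster/apps/{appid}` is reachable at the host and port taken from that Tracking URL. Second, the reply is JSON with `state` and `finalStatus` fields under `app`. The helper names are hypothetical, and only `fetch_app_state` touches the network.

```python
import json
import urllib.request

# ResourceManager web address taken from the Tracking URL above; adjust for your cluster.
RM_ADDRESS = "bigdata-cdh03.ibeifeng.com:8088"


def job_to_app_id(job_id: str) -> str:
    """Map a MapReduce job ID to its YARN application ID (same suffix)."""
    prefix = "job_"
    if not job_id.startswith(prefix):
        raise ValueError(f"not a MapReduce job id: {job_id!r}")
    return "application_" + job_id[len(prefix):]


def app_rest_url(app_id: str, rm_address: str = RM_ADDRESS) -> str:
    """ResourceManager REST endpoint for a single application."""
    return f"http://{rm_address}/ws/v1/cluster/apps/{app_id}"


def parse_app_state(body: str) -> tuple[str, str]:
    """Extract (state, finalStatus) from the ResourceManager's JSON reply."""
    app = json.loads(body)["app"]
    return app["state"], app["finalStatus"]


def fetch_app_state(job_id: str, rm_address: str = RM_ADDRESS) -> tuple[str, str]:
    """Query the ResourceManager over HTTP (needs network access to it)."""
    url = app_rest_url(job_to_app_id(job_id), rm_address)
    with urllib.request.urlopen(url, timeout=10) as resp:
        return parse_app_state(resp.read().decode("utf-8"))
```

For the count query above, `fetch_app_state("job_1559705875106_0001")` would query the same application that the Tracking URL links to.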

4. Service component configuration

For example, configuring HDFS:

For example, configuring Hive:

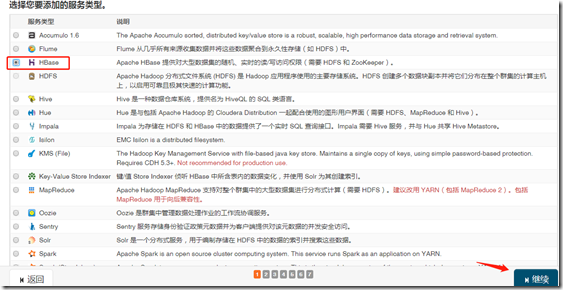

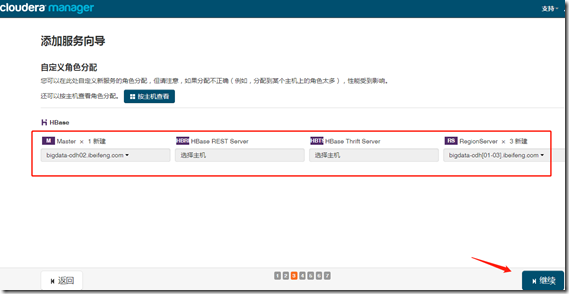

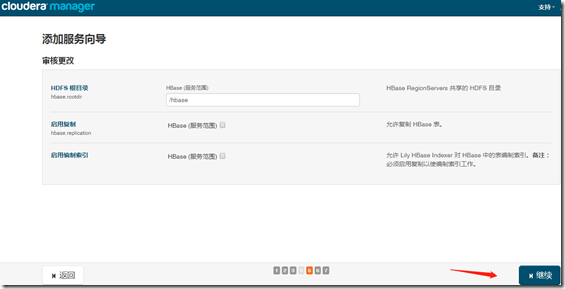

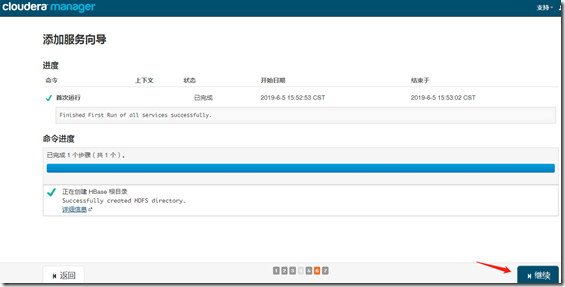

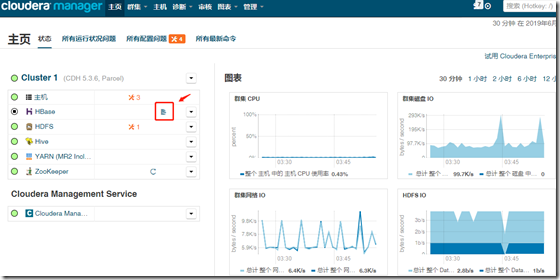

IV. HBase

1. Installation

Click Continue, then Finish.