一、file format

ORCFile在HDP 2:更好的压缩,更好的性能:

https://zh.hortonworks.com/blog/orcfile-in-hdp-2-better-compression-better-performance/

官方ORCfile介绍:

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+ORC

官方PARQUET介绍:

https://cwiki.apache.org/confluence/display/Hive/Parquet

file_format:

: SEQUENCEFILE

| TEXTFILE -- (Default, depending on hive.default.fileformat configuration)

| RCFILE -- (Note: Available in Hive 0.6.0 and later)

| ORC -- (Note: Available in Hive 0.11.0 and later)

| PARQUET -- (Note: Available in Hive 0.13.0 and later)

| AVRO -- (Note: Available in Hive 0.14.0 and later)

| JSONFILE -- (Note: Available in Hive 4.0.0 and later)

| INPUTFORMAT input_format_classname OUTPUTFORMAT output_format_classname

数据存储:

按行存储

按列存储

#TEXTFILE :行式存储

#其他的都是列存储,ORC是对RCFILE的优化;ORC和PARQUET用的较多;二、测试

我们建三张表导入同样的数据,看数据存储的大小;

1、TEXTFILE

#建表

hive (default)> create table page_views(

> track_time string,

> url string,

> session_id string,

> referer string,

> ip string,

> end_user_id string,

> city_id string

> )

> ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '

> STORED AS TEXTFILE;

OK

Time taken: 0.089 seconds

#加载数据

hive (default)> load data local inpath '/opt/datas/page_views.data' into table page_views;

Copying data from file:/opt/datas/page_views.data

Copying file: file:/opt/datas/page_views.data

Loading data to table default.page_views

Table default.page_views stats: [numFiles=1, numRows=0, totalSize=19014993, rawDataSize=0]

OK

Time taken: 0.326 seconds2、ORCFILE

hive (default)> create table page_views_orc(

> track_time string,

> url string,

> session_id string,

> referer string,

> ip string,

> end_user_id string,

> city_id string

> )

> ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '

> STORED AS orc;

OK

Time taken: 0.04 seconds

hive (default)> insert into table page_views_orc select * from page_views;3、PARQUET

hive (default)> create table page_views_parquet(

> track_time string,

> url string,

> session_id string,

> referer string,

> ip string,

> end_user_id string,

> city_id string

> )

> ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '

> STORED AS parquet;

OK

Time taken: 0.037 seconds

hive (default)> insert into table page_views_parquet select * from page_views;4、查看各个表文件大小

hive (default)> dfs -du -h /user/hive/warehouse/page_views/;

18.1 M /user/hive/warehouse/page_views/page_views.data

hive (default)> dfs -du -h /user/hive/warehouse/page_views_orc/;

2.6 M /user/hive/warehouse/page_views_orc/000000_0

hive (default)> dfs -du -h /user/hive/warehouse/page_views_parquet/;

13.1 M /user/hive/warehouse/page_views_parquet/000000_0

#明显的可以看出,数据相同时,存储为不同的格式:

TEXTFILE格式的表:18.1M

ORCFILE格式的表:2.6M

PARQUET格式的表:13.1M5、查询测试

#TEXTFILE表查询

hive (default)> select session_id, count(*) cnt from page_views group by session_id order by cnt desc limit 30;

......

Time taken: 39.427 seconds, Fetched: 30 row(s) #39.427秒

#ORCFILE表查询

hive (default)> select session_id, count(*) cnt from page_views_orc group by session_id order by cnt desc limit 30;

......

Time taken: 38.319 seconds, Fetched: 30 row(s) #38.319秒

#可见orc格式的表,不仅数据体积小很多,查询也有优势三、ORCFILE优化

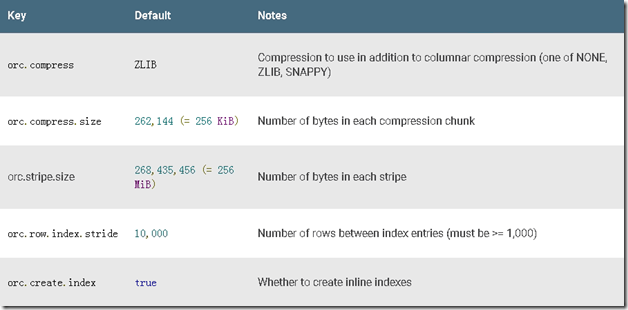

创建ORC表时,可以使用许多表属性进一步优化ORC的工作方式:

1、例如,假设您想使用snappy压缩而不是zlib压缩。方法如下:

#建表

hive (default)> create table page_views_orc_snappy(

> track_time string,

> url string,

> session_id string,

> referer string,

> ip string,

> end_user_id string,

> city_id string

> )

> ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '

> STORED AS orc tblproperties ("orc.compress"="SNAPPY");

OK

Time taken: 0.033 seconds

#插入数据

hive (default)> insert into table page_views_orc_snappy select * from page_views;

#查询

hive (default)> dfs -du -h /user/hive/warehouse/page_views_orc_snappy/;

3.8 M /user/hive/warehouse/page_views_orc_snappy/000000_0

#此时发现,用snappy压缩完,数据反而比orcfile表数据还大了,怎么回事呢?

因为建ORCFILE表时,不指定压缩,默认使用ZLIB压缩,但是指定了snappy压缩就会使用snappy,

而且ZLIB的压缩比大于snappy,所以此时的snappy压缩稍微大一些;总结:

在实际的项目开发当中,hive表的数据

*存储格式I orcfile/qarquet #推荐

*数据压缩

snappy #推荐