
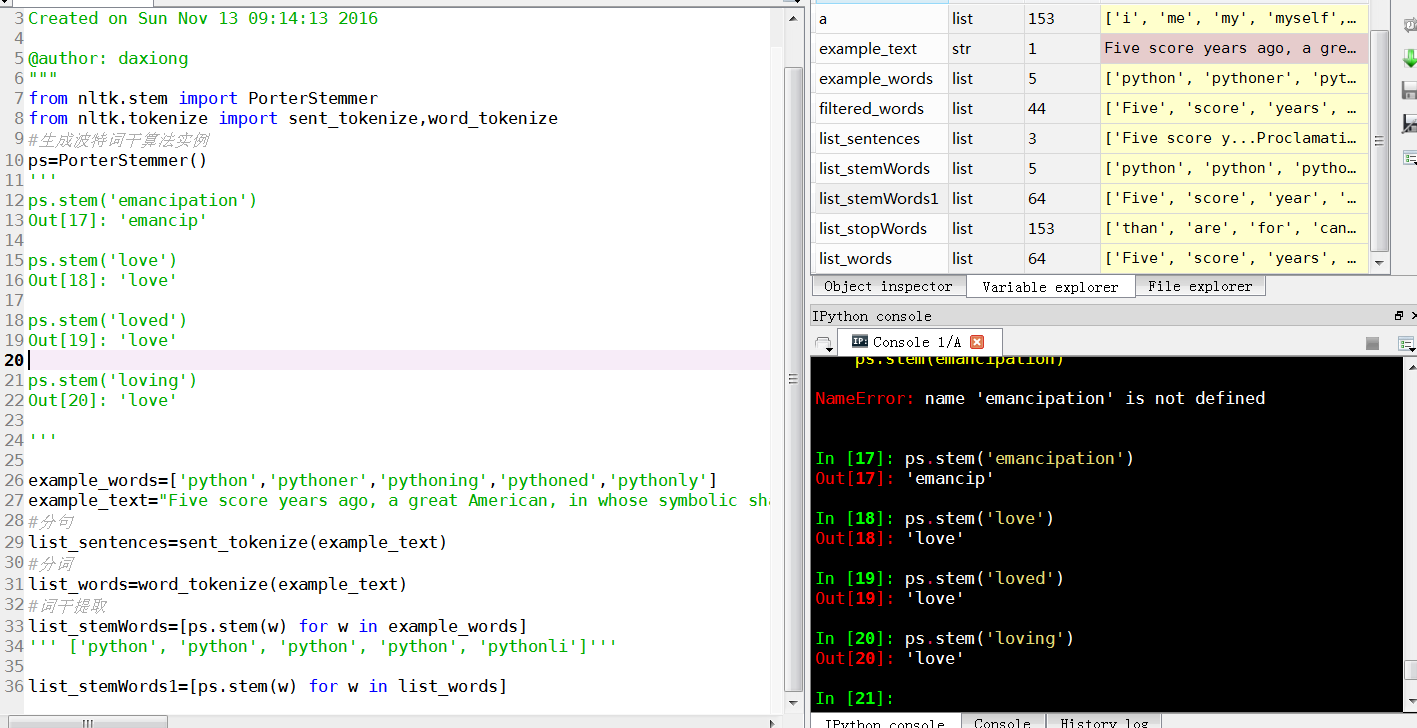

```python
# -*- coding: utf-8 -*-
"""
Created on Sun Nov 13 09:14:13 2016

@author: daxiong
"""

from nltk.stem import PorterStemmer
from nltk.tokenize import sent_tokenize, word_tokenize

# Create a Porter stemmer instance
ps = PorterStemmer()

# Interactive examples:
# ps.stem('emancipation')  -> 'emancip'
# ps.stem('love')          -> 'love'
# ps.stem('loved')         -> 'love'
# ps.stem('loving')        -> 'love'

example_words = ['python', 'pythoner', 'pythoning', 'pythoned', 'pythonly']

example_text = "Five score years ago, a great American, in whose symbolic shadow we stand today, signed the Emancipation Proclamation. This momentous decree came as a great beacon light of hope to millions of Negro slaves who had been seared in the flames of withering injustice. It came as a joyous daybreak to end the long night of bad captivity."

# Split the text into sentences. sent_tokenize and word_tokenize need the
# Punkt model: run nltk.download('punkt') once (newer NLTK releases may ask for 'punkt_tab')
list_sentences = sent_tokenize(example_text)

# Split the text into words
list_words = word_tokenize(example_text)

# Stem the example words
list_stemWords = [ps.stem(w) for w in example_words]
# -> ['python', 'python', 'python', 'python', 'pythonli']

# Stem every word of the example text
list_stemWords1 = [ps.stem(w) for w in list_words]
```

Stemming words with NLTK

Stemming is a kind of normalization. Many variations of a word carry the same meaning and differ only in tense or affix.

The reason we stem is to shorten lookups and normalize sentences.
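The following minimal sketch makes "shorten lookups" concrete with a stem-keyed lookup table (an inverted index). The helpers `build_index` and `lookup` and the three sample documents are hypothetical and exist only for illustration. Tokenization is a plain lowercase whitespace split, so no NLTK data has to be downloaded.

```python
from collections import defaultdict

from nltk.stem import PorterStemmer

ps = PorterStemmer()


def build_index(docs):
    """Map each stem to the set of document indices containing it (hypothetical helper)."""
    index = defaultdict(set)
    for doc_id, doc in enumerate(docs):
        for word in doc.lower().split():
            index[ps.stem(word)].add(doc_id)
    return index


def lookup(index, word):
    """Stem the query the same way, then do a single dictionary lookup."""
    return index.get(ps.stem(word.lower()), set())


docs = ["She loves python", "He loved the car", "They are loving it"]
index = build_index(docs)

# One key ("love") covers loves, loved and loving
print(lookup(index, "love"))  # {0, 1, 2}
```

Without stemming, the same query would have to try "loves", "loved" and "loving" as three separate keys.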

Consider:

I was taking a ride in the car.

I was riding in the car.

These sentences mean the same thing. "in the car" is the same, and "I was" is the same. The past tense is marked by "was" in both cases, so is it truly necessary to differentiate between "ride" and "riding" if we are just trying to figure out what this past-tense activity was?
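A quick check of this claim with NLTK's Porter stemmer shows that both verb forms reduce to the same stem. Only the stemmer is used here, so no tokenizer data needs to be downloaded.

```python
from nltk.stem import PorterStemmer

ps = PorterStemmer()

# Both forms of the verb collapse to a single stem
for word in ["ride", "riding"]:
    print(word, "->", ps.stem(word))
```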

No, not really.

This is just one minor example, but imagine every word in the English language, every possible tense and affix you can put on a word. Having individual dictionary entries per version would be highly redundant and inefficient, especially since, once we convert to numbers, the "value" is going to be identical.
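Here is a small sketch of that conversion to numbers. It assigns each distinct stem an integer ID, so every variant of a word maps to the same value. The `word_to_id` helper and the `vocab` dictionary are hypothetical names chosen for illustration, and the word list reuses this tutorial's `python` variants.

```python
from nltk.stem import PorterStemmer

ps = PorterStemmer()
vocab = {}  # stem -> integer ID


def word_to_id(word):
    """Return an integer ID for the word's stem (hypothetical helper)."""
    stem = ps.stem(word)
    # setdefault evaluates len(vocab) first, so a new stem gets the next free ID
    return vocab.setdefault(stem, len(vocab))


words = ["python", "pythoner", "pythoning", "pythoned", "pythonly"]
print([word_to_id(w) for w in words])  # [0, 0, 0, 0, 1]
print(len(vocab), "dictionary entries instead of", len(words))
```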

One of the most popular stemming algorithms is the Porter stemmer, which has been around since 1979.
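To give a feel for how the Porter algorithm works, here is a partial sketch in plain Python of two of its building blocks. The first is the measure *m* of a stem, which counts the vowel-consonant sequences when the word is written as `[C](VC)^m[V]`. The second is Step 1a, which strips plural endings. This is not NLTK's implementation and it omits all later steps. The function names are hypothetical.

```python
def is_consonant(word, i):
    """Porter's definition: a, e, i, o, u are vowels; 'y' is a vowel only after a consonant."""
    ch = word[i]
    if ch in "aeiou":
        return False
    if ch == "y":
        return i == 0 or not is_consonant(word, i - 1)
    return True


def measure(stem):
    """Count the VC sequences in the stem's [C](VC)^m[V] form."""
    pattern = "".join("c" if is_consonant(stem, i) else "v" for i in range(len(stem)))
    # Collapse runs such as 'ccvvc' into 'cvc', then count 'vc' pairs
    collapsed = ""
    for ch in pattern:
        if not collapsed or collapsed[-1] != ch:
            collapsed += ch
    return collapsed.count("vc")


def step1a(word):
    """Porter Step 1a: SSES -> SS, IES -> I, SS -> SS, S -> '' (longest match first)."""
    if word.endswith("sses") or word.endswith("ies"):
        return word[:-2]
    if word.endswith("ss"):
        return word
    if word.endswith("s"):
        return word[:-1]
    return word


print([measure(w) for w in ["tree", "trouble", "oaten"]])       # [0, 1, 2]
print([step1a(w) for w in ["caresses", "ponies", "caress", "cats"]])
# ['caress', 'poni', 'caress', 'cat']
```

The later steps use `measure` as a guard. For example, a suffix is only removed when the remaining stem is "long enough", which is why short words tend to survive stemming unchanged.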

First, we're going to grab and define our stemmer:

```python
from nltk.stem import PorterStemmer
from nltk.tokenize import sent_tokenize, word_tokenize

ps = PorterStemmer()
```

Now, let's choose some words with a similar stem, like:

```python
example_words = ["python", "pythoner", "pythoning", "pythoned", "pythonly"]
```

Next, we can easily stem by doing something like:

```python
for w in example_words:
    print(ps.stem(w))
```

Our output:

```text
python
python
python
python
pythonli
```
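Because the goal is to collapse variants, it can help to look at the set of distinct stems rather than the per-word output. This is a minimal sketch using a set comprehension over the same list.

```python
from nltk.stem import PorterStemmer

ps = PorterStemmer()
example_words = ["python", "pythoner", "pythoning", "pythoned", "pythonly"]

# Five surface forms, but only two distinct stems
unique_stems = {ps.stem(w) for w in example_words}
print(sorted(unique_stems))  # ['python', 'pythonli']
```

"pythonly" stays separate because the Porter stemmer only rewrites its final "y" to "i" and leaves it as its own stem, `pythonli`.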

Now let's try stemming a typical sentence, rather than some words:

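```python
new_text = "It is important to be very pythonly while you are pythoning with python. All pythoners have pythoned poorly at least once."

words = word_tokenize(new_text)

for w in words:
    print(ps.stem(w))
```

Now our result is:

```text
It
is
import
to
be
veri
pythonli
while
you
are
python
with
python
.
All
python
have
python
poorli
at
least
onc
.
```

Recent NLTK releases lowercase words by default (`PorterStemmer.stem()` takes a `to_lowercase` argument that defaults to `True`), so the capitalized words may print as `it` and `all`.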
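To see how often each stem occurs, you can count the stems with `collections.Counter`. This sketch swaps in NLTK's regex-based `wordpunct_tokenize` for `word_tokenize` so that it runs without downloading the Punkt model. For this particular sentence, both tokenizers split off the trailing periods in the same way.

```python
from collections import Counter

from nltk.stem import PorterStemmer
from nltk.tokenize import wordpunct_tokenize

ps = PorterStemmer()
new_text = ("It is important to be very pythonly while you are pythoning with python. "
            "All pythoners have pythoned poorly at least once.")

# Regex-based tokenizer: needs no downloaded models
stem_counts = Counter(ps.stem(w) for w in wordpunct_tokenize(new_text))
print(stem_counts.most_common(2))  # [('python', 4), ('.', 2)]
```

Four different surface forms (pythoning, python, pythoners, pythoned) are counted under the single stem `python`.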

Next up, we're going to discuss something a bit more advanced from the NLTK module: part-of-speech tagging, where we use NLTK to identify the part of speech of each word in a sentence.