https://study.163.com/course/courseMain.htm?courseId=1006183019&share=2&shareId=400000000398149

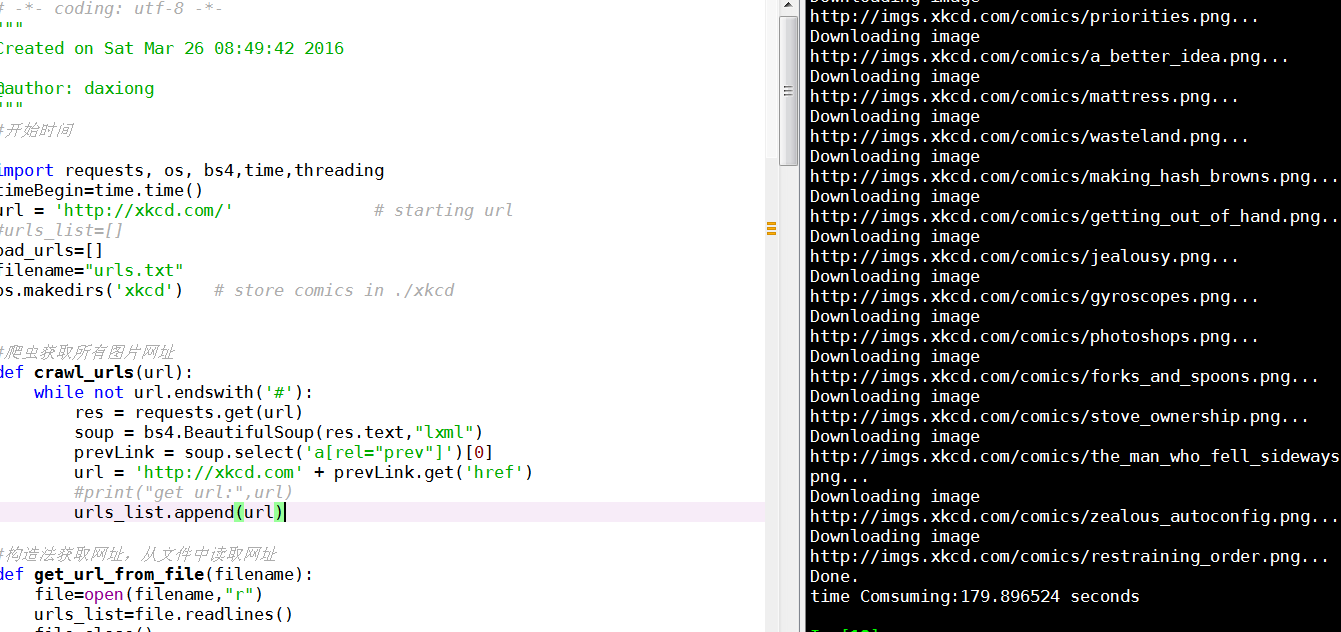

# -*- coding: utf-8 -*-

"""

Created on Sat Mar 26 08:49:42 2016

@author: daxiong

"""

#开始时间

import requests, os, bs4,time,threading

timeBegin=time.time()

url = 'http://xkcd.com/' # starting url

#urls_list=[]

bad_urls=[]

filename="urls.txt"

os.makedirs('xkcd') # store comics in ./xkcd

#爬虫获取所有图片网址

def crawl_urls(url):

while not url.endswith('#'):

res = requests.get(url)

soup = bs4.BeautifulSoup(res.text,"lxml")

prevLink = soup.select('a[rel="prev"]')[0]

url = 'http://xkcd.com' + prevLink.get('href')

#print("get url:",url)

urls_list.append(url)

#构造法获取网址,从文件中读取网址

def get_url_from_file(filename):

file=open(filename,"r")

urls_list=file.readlines()

file.close()

new_urls_list=[]

for url in urls_list:

new_url=url.strip("

")

new_urls_list.append(new_url)

return new_urls_list

#写入网址到文件夹

def file_urls(urls_list):

for url in urls_list:

file.write(url)

file.write("

")

file.close()

#下载一个网址的图片

def download_image(url):

res = requests.get(url)

soup = bs4.BeautifulSoup(res.text,"lxml")

comicElem = soup.select('#comic img')

comicUrl = 'http:' + comicElem[0].get('src')

print('Downloading image %s...' % (comicUrl))

res = requests.get(comicUrl)

imageFile = open(os.path.join('xkcd', os.path.basename(comicUrl)), 'wb')

for chunk in res.iter_content(100000):

imageFile.write(chunk)

imageFile.close()

#下载所有网址的图片

def download_all(urls_list):

for url in urls_list:

try:

download_image(url)

except:

bad_urls.append(url)

continue

print("well Done")

#下载某范围网址的图片

def download_range(start,end):

urls_list_range1=urls_list[start:end]

for url in urls_list_range1:

try:

download_image(url)

except:

bad_urls.append(url)

continue

#print("well Done")

#获取截取数

def Step(urls_list):

step=len(urls_list)/20.0

step=int(round(step,0))

return step

def TimeCount():

timeComsuming=timeEnd-timeBegin

print ("time Comsuming:%f seconds" % timeComsuming)

return timeComsuming

urls_list=get_url_from_file(filename)

step=Step(urls_list)

#urls_list_range1=urls_list[:step]

downloadThreads = [] # a list of all the Thread objects

for i in range(0, len(urls_list), step): # loops 14 times, creates 14 threads

downloadThread = threading.Thread(target=download_range, args=(i, i +step))

downloadThreads.append(downloadThread)

downloadThread.start()

# Wait for all threads to end.

for downloadThread in downloadThreads:

downloadThread.join()

print('Done.')

#结束时间

timeEnd=time.time()

#计算程序消耗时间,可改进算法

timeComsuming=TimeCount()

'''

# Create and start the Thread objects.

downloadThreads = [] # a list of all the Thread objects

for i in range(0, 1400, 100): # loops 14 times, creates 14 threads

downloadThread = threading.Thread(target=downloadXkcd, args=(i, i + 99))

downloadThreads.append(downloadThread)

downloadThread.start()

# Wait for all threads to end.

for downloadThread in downloadThreads:

downloadThread.join()

print('Done.')

download_all(urls_list)

'''

https://study.163.com/provider/400000000398149/index.htm?share=2&shareId=400000000398149(博主视频教学主页)