1、Kafka Broker Workflow

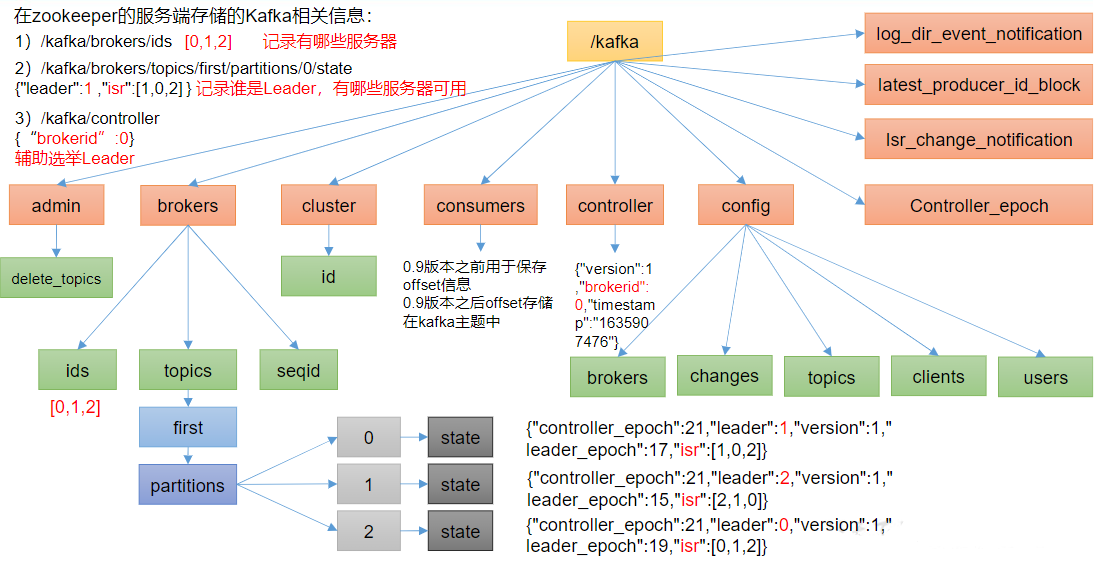

1.1、Kafka Information Stored in ZooKeeper

    [hui@hadoop103 zookeeper-3.4.10]$ bin/zkCli.sh
    [zk: localhost:2181(CONNECTED) 0] ls /
    [zookeeper, spark, kafka, hbase]
    [zk: localhost:2181(CONNECTED) 1] ls /kafka
    [cluster, controller_epoch, controller, brokers, feature, admin, isr_change_notification, consumers, log_dir_event_notification, latest_producer_id_block, config]
    [zk: localhost:2181(CONNECTED) 2]

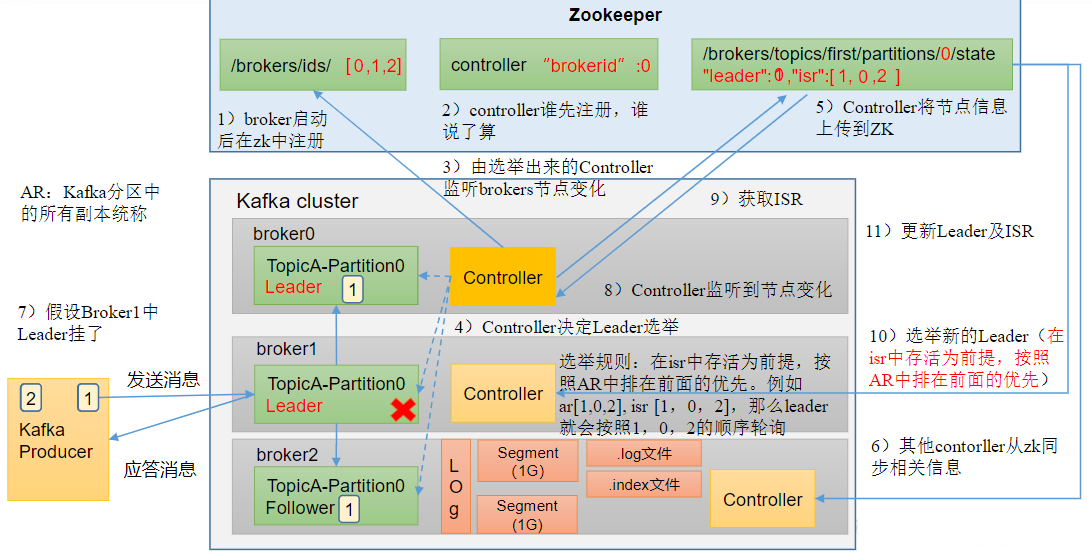

1.2、Overall Kafka Broker Workflow

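- After it starts, each broker registers itself in ZooKeeper.

- Controller election: whichever broker registers the controller node first becomes the controller.

- The elected controller watches the brokers node for changes.

- The controller runs leader election. Rule: a replica must be alive and in the ISR, and among those the one listed earliest in the AR wins. For example, with AR [1, 0, 2] and ISR [1, 0, 2], broker 1 becomes leader; if it is unavailable, 0 is next, then 2.

- The controller writes the leader and ISR information to ZooKeeper.

- The controllers on the other brokers sync this information from ZooKeeper.

- Now suppose the leader on Broker1 goes down.

- The controller detects the node change and reads the ISR.

- It elects a new leader (same rule: alive and in the ISR, earliest in the AR wins).

- It updates the leader and ISR.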
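The election rule above can be sketched in a few lines of Python. This is a simplified model, not Kafka's controller code. It assumes the controller already knows the AR list, the ISR, and the set of live brokers, and it ignores unclean leader election. `elect_leader` is a hypothetical helper name.

```python
from typing import Iterable, Optional


def elect_leader(ar: list[int], isr: Iterable[int], alive: Iterable[int]) -> Optional[int]:
    """Return the first replica in AR order that is both in the ISR and alive."""
    isr_set, alive_set = set(isr), set(alive)
    for replica in ar:  # AR order decides priority
        if replica in isr_set and replica in alive_set:
            return replica
    return None  # no eligible replica: the partition is left without a leader


ar = [1, 0, 2]
isr = [1, 0, 2]
print(elect_leader(ar, isr, alive={0, 1, 2}))  # 1
print(elect_leader(ar, isr, alive={0, 2}))     # broker 1 is down -> 0
print(elect_leader(ar, isr, alive={2}))        # brokers 1 and 0 are down -> 2
```

Note that ISR membership is the filter and AR order is only the tie-breaker. A replica that is alive but has fallen out of the ISR is never chosen by this rule.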

Simulating brokers going offline and back online, and the resulting changes in ZooKeeper

1、List the nodes under /kafka/brokers/ids.

    [zk: localhost:2181(CONNECTED) 2] ls /kafka/brokers/ids
    [0, 1, 2]

2、View the data at /kafka/controller.

    [zk: localhost:2181(CONNECTED) 3] get /kafka/controller
    {"version":1,"brokerid":2,"timestamp":"1640209253641"}
    cZxid = 0x640000003b
    ctime = Thu Dec 23 05:40:53 CST 2021
    mZxid = 0x640000003b
    mtime = Thu Dec 23 05:40:53 CST 2021
    pZxid = 0x640000003b
    cversion = 0
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x57de415196e0001
    dataLength = 54
    numChildren = 0

3、View the data at /kafka/brokers/topics/first/partitions/0/state.

    [zk: localhost:2181(CONNECTED) 4] get /kafka/brokers/topics/first/partitions/0/state
    {"controller_epoch":16,"leader":1,"version":1,"leader_epoch":12,"isr":[1]}
    cZxid = 0x5e00000006
    ctime = Mon Mar 28 13:07:18 CST 2022
    mZxid = 0x64000000cb
    mtime = Thu Dec 23 05:42:03 CST 2021
    pZxid = 0x5e00000006
    cversion = 0
    dataVersion = 12
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 74
    numChildren = 0
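The data line of the partition state znode is plain JSON. That makes it easy to pull out the leader and ISR when comparing snapshots taken before and after a broker goes down. The sketch below uses only the Python standard library. `describe_state` is a hypothetical helper, and the payload is copied from the zkCli output above.

```python
import json


def describe_state(raw: str) -> tuple[int, list[int], int]:
    """Extract leader, ISR and leader_epoch from a partition-state znode payload."""
    state = json.loads(raw)
    return state["leader"], state["isr"], state["leader_epoch"]


# Data line of /kafka/brokers/topics/first/partitions/0/state from the output above
raw = '{"controller_epoch":16,"leader":1,"version":1,"leader_epoch":12,"isr":[1]}'
leader, isr, leader_epoch = describe_state(raw)
print(f"leader={leader} isr={isr} leader_epoch={leader_epoch}")
# leader=1 isr=[1] leader_epoch=12
```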

4、Stop Kafka on hadoop105.

    [hui@hadoop105 ~]$ jps
    2005 QuorumPeerMain
    3159 Jps
    2399 Kafka
    [hui@hadoop105 ~]$ kill 2399
    [hui@hadoop105 ~]$ jps
    2005 QuorumPeerMain
    3177 Jps

5、List the nodes under /kafka/brokers/ids again.

    [zk: localhost:2181(CONNECTED) 5] ls /kafka/brokers/ids
    [0, 1]

We just stopped Kafka on hadoop105, whose broker id is 2, and ZooKeeper no longer lists broker 2.

6、View the data at /kafka/controller again.

    [zk: localhost:2181(CONNECTED) 6] get /kafka/controller
    {"version":1,"brokerid":1,"timestamp":"1648591708926"}
    cZxid = 0x6500000006
    ctime = Wed Mar 30 06:08:28 CST 2022
    mZxid = 0x6500000006
    mtime = Wed Mar 30 06:08:28 CST 2022
    pZxid = 0x6500000006
    cversion = 0
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x37fd7975d1c0002
    dataLength = 54
    numChildren = 0

The controller is now broker 1 (hadoop104).

7、View the data at /kafka/brokers/topics/first/partitions/0/state again.

    [zk: localhost:2181(CONNECTED) 7] get /kafka/brokers/topics/first/partitions/0/state
    {"controller_epoch":16,"leader":1,"version":1,"leader_epoch":12,"isr":[1]}
    cZxid = 0x5e00000006
    ctime = Mon Mar 28 13:07:18 CST 2022
    mZxid = 0x64000000cb
    mtime = Thu Dec 23 05:42:03 CST 2021
    pZxid = 0x5e00000006
    cversion = 0
    dataVersion = 12
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 74
    numChildren = 0

Now start Kafka on hadoop105 again.

    [hui@hadoop103 kafka]$ jps.sh
    ------------------- hui@hadoop103 --------------
    3168 Kafka
    2016 QuorumPeerMain
    3412 ZooKeeperMain
    3918 Jps
    ------------------- hui@hadoop104 --------------
    3570 Jps
    2023 QuorumPeerMain
    2830 Kafka
    ------------------- hui@hadoop105 --------------
    2005 QuorumPeerMain
    3562 Kafka
    3661 Jps
    [zk: localhost:2181(CONNECTED) 8] ls /kafka/brokers/ids
    [0, 1, 2]
    [zk: localhost:2181(CONNECTED) 9] get /kafka/controller
    {"version":1,"brokerid":1,"timestamp":"1648591708926"}
    cZxid = 0x6500000006
    ctime = Wed Mar 30 06:08:28 CST 2022
    mZxid = 0x6500000006
    mtime = Wed Mar 30 06:08:28 CST 2022
    pZxid = 0x6500000006
    cversion = 0
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x37fd7975d1c0002
    dataLength = 54
    numChildren = 0
    [zk: localhost:2181(CONNECTED) 10] get /kafka/brokers/topics/first/partitions/0/state
    {"controller_epoch":16,"leader":1,"version":1,"leader_epoch":12,"isr":[1]}
    cZxid = 0x5e00000006
    ctime = Mon Mar 28 13:07:18 CST 2022
    mZxid = 0x64000000cb
    mtime = Thu Dec 23 05:42:03 CST 2021
    pZxid = 0x5e00000006
    cversion = 0
    dataVersion = 12
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 74
    numChildren = 0

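After hadoop105 rejoins, nothing is re-elected. The controller stays on broker 1, and the state of partition 0 of first (leader 1, ISR [1]) is unchanged.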
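The controller behaviour seen in this experiment follows from ZooKeeper ephemeral nodes. The /kafka/controller znode is ephemeral, as its non-zero ephemeralOwner shows. Only one broker can create it, and it disappears when the owning broker's session ends. The sketch below models that with a hypothetical in-memory `FakeZk` class; it is not a real ZooKeeper client. In real Kafka the surviving brokers react through a ZooKeeper watch, and the order in which they race is not deterministic. Here the race order is an assumption chosen to match the transcript.

```python
class FakeZk:
    """In-memory stand-in for ZooKeeper ephemeral znodes (hypothetical, illustration only)."""

    def __init__(self) -> None:
        self.nodes: dict[str, int] = {}  # znode path -> id of the broker whose session owns it

    def create_ephemeral(self, path: str, owner: int) -> bool:
        # Like ZooKeeper's create(): fails if the node already exists
        if path in self.nodes:
            return False
        self.nodes[path] = owner
        return True

    def expire_session(self, owner: int) -> None:
        # When a session ends, every ephemeral node it owns disappears
        self.nodes = {p: o for p, o in self.nodes.items() if o != owner}

    def children(self, parent: str) -> list[str]:
        prefix = parent.rstrip("/") + "/"
        return sorted(p[len(prefix):] for p in self.nodes if p.startswith(prefix))


def start_broker(zk: FakeZk, broker_id: int) -> None:
    zk.create_ephemeral(f"/kafka/brokers/ids/{broker_id}", broker_id)  # register the broker
    zk.create_ephemeral("/kafka/controller", broker_id)  # only the first caller wins


def on_controller_gone(zk: FakeZk, survivors: list[int]) -> None:
    # Surviving brokers race to recreate /kafka/controller; list order = assumed race order
    for broker_id in survivors:
        if zk.create_ephemeral("/kafka/controller", broker_id):
            break


zk = FakeZk()
for broker_id in [2, 0, 1]:  # assumed start-up order: broker 2 registers first
    start_broker(zk, broker_id)
print(zk.children("/kafka/brokers/ids"), zk.nodes["/kafka/controller"])  # ['0', '1', '2'] 2

zk.expire_session(2)  # Kafka on hadoop105 (broker 2) is killed
on_controller_gone(zk, survivors=[1, 0])  # assume broker 1 wins, as in the transcript
print(zk.children("/kafka/brokers/ids"), zk.nodes["/kafka/controller"])  # ['0', '1'] 1

start_broker(zk, 2)  # hadoop105 comes back: it re-registers, but /kafka/controller already exists
print(zk.children("/kafka/brokers/ids"), zk.nodes["/kafka/controller"])  # ['0', '1', '2'] 1
```

The last step mirrors step 8 above. The returning broker shows up under brokers/ids again, but because the controller node is already taken, no new controller election happens.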

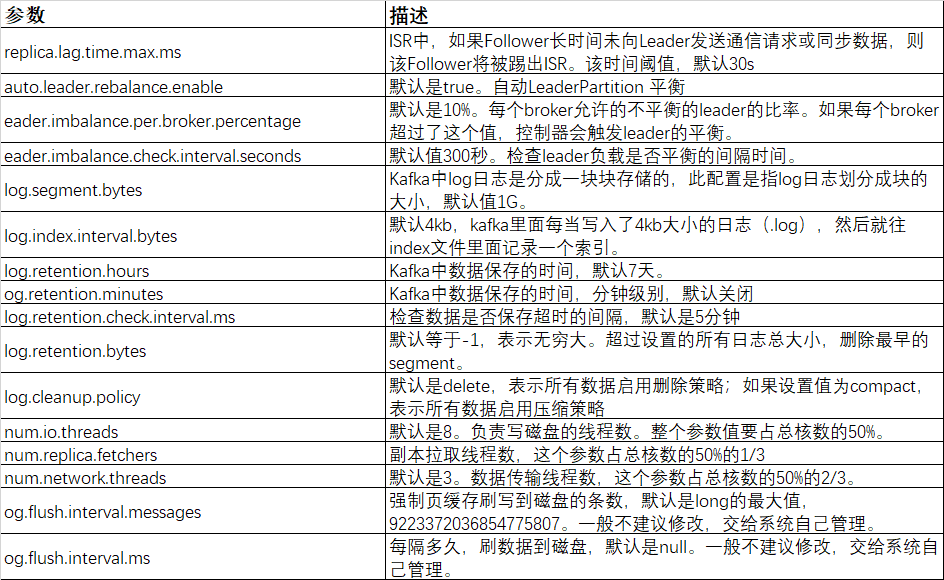

1.3、Important Broker Parameters

2、Commissioning and Decommissioning Nodes

2.1、Commissioning a New Node

1、Prepare the new node

For convenience, stop the existing Kafka and ZooKeeper clusters, shut down the hadoop105 VM, and make a full clone of it named hadoop102.

Configure the host IP:

    [hui@hadoop102 ~]$ less /etc/sysconfig/network-scripts/ifcfg-eth0
    DEVICE=eth0
    HWADDR=00:0c:29:c9:1d:9c
    TYPE=Ethernet
    UUID=65e5ce7d-7df7-400b-b519-3c986d9dd3c9
    ONBOOT=yes
    NM_CONTROLLED=yes
    BOOTPROTO=static
    IPADDR=192.168.124.131
    GATEWAY=192.168.124.2
    DNS1=192.168.124.2

Configure the hostname:

    [hui@hadoop102 ~]$ cat /etc/sysconfig/network
    NETWORKING=yes
    HOSTNAME=hadoop102

Reboot hadoop102 and power hadoop105 back on.

Start the ZooKeeper and Kafka clusters:

    [hui@hadoop103 kafka]$ jps.sh
    ------------------- hui@hadoop103 --------------
    3168 Kafka
    2016 QuorumPeerMain
    4310 Jps
    ------------------- hui@hadoop104 --------------
    3987 Jps
    2023 QuorumPeerMain
    2830 Kafka
    ------------------- hui@hadoop105 --------------
    2005 QuorumPeerMain
    3562 Kafka
    4078 Jps

On hadoop102, set broker.id to 3 in the Kafka configuration file. Every broker in the cluster needs a unique broker.id.

    vim config/server.properties

    broker.id=3

Delete the datas and logs directories under the Kafka directory, since the clone copied them from hadoop105:

    [hui@hadoop102 kafka]$ rm -rf datas/ logs/

Start Kafka on hadoop102 by itself:

    [hui@hadoop102 kafka]$ bin/kafka-server-start.sh -daemon ./config/server.properties
    [hui@hadoop102 kafka]$ jps
    2795 Kafka
    2812 Jps

2、Rebalance the load

Create a file listing the topics to rebalance:

    [hui@hadoop103 kafka]$ vim topics-to-move.json
    {
      "topics": [
        {"topic": "first"}
      ],
      "version": 1
    }
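When many topics need to move, the same file can be generated instead of typed by hand. This minimal sketch only reproduces the shape of the file above; `topics_to_move` is a hypothetical helper. Redirect its output to a file, for example `python3 gen_topics.py > topics-to-move.json`, where the script name is also just an example.

```python
import json


def topics_to_move(topics: list[str]) -> dict:
    # Same shape as the hand-written file: one {"topic": name} entry per topic
    return {"topics": [{"topic": t} for t in topics], "version": 1}


content = json.dumps(topics_to_move(["first"]))
print(content)  # {"topics": [{"topic": "first"}], "version": 1}
```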

Generate a reassignment plan:

    [hui@hadoop103 kafka]$ bin/kafka-reassign-partitions.sh --bootstrap-server hadoop103:9092 --topics-to-move-json-file topics-to-move.json --broker-list "0,1,2,3" --generate
    Current partition replica assignment
    {"version":1,"partitions":[{"topic":"first","partition":0,"replicas":[1],"log_dirs":["any"]},{"topic":"first","partition":1,"replicas":[2],"log_dirs":["any"]},{"topic":"first","partition":2,"replicas":[0],"log_dirs":["any"]}]}

    Proposed partition reassignment configuration
    {"version":1,"partitions":[{"topic":"first","partition":0,"replicas":[0],"log_dirs":["any"]},{"topic":"first","partition":1,"replicas":[1],"log_dirs":["any"]},{"topic":"first","partition":2,"replicas":[2],"log_dirs":["any"]}]}
    [hui@hadoop103 kafka]$
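Before acting on the `--generate` output, it helps to see which partitions would actually move and which brokers the proposal uses. The sketch below diffs the two JSON documents printed above. `replica_map`, `diff_plans` and `brokers_used` are hypothetical helpers, not part of the Kafka tooling.

```python
import json

# The two JSON documents printed by --generate above
CURRENT = '{"version":1,"partitions":[{"topic":"first","partition":0,"replicas":[1],"log_dirs":["any"]},{"topic":"first","partition":1,"replicas":[2],"log_dirs":["any"]},{"topic":"first","partition":2,"replicas":[0],"log_dirs":["any"]}]}'
PROPOSED = '{"version":1,"partitions":[{"topic":"first","partition":0,"replicas":[0],"log_dirs":["any"]},{"topic":"first","partition":1,"replicas":[1],"log_dirs":["any"]},{"topic":"first","partition":2,"replicas":[2],"log_dirs":["any"]}]}'


def replica_map(plan: dict) -> dict:
    """Map (topic, partition) -> replica list."""
    return {(p["topic"], p["partition"]): p["replicas"] for p in plan["partitions"]}


def diff_plans(current: dict, proposed: dict) -> dict:
    """Map each partition whose replica list changes to (old_replicas, new_replicas)."""
    old, new = replica_map(current), replica_map(proposed)
    return {key: (old.get(key), reps) for key, reps in new.items() if old.get(key) != reps}


def brokers_used(plan: dict) -> list[int]:
    return sorted({b for reps in replica_map(plan).values() for b in reps})


current, proposed = json.loads(CURRENT), json.loads(PROPOSED)
for (topic, partition), (before, after) in sorted(diff_plans(current, proposed).items()):
    print(f"{topic}-{partition}: {before} -> {after}")
print("brokers used by proposal:", brokers_used(proposed))
# first-0: [1] -> [0]
# first-1: [2] -> [1]
# first-2: [0] -> [2]
# brokers used by proposal: [0, 1, 2]
```

With three single-replica partitions, the proposal moves every partition but places nothing on the new broker 3. On its own, it would not put any load on hadoop102.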

Create the replica placement plan (replicas spread across broker0, broker1, broker2 and broker3):

    [hui@hadoop103 kafka]$ vim increase-replication-factor.json

    {"version":1,"partitions":[
      {"topic":"first","partition":0,"replicas":[2,3,0],"log_dirs":["any","any","any"]},
      {"topic":"first","partition":1,"replicas":[3,0,1],"log_dirs":["any","any","any"]},
      {"topic":"first","partition":2,"replicas":[0,1,2],"log_dirs":["any","any","any"]}]}
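The hand-written plan above follows a simple round-robin pattern. Partition p takes three consecutive brokers from the list [0, 1, 2, 3], starting at position 2 + p and wrapping around. The first replica in each list is the preferred leader, so leadership also rotates across brokers. The sketch below generates such a plan. `round_robin_plan` and its `start` parameter are hypothetical, and this is not the placement algorithm that `kafka-reassign-partitions.sh --generate` uses.

```python
import json


def round_robin_plan(topic: str, num_partitions: int, brokers: list[int],
                     replication_factor: int, start: int = 0) -> dict:
    """Build a reassignment JSON by walking the broker list round-robin.

    Partition p gets brokers[start + p], brokers[start + p + 1], ... (wrapping around),
    so the first replica (the preferred leader) rotates across brokers.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    partitions = []
    for p in range(num_partitions):
        replicas = [brokers[(start + p + i) % len(brokers)] for i in range(replication_factor)]
        partitions.append({
            "topic": topic,
            "partition": p,
            "replicas": replicas,
            "log_dirs": ["any"] * replication_factor,  # one entry per replica
        })
    return {"version": 1, "partitions": partitions}


plan = round_robin_plan("first", num_partitions=3, brokers=[0, 1, 2, 3],
                        replication_factor=3, start=2)
print(json.dumps(plan, separators=(",", ":")))  # compact form, same as the file above
```

Calling the same function with `brokers=[0, 1, 2]` and `start=2` yields the replica lists [2,0,1], [0,1,2] and [1,2,0], which are the ones used later when decommissioning.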

Execute the replica placement plan:

    [hui@hadoop103 kafka]$ bin/kafka-reassign-partitions.sh --bootstrap-server hadoop103:9092 --reassignment-json-file increase-replication-factor.json --execute
    Current partition replica assignment

Verify the replica placement plan:

    [hui@hadoop103 kafka]$ bin/kafka-reassign-partitions.sh --bootstrap-server hadoop103:9092 --reassignment-json-file increase-replication-factor.json --verify

2.2、Decommissioning an Old Node

First generate an execution plan that leaves out the node being retired, then rebalance using the same steps as for commissioning.

Create a file listing the topics to rebalance:

    [hui@hadoop103 kafka]$ cat topics-to-move.json
    {
      "topics": [
        {"topic": "first"}
      ],
      "version": 1
    }

Generate the execution plan:

    bin/kafka-reassign-partitions.sh --bootstrap-server hadoop103:9092 --topics-to-move-json-file topics-to-move.json --broker-list "0,1,2" --generate

Create the replica placement plan (all replicas on broker0, broker1 and broker2):

    vim increase-replication-factor.json

    {"version":1,"partitions":[
      {"topic":"first","partition":0,"replicas":[2,0,1],"log_dirs":["any","any","any"]},
      {"topic":"first","partition":1,"replicas":[0,1,2],"log_dirs":["any","any","any"]},
      {"topic":"first","partition":2,"replicas":[1,2,0],"log_dirs":["any","any","any"]}]}
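Before running `--execute` for a decommission, a quick sanity check confirms that no replica is left on the broker being retired. `check_plan` is a hypothetical helper, and its checks are our own choice rather than the checks `kafka-reassign-partitions.sh` performs. It flags a wrong replica count, a duplicate broker, brokers outside the allowed set, and a `log_dirs` list whose length does not match the replicas.

```python
import json

# Decommissioning plan from above (replicas only on brokers 0, 1, 2)
DECOMMISSION = '{"version":1,"partitions":[{"topic":"first","partition":0,"replicas":[2,0,1],"log_dirs":["any","any","any"]},{"topic":"first","partition":1,"replicas":[0,1,2],"log_dirs":["any","any","any"]},{"topic":"first","partition":2,"replicas":[1,2,0],"log_dirs":["any","any","any"]}]}'
# Commissioning plan used earlier (still places replicas on broker 3)
COMMISSION = '{"version":1,"partitions":[{"topic":"first","partition":0,"replicas":[2,3,0],"log_dirs":["any","any","any"]},{"topic":"first","partition":1,"replicas":[3,0,1],"log_dirs":["any","any","any"]},{"topic":"first","partition":2,"replicas":[0,1,2],"log_dirs":["any","any","any"]}]}'


def check_plan(plan: dict, allowed_brokers: set[int], replication_factor: int) -> list[str]:
    """Return a list of human-readable problems; an empty list means the plan looks sane."""
    problems = []
    for p in plan["partitions"]:
        name = f'{p["topic"]}-{p["partition"]}'
        replicas = p["replicas"]
        if len(replicas) != replication_factor:
            problems.append(f"{name}: expected {replication_factor} replicas, got {len(replicas)}")
        if len(set(replicas)) != len(replicas):
            problems.append(f"{name}: duplicate broker in {replicas}")
        outside = sorted(set(replicas) - allowed_brokers)
        if outside:
            problems.append(f"{name}: replicas on brokers outside the allowed set: {outside}")
        if "log_dirs" in p and len(p["log_dirs"]) != len(replicas):
            problems.append(f"{name}: log_dirs and replicas differ in length")
    return problems


print(check_plan(json.loads(DECOMMISSION), allowed_brokers={0, 1, 2}, replication_factor=3))
# []
for line in check_plan(json.loads(COMMISSION), allowed_brokers={0, 1, 2}, replication_factor=3):
    print(line)
# first-0: replicas on brokers outside the allowed set: [3]
# first-1: replicas on brokers outside the allowed set: [3]
```

Running the old commissioning plan through the same check shows why a new plan is needed. Two of its partitions still keep a replica on broker 3.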

Execute the replica placement plan:

    bin/kafka-reassign-partitions.sh --bootstrap-server hadoop102:9092 --reassignment-json-file increase-replication-factor.json --execute

Verify the replica placement plan:

    bin/kafka-reassign-partitions.sh --bootstrap-server hadoop103:9092 --reassignment-json-file increase-replication-factor.json --verify

Finally, stop Kafka on hadoop102:

    [hui@hadoop102 ~]$ jps
    3510 Jps
    2795 Kafka
    [hui@hadoop102 ~]$ kill -9 2795
    [hui@hadoop102 ~]$ jps
    3522 Jps