hadoop

tar -xvf hadoop-2.7.3.tar.gz

mv hadoop-2.7.3 hadoop

Create the following directories under the Hadoop root directory:

hadoop/hdfs

hadoop/hdfs/tmp

hadoop/hdfs/name

hadoop/hdfs/data
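The directories above can be created in one step. The following is a minimal sketch; the function name `make_hdfs_dirs` is chosen here for illustration, and the Hadoop root is passed as an argument rather than hard-coded.

```shell
# make_hdfs_dirs HADOOP_ROOT
# Creates hdfs/tmp, hdfs/name and hdfs/data under the given Hadoop root.
make_hdfs_dirs() {
    root=$1
    # -p creates the missing parent (hdfs/) and does not fail
    # if a directory already exists.
    mkdir -p "$root/hdfs/tmp" "$root/hdfs/name" "$root/hdfs/data"
}
```

With the paths used in the configuration files of this guide, the call would be `make_hdfs_dirs /usr/local/hadoop`.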

core-site.xml

This and the following configuration files are located in etc/hadoop under the Hadoop root directory.

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/hdfs/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://sjck-node01:9000</value>

</property>

</configuration>

hdfs-site.xml

Configure the HDFS file system.

dfs.replication sets the number of replicas kept for each block; the default is 3.

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>sjck-node01:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.datanode.registration.ip-hostname-check</name>

<value>false</value>

</property>

</configuration>
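As a rough illustration of what dfs.replication means for disk usage: every block is stored that many times, so the raw space consumed is approximately the file size multiplied by the replication factor. The helper below is only a back-of-the-envelope sketch (the name `raw_usage_mb` is made up here, and metadata overhead is ignored). With only two DataNodes in this cluster, a value of 2 is the highest that can actually be satisfied.

```shell
# raw_usage_mb FILE_SIZE_MB REPLICATION
# Prints the approximate raw disk space (in MB) that a file of the given
# size occupies across the cluster.
raw_usage_mb() {
    echo $(( $1 * $2 ))
}

raw_usage_mb 1024 2   # prints 2048
```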

mapred-site.xml

cp mapred-site.xml.template mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>sjck-node01:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>sjck-node01:19888</value>

</property>

</configuration>

yarn-site.xml

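yarn.resourcemanager.webapp.address: the address (host:port) of the ResourceManager web UI.

yarn.resourcemanager.address: the address clients use to submit jobs.

yarn.resourcemanager.scheduler.address: the address ApplicationMasters use to talk to the Scheduler to obtain resources.

The values below use the hostname master, which must resolve to the ResourceManager host (sjck-node01 in this cluster).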

<configuration>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:18040</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:18030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:18088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:18025</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:18141</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

slaves

sjck-node02

sjck-node03

hadoop-env.sh: the variables set here take values specific to this cluster.

export JAVA_HOME=/usr/local/src/jdk/jdk1.8

export HADOOP_NAMENODE_OPTS="-XX:+UseParallelGC"

yarn-env.sh

export JAVA_HOME=/usr/local/src/jdk/jdk1.8

Environment variables

export HADOOP_HOME=/usr/local/hadoop

export PATH="$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH"

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
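To make these variables available in every login shell, they can be written to a profile script. The following is a sketch under assumptions: the function name `write_hadoop_profile` is made up here, and /etc/profile.d/hadoop.sh in the usage note is only one possible location, not something this guide prescribes.

```shell
# write_hadoop_profile PROFILE_FILE HADOOP_HOME
# Writes the three export lines to PROFILE_FILE, overwriting it.
# \$ keeps the variable references literal so they are expanded
# when the profile is sourced, not when it is written.
write_hadoop_profile() {
    profile=$1
    home=$2
    cat > "$profile" <<EOF
export HADOOP_HOME=$home
export PATH="\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin:\$PATH"
export HADOOP_CONF_DIR=\$HADOOP_HOME/etc/hadoop
EOF
}
```

For example, `write_hadoop_profile /etc/profile.d/hadoop.sh /usr/local/hadoop` followed by `. /etc/profile.d/hadoop.sh` would apply the settings to the current shell.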

Copy the directory to the slave nodes:

scp -r /usr/local/hadoop/ sjck-node02:/usr/local/

scp -r /usr/local/hadoop/ sjck-node03:/usr/local/
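With more than a couple of slaves, the copy can be driven by the slaves file instead of one scp line per host. This is a minimal sketch, assuming one hostname per line; `copy_to_slaves` and the `DRY_RUN` switch are names introduced here for illustration.

```shell
# copy_to_slaves SLAVES_FILE SRC_DIR DEST_PARENT
# Copies SRC_DIR to DEST_PARENT on every host listed in SLAVES_FILE.
# Set DRY_RUN=1 to print the scp commands instead of running them.
copy_to_slaves() {
    slaves_file=$1
    src=$2
    dest=$3
    while IFS= read -r host || [ -n "$host" ]; do
        # Skip blank lines and lines starting with '#'.
        case "$host" in
            ''|'#'*) continue ;;
        esac
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp -r $src $host:$dest"
        else
            # Redirect stdin so scp cannot consume the rest of the host list.
            scp -r "$src" "$host:$dest" < /dev/null
        fi
    done < "$slaves_file"
}
```

Running `DRY_RUN=1 copy_to_slaves /usr/local/hadoop/etc/hadoop/slaves /usr/local/hadoop/ /usr/local/` first shows which commands would be executed.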

All Hadoop startup commands are run on the master. First, initialize Hadoop by formatting the NameNode:

hdfs namenode -format

INFO common.Storage: Storage directory /usr/local/hadoop/hdfs/name has been successfully formatted.

Start the cluster from the master

By default, the Hadoop daemons write their logs to ${HADOOP_HOME}/logs.

[root@sjck-node01 sbin]# /usr/local/hadoop/sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [sjck-node01]

sjck-node01: starting namenode, logging to /usr/local/hadoop/logs/hadoop-root-namenode-sjck-node01.out

sjck-node02: starting datanode, logging to /usr/local/hadoop/logs/hadoop-root-datanode-sjck-node02.out

sjck-node03: starting datanode, logging to /usr/local/hadoop/logs/hadoop-root-datanode-sjck-node03.out

Starting secondary namenodes [sjck-node01]

sjck-node01: starting secondarynamenode, logging to /usr/local/hadoop/logs/hadoop-root-secondarynamenode-sjck-node01.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/logs/yarn-root-resourcemanager-sjck-node01.out

sjck-node02: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-root-nodemanager-sjck-node02.out

sjck-node03: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-root-nodemanager-sjck-node03.out
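The output above notes that start-all.sh is deprecated in favor of start-dfs.sh and start-yarn.sh. A small wrapper that calls the two scripts in order might look like the sketch below; the function name `start_cluster` and the default sbin path are assumptions based on the layout used in this guide.

```shell
# start_cluster [SBIN_DIR]
# Starts HDFS first, then YARN; YARN is not started if HDFS fails to start.
start_cluster() {
    sbin=${1:-/usr/local/hadoop/sbin}
    "$sbin/start-dfs.sh" && "$sbin/start-yarn.sh"
}
```

Shutdown would mirror this with stop-yarn.sh followed by stop-dfs.sh.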

Stop the cluster from the master

[root@sjck-node01 sbin]# /usr/local/hadoop/sbin/stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [sjck-node01]

sjck-node01: stopping namenode

sjck-node02: stopping datanode

sjck-node03: stopping datanode

Stopping secondary namenodes [sjck-node01]

sjck-node01: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

sjck-node02: stopping nodemanager

sjck-node03: stopping nodemanager

no proxyserver to stop

Check the cluster status

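NameNode

The management node of the file system. It maintains the directory-tree metadata and the mapping between files and blocks.

The master runs the NameNode and, under YARN, the ResourceManager (which takes over the resource management role of the Hadoop 1.x JobTracker).

DataNode

Stores the actual file data. Each slave runs a DataNode and, under YARN, a NodeManager (the counterpart of the Hadoop 1.x TaskTracker).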

Check the processes on the master

[root@sjck-node01 hadoop]# jps

4404 SecondaryNameNode

4215 NameNode

4808 Jps

4555 ResourceManager

Check the processes on the slaves

[root@sjck-node02 hadoop]# jps

2752 DataNode

4995 Jps

2839 NodeManager

[root@sjck-node03 hadoop]# jps

3076 DataNode

3163 NodeManager

3260 Jps
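Comparing jps output by eye works for three nodes, but the check is easy to script. The following sketch takes jps output as text and reports which expected daemons are absent; `check_daemons` is a hypothetical helper name, and the daemon lists in the usage note are taken from the output shown above.

```shell
# check_daemons JPS_OUTPUT DAEMON...
# Prints "missing: NAME" for every expected daemon not found in JPS_OUTPUT
# and returns non-zero if at least one is missing.
check_daemons() {
    jps_output=$1
    shift
    missing=0
    for daemon in "$@"; do
        # jps prints "<pid> <name>"; compare the second field exactly so that
        # NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "missing: $daemon"
            missing=1
        fi
    done
    return "$missing"
}
```

On the master this could be called as `check_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager`, and on a slave as `check_daemons "$(jps)" DataNode NodeManager`.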

Check the firewall status

service iptables status

Stop the firewall and keep it from starting at boot

service iptables stop

chkconfig --del iptables

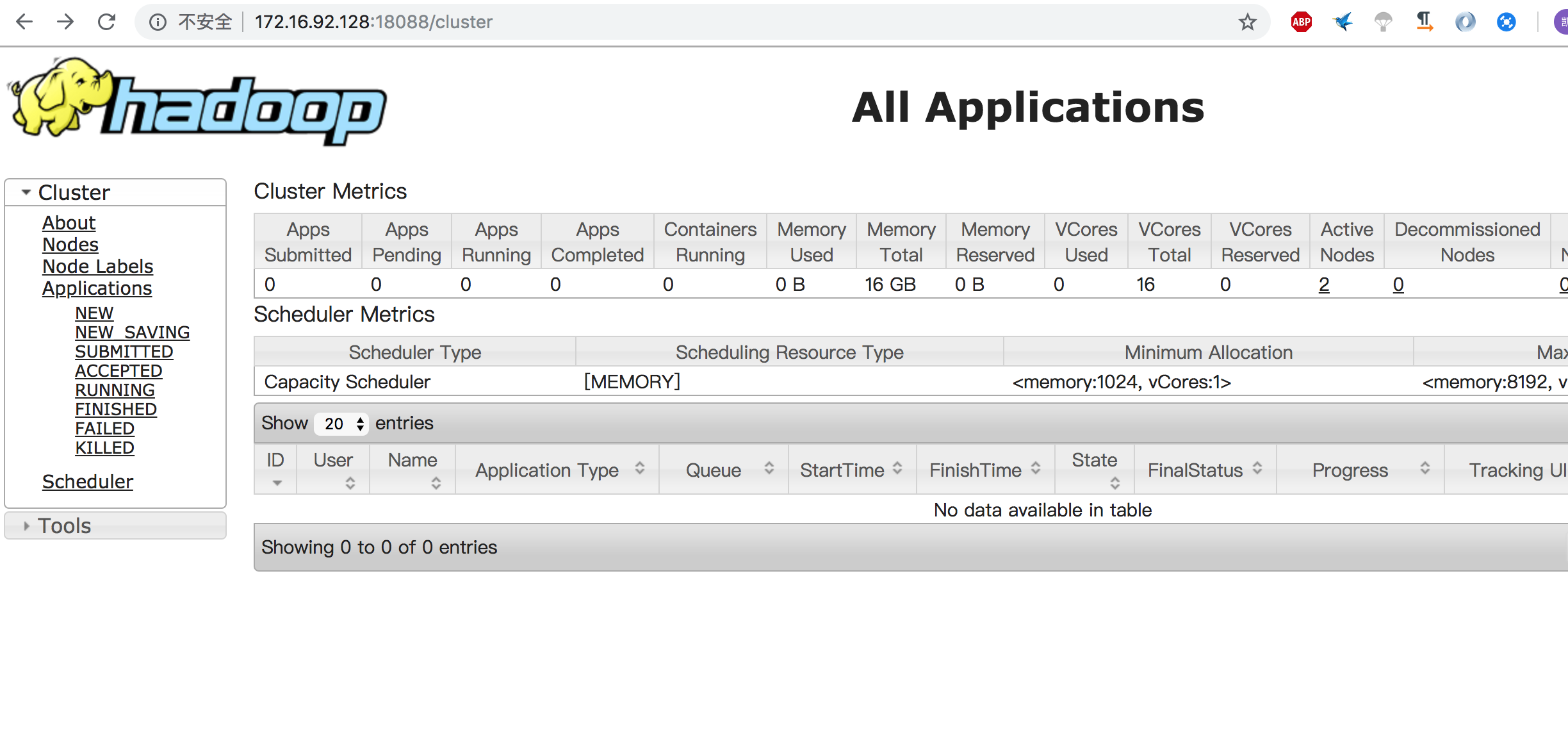

View the cluster status in the web UI

http://172.16.92.128:18088/cluster

Run a test job

hadoop jar /usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar pi 10 10

19/03/20 20:42:30 INFO mapreduce.Job: map 0% reduce 0%

19/03/20 20:42:51 INFO mapreduce.Job: map 20% reduce 0%

19/03/20 20:43:05 INFO mapreduce.Job: map 20% reduce 7%

19/03/20 20:45:43 INFO mapreduce.Job: map 40% reduce 7%

19/03/20 20:45:44 INFO mapreduce.Job: map 50% reduce 7%

19/03/20 20:45:47 INFO mapreduce.Job: map 60% reduce 7%

19/03/20 20:45:48 INFO mapreduce.Job: map 100% reduce 7%

19/03/20 20:45:49 INFO mapreduce.Job: map 100% reduce 33%

19/03/20 20:45:50 INFO mapreduce.Job: map 100% reduce 100%

Job Finished in 218.298 seconds

Configuration parameter details
