Running the official TVM demo, saving the converted TVM model, then reloading it and running inference

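I worked in Jupyter Notebook, so the code below is split across cells. In any other environment, just concatenate all the snippets into one script and run it.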

Official documentation

1. Imports

```python
# tvm, relay
import tvm
from tvm import te
from tvm import relay

# os and numpy
import numpy as np
import os.path

# Tensorflow imports
import tensorflow as tf

try:
    tf_compat_v1 = tf.compat.v1
except (ImportError, AttributeError):
    # A missing tf.compat.v1 raises AttributeError, not ImportError.
    tf_compat_v1 = tf

# Tensorflow utility functions
import tvm.relay.testing.tf as tf_testing
from tensorflow.keras.datasets import mnist  # used later for my own MNIST model
from tensorflow.python.platform import gfile
```

2. Set the download URLs and configuration parameters (a CPU target, compiled with LLVM)

```python
repo_base = 'https://github.com/dmlc/web-data/raw/master/tensorflow/models/InceptionV1/'

# Test image
img_name = 'elephant-299.jpg'
image_url = os.path.join(repo_base, img_name)

# Please refer to docs/frontend/tensorflow.md for more details on the
# various models supported from TensorFlow.
model_name = 'classify_image_graph_def-with_shapes.pb'
model_url = os.path.join(repo_base, model_name)

# Image label map
map_proto = 'imagenet_2012_challenge_label_map_proto.pbtxt'
map_proto_url = os.path.join(repo_base, map_proto)

# Human-readable text for labels
label_map = 'imagenet_synset_to_human_label_map.txt'
label_map_url = os.path.join(repo_base, label_map)

# Target settings
# Use these commented settings to build for CUDA:
# target = 'cuda'
# target_host = 'llvm'
# layout = "NCHW"
# ctx = tvm.gpu(0)
target = 'llvm'
target_host = 'llvm'
layout = None
ctx = tvm.context(target, 0)  # same as tvm.cpu(0); newer TVM uses tvm.device(target, 0)
```
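The URLs above are built with `os.path.join`, which works here only because `repo_base` ends with `/`. A minimal sketch of the same step using `urllib.parse.urljoin`, the URL-specific tool, plus the CPU/CUDA choice as a plain dict; `build_urls` and `target_settings` are hypothetical helpers, not part of TVM.

```python
from urllib.parse import urljoin

# Base URL from step 2. urljoin appends the file name only when the base
# ends with "/"; otherwise it would replace the last path segment.
REPO_BASE = "https://github.com/dmlc/web-data/raw/master/tensorflow/models/InceptionV1/"

FILES = {
    "image": "elephant-299.jpg",
    "model": "classify_image_graph_def-with_shapes.pb",
    "map_proto": "imagenet_2012_challenge_label_map_proto.pbtxt",
    "label_map": "imagenet_synset_to_human_label_map.txt",
}


def build_urls(base, files):
    """Return {key: full URL} for every file name in `files` (hypothetical helper)."""
    if not base.endswith("/"):
        base += "/"
    return {key: urljoin(base, name) for key, name in files.items()}


def target_settings(use_cuda=False):
    """The target choices from step 2 as a dict (hypothetical helper)."""
    if use_cuda:
        return {"target": "cuda", "target_host": "llvm", "layout": "NCHW"}
    return {"target": "llvm", "target_host": "llvm", "layout": None}


if __name__ == "__main__":
    for key, url in build_urls(REPO_BASE, FILES).items():
        print(key, url)
    print(target_settings())
```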

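3. Download the required resources. If a download fails, follow the error message to download the files manually and put them in the corresponding directory.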

```python
from tvm.contrib.download import download_testdata

img_path = download_testdata(image_url, img_name, module='data')
model_path = download_testdata(model_url, model_name, module=['tf', 'InceptionV1'])
map_proto_path = download_testdata(map_proto_url, map_proto, module='data')
label_path = download_testdata(label_map_url, label_map, module='data')
print(model_path)
```
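For the manual-download fallback, it helps to know where each file is expected. A sketch, assuming TVM's `download_testdata` caches files under `~/.tvm_test_data/<module>/<file>` and skips the download when the file already exists (check this against the path printed by `print(model_path)` on your TVM version). `expected_cache_path` and `missing_files` are hypothetical helpers.

```python
import os

# Assumption: default cache root used by tvm.contrib.download.download_testdata.
DEFAULT_ROOT = os.path.join(os.path.expanduser("~"), ".tvm_test_data")


def expected_cache_path(file_name, module=None, root=DEFAULT_ROOT):
    """Where a manually downloaded file should be placed (hypothetical helper).

    `module` mirrors download_testdata's argument: None, a string, or a list
    of path components such as ['tf', 'InceptionV1'].
    """
    if module is None:
        parts = []
    elif isinstance(module, str):
        parts = [module]
    else:
        parts = list(module)
    return os.path.join(root, *parts, file_name)


def missing_files(specs, root=DEFAULT_ROOT):
    """Expected paths of all (file_name, module) pairs not yet on disk."""
    paths = [expected_cache_path(name, module, root) for name, module in specs]
    return [p for p in paths if not os.path.isfile(p)]


if __name__ == "__main__":
    specs = [
        ("elephant-299.jpg", "data"),
        ("classify_image_graph_def-with_shapes.pb", ["tf", "InceptionV1"]),
        ("imagenet_2012_challenge_label_map_proto.pbtxt", "data"),
        ("imagenet_synset_to_human_label_map.txt", "data"),
    ]
    for path in missing_files(specs):
        print("download manually to:", path)
```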

4. Load the model

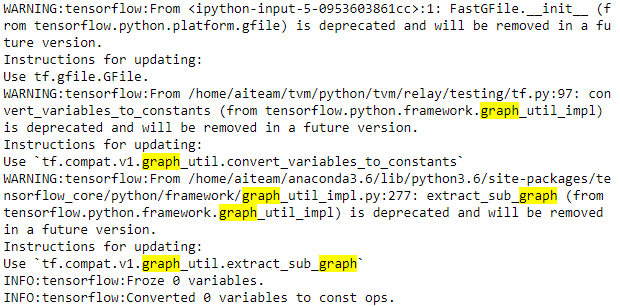

```python
with tf_compat_v1.gfile.FastGFile(model_path, 'rb') as f:
    graph_def = tf_compat_v1.GraphDef()
    graph_def.ParseFromString(f.read())
    graph = tf.import_graph_def(graph_def, name='')
    # Call the utility to import the graph definition into default graph.
    graph_def = tf_testing.ProcessGraphDefParam(graph_def)
    # Add shapes to the graph. 'softmax' is the output node name of this Inception graph.
    with tf_compat_v1.Session() as sess:
        graph_def = tf_testing.AddShapesToGraphDef(sess, 'softmax')
```

5. Preprocess the input image and import the model into Relay

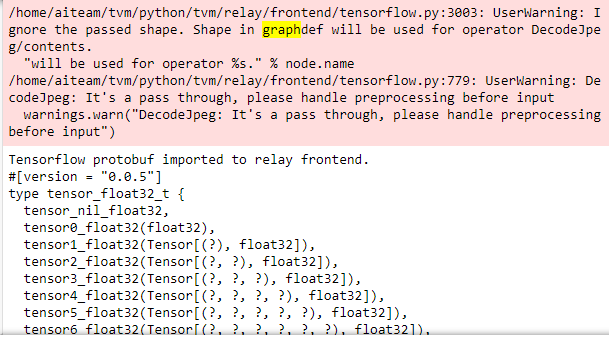

```python
from PIL import Image

image = Image.open(img_path).resize((299, 299))
x = np.array(image)

# Import the TensorFlow graph definition into the Relay frontend.
# Results:
#   mod:    Relay module for the given TensorFlow protobuf.
#   params: params converted from the TensorFlow params (tensor protobuf).
shape_dict = {'DecodeJpeg/contents': x.shape}
dtype_dict = {'DecodeJpeg/contents': 'uint8'}
mod, params = relay.frontend.from_tensorflow(graph_def, layout=layout, shape=shape_dict)

print("Tensorflow protobuf imported to relay frontend.")
print(mod.astext(show_meta_data=False))
```
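`shape_dict` is keyed by the graph's input node name and takes its value from `x.shape`. Note that PIL sizes are `(width, height)` while the numpy array is `(height, width, channels)`; with 299×299 this makes no difference, but a grayscale or RGBA image would give a shape other than `(299, 299, 3)` and probably not match what the Inception graph expects (`Image.open(img_path).convert('RGB')` avoids that). A small sketch of that bookkeeping; the mode table is an assumption covering only common PIL modes, and both helpers are hypothetical.

```python
# Assumption: channel count of np.array(img) for common PIL modes;
# "L" (8-bit grayscale) gives a 2-D array with no channel axis.
MODE_CHANNELS = {"L": None, "RGB": 3, "RGBA": 4}


def expected_array_shape(size, mode):
    """Shape of np.array(img) for a PIL image of `size` (width, height) and `mode`."""
    width, height = size
    if mode not in MODE_CHANNELS:
        raise ValueError("unhandled PIL mode: %r" % mode)
    channels = MODE_CHANNELS[mode]
    return (height, width) if channels is None else (height, width, channels)


def make_input_dicts(input_name, shape, dtype="uint8"):
    """Build the shape/dtype dicts keyed by the graph's input node name."""
    return {input_name: tuple(shape)}, {input_name: dtype}


if __name__ == "__main__":
    shape = expected_array_shape((299, 299), "RGB")
    shape_dict, dtype_dict = make_input_dicts("DecodeJpeg/contents", shape)
    print(shape_dict, dtype_dict)
```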

This prints some warnings, but they can be ignored.

6. Compile

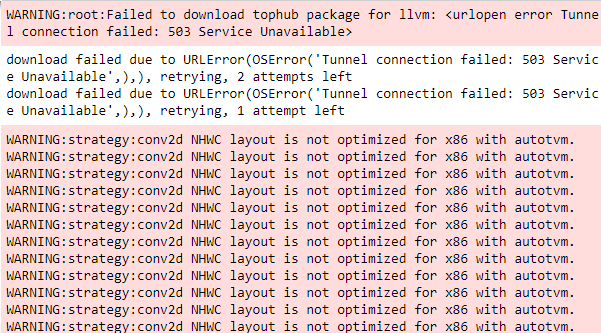

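```python
# opt_level=3: run the Relay optimization passes registered up to level 3.
# Newer TVM versions replace relay.build_config with tvm.transform.PassContext.
with relay.build_config(opt_level=3):
    lib = relay.build(mod, target=target, target_host=target_host, params=params)
```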

Again a lot of warnings, which can be ignored.

7. Run inference

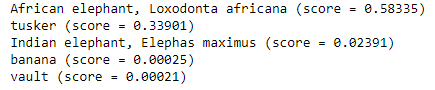

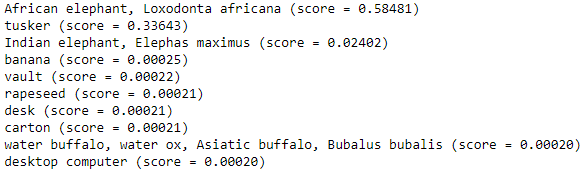

```python
from tvm.contrib import graph_runtime  # renamed graph_executor in newer TVM

dtype = 'uint8'
m = graph_runtime.GraphModule(lib["default"](ctx))
# set inputs
m.set_input("DecodeJpeg/contents", tvm.nd.array(x.astype(dtype)))
# execute
m.run()
# get outputs
tvm_output = m.get_output(0, tvm.nd.empty((1, 1008), 'float32'))
predictions = tvm_output.asnumpy()
predictions = np.squeeze(predictions)

# Creates node ID --> English string lookup.
node_lookup = tf_testing.NodeLookup(label_lookup_path=map_proto_path,
                                    uid_lookup_path=label_path)

# Print top 5 predictions from TVM output.
top_k = predictions.argsort()[-5:][::-1]
for node_id in top_k:
    human_string = node_lookup.id_to_string(node_id)
    score = predictions[node_id]
    print('%s (score = %.5f)' % (human_string, score))
```
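The top-k selection `predictions.argsort()[-5:][::-1]` picks the indices of the five largest scores, highest first. The same logic spelled out in plain Python, as a sketch; `labels` is a plain dict standing in for `tf_testing.NodeLookup` (hypothetical), and tied scores may come out in a different order than with numpy's argsort.

```python
def top_k(scores, k=5):
    """Indices of the k largest scores, highest first (like argsort()[-k:][::-1])."""
    k = min(k, len(scores))
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def format_predictions(scores, labels, k=5):
    """'label (score = x.xxxxx)' lines; `labels` maps index -> name (hypothetical)."""
    return ["%s (score = %.5f)" % (labels.get(i, "id %d" % i), scores[i])
            for i in top_k(scores, k)]


if __name__ == "__main__":
    scores = [0.1, 0.5, 0.05, 0.3, 0.05]
    for line in format_predictions(scores, {1: "elephant"}, k=2):
        print(line)
```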

Inference works, so the conversion succeeded.

8. Save the model

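```python
# utils.tempdir().relpath(name) joins `name` onto a temporary directory; with an
# absolute path like this one the temp directory is ignored anyway, so write to
# the target path directly. The parent directory must already exist.
path_lib = "/home/aiteam/tiwang/tvm_code/inceptionV2.1_lib.tar"
lib.export_library(path_lib)
```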
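Why `temp.relpath("/home/...")` still saved to the home directory: assuming `relpath` is a thin `os.path.join(temp_dir, name)` wrapper (how I read TVM's contrib utils), `os.path.join` discards every earlier component once it meets an absolute one. A sketch of that behavior plus a hypothetical `export_path` helper that makes the output directory explicit and persistent.

```python
import os
import tempfile


def relpath_like_tvm(temp_dir, name):
    # Assumption: mirrors TVM's TempDirectory.relpath(name).
    return os.path.join(temp_dir, name)


def export_path(out_dir, file_name="model_lib.tar"):
    """Explicit, persistent export path; creates the directory if needed (hypothetical)."""
    os.makedirs(out_dir, exist_ok=True)
    return os.path.join(out_dir, file_name)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Absolute second argument: the temp dir is dropped entirely.
        print(relpath_like_tvm(tmp, "/home/user/model_lib.tar"))  # on Linux/macOS: /home/user/model_lib.tar
        # Relative name: stays inside the temp dir, which is deleted afterwards.
        print(relpath_like_tvm(tmp, "model_lib.tar"))
```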

Saved successfully.

9. Reload the model and run inference

```python
# Load the exported archive; loaded_lib["default"] builds the graph module from it.
loaded_lib = tvm.runtime.load_module(path_lib)
input_data = tvm.nd.array(x.astype(dtype))
mm = graph_runtime.GraphModule(loaded_lib["default"](ctx))
# The graph input is named "DecodeJpeg/contents". mm.run(data=input_data) would
# target an input called "data", which does not exist, so set it by its real name.
mm.set_input("DecodeJpeg/contents", input_data)
mm.run()
out_deploy = mm.get_output(0, tvm.nd.empty((1, 1008), 'float32'))
predictions = out_deploy.asnumpy()
predictions = np.squeeze(predictions)

# Creates node ID --> English string lookup.
node_lookup = tf_testing.NodeLookup(label_lookup_path=map_proto_path,
                                    uid_lookup_path=label_path)

# Print top 10 predictions from TVM output.
top_k = predictions.argsort()[-10:][::-1]
for node_id in top_k:
    human_string = node_lookup.id_to_string(node_id)
    score = predictions[node_id]
    print('%s (score = %.5f)' % (human_string, score))
```
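Passing inputs to `run()` as keyword arguments only works when each keyword matches a graph input name, and in the TVM versions I have used an unmatched name is skipped with a warning rather than raising. A hypothetical guard that fails loudly instead; with TVM you would call `check_inputs(inputs, ["DecodeJpeg/contents"])` before `mm.run(**inputs)`.

```python
def check_inputs(provided, expected_names):
    """Validate keyword inputs before run(**inputs) (hypothetical guard).

    Raises KeyError for names that are not graph inputs and returns the
    graph inputs that were not provided, sorted.
    """
    expected = set(expected_names)
    unknown = sorted(set(provided) - expected)
    if unknown:
        raise KeyError("unknown input name(s) %s; graph inputs are %s"
                       % (unknown, sorted(expected)))
    return sorted(expected - set(provided))


if __name__ == "__main__":
    graph_inputs = ["DecodeJpeg/contents"]
    print(check_inputs({"DecodeJpeg/contents": None}, graph_inputs))  # []
    try:
        check_inputs({"data": None}, graph_inputs)
    except KeyError as err:
        print("rejected:", err)
```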

The whole pipeline works.

However, converting my own models still runs into many problems. First, a demo model taken from tf-serving fails with a decode error at step 4.

I changed the loading code following this StackOverflow question:

https://stackoverflow.com/questions/61883290/to-load-pb-file-decodeerror-error-parsing-message

```python
from tensorflow.core.protobuf import saved_model_pb2
from tensorflow.python.util import compat

# model_dir, model_name: location of the tf-serving model
with tf_compat_v1.gfile.FastGFile(os.path.join(model_dir, model_name), 'rb') as f:
    data = compat.as_bytes(f.read())
    # Parse as a SavedModel and take the GraphDef of its first MetaGraph.
    saved_model = saved_model_pb2.SavedModel()
    saved_model.ParseFromString(data)
    graph_def = saved_model.meta_graphs[0].graph_def
    # graph_def = tf_compat_v1.GraphDef()
    # graph_def.ParseFromString(f.read())
    graph = tf.import_graph_def(graph_def, name='')
    # Call the utility to import the graph definition into default graph.
    graph_def = tf_testing.ProcessGraphDefParam(graph_def)
    # Add shapes to the graph.
    with tf_compat_v1.Session() as sess:
        graph_def = tf_testing.AddShapesToGraphDef(sess, 'softmax')
```
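Which loader a `.pb` needs depends on what it contains: a tf-serving export is a SavedModel directory (`<model_dir>/<version>/saved_model.pb` plus a `variables/` subdirectory holding the weights), while the demo's file is a single frozen GraphDef. A heuristic sketch based only on that file layout; `classify_pb` is hypothetical and does not inspect the protobuf contents. Because a SavedModel keeps its weights in `variables/`, its `graph_def` may still contain variable ops rather than frozen constants.

```python
import os


def classify_pb(path):
    """Guess whether a path needs SavedModel or GraphDef parsing (heuristic).

    - A directory must contain 'saved_model.pb' and is treated as a SavedModel.
    - A file named 'saved_model.pb' is treated as a SavedModel.
    - Any other .pb file is treated as a (frozen) GraphDef.
    """
    if os.path.isdir(path):
        if os.path.isfile(os.path.join(path, "saved_model.pb")):
            return "saved_model"
        raise FileNotFoundError("no saved_model.pb in %s" % path)
    if os.path.basename(path) == "saved_model.pb":
        return "saved_model"
    return "graph_def"


if __name__ == "__main__":
    print(classify_pb("classify_image_graph_def-with_shapes.pb"))  # graph_def
```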

It now decodes correctly, but a later step still fails:

AssertionError: softmax is not in graph

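The message says that the output node name passed to AddShapesToGraphDef ('softmax', the output node of the Inception demo graph) does not exist in this model's graph. I still need to read the TensorFlow source to properly understand Graph and GraphDef.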

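I also wrote an MNIST model of my own. It loads fine, but it still fails at the softmax step with the same error. Based on other blog posts, my first guess was that the decode error comes from the function used to save the model: the file has the same format but a different encoding, since the StackOverflow fix calls

compat.as_bytes(f.read())

which looks like a format conversion. On closer inspection, though, compat.as_bytes returns bytes unchanged, and f.read() in 'rb' mode already returns bytes, so that call is not what fixes the error. The difference is the protobuf message type: tf-serving exports a SavedModel (saved_model.pb), while the demo code parses a bare GraphDef, so the file has to be parsed with saved_model_pb2.SavedModel() and the graph taken from meta_graphs[0].graph_def.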
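Whether `compat.as_bytes(f.read())` converts anything can be checked with a simplified stand-in. This sketch assumes it mirrors `tensorflow.python.util.compat.as_bytes` for `bytes`, `bytearray` and `str` inputs; it is not TensorFlow's code. Bytes read in `'rb'` mode come back as the very same object.

```python
def as_bytes_sketch(bytes_or_text, encoding="utf-8"):
    """Simplified stand-in for TF's compat.as_bytes (assumption, not the real code)."""
    if isinstance(bytes_or_text, bytearray):
        return bytes(bytes_or_text)
    if isinstance(bytes_or_text, str):
        return bytes_or_text.encode(encoding)
    if isinstance(bytes_or_text, bytes):
        return bytes_or_text  # already bytes: returned unchanged
    raise TypeError("Expected binary or unicode string, got %r" % (bytes_or_text,))


if __name__ == "__main__":
    raw = b"\x08\x01\x12\x00"  # arbitrary bytes, like f.read() in 'rb' mode
    print(as_bytes_sketch(raw) is raw)  # True
```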