Converting the dubbo-demo-web project to run on Tomcat

Tomcat official website

Preparing the Tomcat base image

Preparing the Tomcat binary package

On the operations host HDSS7-200.host.com (Tomcat 8 download link):

Working directory: /opt/src

    [root@hdss7-200 src]# ls -l|grep tomcat
    -rw-r--r-- 1 root root 9690027 Apr 10 22:57 apache-tomcat-8.5.40.tar.gz
    [root@hdss7-200 src]# mkdir -p /data/dockerfile/tomcat && tar xf apache-tomcat-8.5.40.tar.gz -C /data/dockerfile/tomcat
    [root@hdss7-200 src]# cd /data/dockerfile/tomcat && rm -fr apache-tomcat-8.5.40/webapps/*

Basic Tomcat configuration

Disabling the AJP port

File: /data/dockerfile/tomcat/apache-tomcat-8.5.40/conf/server.xml

    <!-- <Connector port="8009" protocol="AJP/1.3" redirectPort="8443" /> -->

Configuring logging

Remove the 3manager and 4host-manager entries from the handlers list:

File: /data/dockerfile/tomcat/apache-tomcat-8.5.40/conf/logging.properties

    handlers = 1catalina.org.apache.juli.AsyncFileHandler, 2localhost.org.apache.juli.AsyncFileHandler, java.util.logging.ConsoleHandler

Set the remaining handlers to the INFO level (same file):

    1catalina.org.apache.juli.AsyncFileHandler.level = INFO
    2localhost.org.apache.juli.AsyncFileHandler.level = INFO
    java.util.logging.ConsoleHandler.level = INFO

Comment out every setting that belongs to the 3manager and 4host-manager logs (same file):

    #3manager.org.apache.juli.AsyncFileHandler.level = FINE
    #3manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs
    #3manager.org.apache.juli.AsyncFileHandler.prefix = manager.
    #3manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8

    #4host-manager.org.apache.juli.AsyncFileHandler.level = FINE
    #4host-manager.org.apache.juli.AsyncFileHandler.directory = ${catalina.base}/logs
    #4host-manager.org.apache.juli.AsyncFileHandler.prefix = host-manager.
    #4host-manager.org.apache.juli.AsyncFileHandler.encoding = UTF-8
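
The logging edits above can also be scripted instead of made by hand. The following is only a minimal Python sketch under stated assumptions: the function name `trim_logging_properties` and the constant `DISABLED_PREFIXES` are hypothetical, and the script is not part of the original procedure. It filters the top-level `handlers` list and comments out any line whose key starts with `3manager.` or `4host-manager.`.

```python
# Handler prefixes this tutorial disables (manager and host-manager web apps).
DISABLED_PREFIXES = ("3manager.", "4host-manager.")


def trim_logging_properties(text: str) -> str:
    """Return logging.properties content with the manager handlers disabled."""
    out = []
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip() == "handlers":
            # Keep only the handlers that are not being disabled.
            kept = [h.strip() for h in value.split(",")
                    if not h.strip().startswith(DISABLED_PREFIXES)]
            out.append("handlers = " + ", ".join(kept))
        elif line.lstrip().startswith(DISABLED_PREFIXES):
            # Comment out per-handler settings such as level, directory, prefix.
            out.append("#" + line)
        else:
            out.append(line)
    return "\n".join(out)
```

A real run would read `conf/logging.properties`, pass its content through this function, and write the result back; review the output before building the image.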

Preparing the Dockerfile

File: /data/dockerfile/tomcat/Dockerfile

    FROM stanleyws/jre8:8u112
    RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
    ENV CATALINA_HOME /opt/tomcat
    ENV LANG zh_CN.UTF-8
    ADD apache-tomcat-8.5.40/ /opt/tomcat
    ADD config.yml /opt/prom/config.yml
    ADD jmx_javaagent-0.3.1.jar /opt/prom/jmx_javaagent-0.3.1.jar
    WORKDIR /opt/tomcat
    ADD entrypoint.sh /entrypoint.sh
    CMD ["/entrypoint.sh"]

vi config.yml

    ---
    rules:
      - pattern: '.*'

Building and pushing the image

    [root@hdss7-200 tomcat]# docker build . -t harbor.od.com/base/tomcat:v8.5.40
    Sending build context to Docker daemon 9.502 MB
    Step 1 : FROM stanleyws/jre8:8u112
     ---> fa3a085d6ef1
    Step 2 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
     ---> Using cache
     ---> 5da5ab0b1a48
    Step 3 : ENV CATALINA_HOME /opt/tomcat
     ---> Running in edf8f2bbeae3
     ---> 05c7b829c8ab
    Removing intermediate container edf8f2bbeae3
    Step 4 : ENV LANG zh_CN.UTF-8
     ---> Running in 50516133f65b
     ---> 421d67c4c188
    Removing intermediate container 50516133f65b
    Step 5 : ADD apache-tomcat-8.5.40/ /opt/tomcat
     ---> 460a5d0ef93b
    Removing intermediate container 0be2b4897d23
    Step 6 : ADD config.yml /opt/prom/config.yml
     ---> 04c9d4679f0d
    Removing intermediate container 0e88a4ed2088
    Step 7 : ADD jmx_javaagent-0.3.1.jar /opt/prom/jmx_javaagent-0.3.1.jar
     ---> 06e4a78e9cbf
    Removing intermediate container 1e6b2919be00
    Step 8 : WORKDIR /opt/tomcat
     ---> Running in a51676d15a01
     ---> e8d164847eb3
    Removing intermediate container a51676d15a01
    Step 9 : ADD entrypoint.sh /entrypoint.sh
     ---> 0522db421536
    Removing intermediate container 7f0be318fde8
    Step 10 : CMD /entrypoint.sh
     ---> Running in c2df6e511c00
     ---> 8d735515bb42
    Removing intermediate container c2df6e511c00
    Successfully built 8d735515bb42
    [root@hdss7-200 tomcat]# docker push harbor.od.com/base/tomcat:v8.5.40
    The push refers to a repository [harbor.od.com/base/tomcat]
    498eaadf86a8: Pushed
    fab679acf269: Pushed
    0e65f86c3a75: Pushed
    938c8a5617cc: Pushed
    052016a734be: Mounted from app/dubbo-demo-web
    0690f10a63a5: Mounted from app/dubbo-demo-web
    c843b2cf4e12: Mounted from app/dubbo-demo-web
    fddd8887b725: Mounted from base/jre8
    42052a19230c: Mounted from base/jre8
    8d4d1ab5ff74: Mounted from base/jre8
    v8.5.40: digest: sha256:407c6abd7c4fa5119376efa71090b49637d7a52ef2fc202f4019ab4c91f6dc50 size: 2409

Modifying the dubbo-demo-web project

Editing dubbo-client/pom.xml

Switch the packaging to war, exclude the embedded Tomcat starter, and add the servlet API with provided scope.

File: /d/workspace/dubbo-demo-web/dubbo-client/pom.xml

    <packaging>war</packaging>

    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-web</artifactId>
      <exclusions>
        <exclusion>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-starter-tomcat</artifactId>
        </exclusion>
      </exclusions>
    </dependency>

    <dependency>
      <groupId>org.apache.tomcat</groupId>
      <artifactId>tomcat-servlet-api</artifactId>
      <version>8.0.36</version>
      <scope>provided</scope>
    </dependency>

Editing Application.java

File: /d/workspace/dubbo-demo-web/dubbo-client/src/main/java/com/od/dubbotest/Application.java

    import org.springframework.boot.autoconfigure.EnableAutoConfiguration;
    import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;
    import org.springframework.context.annotation.ImportResource;

    @ImportResource(value={"classpath*:spring-config.xml"})
    @EnableAutoConfiguration(exclude={DataSourceAutoConfiguration.class})

Creating ServletInitializer.java

File: /d/workspace/dubbo-demo-web/dubbo-client/src/main/java/com/od/dubbotest/ServletInitializer.java

    package com.od.dubbotest;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.builder.SpringApplicationBuilder;
    import org.springframework.boot.context.web.SpringBootServletInitializer;
    import com.od.dubbotest.Application;

    public class ServletInitializer extends SpringBootServletInitializer {

        @Override
        protected SpringApplicationBuilder configure(SpringApplicationBuilder builder) {
            return builder.sources(Application.class);
        }
    }

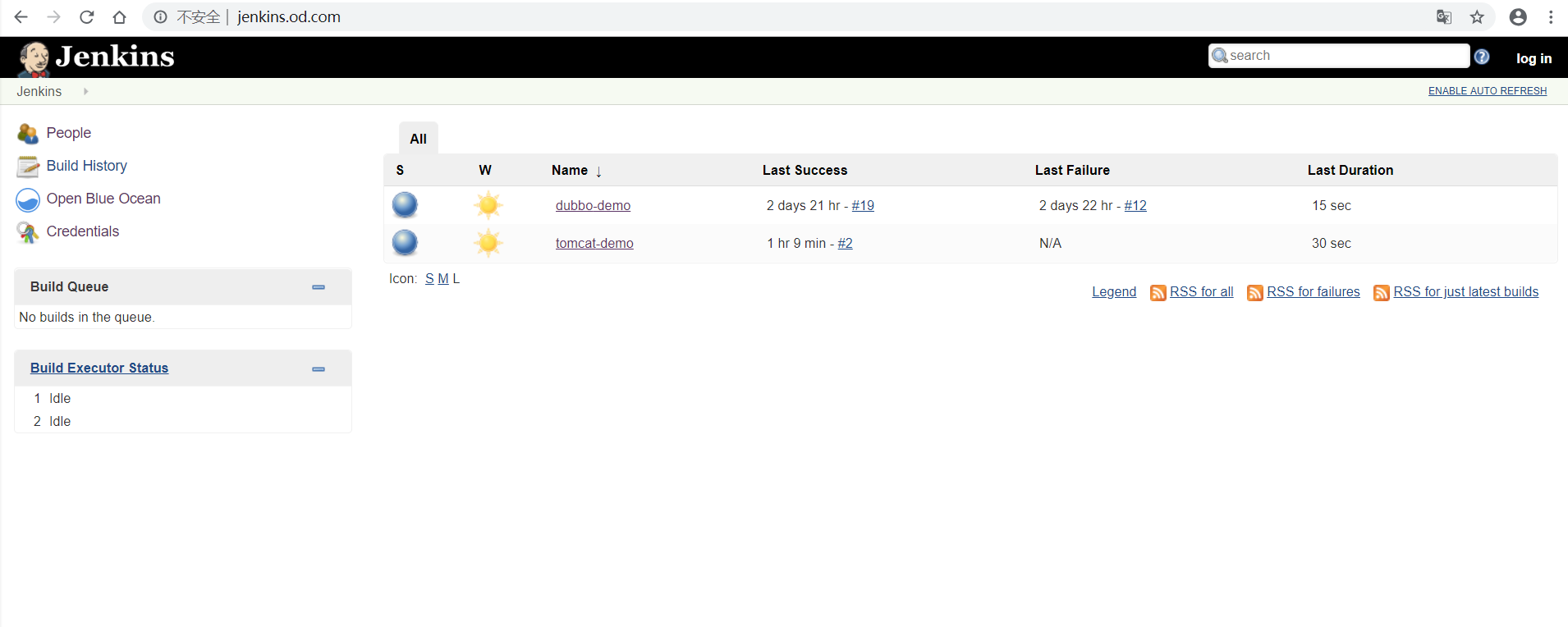

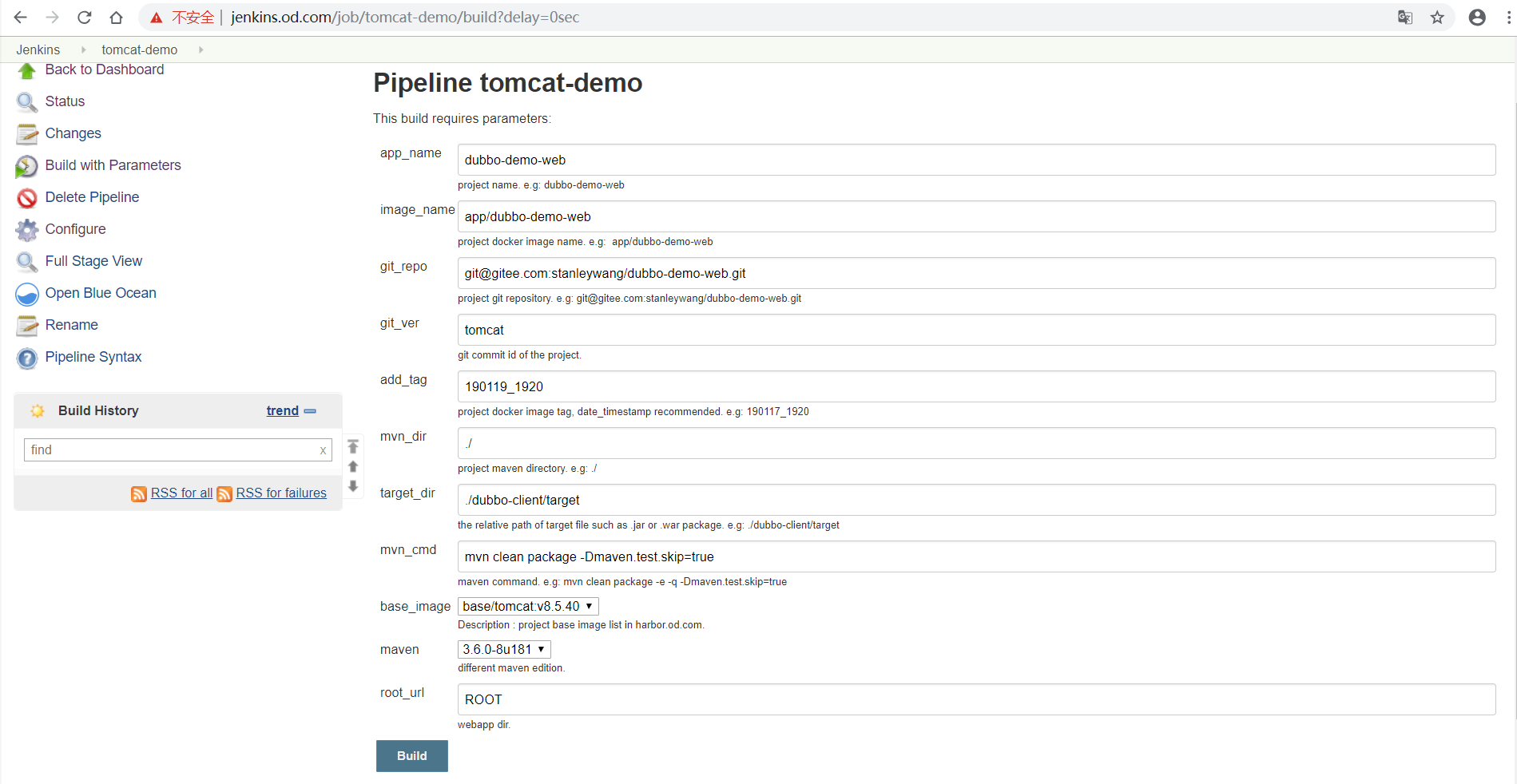

Creating a new Jenkins pipeline

Configuring the new job

Add Parameter -> String Parameter

Name : app_name

Add Parameter -> String Parameter

Name : image_name

Add Parameter -> String Parameter

Name : git_repo (for example git@gitee.com:stanleywang/dubbo-demo-web.git)

Add Parameter -> String Parameter

Name : git_ver

Add Parameter -> String Parameter

Name : add_tag

Add Parameter -> String Parameter

Name : mvn_dir

Add Parameter -> String Parameter

Name : target_dir

Add Parameter -> String Parameter

Name : mvn_cmd

Add Parameter -> Choice Parameter

Name : base_image

base/tomcat:v7.0.94

base/tomcat:v8.5.40

base/tomcat:v9.0.17

Add Parameter -> Choice Parameter

Name : maven

3.6.0-8u181

3.2.5-6u025

2.2.1-6u025

Add Parameter -> String Parameter

Name : root_url

Pipeline Script

    pipeline {
      agent any
        stages {
          stage('pull') { //get project code from repo
            steps {
              sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"
            }
          }
          stage('build') { //exec mvn cmd
            steps {
              sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"
            }
          }
          stage('unzip') { //unzip target/*.war -d target/project_dir
            steps {
              sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && unzip *.war -d ./project_dir"
            }
          }
          stage('image') { //build image and push to registry
            steps {
              writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}
    ADD ${params.target_dir}/project_dir /opt/tomcat/webapps/${params.root_url}"""
              sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"
            }
          }
        }
    }
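
To see what the image stage produces for a given set of job parameters, the string templating can be reproduced outside Jenkins. This is a hedged Python sketch, not part of the pipeline itself: the function names `render_dockerfile` and `image_ref` are hypothetical, and the sample parameter values in the usage note are assumptions.

```python
def render_dockerfile(base_image: str, target_dir: str, root_url: str) -> str:
    """Mirror the two-line Dockerfile written by the pipeline's writeFile step."""
    return (
        f"FROM harbor.od.com/{base_image}\n"
        f"ADD {target_dir}/project_dir /opt/tomcat/webapps/{root_url}"
    )


def image_ref(image_name: str, git_ver: str, add_tag: str) -> str:
    """Mirror the image reference used by docker build and docker push."""
    return f"harbor.od.com/{image_name}:{git_ver}_{add_tag}"


if __name__ == "__main__":
    # Sample values are assumptions chosen for illustration only.
    print(render_dockerfile("base/tomcat:v8.5.40", "./dubbo-client/target", "ROOT"))
    print(image_ref("app/dubbo-demo-web", "tomcat", "190119_1920"))
```

With `git_ver` set to `tomcat` and `add_tag` set to `190119_1920`, the second function yields an image reference of the same shape as the one used later in the dashboard step.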

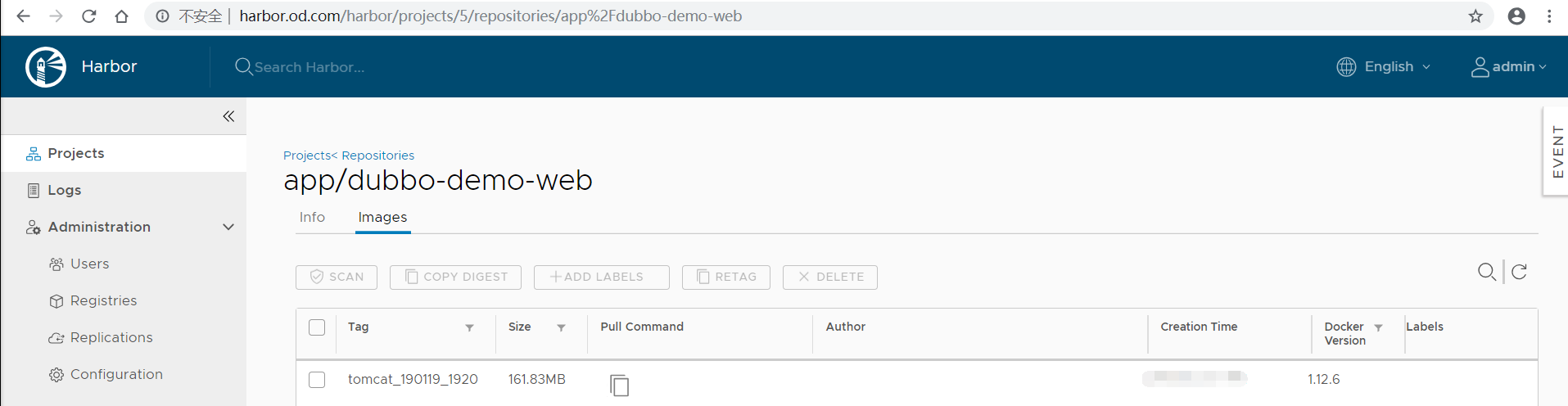

Building the application image

Run the CI build in Jenkins, then check the Harbor registry for the new image.

Preparing the Kubernetes resource manifests

No separate resource manifests need to be prepared this time.

Applying the resource manifests

In the Kubernetes dashboard, change the image value directly to the image built by Jenkins: harbor.od.com/app/dubbo-demo-web:tomcat_190119_1920

Browser access

http://demo.od.com?hello=wangdao

Checking that Tomcat is running

On any compute node:

    [root@hdss7-22 ~]# kubectl get pods -n app
    NAME                             READY   STATUS    RESTARTS   AGE
    dubbo-demo-consumer-v025-htfx8   2/2     Running   0          1h
    [root@hdss7-22 ~]# kubectl exec -ti dubbo-demo-consumer-v025-htfx8 -n app bash
    dubbo-demo-consumer-v025-htfx8:/opt/tomcat# ls -lsr logs/
    total 16
    -rw-r----- 1 root root 7435 Jan 19 19:26 catalina.2019-01-19.log
    -rw-r----- 1 root root  629 Jan 19 19:26 localhost.2019-01-19.log
    -rw-r----- 1 root root  249 Jan 15 19:27 localhost_access_log.2019-01-19.txt

Monitoring the Kubernetes cluster with Prometheus and Grafana

Deploying kube-state-metrics

On the operations host HDSS7-200.host.com:

Preparing the kube-state-metrics image

Official kube-state-metrics repository on quay.io

    [root@hdss7-200 ~]# docker pull quay.io/coreos/kube-state-metrics:v1.5.0
    v1.5.0: Pulling from coreos/kube-state-metrics
    cd784148e348: Pull complete
    f622528a393e: Pull complete
    Digest: sha256:b7a3143bd1eb7130759c9259073b9f239d0eeda09f5210f1cd31f1a530599ea1
    Status: Downloaded newer image for quay.io/coreos/kube-state-metrics:v1.5.0
    [root@hdss7-200 ~]# docker tag 91599517197a harbor.od.com/k8s/kube-state-metrics:v1.5.0
    [root@hdss7-200 ~]# docker push harbor.od.com/k8s/kube-state-metrics:v1.5.0
    docker push harbor.od.com/k8s/kube-state-metrics:v1.5.0
    The push refers to a repository [harbor.od.com/k8s/kube-state-metrics]
    5b3c36501a0a: Pushed
    7bff100f35cb: Pushed
    v1.5.0: digest: sha256:0d9bea882f25586543254d9ceeb54703eb6f8f94c0dd88875df216b6a0f60589 size: 739

Preparing the resource manifests

vi /data/k8s-yaml/kube-state-metrics/rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: kube-state-metrics
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: kube-state-metrics
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - secrets
      - nodes
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
      verbs:
      - list
      - watch
    - apiGroups:
      - extensions
      resources:
      - daemonsets
      - deployments
      - replicasets
      verbs:
      - list
      - watch
    - apiGroups:
      - apps
      resources:
      - statefulsets
      verbs:
      - list
      - watch
    - apiGroups:
      - batch
      resources:
      - cronjobs
      - jobs
      verbs:
      - list
      - watch
    - apiGroups:
      - autoscaling
      resources:
      - horizontalpodautoscalers
      verbs:
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: kube-state-metrics
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
    - kind: ServiceAccount
      name: kube-state-metrics
      namespace: kube-system

vi /data/k8s-yaml/kube-state-metrics/deployment.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "2"
      labels:
        grafanak8sapp: "true"
        app: kube-state-metrics
      name: kube-state-metrics
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          grafanak8sapp: "true"
          app: kube-state-metrics
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            grafanak8sapp: "true"
            app: kube-state-metrics
        spec:
          containers:
          - image: harbor.od.com/k8s/kube-state-metrics:v1.5.0
            name: kube-state-metrics
            ports:
            - containerPort: 8080
              name: http-metrics
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /healthz
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 5
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 5
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          serviceAccount: kube-state-metrics
          serviceAccountName: kube-state-metrics

Applying the resource manifests

On any compute node:

    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/kube-state-metrics/rbac.yaml
    serviceaccount/kube-state-metrics created
    clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
    clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/kube-state-metrics/deployment.yaml
    deployment.extensions/kube-state-metrics created

Checking that the pod started

    [root@hdss7-21 ~]# kubectl get pods -n kube-system|grep kube-state-metrics
    kube-state-metrics-645bd94c55-wdtqh   1/1   Running   0   94s

Deploying node-exporter

On the operations host HDSS7-200.host.com:

Preparing the node-exporter image

Official node-exporter repository on Docker Hub; official node-exporter repository on GitHub

    [root@hdss7-200 ~]# docker pull prom/node-exporter:v0.15.0
    v0.15.0: Pulling from prom/node-exporter
    0de338cf4258: Pull complete
    f508012419d8: Pull complete
    d764f7880123: Pull complete
    Digest: sha256:c390c8fea4cd362a28ad5070aedd6515aacdfdffd21de6db42ead05e332be5a9
    Status: Downloaded newer image for prom/node-exporter:v0.15.0
    [root@hdss7-200 ~]# docker tag b3e7f67a1480 harbor.od.com/k8s/node-exporter:v0.15.0
    [root@hdss7-200 ~]# docker push harbor.od.com/k8s/node-exporter:v0.15.0
    docker push harbor.od.com/k8s/node-exporter:v0.15.0
    The push refers to a repository [harbor.od.com/k8s/node-exporter]
    0bf893ee7433: Pushed
    17ab2671f87e: Pushed
    b8873621dfbc: Pushed
    v0.15.0: digest: sha256:4e13dd75f00a6114675ea3e62e61dbd79dcb2205e8f3bbe1f8f8ef2fd3e28113 size: 949

Preparing the resource manifests

File: /data/k8s-yaml/node-exporter/node-exporter-ds.yaml

    kind: DaemonSet
    apiVersion: extensions/v1beta1
    metadata:
      name: node-exporter
      namespace: kube-system
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      selector:
        matchLabels:
          daemon: "node-exporter"
          grafanak8sapp: "true"
      template:
        metadata:
          name: node-exporter
          labels:
            daemon: "node-exporter"
            grafanak8sapp: "true"
        spec:
          volumes:
          - name: proc
            hostPath:
              path: /proc
              type: ""
          - name: sys
            hostPath:
              path: /sys
              type: ""
          containers:
          - name: node-exporter
            image: harbor.od.com/k8s/node-exporter:v0.15.0
            args:
            - --path.procfs=/host_proc
            - --path.sysfs=/host_sys
            ports:
            - name: node-exporter
              hostPort: 9100
              containerPort: 9100
              protocol: TCP
            volumeMounts:
            - name: sys
              readOnly: true
              mountPath: /host_sys
            - name: proc
              readOnly: true
              mountPath: /host_proc
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          hostNetwork: true

Applying the resource manifests

On any compute node:

    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/node-exporter/node-exporter-ds.yaml
    daemonset.extensions/node-exporter created

Deploying cadvisor

On the operations host HDSS7-200.host.com:

Preparing the cadvisor image

Official cadvisor repository on Docker Hub; official cadvisor repository on GitHub

    [root@hdss7-200 ~]# docker pull google/cadvisor:v0.28.3
    v0.28.3: Pulling from google/cadvisor
    49388a8c9c86: Pull complete
    21c698e54ae5: Pull complete
    fafc7cbc1edf: Pull complete
    Digest: sha256:09c8d73c9b799d30777763b7029cfd8624b8a1bd33af652ec3b51a6b827d492a
    Status: Downloaded newer image for google/cadvisor:v0.28.3
    [root@hdss7-200 ~]# docker tag d60fd8aeb74c harbor.od.com/k8s/cadvisor:v0.28.3
    [root@hdss7-200 ~]# docker push !$
    docker push harbor.od.com/k8s/cadvisor:v0.28.3
    The push refers to a repository [harbor.od.com/k8s/cadvisor]
    daab541dbbf0: Pushed
    ba67c95cca3d: Pushed
    ef763da74d91: Pushed
    v0.28.3: digest: sha256:319812db86e7677767bf6a51ea63b5bdb17dc18ffa576eb6c8a667e899d1329d size: 951

Preparing the resource manifests

vi /data/k8s-yaml/cadvisor/daemonset.yaml

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: cadvisor
      namespace: kube-system
      labels:
        app: cadvisor
    spec:
      selector:
        matchLabels:
          name: cadvisor
      template:
        metadata:
          labels:
            name: cadvisor
        spec:
          hostNetwork: true
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          - key: enabledDiskSchedule
            value: "true"
            effect: NoSchedule
          containers:
          - name: cadvisor
            image: harbor.od.com/k8s/cadvisor:v0.28.3
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - name: rootfs
              mountPath: /rootfs
              readOnly: true
            - name: var-run
              mountPath: /var/run
              readOnly: true
            - name: sys
              mountPath: /sys
              readOnly: true
            - name: docker
              mountPath: /var/lib/docker
              readOnly: true
            ports:
              - name: http
                containerPort: 4194
                protocol: TCP
            readinessProbe:
              tcpSocket:
                port: 4194
              initialDelaySeconds: 5
              periodSeconds: 10
            args:
              - --housekeeping_interval=10s
              - --port=4194
          imagePullSecrets:
          - name: harbor
          terminationGracePeriodSeconds: 30
          volumes:
          - name: rootfs
            hostPath:
              path: /
          - name: var-run
            hostPath:
              path: /var/run
          - name: sys
            hostPath:
              path: /sys
          - name: docker
            hostPath:
              path: /data/docker

Adjusting the cgroup symlink on the compute nodes

On every compute node:

    [root@hdss7-21 ~]# mount -o remount,rw /sys/fs/cgroup/
    [root@hdss7-21 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct/ /sys/fs/cgroup/cpuacct,cpu
    [root@hdss7-21 ~]# ll /sys/fs/cgroup/ | grep cpu
    total 0
    lrwxrwxrwx 1 root root 11 Jan 28 22:41 cpu -> cpu,cpuacct
    lrwxrwxrwx 1 root root 11 Jan 28 22:41 cpuacct -> cpu,cpuacct
    lrwxrwxrwx 1 root root 27 May  5 11:15 cpuacct,cpu -> /sys/fs/cgroup/cpu,cpuacct/
    drwxr-xr-x 8 root root  0 Apr 26 11:06 cpu,cpuacct
    drwxr-xr-x 7 root root  0 Jan 28 22:41 cpuset

Applying the resource manifests

On any compute node:

    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/cadvisor/daemonset.yaml
    daemonset.apps/cadvisor created
    [root@hdss7-21 ~]# netstat -luntp|grep 4194
    tcp6       0      0 :::4194       :::*       LISTEN      49153/cadvisor

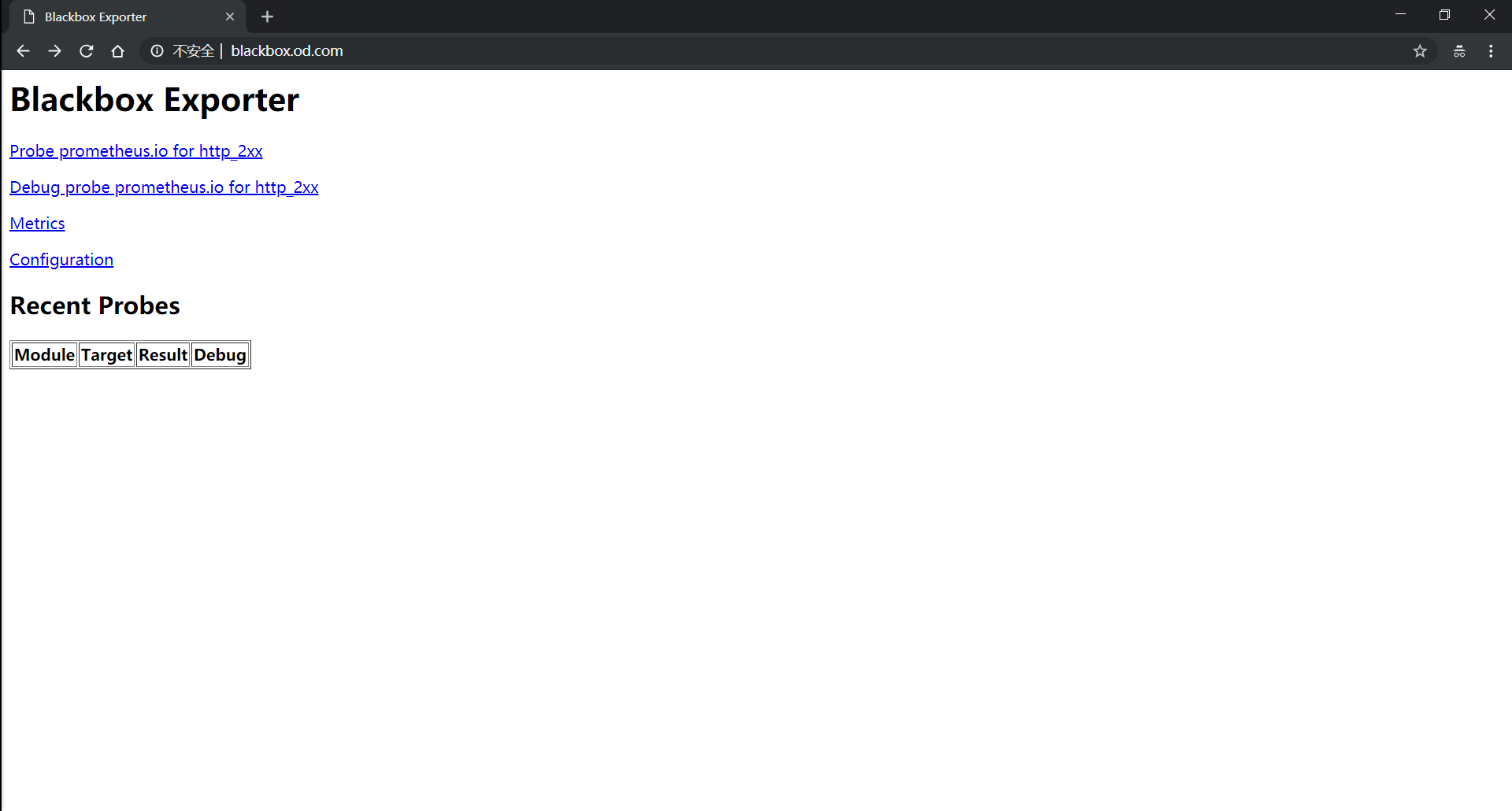

Deploying blackbox-exporter

On the operations host HDSS7-200.host.com:

Preparing the blackbox-exporter image

Official blackbox-exporter repository on Docker Hub; official blackbox-exporter repository on GitHub

    [root@hdss7-200 ~]# docker pull prom/blackbox-exporter:v0.14.0
    v0.14.0: Pulling from prom/blackbox-exporter
    697743189b6d: Pull complete
    f1989cfd335b: Pull complete
    8918f7b8f34f: Pull complete
    a128dce6256a: Pull complete
    Digest: sha256:c20445e0cc628fa4b227fe2f694c22a314beb43fd8297095b6ee6cbc67161336
    Status: Downloaded newer image for prom/blackbox-exporter:v0.14.0
    [root@hdss7-200 ~]# docker tag d3a00aea3a01 harbor.od.com/k8s/blackbox-exporter:v0.14.0
    [root@hdss7-200 ~]# docker push harbor.od.com/k8s/blackbox-exporter:v0.14.0
    docker push harbor.od.com/k8s/blackbox-exporter:v0.14.0
    The push refers to a repository [harbor.od.com/k8s/blackbox-exporter]
    256c4aa8ebe5: Pushed
    4b6cc55de649: Pushed
    986894c42222: Mounted from infra/prometheus
    adab5d09ba79: Mounted from infra/prometheus
    v0.14.0: digest: sha256:b127897cf0f67c47496d5cbb7ecb86c001312bddd04192f6319d09292e880a5f size: 1155

Preparing the resource manifests

vi /data/k8s-yaml/blackbox-exporter/configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app: blackbox-exporter
      name: blackbox-exporter
      namespace: kube-system
    data:
      blackbox.yml: |-
        modules:
          http_2xx:
            prober: http
            timeout: 2s
            http:
              valid_http_versions: ["HTTP/1.1", "HTTP/2"]
              valid_status_codes: [200,301,302]
              method: GET
              preferred_ip_protocol: "ip4"
          tcp_connect:
            prober: tcp
            timeout: 2s
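
Each module defined in this ConfigMap is selected per request through the `module` query parameter of the exporter's `/probe` endpoint, with the address to check passed as `target`. The sketch below only illustrates how such a URL is assembled; the function name `probe_url` is hypothetical, and the target address in the usage line is an assumed example rather than a value from this setup.

```python
from urllib.parse import urlencode

# Module names defined in the blackbox.yml above.
MODULES = ("http_2xx", "tcp_connect")


def probe_url(exporter: str, module: str, target: str) -> str:
    """Build the /probe URL that asks the exporter to check one target."""
    if module not in MODULES:
        raise ValueError(f"unknown module: {module}")
    query = urlencode({"module": module, "target": target})
    return f"http://{exporter}/probe?{query}"


if __name__ == "__main__":
    # 10.4.7.21:20880 is an assumed target used only for illustration.
    print(probe_url("blackbox.od.com", "tcp_connect", "10.4.7.21:20880"))
```

Opening such a URL in a browser once the exporter is reachable is a quick way to check a module by hand before Prometheus is wired up.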

vi /data/k8s-yaml/blackbox-exporter/deployment.yaml

    kind: Deployment
    apiVersion: extensions/v1beta1
    metadata:
      name: blackbox-exporter
      namespace: kube-system
      labels:
        app: blackbox-exporter
      annotations:
        deployment.kubernetes.io/revision: "1"
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: blackbox-exporter
      template:
        metadata:
          labels:
            app: blackbox-exporter
        spec:
          volumes:
          - name: config
            configMap:
              name: blackbox-exporter
              defaultMode: 420
          containers:
          - name: blackbox-exporter
            image: harbor.od.com/k8s/blackbox-exporter:v0.14.0
            args:
            - --config.file=/etc/blackbox_exporter/blackbox.yml
            - --log.level=debug
            - --web.listen-address=:9115
            ports:
            - name: blackbox-port
              containerPort: 9115
              protocol: TCP
            resources:
              limits:
                cpu: 200m
                memory: 256Mi
              requests:
                cpu: 100m
                memory: 50Mi
            volumeMounts:
            - name: config
              mountPath: /etc/blackbox_exporter
            readinessProbe:
              tcpSocket:
                port: 9115
              initialDelaySeconds: 5
              timeoutSeconds: 5
              periodSeconds: 10
              successThreshold: 1
              failureThreshold: 3
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always

vi /data/k8s-yaml/blackbox-exporter/service.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: blackbox-exporter
      namespace: kube-system
    spec:
      selector:
        app: blackbox-exporter
      ports:
        - protocol: TCP
          port: 9115
          name: http

vi /data/k8s-yaml/blackbox-exporter/ingress.yaml

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: blackbox-exporter
      namespace: kube-system
    spec:
      rules:
      - host: blackbox.od.com
        http:
          paths:
          - backend:
              serviceName: blackbox-exporter
              servicePort: 9115

Adding the DNS record

On HDSS7-11.host.com:

File: /var/named/od.com.zone

    blackbox 60 IN A 10.4.7.10

Applying the resource manifests

On any compute node:

    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/configmap.yaml
    configmap/blackbox-exporter created
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/deployment.yaml
    deployment.extensions/blackbox-exporter created
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/service.yaml
    service/blackbox-exporter created
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/ingress.yaml
    ingress.extensions/blackbox-exporter created

Browser access

http://blackbox.od.com

Deploying prometheus

On the operations host HDSS7-200.host.com:

Preparing the prometheus image

Official prometheus repository on Docker Hub; official prometheus repository on GitHub

    [root@hdss7-200 ~]# docker pull prom/prometheus:v2.9.1
    v2.9.1: Pulling from prom/prometheus
    697743189b6d: Pull complete
    f1989cfd335b: Pull complete
    b60c2f039ea7: Pull complete
    6a189f2c500c: Pull complete
    bd6be4aea906: Pull complete
    81b69caae2b5: Pull complete
    5e7226eda004: Pull complete
    564568254ec8: Pull complete
    9bd07902fc4b: Pull complete
    Digest: sha256:e02bb3dec47631b4d31cede2d35ff901d892b57f33144406ee7994e8c94fb2d7
    Status: Downloaded newer image for prom/prometheus:v2.9.1
    [root@hdss7-200 ~]# docker tag 4737a2d79d1a harbor.od.com/infra/prometheus:v2.9.1
    [root@hdss7-200 ~]# docker push harbor.od.com/infra/prometheus:v2.9.1
    The push refers to a repository [harbor.od.com/infra/prometheus]
    a67e5326fa35: Pushed
    02c0c0b3065f: Pushed
    b7b1f5015c12: Pushed
    38be466f60e1: Pushed
    9c3fb6c27da7: Pushed
    a06c616e78e9: Pushed
    05c0d5c2ae72: Pushed
    986894c42222: Pushed
    adab5d09ba79: Pushed
    v2.9.1: digest: sha256:2357e59541f5596dd90d9f4218deddecd9b4880c1e417a42592b00b30b47b0a9 size: 2198

Preparing the resource manifests

On the operations host HDSS7-200.host.com:

Working directory: /data/k8s-yaml

    [root@hdss7-200 k8s-yaml]# mkdir /data/k8s-yaml/prometheus && mkdir -p /data/nfs-volume/prometheus/etc && cd /data/k8s-yaml/prometheus

vi /data/k8s-yaml/prometheus/rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: prometheus
      namespace: infra
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: prometheus
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - nodes/metrics
      - services
      - endpoints
      - pods
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      verbs:
      - get
    - nonResourceURLs:
      - /metrics
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: infra

vi /data/k8s-yaml/prometheus/deployment.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "5"
      labels:
        name: prometheus
      name: prometheus
      namespace: infra
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 7
      selector:
        matchLabels:
          app: prometheus
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: prometheus
        spec:
          nodeName: 10.4.7.21
          containers:
          - image: harbor.od.com/infra/prometheus:v2.9.1
            args:
            - --config.file=/data/etc/prometheus.yml
            - --storage.tsdb.path=/data/prom-db
            - --storage.tsdb.retention=72h
            command:
            - /bin/prometheus
            name: prometheus
            ports:
            - containerPort: 9090
              protocol: TCP
            resources:
              limits:
                cpu: 500m
                memory: 2500Mi
              requests:
                cpu: 100m
                memory: 100Mi
            volumeMounts:
            - mountPath: /data
              name: data
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          securityContext:
            runAsUser: 0
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          serviceAccount: prometheus
          serviceAccountName: prometheus
          volumes:
          - name: data
            nfs:
              server: hdss7-200
              path: /data/nfs-volume/prometheus

vi /data/k8s-yaml/prometheus/service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: prometheus
      namespace: infra
    spec:
      ports:
      - port: 9090
        protocol: TCP
        name: prometheus
      selector:
        app: prometheus
      type: ClusterIP

vi /data/k8s-yaml/prometheus/ingress.yaml

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: traefik
      name: prometheus
      namespace: infra
    spec:
      rules:
      - host: prometheus.od.com
        http:
          paths:
          - backend:
              serviceName: prometheus
              servicePort: 9090

Preparing the Prometheus configuration file

On the compute node HDSS7-21.host.com:

    [root@hdss7-21 ~]# mkdir -pv /data/k8s-volume/prometheus/{etc,prom-db}
    mkdir: created directory ‘/data/k8s-volume/prometheus/etc’
    mkdir: created directory ‘/data/k8s-volume/prometheus/prom-db’
    [root@hdss7-21 ~]# cd /data/k8s-volume/prometheus/etc
    [root@hdss7-21 etc]# scp root@10.4.7.200:/opt/certs/ca.pem .
    [root@hdss7-21 etc]# scp root@10.4.7.200:/opt/certs/client.pem .
    [root@hdss7-21 etc]# scp root@10.4.7.200:/opt/certs/client-key.pem .

Note: the Deployment shown earlier mounts the NFS export hdss7-200:/data/nfs-volume/prometheus at /data and reads /data/etc/prometheus.yml. If you keep that NFS volume, place prometheus.yml and the three certificate files in /data/nfs-volume/prometheus/etc on HDSS7-200 instead of the local directory used here.

File: /data/k8s-volume/prometheus/etc/prometheus.yml

    global:
      scrape_interval:     15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'etcd'
      tls_config:
        ca_file: /data/etc/ca.pem
        cert_file: /data/etc/client.pem
        key_file: /data/etc/client-key.pem
      scheme: https
      static_configs:
      - targets:
        - '10.4.7.12:2379'
        - '10.4.7.21:2379'
        - '10.4.7.22:2379'
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    - job_name: 'kubernetes-kubelet'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __address__
        replacement: ${1}:10255
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __address__
        replacement: ${1}:4194
    - job_name: 'kubernetes-kube-state'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
      - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
        regex: .*true.*
        action: keep
      - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
        regex: 'node-exporter;(.*)'
        action: replace
        target_label: nodename
    - job_name: 'blackbox_http_pod_probe'
      metrics_path: /probe
      kubernetes_sd_configs:
      - role: pod
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
        action: keep
        regex: http
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+);(.+)
        replacement: $1:$2$3
        target_label: __param_target
      - action: replace
        target_label: __address__
        replacement: blackbox-exporter.kube-system:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    - job_name: 'blackbox_tcp_pod_probe'
      metrics_path: /probe
      kubernetes_sd_configs:
      - role: pod
      params:
        module: [tcp_connect]
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
        action: keep
        regex: tcp
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __param_target
      - action: replace
        target_label: __address__
        replacement: blackbox-exporter.kube-system:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    - job_name: 'traefik'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
        action: keep
        regex: traefik
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
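
Several jobs in this configuration rewrite a label by joining the source labels with `;` and matching the result against a regular expression that Prometheus anchors at both ends. The Python sketch below imitates two of those rules to show what they produce; it is an approximation of the relabeling behaviour, not Prometheus code. The function names and the sample addresses in the tests are hypothetical.

```python
import re

# Prometheus anchors relabel regexes on both ends, hence fullmatch() below.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")
HTTP_TARGET_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+);(.+)")


def rewrite_address(address: str, port_annotation: str) -> str:
    """Imitate the __address__ rule: swap in the port taken from the annotation."""
    joined = ";".join([address, port_annotation])  # source_labels joined by ';'
    match = ADDRESS_RULE.fullmatch(joined)
    if match is None:
        return address  # no match: the replace action leaves the label untouched
    return f"{match.group(1)}:{match.group(2)}"  # replacement: $1:$2


def http_probe_target(address: str, port_annotation: str, path_annotation: str):
    """Imitate the blackbox_http_pod_probe rule that fills __param_target."""
    joined = ";".join([address, port_annotation, path_annotation])
    match = HTTP_TARGET_RULE.fullmatch(joined)
    if match is None:
        return None  # __param_target is not set by this rule
    return f"{match.group(1)}:{match.group(2)}{match.group(3)}"  # $1:$2$3
```

The `\d` escapes matter here: without the backslashes the pattern would look for the literal letter `d` and the port annotation would never match.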

Applying the resource manifests

On any compute node:

    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/rbac.yaml
    serviceaccount/prometheus created
    clusterrole.rbac.authorization.k8s.io/prometheus created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus created
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/deployment.yaml
    deployment.extensions/prometheus created
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/service.yaml
    service/prometheus created
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/ingress.yaml
    ingress.extensions/prometheus created

Adding the DNS record

On HDSS7-11.host.com:

File: /var/named/od.com.zone

    prometheus 60 IN A 10.4.7.10

Browser access

http://prometheus.od.com

What Prometheus monitors

Targets(jobs)

etcd

Monitors the etcd service.

| key | value |
| --- | --- |
| etcd_server_has_leader | 1 |
| etcd_http_failed_total | 1 |
| … | … |

kubernetes-apiserver

Monitors the apiserver service.

kubernetes-kubelet

Monitors the kubelet service.

kubernetes-kube-state

Monitors basic cluster information.

node-exporter

Monitors node information.

kube-state-metrics

Monitors pod information.

traefik

Monitors the traefik-ingress-controller.

| key | value |
| --- | --- |
| traefik_entrypoint_requests_total{code="200",entrypoint="http",method="PUT",protocol="http"} | 138 |
| traefik_entrypoint_requests_total{code="200",entrypoint="http",method="GET",protocol="http"} | 285 |
| traefik_entrypoint_open_connections{entrypoint="http",method="PUT",protocol="http"} | 1 |
| … | … |

Note: add the following annotations to the traefik pod controller and restart the pod for the monitoring to take effect.

"annotations": {
  "prometheus_io_scheme": "traefik",
  "prometheus_io_path": "/metrics",
  "prometheus_io_port": "8080"
}

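blackbox*

Monitors whether services are alive.

blackbox_tcp_pod_probe

Monitors whether TCP services are alive.

| key | value |
| --- | --- |
| probe_success | 1 |
| probe_ip_protocol | 4 |
| probe_failed_due_to_regex | 0 |
| probe_duration_seconds | 0.000597546 |
| probe_dns_lookup_time_seconds | 0.00010898 |

Note: add the following annotations to the pod controller and restart the pod for the monitoring to take effect.

"annotations": {
  "blackbox_port": "20880",
  "blackbox_scheme": "tcp"
}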

blackbox_http_pod_probe

Monitors whether HTTP services are alive.

| key | value |
| --- | --- |
| probe_success | 1 |
| probe_ip_protocol | 4 |
| probe_http_version | 1.1 |
| probe_http_status_code | 200 |
| probe_http_ssl | 0 |
| probe_http_redirects | 1 |
| probe_http_last_modified_timestamp_seconds | 1.553861888e+09 |
| probe_http_duration_seconds{phase="transfer"} | 0.000238343 |
| probe_http_duration_seconds{phase="tls"} | 0 |
| probe_http_duration_seconds{phase="resolve"} | 5.4095e-05 |
| probe_http_duration_seconds{phase="processing"} | 0.000966104 |
| probe_http_duration_seconds{phase="connect"} | 0.000520821 |
| probe_http_content_length | 716 |
| probe_failed_due_to_regex | 0 |
| probe_duration_seconds | 0.00272609 |
| probe_dns_lookup_time_seconds | 5.4095e-05 |

Note: add the following annotations to the pod controller and restart the pod for the monitoring to take effect.

"annotations": {
  "blackbox_path": "/",
  "blackbox_port": "8080",
  "blackbox_scheme": "http"
}

kubernetes-pods*

Monitors JVM information.

| key | value |
| --- | --- |
| jvm_info{version="1.7.0_80-b15",vendor="Oracle Corporation",runtime="Java(TM) SE Runtime Environment",} | 1.0 |
| jmx_config_reload_success_total | 0.0 |
| process_resident_memory_bytes | 4.693897216E9 |
| process_virtual_memory_bytes | 1.2138840064E10 |
| process_max_fds | 65536.0 |
| process_open_fds | 123.0 |
| process_start_time_seconds | 1.54331073249E9 |
| process_cpu_seconds_total | 196465.74 |
| jvm_buffer_pool_used_buffers{pool="mapped",} | 0.0 |
| jvm_buffer_pool_used_buffers{pool="direct",} | 150.0 |
| jvm_buffer_pool_capacity_bytes{pool="mapped",} | 0.0 |
| jvm_buffer_pool_capacity_bytes{pool="direct",} | 6216688.0 |
| jvm_buffer_pool_used_bytes{pool="mapped",} | 0.0 |
| jvm_buffer_pool_used_bytes{pool="direct",} | 6216688.0 |
| jvm_gc_collection_seconds_sum{gc="PS MarkSweep",} | 1.867 |
| … | … |

Note: add the following annotations to the pod controller and restart the pod for the monitoring to take effect.

"annotations": {
  "prometheus_io_scrape": "true",
  "prometheus_io_port": "12346",
  "prometheus_io_path": "/"
}

Connect the traefik service to Prometheus monitoring

In the dashboard:

"annotations": {
  "prometheus_io_scheme": "traefik",
  "prometheus_io_path": "/metrics",
  "prometheus_io_port": "8080"
}

Delete the pod so that traefik restarts, then check the monitoring.

Next, add the blackbox monitoring annotations as well:

"annotations": {
  "prometheus_io_scheme": "traefik",
  "prometheus_io_path": "/metrics",
  "prometheus_io_port": "8080",
  "blackbox_path": "/",
  "blackbox_port": "8080",
  "blackbox_scheme": "http"
}

Connect the dubbo-service service to Prometheus monitoring

In the dashboard:

"annotations": {
  "prometheus_io_scrape": "true",
  "prometheus_io_path": "/",
  "prometheus_io_port": "12346",
  "blackbox_port": "20880",
  "blackbox_scheme": "tcp"
}

Delete the pod so that it is recreated, then check the monitoring.

Connect the dubbo-consumer service to Prometheus monitoring

In the app namespace, under deployment -> dubbo-demo-consumer -> spec -> template -> metadata, add:

"annotations": {
  "prometheus_io_scrape": "true",
  "prometheus_io_path": "/",
  "prometheus_io_port": "12346",
  "blackbox_path": "/hello",
  "blackbox_port": "8080",
  "blackbox_scheme": "http"
}

Delete the pod so that it is recreated, then check the monitoring.
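The annotations in this section are added through the dashboard. As a possible alternative, they could be applied from the command line with `kubectl patch`. The sketch below only builds the patch document; `annotation_patch` is a hypothetical helper written for this example, and it does not escape quotes or backslashes in the values.

```shell
# annotation_patch KEY=VALUE ...  (hypothetical helper)
# Print a strategic-merge patch that sets annotations on the pod template
# (spec.template.metadata.annotations) of a deployment.
annotation_patch() {
  body=""
  for kv in "$@"; do
    # split each argument on its first '='; emit the value as a JSON string
    body="${body}${body:+,}\"${kv%%=*}\":\"${kv#*=}\""
  done
  printf '{"spec":{"template":{"metadata":{"annotations":{%s}}}}}\n' "$body"
}

annotation_patch prometheus_io_scrape=true prometheus_io_port=12346 prometheus_io_path=/
```

The output can then be passed to kubectl, for example `kubectl -n app patch deployment dubbo-demo-consumer -p "$(annotation_patch blackbox_scheme=http blackbox_port=8080 blackbox_path=/hello)"`. Because the patch changes the pod template, the deployment rolls out new pods on its own.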

Deploy Grafana

On the ops host HDSS7-200.host.com:

Prepare the grafana image

Grafana on Docker Hub, Grafana on GitHub, Grafana website

[root@hdss7-200 ~]# docker pull grafana/grafana:6.1.4
6.1.4: Pulling from grafana/grafana
27833a3ba0a5: Pull complete
9412d126b236: Pull complete
1b7d6aaa6217: Pull complete
530d1110a8c8: Pull complete
fdcf73917f64: Pull complete
f5009e3ea28a: Pull complete
Digest: sha256:c2100550937e7aa0f3e33c2fc46a8c9668c3b5f2f71a8885e304d35de9fea009
Status: Downloaded newer image for grafana/grafana:6.1.4
[root@hdss7-200 ~]# docker tag d9bdb6044027 harbor.od.com/infra/grafana:v6.1.4
[root@hdss7-200 ~]# docker push harbor.od.com/infra/grafana:v6.1.4
docker push harbor.od.com/infra/grafana:v6.1.4
The push refers to a repository [harbor.od.com/infra/grafana]
b57e9e94fc2d: Pushed
3d4e16e25cba: Pushed
9642e67d431a: Pushed
af52591a894f: Pushed
0a8c2d04bf65: Pushed
5dacd731af1b: Pushed
v6.1.4: digest: sha256:db87ab263f90bdae66be744ac7935f6980c4bbd30c9756308e7382e00d4eeae8 size: 1576

Prepare the resource manifests

vi /data/k8s-yaml/grafana/rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
rules:
- apiGroups:
  - "*"
  resources:
  - namespaces
  - deployments
  - pods
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: grafana
subjects:
- kind: User
  name: k8s-node

vi /data/k8s-yaml/grafana/deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  labels:
    app: grafana
    name: grafana
  name: grafana
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: grafana
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: grafana
        name: grafana
    spec:
      containers:
      - image: harbor.od.com/infra/grafana:v6.1.4
        imagePullPolicy: IfNotPresent
        name: grafana
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /var/lib/grafana
          name: data
      imagePullSecrets:
      - name: harbor
      nodeName: 10.4.7.21
      restartPolicy: Always
      securityContext:
        runAsUser: 0
      volumes:
      - nfs:
          server: hdss7-200
          path: /data/nfs-volume/grafana
        name: data

vi /data/k8s-yaml/grafana/service.yaml

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: infra
spec:
  ports:
  - port: 3000
    protocol: TCP
  selector:
    app: grafana
  type: ClusterIP

vi /data/k8s-yaml/grafana/ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana
  namespace: infra
spec:
  rules:
  - host: grafana.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana
          servicePort: 3000

Apply the resource manifests

On any compute node:

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/deployment.yaml
deployment.extensions/grafana created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/service.yaml
service/grafana created
[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/ingress.yaml
ingress.extensions/grafana created

Resolve the domain name

On HDSS7-11.host.com:

/var/named/od.com.zone

grafana 60 IN A 10.4.7.10

Access in a browser

http://grafana.od.com

After the first login you have to change the administrator password.

Configure the Grafana UI

Appearance

Configuration -> Preferences

UI Theme: Light

Home Dashboard: Default

Timezone: Local browser time

Save

Plugins

Configuration -> Plugins

Installation method 1:

grafana-cli plugins install grafana-kubernetes-app

Installation method 2: download link

/data/nfs-volume/grafana/plugins

[root@hdss7-200 plugins]# wget https://grafana.com/api/plugins/grafana-kubernetes-app/versions/1.0.1/download -O grafana-kubernetes-app.zip
--2019-04-28 16:15:33-- https://grafana.com/api/plugins/grafana-kubernetes-app/versions/1.0.1/download
Resolving grafana.com (grafana.com)... 35.241.23.245
Connecting to grafana.com (grafana.com)|35.241.23.245|:443... connected.
HTTP request sent, awaiting response... 302 Found
Location: https://codeload.github.com/grafana/kubernetes-app/legacy.zip/31da38addc1d0ce5dfb154737c9da56e3b6692fc [following]
--2019-04-28 16:15:37-- https://codeload.github.com/grafana/kubernetes-app/legacy.zip/31da38addc1d0ce5dfb154737c9da56e3b6692fc
Resolving codeload.github.com (codeload.github.com)... 13.229.189.0, 13.250.162.133, 54.251.140.56
Connecting to codeload.github.com (codeload.github.com)|13.229.189.0|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 3084524 (2.9M) [application/zip]
Saving to: ‘grafana-kubernetes-app.zip’

100%[===================================================================================================================>] 3,084,524 116KB/s in 12s

2019-04-28 16:15:51 (250 KB/s) - ‘grafana-kubernetes-app.zip’ saved [3084524/3084524]

[root@hdss7-200 plugins]# unzip grafana-kubernetes-app.zip

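Installation method 1:

grafana-cli plugins install grafana-clock-panel

Installation method 2: download link

Installation method 1:

grafana-cli plugins install grafana-piechart-panel

Installation method 2: download link

Installation method 1:

grafana-cli plugins install briangann-gauge-panel

Installation method 2: download link

Installation method 1:

grafana-cli plugins install natel-discrete-panel

Installation method 2: download link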
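The five `grafana-cli` commands above differ only in the plugin ID, so they can be generated in one loop. This is a small dry-run sketch: the variable and function names are made up, and the function only prints the commands rather than running them.

```shell
# Plugin IDs taken from the install commands in this section.
GRAFANA_PLUGINS="grafana-kubernetes-app grafana-clock-panel grafana-piechart-panel briangann-gauge-panel natel-discrete-panel"

# plugin_install_commands (hypothetical helper): print one install
# command per plugin without executing anything.
plugin_install_commands() {
  for p in $GRAFANA_PLUGINS; do
    printf 'grafana-cli plugins install %s\n' "$p"
  done
}

plugin_install_commands
```

Piping the output to `sh` inside the grafana container would run the installs. Grafana loads plugins at startup, so the pod most likely needs to be restarted afterwards.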

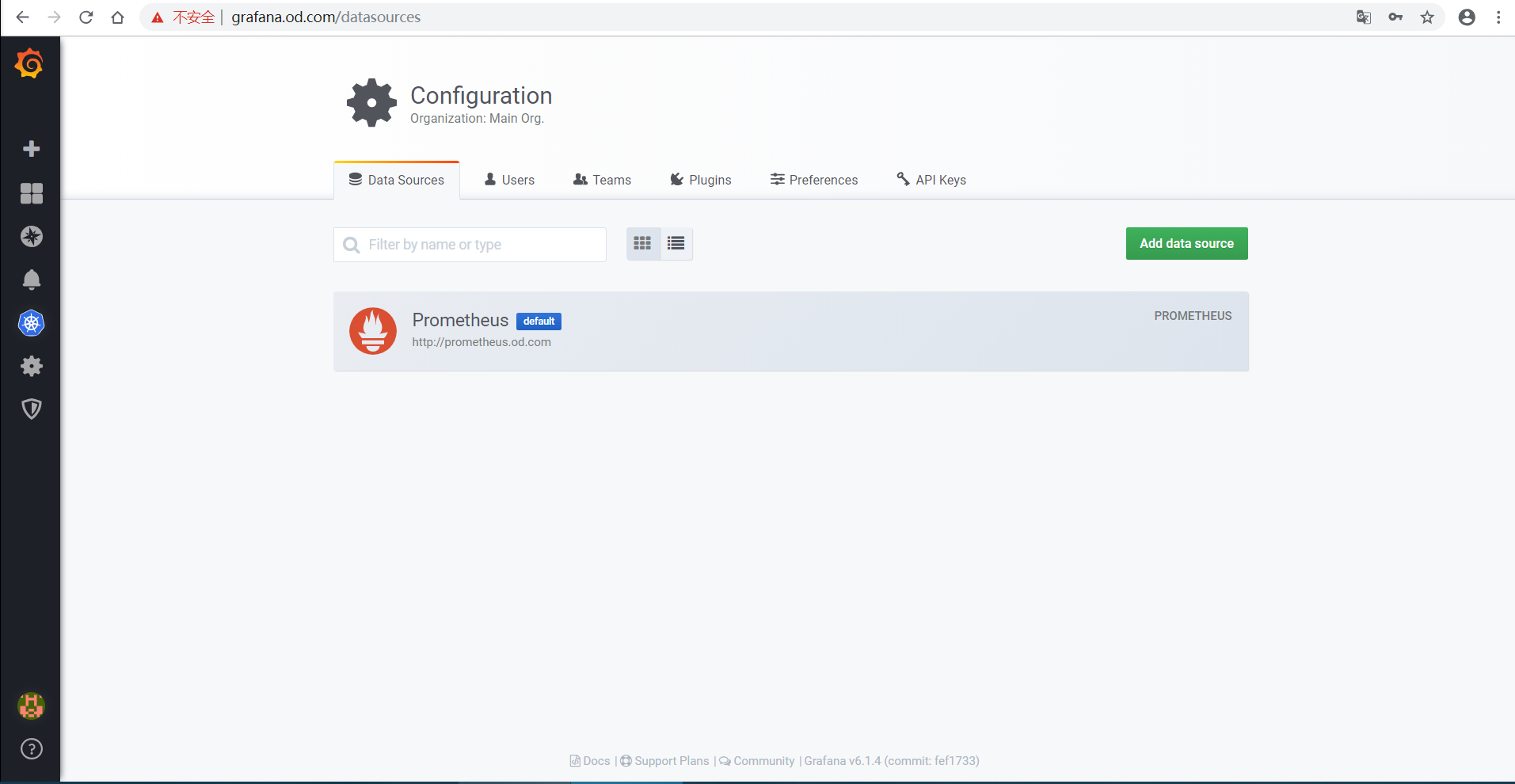

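Configure the Grafana data source

Configuration -> Data Sources

Configure the Kubernetes cluster dashboard

kubernetes -> +New Cluster

| key | value |
| --- | --- |
| TLS Client Auth | checked |
| With Ca Cert | checked |

Paste the contents of ca.pem, client.pem and client-key.pem into the text boxes.

| key | value |
| --- | --- |
| Datasource | Prometheus |

Note: in the K8S Container dashboard, change pod_name to container_label_io_kubernetes_pod_name in every panel.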

Configure custom dashboards

Based on the data in the Prometheus data source, configure the following dashboards:

etcd dashboard

traefik dashboard

generic dashboard

JMX dashboard

blackbox dashboard

Collect application logs from the Kubernetes cluster with the ELK Stack

Deploy ElasticSearch

Official website, official GitHub repository, download page

On HDSS7-12.host.com:

Install

/opt/src

[root@hdss7-12 src]# ls -l|grep elasticsearch-5.6.15.tar.gz
-rw-r--r-- 1 root root 72262257 Jan 30 15:18 elasticsearch-5.6.15.tar.gz
[root@hdss7-12 src]# tar xf elasticsearch-5.6.15.tar.gz -C /opt
[root@hdss7-12 src]# ln -s /opt/elasticsearch-5.6.15/ /opt/elasticsearch

Configure

elasticsearch.yml

[root@hdss7-12 src]# mkdir -p /data/elasticsearch/{data,logs}
[root@hdss7-12 elasticsearch]# vi config/elasticsearch.yml
cluster.name: es.od.com
node.name: hdss7-12.host.com
path.data: /data/elasticsearch/data
path.logs: /data/elasticsearch/logs
bootstrap.memory_lock: true
network.host: 10.4.7.12
http.port: 9200

jvm.options

[root@hdss7-12 elasticsearch]# vi config/jvm.options
-Xms512m
-Xmx512m

Create an unprivileged user

[root@hdss7-12 elasticsearch]# useradd -s /bin/bash -M es
[root@hdss7-12 elasticsearch]# chown -R es.es /opt/elasticsearch-5.6.15
[root@hdss7-12 elasticsearch]# chown -R es.es /data/elasticsearch

File descriptors

/etc/security/limits.d/es.conf

es hard nofile 65536
es soft fsize unlimited
es hard memlock unlimited
es soft memlock unlimited

Tune the kernel parameter

[root@hdss7-12 elasticsearch]# sysctl -w vm.max_map_count=262144
or
[root@hdss7-12 elasticsearch]# echo "vm.max_map_count=262144" >> /etc/sysctl.conf
[root@hdss7-12 elasticsearch]# sysctl -p
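Writing the setting into /etc/sysctl.conf by hand is easy to get wrong when the step is repeated: the key can end up duplicated, or other settings can be lost if the file is overwritten. Below is a minimal sketch of an idempotent variant. The helper name `set_sysctl_conf` is made up, and the demo works on a temporary file rather than the real /etc/sysctl.conf.

```shell
# set_sysctl_conf FILE KEY VALUE  (hypothetical helper)
# Replace an existing "KEY=..." line, or append one if the key is missing.
# The key is used as a regex, so '.' also matches any character; that is
# good enough for a sketch.
set_sysctl_conf() {
  file=$1; key=$2; value=$3
  if grep -q "^${key}[[:space:]]*=" "$file" 2>/dev/null; then
    # rewrite the existing line through a temporary copy
    sed "s|^${key}[[:space:]]*=.*|${key}=${value}|" "$file" > "$file.tmp" &&
      cat "$file.tmp" > "$file" && rm -f "$file.tmp"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

demo=$(mktemp)
printf 'net.ipv4.ip_forward=1\n' > "$demo"
set_sysctl_conf "$demo" vm.max_map_count 65530    # appended
set_sysctl_conf "$demo" vm.max_map_count 262144   # updated in place
cat "$demo"
rm -f "$demo"
```

Pointed at /etc/sysctl.conf and followed by `sysctl -p`, this keeps existing settings and leaves exactly one vm.max_map_count line.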

Start

[root@hdss7-12 elasticsearch]# su - es
su: warning: cannot change directory to /home/es: No such file or directory
-bash-4.2$ /opt/elasticsearch/bin/elasticsearch &
[1] 8714
[root@hdss7-12 elasticsearch]# ps -ef|grep elastic|grep -v grep
es 8714 1 58 10:29 pts/0 00:00:19 /usr/java/jdk/bin/java -Xms512m -Xmx512m -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -server -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -Djdk.io.permissionsUseCanonicalPath=true -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j.skipJansi=true -XX:+HeapDumpOnOutOfMemoryError -Des.path.home=/opt/elasticsearch -cp /opt/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch

Adjust the ES log index template

[root@hdss7-12 elasticsearch]# curl -XPUT http://10.4.7.12:9200/_template/k8s -d '{
  "template" : "k8s*",
  "index_patterns": ["k8s*"],
  "settings": {
    "number_of_shards": 5,
    "number_of_replicas": 0
  }
}'

Deploy kafka

Official website, official GitHub repository, download page

On HDSS7-11.host.com:

Install

/opt/src

[root@hdss7-11 src]# ls -l|grep kafka
-rw-r--r-- 1 root root 57028557 Mar 23 08:57 kafka_2.12-2.2.0.tgz
[root@hdss7-11 src]# tar xf kafka_2.12-2.1.1.tgz -C /opt
[root@hdss7-11 src]# ln -s /opt/kafka_2.12-2.1.1/ /opt/kafka

Configure

/opt/kafka/config/server.properties

log.dirs=/data/kafka/logs
zookeeper.connect=localhost:2181
log.flush.interval.messages=10000
log.flush.interval.ms=1000
delete.topic.enable=true
host.name=hdss7-11.host.com

Start

/opt/kafka

[root@hdss7-11 kafka]# bin/kafka-server-start.sh -daemon config/server.properties
[root@hdss7-11 kafka]# netstat -luntp|grep 9092
tcp6 0 0 10.4.7.11:9092 :::* LISTEN 17543/java

Deploy kafka-manager

Official GitHub repository, source download page

On HDSS7-200.host.com:

Method 1, step 1: prepare the Dockerfile

/data/dockerfile/kafka-manager/Dockerfile

FROM hseeberger/scala-sbt

ENV ZK_HOSTS=10.4.7.11:2181 \
    KM_VERSION=2.0.0.2

RUN mkdir -p /tmp && \
    cd /tmp && \
    wget https://github.com/yahoo/kafka-manager/archive/${KM_VERSION}.tar.gz && \
    tar xzf ${KM_VERSION}.tar.gz && \
    cd /tmp/kafka-manager-${KM_VERSION} && \
    sbt clean dist && \
    unzip -d / ./target/universal/kafka-manager-${KM_VERSION}.zip && \
    rm -fr /tmp/${KM_VERSION} /tmp/kafka-manager-${KM_VERSION}

WORKDIR /kafka-manager-${KM_VERSION}

EXPOSE 9000
ENTRYPOINT ["./bin/kafka-manager","-Dconfig.file=conf/application.conf"]

Method 1, step 2: build the docker image

/data/dockerfile/kafka-manager

[root@hdss7-200 kafka-manager]# docker build . -t harbor.od.com/infra/kafka-manager:v2.0.0.2
(this takes a long time)

Method 2: pull a prebuilt docker image

Image download page

[root@hdss7-200 ~]# docker pull sheepkiller/kafka-manager:stable
[root@hdss7-200 ~]# docker tag 34627743836f harbor.od.com/infra/kafka-manager:stable
[root@hdss7-200 ~]# docker push harbor.od.com/infra/kafka-manager:stable
docker push harbor.od.com/infra/kafka-manager:stable
The push refers to a repository [harbor.od.com/infra/kafka]
ef97dbc2670b: Pushed
ec01aa005e59: Pushed
de05a1bdf878: Pushed
9c553f3feafd: Pushed
581533427a4f: Pushed
f6b229974fdd: Pushed
ae150883d6e2: Pushed
3df7be729841: Pushed
f231cc200afe: Pushed
9752c15164a8: Pushed
9ab7eda5c826: Pushed
402964b3d72e: Pushed
6b3f8ebf864c: Pushed
stable: digest: sha256:57fd46a3751284818f1bc6c0fdf097250bc0feed03e77135fb8b0a93aa8c6cc7 size: 3056

Prepare the resource manifests

vi /data/k8s-yaml/kafka-manager/deployment.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: kafka-manager
  namespace: infra
  labels:
    name: kafka-manager
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kafka-manager
  template:
    metadata:
      labels:
        app: kafka-manager
        name: kafka-manager
    spec:
      containers:
      - name: kafka-manager
        image: harbor.od.com/infra/kafka-manager:stable
        ports:
        - containerPort: 9000
          protocol: TCP
        env:
        - name: ZK_HOSTS
          value: zk1.od.com:2181
        - name: APPLICATION_SECRET
          value: letmein
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

vi /data/k8s-yaml/kafka-manager/svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: kafka-manager
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 9000
    targetPort: 9000
  selector:
    app: kafka-manager
  clusterIP: None
  type: ClusterIP
  sessionAffinity: None

vi /data/k8s-yaml/kafka-manager/ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: kafka-manager
  namespace: infra
spec:
  rules:
  - host: km.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kafka-manager
          servicePort: 9000

应用资源配置清单

任意一台运算节点上:

复制

123456

[root@hdss7-21 kafka-manager]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/deployment.yaml deployment.extensions/kafka-manager created[root@hdss7-21 kafka-manager]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/svc.yaml service/kafka-manager created[root@hdss7-21 kafka-manager]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/ingress.yaml ingress.extensions/kafka-manager created

解析域名

HDSS7-11.host.com上

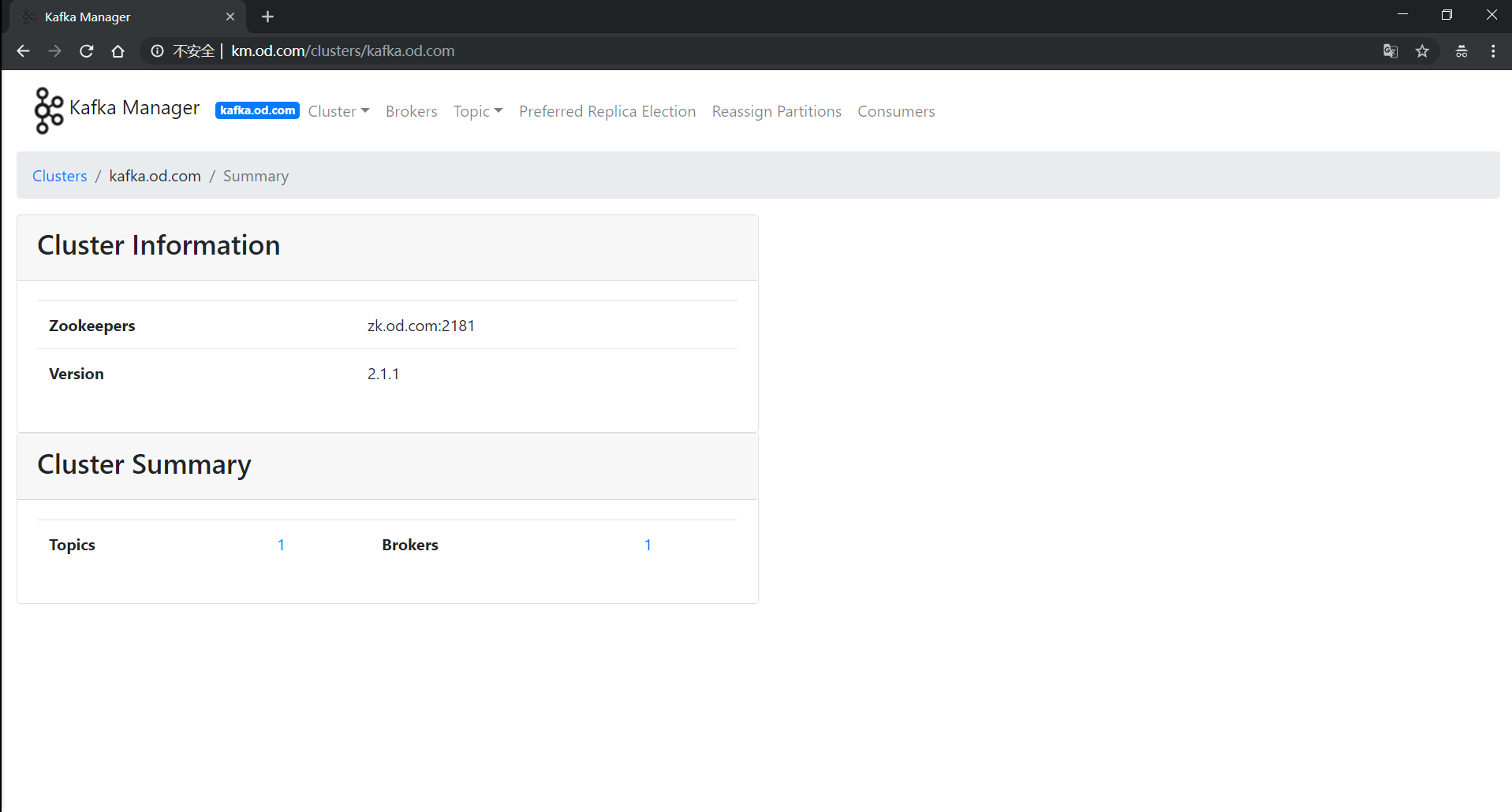

浏览器访问

http://km.od.com

Deploy filebeat

Official download page

On HDSS7-200.host.com:

Build the docker image

Prepare the Dockerfile

vi /data/dockerfile/filebeat/Dockerfile

FROM debian:jessie

ENV FILEBEAT_VERSION=7.0.1 \
    FILEBEAT_SHA1=fdddfa32a7d9db5ac4504b34499e6259e09b86205bac842f78fddd45e8ee00c3cb76419af2313659fd23db4fcbcc29f7568a3663c5d3c52ac0fc9e641d0ae8b1

RUN set -x && \
  apt-get update && \
  apt-get install -y wget && \
  wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-${FILEBEAT_VERSION}-linux-x86_64.tar.gz -O /opt/filebeat.tar.gz && \
  cd /opt && \
  echo "${FILEBEAT_SHA1}  filebeat.tar.gz" | sha512sum -c - && \
  tar xzvf filebeat.tar.gz && \
  cd filebeat-* && \
  cp filebeat /bin && \
  cd /opt && \
  rm -rf filebeat* && \
  apt-get purge -y wget && \
  apt-get autoremove -y && \
  apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

COPY docker-entrypoint.sh /
ENTRYPOINT ["/docker-entrypoint.sh"]

vi /data/dockerfile/filebeat/docker-entrypoint.sh

#!/bin/bash

ENV=${ENV:-"test"}
PROJ_NAME=${PROJ_NAME:-"no-define"}
MULTILINE=${MULTILINE:-"^\d{2}"}

cat > /etc/filebeat.yaml << EOF
filebeat.inputs:
- type: log
  fields_under_root: true
  fields:
    topic: logm-${PROJ_NAME}
  paths:
    - /logm/*.log
    - /logm/*/*.log
    - /logm/*/*/*.log
    - /logm/*/*/*/*.log
    - /logm/*/*/*/*/*.log
  scan_frequency: 120s
  max_bytes: 10485760
  multiline.pattern: '$MULTILINE'
  multiline.negate: true
  multiline.match: after
  multiline.max_lines: 100
- type: log
  fields_under_root: true
  fields:
    topic: logu-${PROJ_NAME}
  paths:
    - /logu/*.log
    - /logu/*/*.log
    - /logu/*/*/*.log
    - /logu/*/*/*/*.log
    - /logu/*/*/*/*/*.log
    - /logu/*/*/*/*/*/*.log
output.kafka:
  hosts: ["10.4.7.11:9092"]
  topic: k8s-fb-$ENV-%{[topic]}
  version: 2.0.0
  required_acks: 0
  max_message_bytes: 10485760
EOF

set -xe

# If the user doesn't provide any command,
# run filebeat
if [[ "$1" == "" ]]; then
     exec filebeat -c /etc/filebeat.yaml
else
    # Otherwise allow the user to run arbitrary commands such as bash
    exec "$@"
fi

Build the image

/data/dockerfile/filebeat

[root@hdss7-200 filebeat]# docker build . -t harbor.od.com/infra/filebeat:v7.0.1
...
+ apt-get autoremove -y
Reading package lists...
Building dependency tree...
Reading state information...
The following packages will be REMOVED:
  ca-certificates libicu52 libidn11 libpsl0 libssl1.0.0 openssl
0 upgraded, 0 newly installed, 6 to remove and 6 not upgraded.
After this operation, 33.6 MB disk space will be freed.
(Reading database ... 7962 files and directories currently installed.)
Removing ca-certificates (20141019+deb8u4) ...
Removing dangling symlinks from /etc/ssl/certs... done.
Removing libpsl0:amd64 (0.5.1-1) ...
Removing libicu52:amd64 (52.1-8+deb8u7) ...
Removing libidn11:amd64 (1.29-1+deb8u3) ...
Removing openssl (1.0.1t-1+deb8u11) ...
Removing libssl1.0.0:amd64 (1.0.1t-1+deb8u11) ...
Processing triggers for libc-bin (2.19-18+deb8u10) ...
+ apt-get clean
+ rm -rf /var/lib/apt/lists/deb.debian.org_debian_dists_jessie_Release /var/lib/apt/lists/deb.debian.org_debian_dists_jessie_Release.gpg /var/lib/apt/lists/deb.debian.org_debian_dists_jessie_main_binary-amd64_Packages.gz /var/lib/apt/lists/lock /var/lib/apt/lists/partial /var/lib/apt/lists/security.debian.org_debian-security_dists_jessie_updates_InRelease /var/lib/apt/lists/security.debian.org_debian-security_dists_jessie_updates_main_binary-amd64_Packages.gz /tmp/* /var/tmp/*
 ---> a78678659f2c
Removing intermediate container 2cfff130c15c
Step 4 : COPY docker-entrypoint.sh /
 ---> 62dc7fe5a98f
Removing intermediate container 87e271482593
Step 5 : ENTRYPOINT /docker-entrypoint.sh
 ---> Running in d367b6e3bb5a
 ---> 23c8fbdc088a
Removing intermediate container d367b6e3bb5a
Successfully built 23c8fbdc088a
[root@hdss7-200 filebeat]# docker tag 23c8fbdc088a harbor.od.com/infra/filebeat:v7.0.1
[root@hdss7-200 filebeat]# docker push !$
docker push harbor.od.com/infra/filebeat:v7.0.1
The push refers to a repository [harbor.od.com/infra/filebeat]
6a765e653161: Pushed
8e89ae5c6fc2: Pushed
9abb3997a540: Pushed
v7.0.1: digest: sha256:c35d7cdba29d8555388ad41ac2fc1b063ed9ec488082e33b5d0e91864b3bb35c size: 948

修改资源配置清单

使用dubbo-demo-consumer的Tomcat版镜像

复制 /data/k8s-yaml/dubbo-demo-consumer/deployment.yaml

1234567891011121314151617181920212223242526272829303132333435363738394041424344454647484950515253545556575859

kind: DeploymentapiVersion: extensions/v1beta1metadata: name: dubbo-demo-consumer namespace: app labels: name: dubbo-demo-consumerspec: replicas: 1 selector: matchLabels: name: dubbo-demo-consumer template: metadata: labels: app: dubbo-demo-consumer name: dubbo-demo-consumer spec: containers: - name: dubbo-demo-consumer image: harbor.od.com/app/dubbo-demo-web:tomcat ports: - containerPort: 8080 protocol: TCP env: - name: C_OPTS value: -Denv=dev -Dapollo.meta=config.od.com imagePullPolicy: IfNotPresent volumeMounts: - mountPath: /opt/tomcat/logs name: logm - name: filebeat image: harbor.od.com/infra/filebeat:v7.0.1 env: - name: ENV value: test - name: PROJ_NAME value: dubbo-demo-web imagePullPolicy: IfNotPresent volumeMounts: - mountPath: /logm name: logm volumes: - emptyDir: {} name: logm imagePullSecrets: - name: harbor restartPolicy: Always terminationGracePeriodSeconds: 30 securityContext: runAsUser: 0 schedulerName: default-scheduler strategy: type: RollingUpdate rollingUpdate: maxUnavailable: 1 maxSurge: 1 revisionHistoryLimit: 7 progressDeadlineSeconds: 600

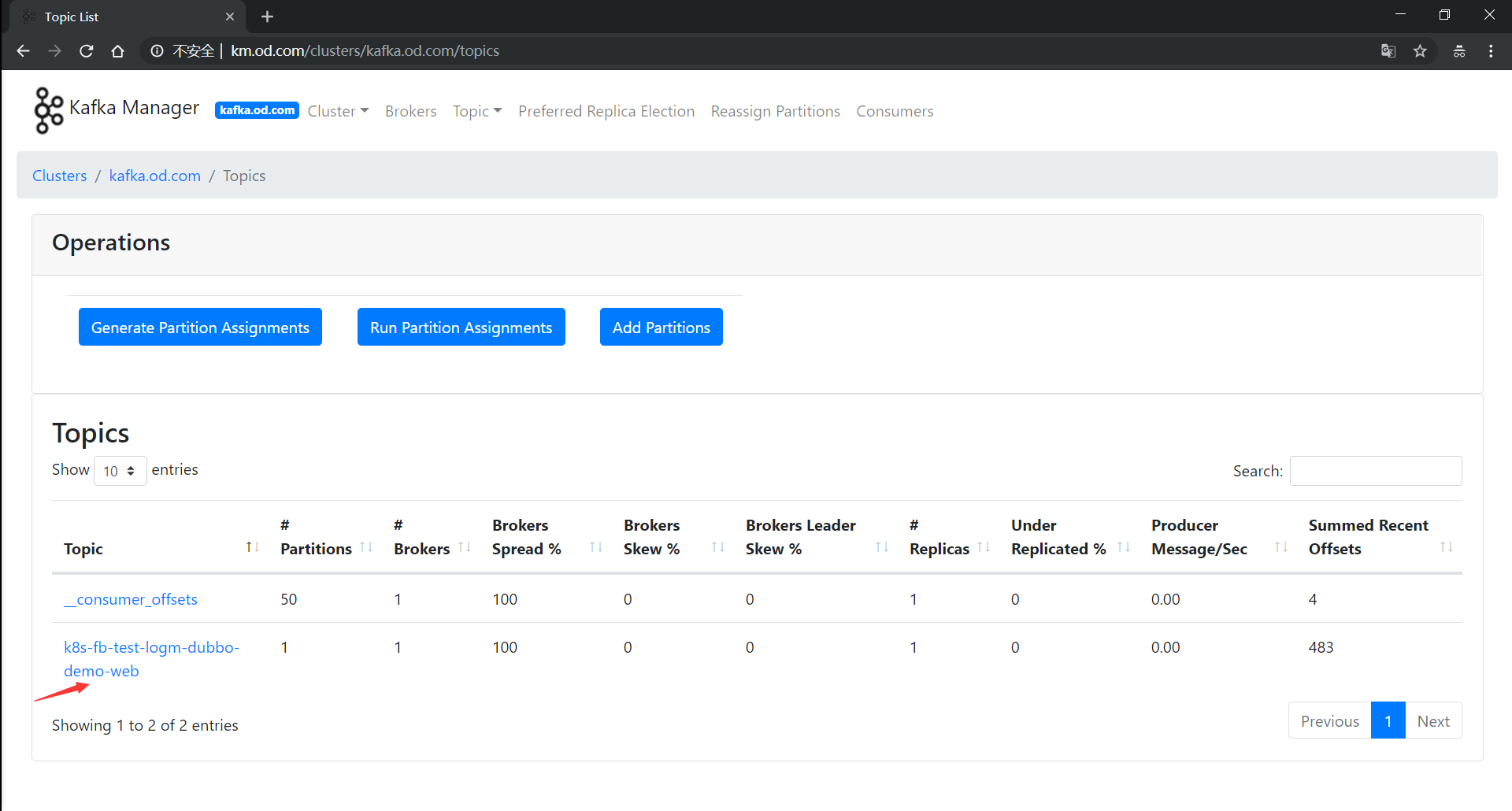

看到kafaka-manager里,topic打进来,即为成功。

验证数据

复制 /opt/kafka/bin

1

./kafka-console-consumer.sh --bootstrap-server 10.9.6.200:9092 --topic k8s-fb-test-logm-dubbo-demo-web --from-beginning
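The topic name consumed here follows from the filebeat entrypoint script: the Kafka output writes to `k8s-fb-$ENV-%{[topic]}`, and the `topic` field is `logm-${PROJ_NAME}` or `logu-${PROJ_NAME}` depending on the input. A small sketch of that naming rule, with a made-up function name:

```shell
# fb_topic ENV KIND PROJ_NAME  (hypothetical helper)
# KIND is "logm" (multiline input) or "logu" (single-line input).
fb_topic() {
  printf 'k8s-fb-%s-%s-%s\n' "$1" "$2" "$3"
}

topic=$(fb_topic test logm dubbo-demo-web)
echo "$topic"   # prints k8s-fb-test-logm-dubbo-demo-web

# A consumer that subscribes by pattern, such as k8s-fb-test-.*,
# picks this topic up as well.
if printf '%s\n' "$topic" | grep -Eqx 'k8s-fb-test-.*'; then
  echo "matched by k8s-fb-test-.*"
fi
```

With ENV=test and PROJ_NAME=dubbo-demo-web from the deployment above, this gives the topic passed to kafka-console-consumer.sh.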

部署logstash

运维主机HDSS7-200.host.com上:

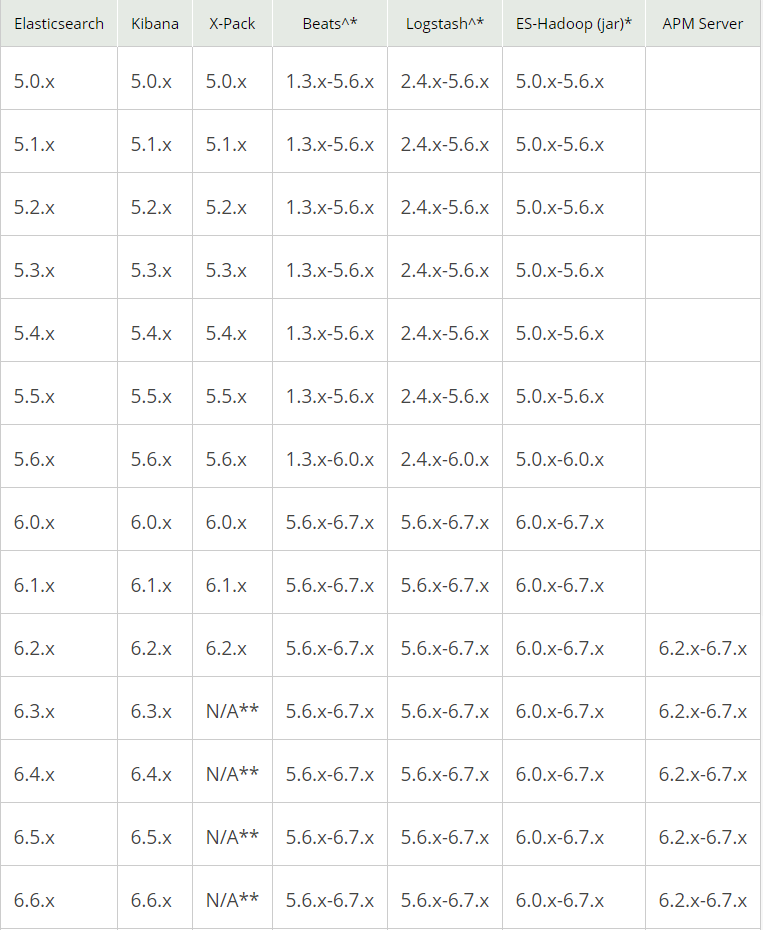

选版本

logstash选型

准备docker镜像

复制

123456789101112131415161718192021222324252627282930313233

[root@hdss7-200 ~]# docker pull logstash:6.7.26.7.2: Pulling from library/logstash8ba884070f61: Pull complete 063405b57b96: Pull complete 0a0115b8825c: Pull complete 825b124900cb: Pull complete ed736d9e3c41: Pull complete 6efb3934697a: Pull complete c0a3a0c8ebc2: Pull complete f55d8adfbc3d: Pull complete 3a6c56fbbef8: Pull complete ca45dc8946a2: Pull complete 8b852a079ea9: Pull complete Digest: sha256:46eaff19af5e14edd9b78e1d5bf16f6abcd9ad50e0338cbaf3024f2aadb2903bStatus: Downloaded newer image for logstash:6.7.2[root@hdss7-200 ~]# docker tag 857d9a1f8221 harbor.od.com/public/logstash:v6.7.2[root@hdss7-200 ~]# docker push harbor.od.com/public/logstash:v6.7.2docker push harbor.od.com/public/logstash:v6.7.2The push refers to a repository [harbor.od.com/public/logstash]c2b00f70cade: Mounted from public/filebeat 9a2c2851596d: Mounted from public/filebeat 86564f3ca0e2: Mounted from public/filebeat e9bc8faf823a: Mounted from public/filebeat 3f3bcfa85067: Mounted from public/filebeat 65d3b56913bd: Mounted from public/filebeat 9b48a60607ee: Mounted from public/filebeat df859db41dd0: Mounted from public/filebeat 1cbe912d7c00: Mounted from public/filebeat ab5fbaca7e70: Mounted from public/filebeat d69483a6face: Mounted from public/filebeat v6.7.2: digest: sha256:6aacf97dfbcc5c64c2f1a12f43ee48a8dadb98657b9b8d4149d0fee0ec18be81 size: 2823

复制

12

From harbor.od.com/public/logstash:v6.7.2ADD logstash.yml /usr/share/logstash/config

复制

123

http.host: "0.0.0.0"path.config: /etc/logstashxpack.monitoring.enabled: false

复制 /data/dockerfile/logstash

1234567891011121314151617181920212223

[root@hdss7-200 logstash]# docker build . -t harbor.od.com/infra/logstash:v6.7.2Sending build context to Docker daemon 249.3 MBStep 1 : FROM harbor.od.com/public/logstash:v6.7.2v6.7.2: Pulling from public/logstashe60be144a6a5: Pull complete 6292c2b22d35: Pull complete 1bb4586d90e7: Pull complete 3f11f6d21132: Pull complete f0aaeeafebd3: Pull complete 38c751a91f0f: Pull complete b80e2bad9681: Pull complete e3c97754ddde: Pull complete 840f2d82a9fb: Pull complete 32063a9aaefb: Pull complete e87b22bf50f5: Pull complete Digest: sha256:6aacf97dfbcc5c64c2f1a12f43ee48a8dadb98657b9b8d4149d0fee0ec18be81Status: Downloaded newer image for harbor.od.com/public/logstash:v6.7.2 ---> 857d9a1f8221Step 2 : ADD logstash.yml /usr/share/logstash/config ---> 2ad32d3f5fefRemoving intermediate container 1d3a1488c1b7Successfully built 2ad32d3f5fef[root@hdss7-200 logstash]# docker push harbor.od.com/infra/logstash:v6.7.2

Start the docker container

/etc/logstash/logstash-test.conf

input {
  kafka {
    bootstrap_servers => "10.4.7.11:9092"
    client_id => "10.4.7.200"
    consumer_threads => 4
    group_id => "k8s_test"
    topics_pattern => "k8s-fb-test-.*"
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  elasticsearch {
    hosts => ["10.4.7.12:9200"]
    index => "k8s-test-%{+YYYY.MM}"
  }
}

[root@hdss7-200 ~]# docker run -d --name logstash-test -v /etc/logstash:/etc/logstash harbor.od.com/infra/logstash:v6.7.2 -f /etc/logstash/logstash-test.conf
[root@hdss7-200 ~]# docker ps -a|grep logstash
a5dcf56faa9a harbor.od.com/infra/logstash:v6.7.2 "/usr/local/bin/docke" 8 seconds ago Up 6 seconds 5044/tcp, 9600/tcp jovial_swanson

[root@hdss7-200 ~]# curl http://10.4.7.12:9200/_cat/indices?v
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open k8s-test-2019.04 H3MY9d8WSbqQ6uL0DFhenQ 5 0 55 0 249.9kb 249.9kb
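The index name in this listing comes from the logstash output setting `index => "k8s-test-%{+YYYY.MM}"`, which appends the year and month of each event's @timestamp (in UTC), so one index is created per month. A minimal sketch of that naming rule for a given date; the helper name `k8s_index` is made up:

```shell
# k8s_index ENV YYYY-MM-DD  (hypothetical helper)
# Print the index name that "k8s-<env>-%{+YYYY.MM}" yields for that date.
k8s_index() {
  ym=${2%-*}    # drop the day: 2019-04-28 -> 2019-04
  printf 'k8s-%s-%s.%s\n' "$1" "${ym%-*}" "${ym#*-}"
}

k8s_index test 2019-04-28   # prints k8s-test-2019.04
```

The name can be used to query a single month, for example `curl "http://10.4.7.12:9200/$(k8s_index test 2019-04-28)/_count?pretty"`. Every such index matches the k8s* pattern, so the index template created on the ElasticSearch host applies to it.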

Deploy Kibana

On the ops host HDSS7-200.host.com:

Prepare the docker image

Official kibana image download page

[root@hdss7-200 ~]# docker pull kibana:5.6.16
5.6.16: Pulling from library/kibana
8014725ed6f5: Pull complete
19b590251e94: Pull complete
7fa3e54c0b5a: Pull complete
60ee9811b356: Pull complete
d18bafa420f4: Pull complete
8cee55751899: Pull complete
c395be470eb2: Pull complete
Digest: sha256:71f776596244877597fd679b2fa6fb0f1fa9c5b11388199997781d1ce77b73b1
Status: Downloaded newer image for kibana:5.6.16
[root@hdss7-200 ~]# docker tag 62079cf74c23 harbor.od.com/infra/kibana:v5.6.16
[root@hdss7-200 ~]# docker push harbor.od.com/infra/kibana:v5.6.16
docker push harbor.od.com/infra/kibana:v5.6.16
The push refers to a repository [harbor.od.com/infra/kibana]
be94745c5390: Pushed
652dcbd52cdd: Pushed
a3508a095ca7: Pushed
56d52080e2fe: Pushed
dbce28d91bf0: Pushed
dcddc432ebdf: Pushed
3e466685bf43: Pushed
d69483a6face: Mounted from infra/logstash
v5.6.16: digest: sha256:17dd243d6cc4e572f74f3de83eafc981e54c1710f8fe2d0bf74357b28bddaf08 size: 1991

Resolve the domain name

On HDSS7-11.host.com:

/var/named/od.com.zone

kibana 60 IN A 10.4.7.10

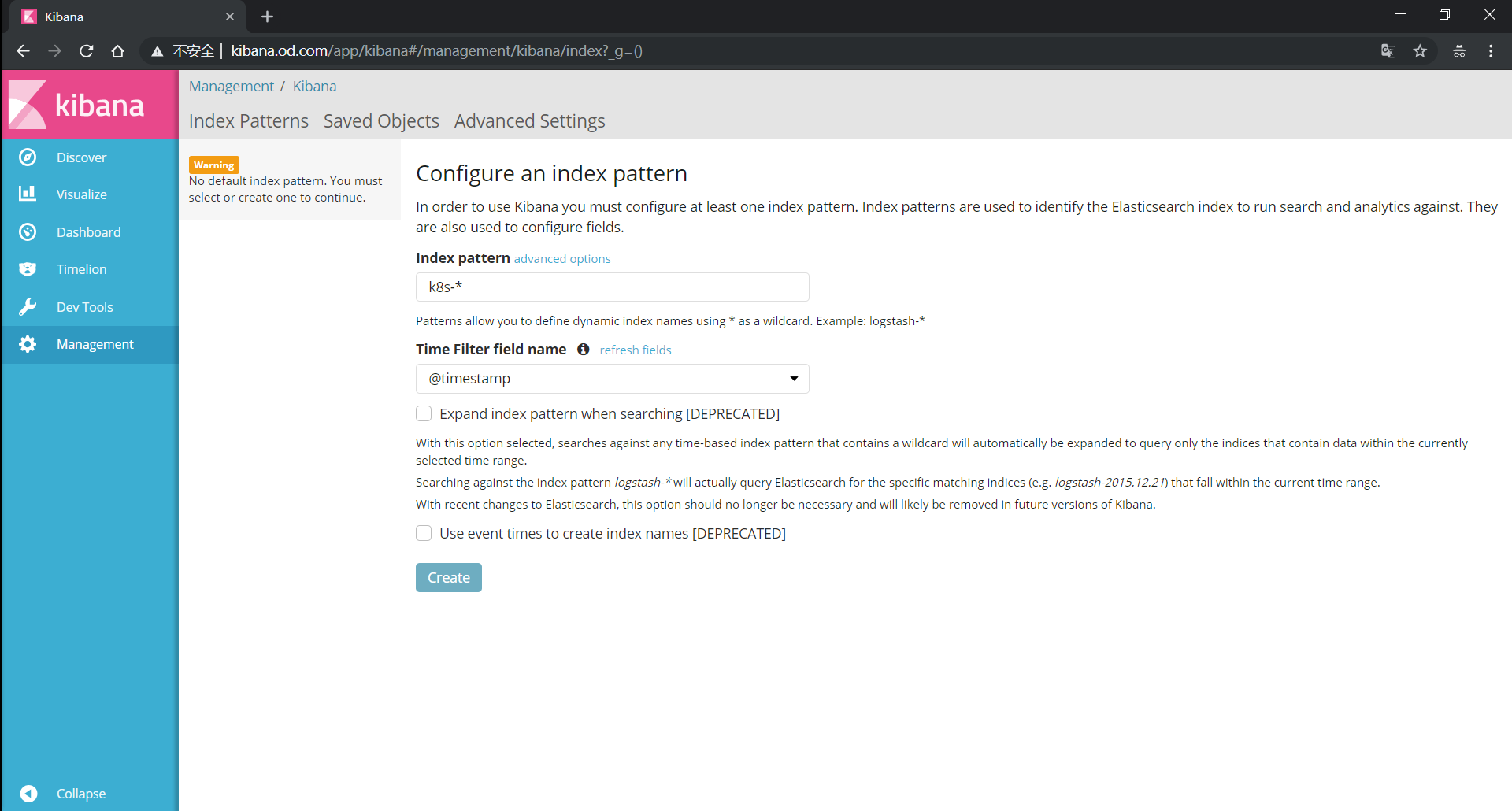

Prepare the resource manifests

vi /data/k8s-yaml/kibana/deployment.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: kibana
  namespace: infra
  labels:
    name: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kibana
  template:
    metadata:
      labels:
        app: kibana
        name: kibana
    spec:
      containers:
      - name: kibana
        image: harbor.od.com/infra/kibana:v5.6.16
        ports:
        - containerPort: 5601
          protocol: TCP
        env:
        - name: ELASTICSEARCH_URL
          value: http://10.4.7.12:9200
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

vi /data/k8s-yaml/kibana/svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: kibana
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 5601
    targetPort: 5601
  selector:
    app: kibana
  clusterIP: None
  type: ClusterIP
  sessionAffinity: None

vi /data/k8s-yaml/kibana/ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: kibana
  namespace: infra
spec:
  rules:
  - host: kibana.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 5601

Apply the resource manifests

On any compute node:

[root@hdss7-21 ~]# kubectl apply -f deployment.yaml
deployment.extensions/kibana created
[root@hdss7-21 ~]# kubectl apply -f svc.yaml
service/kibana created
[root@hdss7-21 ~]# kubectl apply -f ingress.yaml
ingress.extensions/kibana created

Access in a browser

http://kibana.od.com

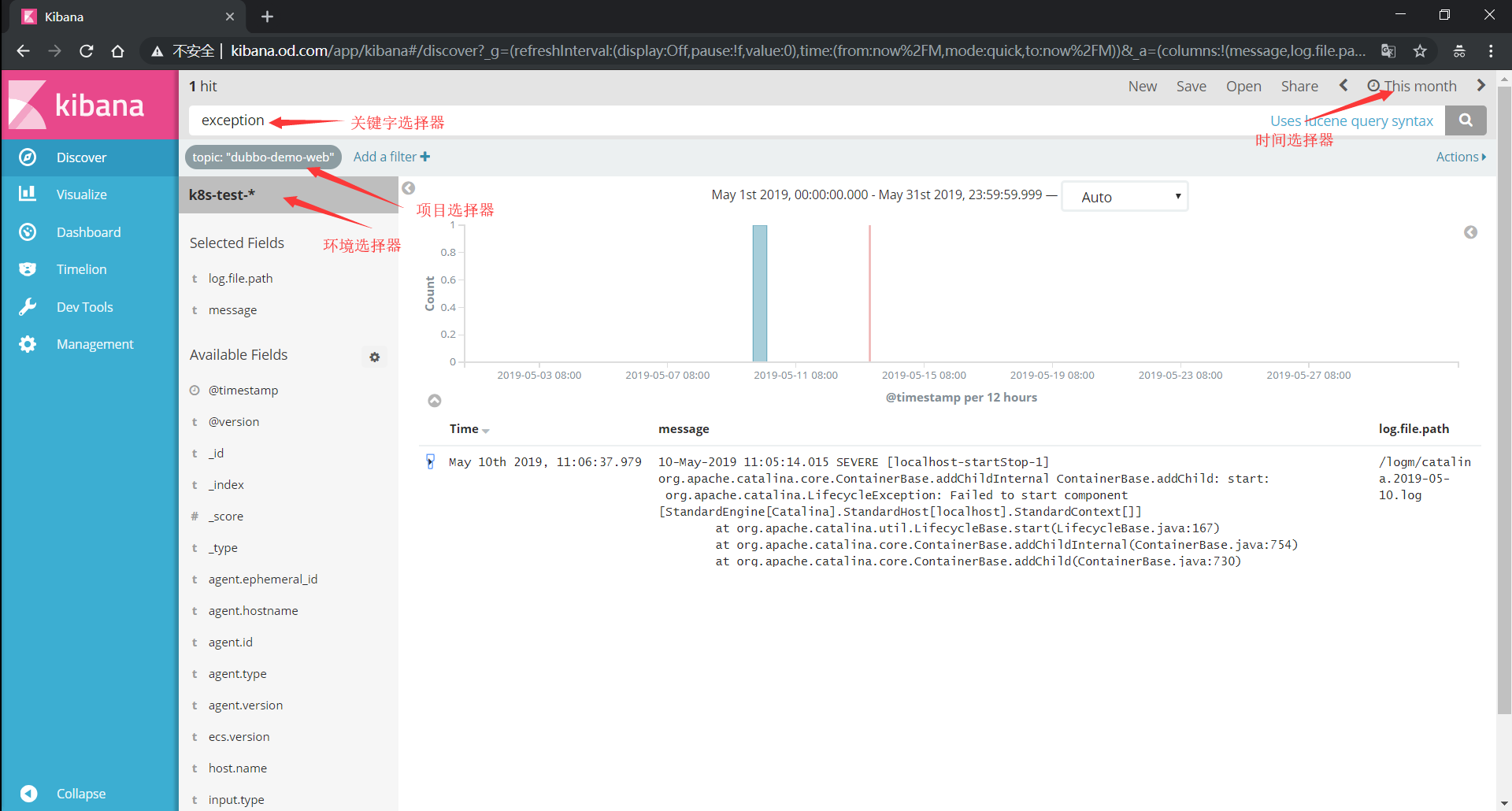

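Using Kibana

Field selection area

@timestamp

The timestamp of the log entry.

log.file.path

The name of the log file.

message

The content of the log entry.

Time picker

Environment selector

Select the logs of the desired environment:

k8s-test-

Project selector

Corresponds to the PROJ_NAME value of filebeat.

Add a filter

topic is ${PROJ_NAME}

dubbo-demo-service

Keyword selector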