Performance difference of graph-based and alignment-based hybrid error correction methods for error-prone long reads

针对 容易出错的长读,基于图 和 基于对准 的混合纠错方法的性能差异

Abstract

The error-prone third-generation sequencing (TGS) long reads can be corrected by the high-quality second-generation sequencing (SGS) short reads, which is referred to as hybrid error correction. We here investigate the influences of the principal algorithmic factors of two major types of hybrid error correction methods by mathematical modeling and analysis on both simulated and real data. Our study reveals the distribution of accuracy gain with respect to the original long read error rate. We also demonstrate that the original error rate of 19% is the limit for perfect correction, beyond which long reads are too error-prone to be corrected by these methods.

易出错的第三代测序(TGS)长reads可以通过高质量的第二代测序(SGS)短reads进行校正,即杂交纠错。

本文通过对模拟数据和真实数据的数学建模和分析,研究了两种主要类型的混合误差修正方法的主要算法因素的影响。

我们的研究揭示了相对于原始长读错误率的准确度增益的分布。

我们还证明,原始错误率为19%是完美纠正的极限,超过这个限度,长读的错误就太容易被这些方法纠正。

Background

Third-generation sequencing (TGS) technologies [1], including Pacific Biosciences (PacBio) and Oxford Nanopore Technologies (ONT), have been demonstrated useful in many biomedical research since the unprecedented read lengths (average for PacBio and ONT can be over 10 kb and 20 kb, and maximum over 60 kb and 800 kb) are very informative for addressing complex problems, such as genome assembly and haplotyping [1,2,3,4,5,6,7,8,9,10]. However, the high error rates of TGS data (average 10–15% for the raw data) [11,12,13,14] reduce the mappability and the resolution of downstream analysis. To address this limitation, the high-quality short reads have been used to correct the long reads, which is termed as hybrid error correction. The existing hybrid error correction methods can be classified into two categories: alignment-based method [15,16,17,18,19,20,21] and de Bruijn graph (DBG)-based method (referred as “graph-based method”) [22,23,24,25,26]. Regardless of the lower algorithmic complexity by the graph-based method than the alignment-based one [27] and the difference of software implementations, several principal factors have significant effects on the error correction performance for both methods: long read error rate, short read error rate, short read coverage, alignment criterion, and solid k-mer size. Although previous studies examined some of these factors separately in the corresponding software development [28,29,30], here we establish mathematical frameworks to perform a comprehensive investigation of all these factors in hybrid error correction. Through studying their influences on short read alignment rate and solid k-mer detection in DBG, we finally interrogate how these factors determinate the accuracy gain in hybrid error correction. This research does not only study the algorithmic frameworks of two major hybrid error correction methods, more importantly it also offers an informative guidance for method selection, parameter design, and future method development for long read error correction.

第三代测序(TGS)技术[1],包括太平洋生物科学(PacBio)和牛津纳米孔技术(ONT),在许多生物医学研究中被证明是有用的,因为前所未有的读取长度(PacBio和ONT的平均长度可超过10kb和20kb,最大可超过60kb和800kb)对于解决基因组组装和单倍型[1-10]等复杂问题非常有用]。 然而,TGS数据的高错误率(原始数据的平均10-15%)[11-14]降低了下游分析的可映射性和分辨率。 为了解决这一限制,高质量的短读被用来纠正长读,这被称为混合纠错。

现有的混合纠错方法可分为两类:基于对齐的方法[15-21]和基于de Bruijn图(DBG)的方法(称为“基于图的方法”)[22-26]。

尽管基于图形的方法比基于对齐的方法具有更低的算法复杂度[27]以及软件实现的差异,但几个主要因素对这两种方法的纠错性能都有显著的影响:长读错误率、短读错误率、短读覆盖率、对齐准则和实心k-mer大小。 虽然以前的研究在相应的软件开发[28-30]中分别研究了其中的一些因素,但在这里我们建立了数学框架,对混合纠错中的所有这些因素进行了全面的研究。 通过研究它们对DBG中短读对准率和实k-mer检测的影响,最后探讨了这些因素是如何决定混合纠错精度增益的。

本研究不仅研究了两种主要的混合纠错方法的算法框架,更重要的是为长读纠错的方法 选择、参数设计和未来方法开发提供了信息指导。

Results and discussion

Overall, we first evaluate the accuracy gains by the alignment-based and graph-based methods at each error rate level by mathematical modeling, following by validating the model fitness with simulated and real data. With these data and results, we study the influences of key algorithmic factors under different data scenarios, and compare two methods.

Two major stages of the alignment-based method determine the accuracy gain: short read alignment and consensus inference (Fig. 1a). Denote C as the number of short reads generated at a certain base in sequencing process, which is referred as the real short reads. At the first stage, the C real short reads are aligned to the long reads. Let N be the number of successfully aligned real short reads. Next, per the base of interest, the consensus of the aligned real short reads is generated as the corrected base. We define accuracy gain as γ − (1 − EA), where γ is the original long read error rate and EA is the expected accuracy after error correction:

总的来说,我们首先通过数学建模来评估在每个错误率水平下基于比对和基于图的方法所获得的精度,然后用模拟数据和真实数据验证模型的适用性。

利用这些数据和结果,我们研究了不同数据场景下关键算法因素的影响,并比较了两种方法。

基于比对的方法决定精度增益的两个主要阶段:短读比对和共识推断(图1a)。

表示C为测序过程中在一定碱基上产生的短读数,称为真实短读。

在第一阶段,C实际短读与长读对齐。

设N为成功对齐的实际短读的数量。

接下来,根据感兴趣的基值,生成对齐的实际短读的一致性作为修正的基值。

我们将精度增益定义为:out -(1−EA),其中,fae为原始长读错误率,EA为纠错后的期望精度:

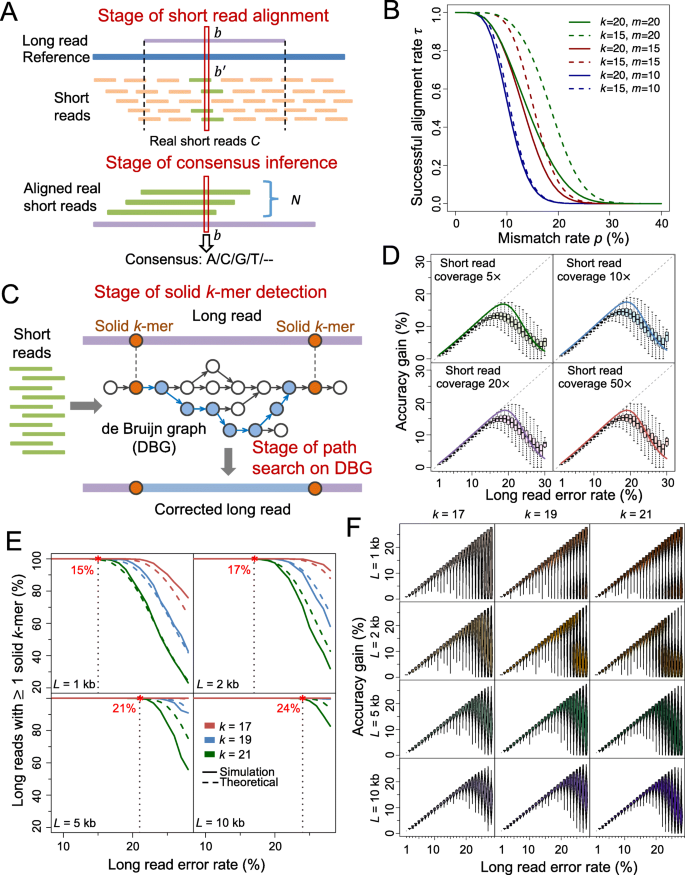

Illustration of alignment-based and graph-based method; results for model fitness and accuracy gain on simulated data.

a Schematic of alignment-based method. b is a certain base on the long read, and b′ is the corresponding base on the reference sequence. The C real short reads are aligned to the long read (with N of them being successfully aligned), and then the consensus is inferred at each base.

b Relationship of the successful alignment probability for short reads τ with the mismatch rate p, lower threshold on perfect match k-mer size k and the upper threshold of mismatches m. In spite of the changes of k or/and m, τ is near to one when p < 5%, and is near to zero when p > 30%. This indicates that mismatch rate is the most dominant factor on τ. As m increases from 10 to 20, the curves move upper (from blue to red and green), implying that τ increases with m. Moreover, the divergence between the dashed and solid blue, red, and green lines also shows an increasing tendency, which means the effect of k on τ also increases with m.

c Schematic of graph-based error correction method. DBG is built based on short reads. Solid k-mers are detected on the long reads. The fragment between two adjacent solid k-mers is then aligned with the correlated path on the DBG. The path is used to correct the fragment when certain criteria are satisfied.

d Accuracy gain at each error rate for simulated long reads corrected by alignment-based method. The boxplots represent the accuracy gain distribution for long reads. The solid lines represent the theoretical values. The dashed gray lines (diagonal lines) correspond to perfect correction.

e Proportion of simulated long reads with solid k-mer detected at each error rate level. The solid lines represent the theoretical values. The dashed lines represent the results on simulated long reads.

f Accuracy gain at each error rate for simulated long reads corrected by graph-based method.

L: long read length;

k: size of perfectly matched seed or solid k-mer

Pr(N = n) represents the probability that n real short read can be successfully aligned, corresponding to the stage of short read alignment, and g(n, β) is the probability that the consensus equals to the true base, corresponding to the stage of consensus inference. β is the short read error rate. At first we calculate Pr(N = n) via obtaining the probability of successfully aligning a single short read to long read, which highly depends on the tolerance of mismatches and the length of perfectly matched seed required by an aligner. For two sequences X and Y with equal length l, denote M as the number of mismatched bases, and K as the length of the largest perfectly matched seed. Let k be a lower threshold of K, and m be an upper threshold of M and thus the couple of conditions K ≥ k and M ≤ m sets up a criterion of alignment. The following theorem measures the probability τ that a single short read can be successfully aligned under the criterion.

Theorem 1. Let X and Y be two sequences with equal length l. Denote Xi and Yi (1 ≤ i ≤ l) as the ith bases of X and Y, respectively. Suppose all the events {Xi = Yi} are independent, and all the bases have a common mismatch rate p. Let τ(k, m, p, l) ≜ Pr(K ≥ k, M ≤ m), 0 ≤ m ≤ l, where τ is namely the probability that a short read can be successfully aligned to a target place on the long read by an aligner requiring a perfectly matched seed not shorter than k and the number of mismatched bases not more than m. We have:

where Q(n) = max {s| l − ks ≥ n} ⋀ (n + 1). τ increases with m and l, and decreases with k and p.

The proof is provided in Additional file 1: Note 1. Based on τ, we are able to calculate the alignment rate of N short reads Pr(N = n). Given a set of errors in a long read, alignments of short reads are not completely independent, so we consider short reads in several batches (Additional file 1: Note 2, Figure S1). The mismatch rate p can roughly be estimated by β + γ (Additional file 1: Note 3). The analytical results indicate that the mismatch rate (i.e., approximately the long read error rate, because β ≪ γ), is the most dominant factor on τ; as m increases, both τ and the effect of k on τ increase (Fig. 1b, Additional file 1: Note 4). The accuracy of consensus inference g(n, β) can be deducted based on binomial distribution (Methods, Additional file 1: Note 5). The theoretical calculation shows that shallow aligned short read coverage is enough to generate high-accuracy consensus (e.g., only 9× aligned short reads can achieve consensus with accuracy >99.99%), so short read alignment is the dominant stage that impacts accuracy gain (Additional file 1: Figure S2).

Two stages in the graph-based method, including detection of solid k-mer and path search in DBG, influence the accuracy gain (Fig. 1c). At the first stage, all k-mers on the long read are scanned to find the “solid k-mers” that exist in the DBG generated by short reads. At the second stage, all paths that link two adjacent solid k-mers or link a solid k-mer with the end of long read on the DBG are searched to find the optimal one to correct the long read. Let φ(k, γ, L) be the probability that the long read (with length L) contains at least one solid k-mer. According to Theorem 1, we have:

(see Methods, Additional file 1: Note 6, Figure S3 for details). To investigate the second stage, we examine the distance between adjacent solid regions, since it represents the overall difficulty of path search in DBG. We model the solid region distance by a truncated geometric distribution compounded with a geometric distribution, and its expectation increases with k-mer size k and long read error rate γ (see Methods for details).

Next, we examine the model fitness and accuracy gains of both methods on simulated data. The long reads and short reads are simulated from the E. coli reference genome (strain K-12 MG1655) (Additional file 1: Note 7) [31, 32]. The alignment-based software proovread [19] is applied to correct the long reads (Additional file 1: Note 8, Figure S4). The tendencies of the theoretical accuracy gains fit the actual accuracy gains on the simulated data under different short read coverages (Fig. 1d). When γ ≤ 15%, even if very shallow short read coverage is used (5×), the accuracy gain increases along the diagonal line, which implies nearly perfect correction. When γ ≥ 18%, the accuracy gain decreases and the corresponding variance increases, and thus very few reads can be perfectly corrected. These results show the upper limit of long read error rate that the alignment-based method can perfectly solve, and the similar results are demonstrated in the graph-based method (as shown below). Moreover, both theoretical calculation and simulated data reveal that the accuracy gain can rarely exceed 20%, although there is slight increment (e.g., <2% and <1%) with respect to short read coverage (e.g., from 5× to 10× and from 20× to 50×, respectively, Fig. 1d). Therefore, the hybrid error correction benefit marginally from increase of short read coverage, especially when it is greater than 10×.

To evaluate the model of graph-based method, we apply LoRDEC (version 0.5.3) [23] to correct the simulated long reads (Additional file 1: Note 9). The short read coverage is 10× in this evaluation. The overall tendencies of the theoretical solid k-mer detection rate φ with respect to the length of long read L and the required k-mer size k align well with the values generated from the simulated data (Fig. 1e), though φ is slightly higher when L is over 2 kb. Overall, the solid k-mer detection rate is close to 1 when long read error rate γ is below certain threshold (such as 15% for k = 21 and L = 1 kb), and it decreases dramatically as γ increases beyond the threshold. This threshold increase with L (e.g., from 15% to 24% for 1 to 10 kb given k = 21) (Fig. 1e). In addition, the increase of k-mer size has an overall negative effect on solid k-mer detection, which is more remarkable when long reads are shorter (Fig. 1e). Of note, high long read error rate results in high probability that no solid k-mer can be detected so that the long read cannot be corrected. Following solid k-mer detection, we investigate the distances between adjacent solid regions: for all k-mer sizes in the test, the theoretical distances are consistent with the actual values obtained in the simulated data at different levels of long read error rates (Additional file 1: Figure S5). Given a k-mer size, both the mean and variance of the distances increase remarkably when long read error rate is ≥18% while it rarely exceeds 500 bp otherwise (Additional file 1: Figure S5). In addition, the increase of k also leads to a substantial increment on the distance.

In term of accuracy gain, the simulated data show that long reads can be almost perfectly corrected by the graph-based method when the long read error rate γ ≤ 19%, and the accuracy gain decreases and the corresponding variance increases when γ > 19%. The corresponding change point of γ in the alignment-based method is ~ 15%. However, instead of a single peak of accuracy gain with respect to γ, there is a bimodal pattern with γ > 19% in some scenarios of the graph-based method (e.g., k ≥ 19 and L ≤ 2 kb): some long reads can be corrected almost perfectly while some others have zero or very low accuracy gain (Fig. 1f). The latter subset of long reads may likely contain no or only one solid k-mer, so no or very difficult correction is performed. When the length of long read L increases to ≥5 kb, the distribution of accuracy gain shrinks at every error rate level and the bimodal pattern fades. Because longer read length improves the probability of solid k-mer detection (see the abovementioned results and Fig. 1e), a larger proportion of long reads can be corrected even though not perfectly.

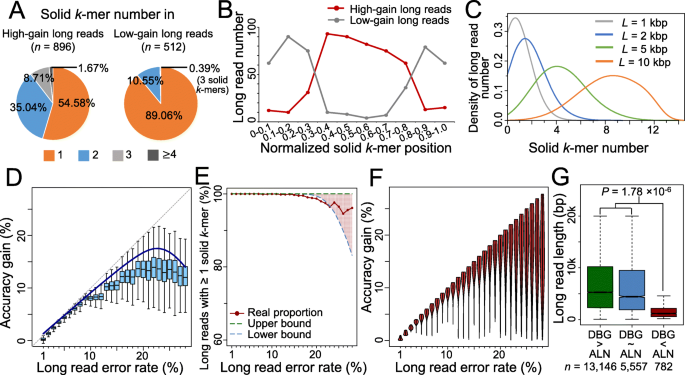

The bimodal pattern of accuracy gain is further investigated through a concrete scenario, in which k = 19, L = 1 kb, γ = 25%. The corrected reads are classified into two groups: “high-gain long reads” with accuracy gain >12.5%, and “low-gain long reads” otherwise. Much higher fraction of the low-gain long reads contains only one solid 19-mer than the high-gain long reads (89.04% vs. 54.58%, Fig. 2a), and overall, the former contain more solid 19-mers than the latter. Moreover, for long reads with single 19-mer, the locations of the 19-mers are different for two classes of long reads: at the middle of high-gain long reads, while near either end of low-gain long reads (Fig. 2b). When the solid k-mer occurs near an end of the long read, one fragment is particularly long so that the correction by path search in DBG becomes more difficult, resulting in lower accuracy gain. In the case that no solid 19-mer is detected, long reads are uncorrected and contribute to the modal with low accuracy again as well. As the read length increases, more reads contain multiple solid 19-mer (Fig. 2c) and the effect of fragments at the ends becomes marginal so that the bimodal pattern disappears.

Explanation of bimodal accuracy gain for graph-based method; model fitness and accuracy gain on real dataset. a Proportion of long reads with different solid k-mer number. Without loss of generosity, the simulated long reads with length of 1 kb and error rate of 25% are taken as example. A long read is labeled as “high-gain long read” of the accuracy gain is larger than 12.5% (half of the value of error rate), and “low-gain long read” otherwise. b Distribution of the single solid k-mer locations on the high-gain and low-gain long reads. Only the long reads with one solid k-mer are considered. c Distribution of solid k-mer number on the long reads with different lengths. d Accuracy gain distribution at each error rate level for alignment-based method. e Proportion of long reads with solid k-mer detected. Due to the mixture of different long read lengths, an upper boundary and lower boundary is provided. f Accuracy gain distribution at each error rate level for graph-based method. g Length distribution of long reads on which graph-based method (labeled as DBG) has better, equal, or worse performance than the alignment-based method (labeled as ALN). The p value is calculated by Wilcoxon rank sum test

We further study the accuracy gains on a real PacBio dataset [23] corrected by proovread and LoRDEC, respectively (Additional file 1: Note 8–10, Figure S6, Figure S7). Short reads are randomly sampled with coverage 10×. The overall tendency of the real accuracy gain by proovread is in accordance with the theoretical calculation of the alignment-based method, though there is slight overestimation by the latter (Fig. 2d). On the real data, long reads can rarely obtain accuracy gain >20% (Fig. 2d). However, when the long read error rate increases from 25 to 30%, the accuracy gain maintains at a range of 10–15% rather than showing a sharp decrease as the theoretical modeling. When evaluating the accuracy gain by LoRDEC on the real data, it should be noticed that the real data contains long reads with different lengths, in contrast to the fixed read length in the abovementioned mathematical model of the graph-based method. Despite this difference, the proportion of the real long reads with solid k-mer detected is within the theoretical range (Fig. 2e), and the pattern of accuracy gain is very similar with the simulated results (Fig. 2f and Fig. 1f): most long reads achieve nearly perfect correction when the error rate is <20%, and the variance becomes larger for higher error rates.

Furthermore, two methods are compared based on the real dataset. The difference of accuracy gains between two methods becomes remarkable when the long read error rate >15%. Among 19,485 long reads with original error rates >15%, LoRDEC outperforms proovread on 13,146 (67.47%) reads, i.e., the difference of accuracy gains is >2% (boxplots in Fig. 2d vs. violin plots in Fig. 2f). Two methods show similar accuracy gains in 5,557 (28.52%) long reads, i.e., the difference of accuracy gains is ≤2%. proovread performs better for the remaining 782 (4.01%) reads. The third group of long reads is significantly shorter than the other two groups (p value of Wilcoxon rank sum test 1.78 × 10−6, Fig. 2g). It is consistent with the abovementioned inference: for the graph-based method, shorter reads are more likely to contain few or no solid k-mers, and the location of the solid k-mer highly affects the correction (Fig. 2a–c).

In summary, the theoretical calculation by mathematical frameworks together with both analyses of simulated and real data shows how key algorithmic factors and data parameters affect the accuracy gains by two main types of hybrid error correction algorithms. When the original long read error rate is below certain thresholds (e.g., 15%), both methods can correct most errors. For highly error-prone long reads (especially γ ≥ 20%), the graph-based method can obtain generally higher accuracy gain, while the variance is also larger. Among such highly error-prone long reads, the alignment-based method tends to have more advantage in correcting relatively shorter ones (e.g., median length 1,195 bp in our test, Fig. 2g). Although it is not possible to analyze all published software, the results generated by proovread and LoRDEC are representative for the alignment-based and graph-based methods, respectively, as shown by our previous benchmark work on 10 error correction software [27]. Of note, sequencing errors along real long reads may not be independent, or short read coverage may not be evenly distributed (e.g., transcriptome data), so specific adjustment is necessary in the analysis of real data (see Additional file 1: Note 10–11 for details). As both PacBio and ONT improve the technologies, the error rates of most raw data become <20%. At this range, our results fit the real data very well and thus will be beneficial for the analyses of the real data and provide a guidance for method selection, parameter design (Additional file 1: Note 12–13, Figure S8) and future method development. In addition, for modeling the alignment-based method, the mathematical theorem is established to measure the probability of short read alignment, which also lays the groundwork of development and analyses of the other alignment-based algorithms.