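There are plenty of blogs online covering this book, and quite a few of them are well written. Emmmmm, mine is so rough that I'm almost too embarrassed to post it.

Went to bed at 3:30 and got up at 7, so it has been a sleepy day. I understood the theory but just could not make sense of the Logistic regression code, so I'm pasting the code here for now and will fill in the explanation once I've figured it out.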

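from numpy import *


def loadDataSet():
    """Read testSet.txt: two features and an integer class label per line."""
    dataMat = []
    labelMat = []
    with open('testSet.txt') as fr:  # the file is closed automatically
        for line in fr:
            lineArr = line.strip().split()
            # Prepend a constant 1.0 so that weights[0] acts as the intercept.
            dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])])
            labelMat.append(int(lineArr[2]))
    return dataMat, labelMat


def sigmoid(inX):
    # Logistic function: maps any real input into (0, 1).
    return 1.0 / (1 + exp(-inX))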

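def gradAscent(dataMatIn, classLabels):
    """Batch gradient ascent: every update uses the whole data set."""
    dataMatrix = mat(dataMatIn)                 # m x n matrix of samples
    labelMat = mat(classLabels).transpose()     # m x 1 column of labels
    m, n = shape(dataMatrix)
    alpha = 0.001                               # step size
    maxCycles = 500                             # number of updates
    weights = ones((n, 1))
    for k in range(maxCycles):
        h = sigmoid(dataMatrix * weights)       # matrix product: m x 1 predictions
        error = labelMat - h                    # m x 1 residuals
        # Step along the log-likelihood gradient X^T (y - h).
        weights = weights + alpha * dataMatrix.transpose() * error
    return weights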

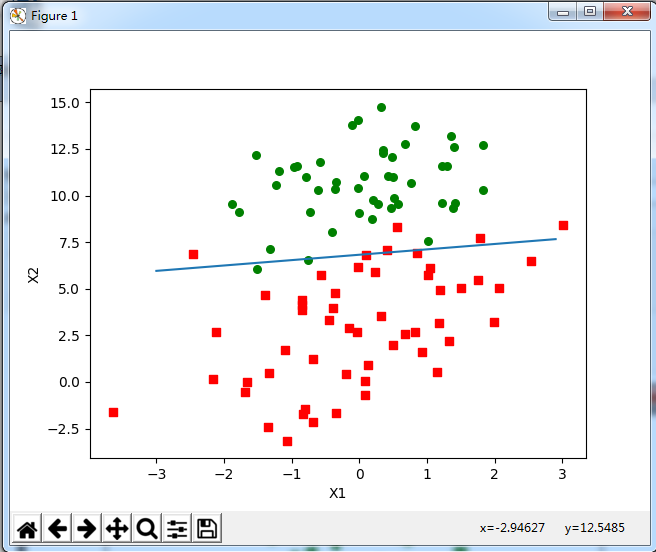

def plotBestFit(weights):
    """Scatter both classes and draw the decision boundary."""
    import matplotlib.pyplot as plt
    dataMat, labelMat = loadDataSet()
    dataArr = array(dataMat)
    n = shape(dataArr)[0]
    xcord1 = []
    ycord1 = []
    xcord2 = []
    ycord2 = []
    # Split the points by class so each class gets its own color.
    for i in range(n):
        if labelMat[i] == 1:
            xcord1.append(dataArr[i, 1])
            ycord1.append(dataArr[i, 2])
        else:
            xcord2.append(dataArr[i, 1])
            ycord2.append(dataArr[i, 2])
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(xcord1, ycord1, s=30, c='red', marker='s')
    ax.scatter(xcord2, ycord2, s=30, c='green')
    x = arange(-3.0, 3.0, 0.1)
    # The boundary is where w0 + w1*x1 + w2*x2 = 0 (sigmoid = 0.5); solve for x2.
    y = (-weights[0] - weights[1] * x) / weights[2]
    ax.plot(x, y)
    plt.xlabel('X1')
    plt.ylabel('X2')
    plt.show()

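def stocGradAscent0(dataMatrix, classLabels):
    """Stochastic gradient ascent: one pass, one sample per update."""
    m, n = shape(dataMatrix)
    alpha = 0.01
    weights = ones(n)
    for i in range(m):
        # dataMatrix must be a NumPy array here, so * is element-wise.
        h = sigmoid(sum(dataMatrix[i] * weights))   # scalar prediction
        error = classLabels[i] - h                  # scalar residual
        weights = weights + alpha * error * dataMatrix[i]
    return weights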

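def stocGradAscent1(dataMatrix, classLabels, numIter=150):
    """Improved stochastic gradient ascent: decaying step size, random sample order."""
    m, n = shape(dataMatrix)
    weights = ones(n)
    for j in range(numIter):
        dataIndex = list(range(m))              # samples not yet used in this pass
        for i in range(m):
            # The step size shrinks as training proceeds but never drops below 0.01.
            alpha = 4 / (1.0 + j + i) + 0.01
            randIndex = int(random.uniform(0, len(dataIndex)))
            # Map the position in dataIndex back to a real row number, so that
            # each sample is used exactly once per pass.
            sampleIndex = dataIndex[randIndex]
            h = sigmoid(sum(dataMatrix[sampleIndex] * weights))
            error = classLabels[sampleIndex] - h
            weights = weights + alpha * error * dataMatrix[sampleIndex]
            del dataIndex[randIndex]
    return weights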

def classifyVector(inX, weights):
    # Predict class 1 when the estimated probability exceeds 0.5, else class 0.
    prob = sigmoid(sum(inX * weights))
    if prob > 0.5:
        return 1
    else:
        return 0

def colicTest():

frTrain = open('horseColicTraining.txt')

frTest = open('horseColicTest.txt')

trainingSet = []

trainingLabels = []

for line in frTrain.readlines():

currLine = line.strip().split(' ')

lineArr = []

for i in range(21):

lineArr.append(float(currLine[i]))

trainingSet.append(lineArr)

trainingLabels.append(float(currLine[21]))

trainWeights = stocGradAscent1(array(trainingSet), trainingLabels, 500)

errorCount = 0

numTestVec = 0.0

for line in frTest.readlines():

numTestVec += 1.0

currLine = line.strip().split(' ')

lineArr = []

for i in range(21):

lineArr.append(float(currLine[i]))

if int(classifyVector(array(lineArr), trainWeights)) != int(currLine[21]):

errorCount += 1

errorRate = (float(errorCount / numTestVec))

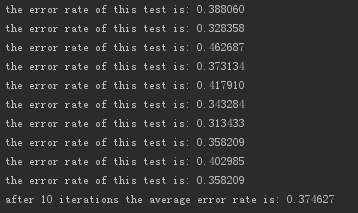

print("the error rate of this test is: %f" % errorRate)

return errorRate

def multiTest():
    """Run colicTest() several times and report the average error rate."""
    numTests = 10
    errorSum = 0.0
    for k in range(numTests):
        errorSum += colicTest()
    print("after %d iterations the average error rate is: %f"
          % (numTests, errorSum / float(numTests)))

if __name__ == '__main__':
    dataArr, labelMat = loadDataSet()
    # Alternative trainers:
    # weights = gradAscent(dataArr, labelMat)
    # weights = stocGradAscent0(array(dataArr), labelMat)
    weights = stocGradAscent1(array(dataArr), labelMat)
    # gradAscent returns a NumPy matrix; convert it to an array before plotting:
    # plotBestFit(weights.getA())
    plotBestFit(weights)
    multiTest()

The goal of Logistic regression is to find the best-fit parameters for a nonlinear function, the Sigmoid. An optimization algorithm does the solving.

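Among optimization algorithms, the most commonly used one is gradient ascent, which can in turn be simplified into stochastic gradient ascent.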
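For my own reference, here is where the update line in `gradAscent` comes from. This is a short derivation, assuming the objective being maximized is the usual log-likelihood of the labels (the code never writes the objective down, so that part is my assumption). The symbols $x_i$, $y_i$, $h_i$, $X$ and $w$ are my notation for one sample, its label, its prediction, the data matrix and the weights.

```latex
% Prediction for sample i, using the Sigmoid function
h_i = \sigma(w^{\top} x_i), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}

% Log-likelihood of the m labels
\ell(w) = \sum_{i=1}^{m} \bigl[\, y_i \log h_i + (1 - y_i) \log (1 - h_i) \,\bigr]

% Using \sigma'(z) = \sigma(z)\,(1 - \sigma(z)), the gradient collapses to
\nabla_w \ell(w) = \sum_{i=1}^{m} (y_i - h_i)\, x_i = X^{\top} (y - h)

% Batch gradient ascent (gradAscent): step along the full gradient
w \leftarrow w + \alpha\, X^{\top} (y - h)

% Stochastic gradient ascent (stocGradAscent0): step along one sample's term
w \leftarrow w + \alpha\, (y_i - h_i)\, x_i
```

So `error` in the code is $y - h$, and `dataMatrix.transpose() * error` is the gradient. The stochastic version just keeps one term of the sum per update.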

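The two perform about equally well, but stochastic gradient ascent uses fewer computing resources, because each update touches a single sample instead of the whole data set.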
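To convince myself of this, here is a minimal sketch that puts one batch update next to one stochastic update. It is not the book's code: it uses plain Python lists instead of NumPy, and the toy data, the function names (`batch_step`, `stochastic_step`, `accuracy`) and the step sizes are all made up for illustration.

```python
import math
import random


def sigmoid(z):
    # Numerically safe logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict(x, w):
    return sigmoid(sum(xi * wi for xi, wi in zip(x, w)))


def batch_step(X, y, w, alpha):
    """One batch update: the gradient sums over every sample."""
    grad = [0.0] * len(w)
    for x, label in zip(X, y):
        error = label - predict(x, w)
        for j, xj in enumerate(x):
            grad[j] += error * xj
    return [wj + alpha * gj for wj, gj in zip(w, grad)]


def stochastic_step(x, label, w, alpha):
    """One stochastic update: the gradient comes from a single sample."""
    error = label - predict(x, w)
    return [wj + alpha * error * xj for wj, xj in zip(w, x)]


def accuracy(X, y, w):
    hits = sum((predict(x, w) > 0.5) == (label == 1) for x, label in zip(X, y))
    return hits / len(X)


# Made-up data: [1.0, x1], class 1 when x1 > 0 and class 0 when x1 < 0.
xs = [0.5 + 0.05 * k for k in range(50)]
X = [[1.0, v] for v in xs] + [[1.0, -v] for v in xs]
y = [1] * 50 + [0] * 50

# Batch: 20 updates, each one touching all 100 samples.
w_batch = [1.0, 1.0]
for _ in range(20):
    w_batch = batch_step(X, y, w_batch, alpha=0.01)

# Stochastic: 20 shuffled passes, each update touching one sample.
rng = random.Random(0)
w_stoc = [1.0, 1.0]
order = list(range(len(X)))
for _ in range(20):
    rng.shuffle(order)
    for i in order:
        w_stoc = stochastic_step(X[i], y[i], w_stoc, alpha=0.01)

print("batch accuracy:     ", accuracy(X, y, w_batch))
print("stochastic accuracy:", accuracy(X, y, w_stoc))
```

The point to notice is the cost of a single update: `batch_step` loops over all of `X`, while `stochastic_step` only ever sees one row, so it never needs the full data set in memory at once.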

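It is also an online algorithm: it can update the parameters as soon as new data arrives, without re-reading the whole data set for a batch computation.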
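What "online" means in practice can be sketched like this. The class `OnlineLogisticRegression`, its methods and the little data stream are hypothetical, written only to illustrate the idea; the update rule itself is the same one used in `stocGradAscent0`.

```python
import math


def sigmoid(z):
    # Numerically safe logistic function (avoids overflow for large |z|).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


class OnlineLogisticRegression:
    """Hypothetical wrapper: keeps only the weights, never the past samples."""

    def __init__(self, n_features, alpha=0.1):
        self.weights = [1.0] * n_features   # same starting guess as the code above
        self.alpha = alpha

    def predict_proba(self, x):
        return sigmoid(sum(w * xi for w, xi in zip(self.weights, x)))

    def update(self, x, label):
        # One stochastic gradient ascent step on the sample that just arrived.
        error = label - self.predict_proba(x)
        self.weights = [w + self.alpha * error * xi
                        for w, xi in zip(self.weights, x)]


# Made-up stream: each item is ([1.0, x1], label), arriving one at a time.
stream = [([1.0, 2.0], 1), ([1.0, -2.0], 0),
          ([1.0, 1.5], 1), ([1.0, -1.0], 0)] * 50

model = OnlineLogisticRegression(n_features=2)
for x, label in stream:
    model.update(x, label)      # learn immediately, then discard the sample

print(model.predict_proba([1.0, 2.0]))
print(model.predict_proba([1.0, -2.0]))
```

Nothing is stored except `weights`, so a new sample costs one small update no matter how much data came before it.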