HBase shell, writing to and reading from HDFS, creating HBase tables, programming, enabling debug mode

1.2 Importing and exporting data to HDFS with Hadoop shell commands (1)

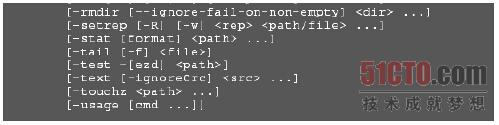

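HDFS provides a number of shell commands for accessing the file system, all built on top of the HDFS FileSystem API. Hadoop ships with a shell script, named hadoop, through which all of these operations are run from the command line. It is usually installed in $HADOOP_BIN, where $HADOOP_BIN is the full path of the Hadoop bin directory. Add $HADOOP_BIN to the $PATH environment variable so that every command can be run in the form hadoop fs -command.

To list all file system commands, run the hadoop command with the fs option and no further arguments.

- hadoop fs

The commands are named after what they do, and the names closely resemble Unix shell commands. Use the help option for a detailed description of a specific command.

- hadoop fs -help ls

These shell commands and a brief description of their parameters are available in the official online documentation at http://hadoop.apache.org/docs/r1.0.4/file_system_shell.html.

In this section we use Hadoop shell commands to import data into HDFS and to export data from HDFS. These commands are mostly used to load data, download processed data, manage the file system, and preview data. Mastering them is a prerequisite for using HDFS efficiently.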

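Getting ready

Download the weblog_entries.txt dataset from http://www.packtpub.com/support.

How to do it

Complete the following steps to copy weblog_entries.txt from the local file system into a designated folder on HDFS. Create a new folder in HDFS to hold the weblog_entries.txt file:

- hadoop fs -mkdir /data/weblogs

Copy weblog_entries.txt from the local file system into the folder just created on HDFS:

- hadoop fs -copyFromLocal weblog_entries.txt /data/weblogs

List information about the weblog_entries.txt file on HDFS:

- hadoop fs -ls /data/weblogs/weblog_entries.txt

Some results processed in Hadoop may be used directly by external systems, may need further processing by other systems, or may belong to a scenario that the MapReduce framework does not fit at all. In any of these cases the data has to be exported from HDFS. The simplest way to download data is the Hadoop shell.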

// Upload a local file to HDFS.

String target="hdfs://localhost:9000/user/Administrator/geoway_portal/tes2.dmp";

FileInputStream fis=new FileInputStream(new File("C:\\tes2.dmp")); // read the local file; the backslash must be escaped, otherwise \t is read as a tab

Configuration config=new Configuration();

FileSystem fs=FileSystem.get(URI.create(target), config);

OutputStream os=fs.create(new Path(target));

// copy; the final argument true closes both streams when done

IOUtils.copyBytes(fis, os, 4096, true);

System.out.println("Copy finished...");
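The copy step above passes an input stream, an output stream, a buffer size, and a close flag. A minimal sketch of what such a buffered copy does, using only the JDK, may make the arguments clearer. The copyBytes helper below is a hypothetical stand-in written for these notes, not Hadoop's implementation, and it returns a byte count only so the result is easy to check.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

public class StreamCopySketch {

    // Hypothetical helper (not Hadoop's IOUtils): copies everything from in to out
    // through a fixed-size buffer and optionally closes both streams afterwards.
    public static long copyBytes(InputStream in, OutputStream out, int bufferSize, boolean close)
            throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int read;
            // read() returns -1 once the end of the input is reached
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read);
                total += read;
            }
            out.flush();
        } finally {
            if (close) {
                in.close();
                out.close();
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // In-memory streams stand in for the local file and the HDFS output stream.
        byte[] data = "weblog entry".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long copied = copyBytes(new ByteArrayInputStream(data), sink, 4, true);
        System.out.println(copied + " bytes copied: " + sink.toString("UTF-8"));
    }
}
```

A small buffer (4 bytes here) forces several loop iterations, which is the same pattern the 4096-byte buffer follows on a real file.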

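Check the information for the file uploaded to HDFS:

Use the hadoop fs -ls shell command to list the files under geoway_portal:

The following code copies a local file to the HDFS cluster: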

package com.njupt.Hadoop;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

public class CopyToHDFS {

public static void main(String[] args) throws Exception{

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(conf);

Path source = new Path("/home/hadoop/word.txt");

Path dst = new Path("/user/root/njupt/");

fs.copyFromLocalFile(source,dst);

}

}

The code for downloading a file to the local machine with the HDFS Java API is as follows:

String file="hdfs://localhost:9000/user/Administrator/fooo/j-spatial.zip"; // HDFS file address

Configuration config=new Configuration();

FileSystem fs=FileSystem.get(URI.create(file),config); // build the FileSystem

InputStream is=fs.open(new Path(file)); // open the file for reading

IOUtils.copyBytes(is, new FileOutputStream(new File("c:\\likehua.zip")),2048, true); // save locally; the final true closes the input and output streams

./start

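About configuring environment variables

./hbase shell

Knowing how something works and being able to build a project with it are two different levels, two entirely different things.

Can timestamps be turned into something like a timetable??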

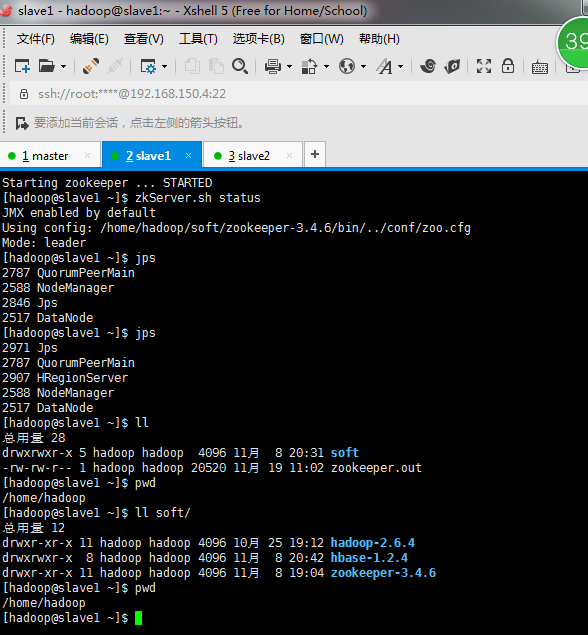

slave2:

slave1:

[root@slave1 ~]# ll

总用量 52

-rw-------. 1 root root 950 10月 14 16:02 anaconda-ks.cfg

-rw-r--r--. 1 root root 12081 5月 22 21:31 post-install

-rw-r--r--. 1 root root 552 5月 22 21:31 post-install.log

drwxr-xr-x. 2 root root 4096 10月 22 17:43 公共的

drwxr-xr-x. 2 root root 4096 10月 22 17:43 模板

drwxr-xr-x. 2 root root 4096 10月 22 17:43 视频

drwxr-xr-x. 2 root root 4096 10月 22 17:43 图片

drwxr-xr-x. 2 root root 4096 10月 22 17:43 文档

drwxr-xr-x. 2 root root 4096 10月 22 17:43 下载

drwxr-xr-x. 2 root root 4096 10月 22 17:43 音乐

drwxr-xr-x. 2 root root 4096 10月 22 17:43 桌面

[root@slave1 ~]# cd /etc/sysconfig/network-scripts/

How to switch quickly from the master panel to slave2? shift+tab

[root@slave1 network-scripts]# cat ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=yes

IPV6INIT=no

NAME=eth0

UUID=16a631ff-0a3b-4915-9d6d-99743e5b0b56

ONBOOT=yes

HWADDR=00:0C:29:66:10:7b

IPADDR=192.168.150.4

PREFIX=24

GATEWAY=192.168.150.2

DNS1=192.168.150.2

LAST_CONNECT=1477239855

[root@slave1 network-scripts]# su hadoop

[hadoop@slave1 network-scripts]$ cd

[hadoop@slave1 ~]$ ll

总用量 68

drwxrwxr-x 5 hadoop hadoop 4096 11月 8 20:31 soft

-rw-rw-r-- 1 hadoop hadoop 60181 11月 9 11:44 zookeeper.out

[hadoop@slave1 ~]$ zkServer.sh start

JMX enabled by default

Using config: /home/hadoop/soft/zookeeper-3.4.6/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@slave1 ~]$ zkServer.sh status

JMX enabled by default

Using config: /home/hadoop/soft/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: leader

[hadoop@slave1 ~]$ jps

2971 Jps

2787 QuorumPeerMain

2907 HRegionServer

2588 NodeManager

2517 DataNode

slave2:

[root@slave2 ~]# ifconfig

[root@slave2 ~]# su hadoop

[hadoop@slave2 ~]$ zkServer.

zkServer.cmd zkServer.sh

[hadoop@slave2 ~]$ zkServer.sh start

JMX enabled by default

Using config: /home/hadoop/soft/zookeeper-3.4.6/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@slave2 ~]$ jps

2609 NodeManager

2797 QuorumPeerMain

2534 DataNode

2824 Jps

[hadoop@slave2 ~]$

master:

[root@master ~]# su hadoop

[hadoop@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

16/11/19 10:55:16 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [master]

master: starting namenode, logging to /home/hadoop/soft/hadoop-2.6.4/logs/hadoop-hadoop-namenode-master.out

slave2: starting datanode, logging to /home/hadoop/soft/hadoop-2.6.4/logs/hadoop-hadoop-datanode-slave2.out

slave1: starting datanode, logging to /home/hadoop/soft/hadoop-2.6.4/logs/hadoop-hadoop-datanode-slave1.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/soft/hadoop-2.6.4/logs/hadoop-hadoop-secondarynamenode-master.out

16/11/19 10:55:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/soft/hadoop-2.6.4/logs/yarn-hadoop-resourcemanager-master.out

slave2: starting nodemanager, logging to /home/hadoop/soft/hadoop-2.6.4/logs/yarn-hadoop-nodemanager-slave2.out

slave1: starting nodemanager, logging to /home/hadoop/soft/hadoop-2.6.4/logs/yarn-hadoop-nodemanager-slave1.out

[hadoop@master ~]$ jps

5623 SecondaryNameNode

5466 NameNode

6050 Jps

5793 ResourceManager

[hadoop@master ~]$ zkServer.sh start

JMX enabled by default

Using config: /home/hadoop/soft/zookeeper-3.4.6/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@master ~]$ zkServer.sh status

JMX enabled by default

Using config: /home/hadoop/soft/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: follower

[hadoop@master ~]$ jps

5623 SecondaryNameNode

5466 NameNode

6117 QuorumPeerMain

5793 ResourceManager

6174 Jps

[hadoop@master ~]$ start-hbase.sh

starting master, logging to /home/hadoop/soft/hbase-1.2.4/logs/hbase-hadoop-master-master.out

192.168.150.3: starting regionserver, logging to /home/hadoop/soft/hbase-1.2.4/logs/hbase-hadoop-regionserver-master.out

192.168.150.5: starting regionserver, logging to /home/hadoop/soft/hbase-1.2.4/logs/hbase-hadoop-regionserver-slave2.out

192.168.150.4: starting regionserver, logging to /home/hadoop/soft/hbase-1.2.4/logs/hbase-hadoop-regionserver-slave1.out

[hadoop@master ~]$ jps

5623 SecondaryNameNode

6437 HRegionServer

5466 NameNode

6117 QuorumPeerMain

5793 ResourceManager

6547 Jps

6304 HMaster

[hadoop@master ~]$ hdfs dfs -ls /

16/11/19 11:01:23 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your pla

Found 3 items

drwxr-xr-x - hadoop supergroup 0 2016-11-19 10:59 /hbase

drwxr-xr-x - hadoop supergroup 0 2016-10-25 20:40 /tmp

drwxr-xr-x - hadoop supergroup 0 2016-10-25 19:43 /user

[hadoop@master ~]$ free

total used free shared buffers cached

Mem: 1012076 899848 112228 8 872 36104

-/+ buffers/cache: 862872 149204

Swap: 2031612 152652 1878960

[hadoop@master ~]$ free -m

total used free shared buffers cached

Mem: 988 880 107 0 0 35

-/+ buffers/cache: 844 144

Swap: 1983 148 1835

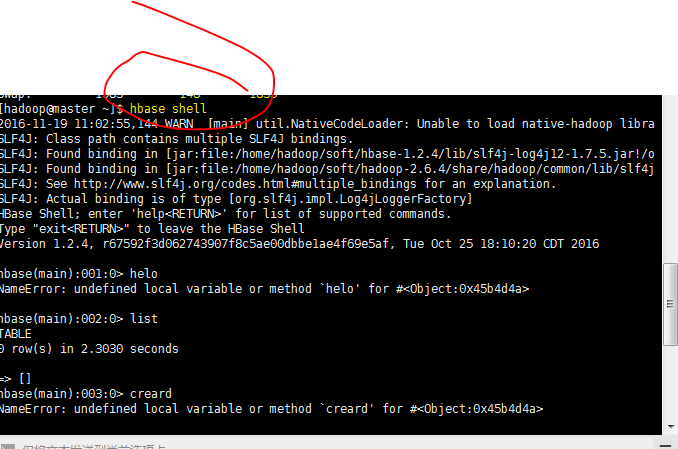

[hadoop@master ~]$ hbase shell

2016-11-19 11:02:55,144 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop librar

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/soft/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/or

SLF4J: Found binding in [jar:file:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/slf4j-

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.4, r67592f3d062743907f8c5ae00dbbe1ae4f69e5af, Tue Oct 25 18:10:20 CDT 2016

hbase(main):001:0> helo

NameError: undefined local variable or method `helo' for #<Object:0x45b4d4a>

hbase(main):002:0> list

TABLE

0 row(s) in 2.3030 seconds

=> []

hbase(main):003:0> creard

NameError: undefined local variable or method `creard' for #<Object:0x45b4d4a>

hbase(main):004:0> put "''

hbase(main):005:0" put

hbase(main):006:0" [hadoop@master ~]$

[hadoop@master ~]$

[hadoop@master ~]$ hbase shell

2016-11-19 11:12:23,455 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/soft/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.4, r67592f3d062743907f8c5ae00dbbe1ae4f69e5af, Tue Oct 25 18:10:20 CDT 2016

hbase(main):001:0> list

TABLE

0 row(s) in 1.0970 seconds

=> []

hbase(main):002:0>

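Deleting mistyped input in the HBase shell:

To delete characters, press Ctrl+Backspace together.

HBase: flexible and suited to structured and semi-structured data; if a web page changes, only the columns and qualifiers need to change. Data is stored by column.

Empty cells are not stored, so the stored data has no gaps and forms data blocks easily.

Traditional databases: a row keeps a slot for every column, so missing elements are left as blanks.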

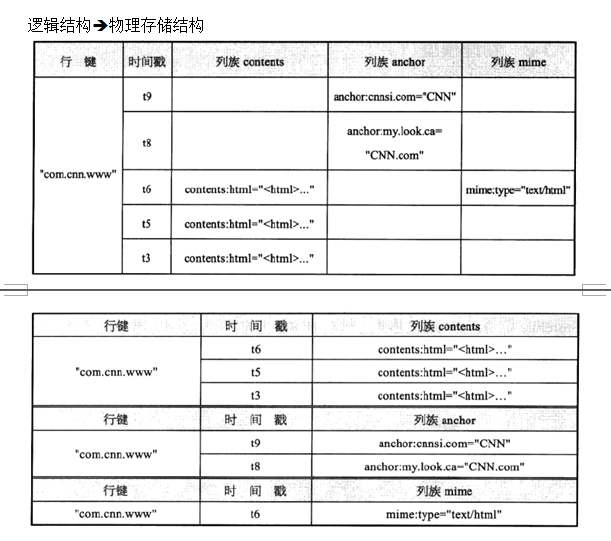

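Logical structure --> physical structure

Each additional timestamp in the first table may bring up to three new elements; the matching row in the second table then gains the corresponding sub-rows.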

HBase usage tutorial - 小码哥BASE64 - ITeye

http://coderbase64.iteye.com/blog/2074601

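HBase stores data by column as a sparse matrix (few column families, sparse data).

HBase is column-oriented, with the column family as the unit of storage; traditional databases store data by row.

Even for the same row and column, the value returned can differ, because each value carries a timestamp.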
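One way to picture why the same row and column can yield different values is to treat the table as nested sorted maps: row key, then column, then timestamp. The sketch below is a plain-Java illustration under that assumption; the class, its methods, and the sample keys are hypothetical and are not the HBase client API.

```java
import java.util.Comparator;
import java.util.Map;
import java.util.TreeMap;

public class VersionedCellSketch {

    // row key -> "family:qualifier" -> timestamp (newest first) -> value
    private final TreeMap<String, TreeMap<String, TreeMap<Long, String>>> rows = new TreeMap<>();

    public void put(String row, String column, long timestamp, String value) {
        rows.computeIfAbsent(row, r -> new TreeMap<String, TreeMap<Long, String>>())
            .computeIfAbsent(column, c -> new TreeMap<Long, String>(Comparator.<Long>reverseOrder()))
            .put(timestamp, value);
    }

    // Latest version of the cell, or null if the cell was never written.
    public String get(String row, String column) {
        TreeMap<Long, String> versions = versions(row, column);
        return versions == null ? null : versions.firstEntry().getValue();
    }

    // Newest version whose timestamp is <= the given timestamp, or null.
    public String get(String row, String column, long timestamp) {
        TreeMap<Long, String> versions = versions(row, column);
        if (versions == null) {
            return null;
        }
        // With a reversed comparator, ceilingEntry walks towards older timestamps.
        Map.Entry<Long, String> entry = versions.ceilingEntry(timestamp);
        return entry == null ? null : entry.getValue();
    }

    private TreeMap<Long, String> versions(String row, String column) {
        TreeMap<String, TreeMap<Long, String>> columns = rows.get(row);
        return columns == null ? null : columns.get(column);
    }

    public static void main(String[] args) {
        VersionedCellSketch table = new VersionedCellSketch();
        table.put("row1", "contents:html", 3L, "<html>v3");
        table.put("row1", "contents:html", 6L, "<html>v6");
        System.out.println(table.get("row1", "contents:html"));     // newest version
        System.out.println(table.get("row1", "contents:html", 4L)); // version as of timestamp 4
        System.out.println(table.get("row1", "anchor:a"));          // never written, nothing stored
    }
}
```

Columns that were never written simply have no entry, which is the sparse behaviour described in these notes.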

Reclamation policy: data is compacted and reorganized, and anything no longer needed is deleted.
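A rough sketch of that reclamation idea: several store files are merged into one, and versions beyond a retention limit are dropped. This is a simplified illustration with hypothetical names; it assumes only a maximum-versions rule, while a real compaction also has to deal with delete markers and expiry.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class CompactionSketch {

    // One store file: cell key (for example "row1/cf:a") -> timestamp -> value.
    public static TreeMap<String, TreeMap<Long, String>> newFile() {
        return new TreeMap<>();
    }

    public static void add(TreeMap<String, TreeMap<Long, String>> file,
                           String cell, long timestamp, String value) {
        file.computeIfAbsent(cell, c -> new TreeMap<Long, String>()).put(timestamp, value);
    }

    // Merge all files into one and keep at most maxVersions newest versions per cell.
    public static TreeMap<String, TreeMap<Long, String>> compact(
            List<TreeMap<String, TreeMap<Long, String>>> files, int maxVersions) {
        TreeMap<String, TreeMap<Long, String>> merged = new TreeMap<>();
        for (TreeMap<String, TreeMap<Long, String>> file : files) {
            for (Map.Entry<String, TreeMap<Long, String>> cell : file.entrySet()) {
                // Versions are kept newest first so the oldest can be trimmed from the end.
                merged.computeIfAbsent(cell.getKey(),
                        c -> new TreeMap<Long, String>(Comparator.<Long>reverseOrder()))
                      .putAll(cell.getValue());
            }
        }
        for (TreeMap<Long, String> versions : merged.values()) {
            while (versions.size() > maxVersions) {
                versions.pollLastEntry(); // drop the oldest remaining version
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        TreeMap<String, TreeMap<Long, String>> older = newFile();
        add(older, "row1/cf:a", 1L, "v1");
        add(older, "row1/cf:a", 2L, "v2");
        TreeMap<String, TreeMap<Long, String>> newer = newFile();
        add(newer, "row1/cf:a", 3L, "v3");
        add(newer, "row2/cf:a", 1L, "x1");

        List<TreeMap<String, TreeMap<Long, String>>> files = new ArrayList<>();
        files.add(older);
        files.add(newer);
        // With maxVersions = 2, version 1 of row1/cf:a is discarded.
        System.out.println(compact(files, 2));
    }
}
```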

Source code needed for HBase tuning

A physical machine has exactly one HRegionServer and may also host the HMaster (only one machine in the cluster does).

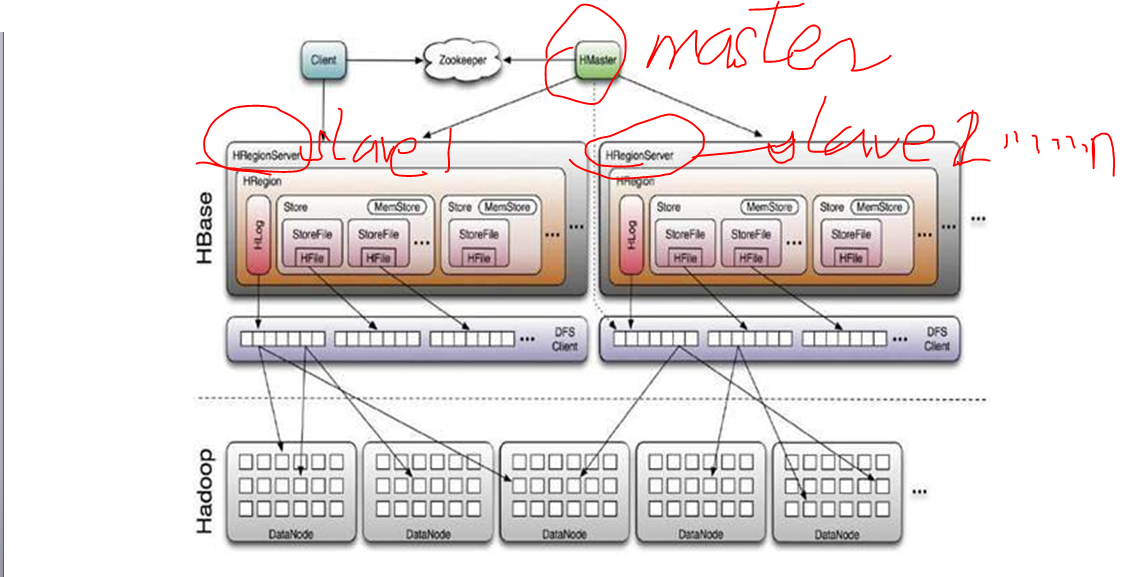

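See the figure: the relationship between the HMaster and the HRegionServers resembles that of the virtual machines master, slave1, slave2, ... n.

This architecture also resembles that of Hadoop itself, where the NameNode on the master stores the metadata and the actual data is stored on the DataNodes (there are several of them).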

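- The HMaster handles metadata and maintains the state of the whole HBase cluster.

- The HRegionServer does the actual data management (inserts, deletes, updates, and queries, that is, the database functions); user data requests are coordinated and handled by HRegionServers.

  - Each physical machine node can have only one HRegionServer.

  - HRegions are managed under an HRegionServer.

The HRegion holds the actual storage units.

An HRegion is created when we create a table; by default a newly created table has one HRegion. Within it, the number of basic storage units (Stores) is determined by the number of column families. Regions are divided by row key, with each range of row keys kept together.

Which HRegionServer manages a given HRegion is decided by the HMaster, which takes load balancing and resource management into account.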
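Because regions cover ranges of row keys, finding the server for a row amounts to a floor lookup on region start keys. The sketch below illustrates that idea in plain Java; the class and method names are hypothetical, the server names are borrowed from the cluster in these notes, and this is not how the HBase client is actually implemented.

```java
import java.util.Map;
import java.util.TreeMap;

public class RegionLookupSketch {

    // region start key -> server currently hosting that region
    private final TreeMap<String, String> regionsByStartKey = new TreeMap<>();

    // Records that the region starting at startKey is assigned to the given server.
    public void assign(String startKey, String server) {
        regionsByStartKey.put(startKey, server);
    }

    // The region covering rowKey is the one with the greatest start key <= rowKey.
    public String locate(String rowKey) {
        Map.Entry<String, String> region = regionsByStartKey.floorEntry(rowKey);
        if (region == null) {
            throw new IllegalStateException("no region covers row key " + rowKey);
        }
        return region.getValue();
    }

    public static void main(String[] args) {
        RegionLookupSketch table = new RegionLookupSketch();
        table.assign("", "slave1");  // region covering keys from "" up to "m"
        table.assign("m", "slave2"); // region covering keys from "m" onwards
        System.out.println(table.locate("apple"));
        System.out.println(table.locate("zebra"));
    }
}
```

Splitting a region would correspond to adding a new start key in the middle of an existing range and assigning it to some server.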

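An HRegion contains a series of structures.

HLog (HBase log): the log file. It is comparable to the redo log files in Oracle; the redo log records every insert, update, and delete.

The Store unit holds the actual data.

Data is stored by column family, and each column family is kept in a Store.

A Store has two parts: the MemStore, which is in-memory storage, and StoreFiles, which exist as HFiles on the DataNodes of HDFS.

An HRegionServer holds multiple HRegions, and new HRegions are produced as data keeps growing; there is only one HLog.

In Hadoop, storage is spread evenly, so HBase's HFiles end up distributed across the DataNodes. Overall the data is distributed twice, once in HBase and once in Hadoop.

First distribution: HBase --> HRegionServer

Second distribution: StoreFile --> HDFS

Reading a whole block at a time is efficient, which is why there is a MemStore (an in-memory buffer); once enough accumulates in the MemStore, it is written out as data blocks.

On disk, the data ultimately lives in HDFS.

One data block: 64

MemStore -> StoreFile, which must be in HFile form before it can be sent -> HDFS

Here the HMaster does no hands-on data work itself; it finds an idle (that is, load-balanced) HRegionServer to do the actual work.

The HRegionServer is the equivalent of a database server; its unit of data management is the HRegion.

The Store is where the data actually lives, persisted as multiple HFiles. The MemStore collects small pieces, which are written to a StoreFile once enough have accumulated.

Clients then store and retrieve data through it.

The HLog uses logging to make data durable.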
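The write path in these notes (log first, buffer in the MemStore, flush to a StoreFile once enough has accumulated) can be traced with a toy model. Everything below is a hypothetical simplification: the flush threshold counts entries rather than bytes, the "files" are in-memory maps, and none of the names come from HBase itself.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

public class WritePathSketch {

    private final List<String> wal = new ArrayList<>();                          // stands in for the HLog
    private TreeMap<String, String> memStore = new TreeMap<>();                  // sorted in-memory buffer
    private final List<TreeMap<String, String>> storeFiles = new ArrayList<>(); // stand in for HFiles
    private final int flushThreshold;

    public WritePathSketch(int flushThreshold) {
        this.flushThreshold = flushThreshold;
    }

    public void put(String key, String value) {
        wal.add(key + "=" + value);   // 1. record the edit in the log
        memStore.put(key, value);     // 2. buffer it in memory, kept sorted by key
        if (memStore.size() >= flushThreshold) {
            flush();                  // 3. write the buffer out as an immutable file
        }
    }

    private void flush() {
        storeFiles.add(memStore);
        memStore = new TreeMap<>();
    }

    // Reads check the MemStore first, then the store files from newest to oldest.
    public String get(String key) {
        String value = memStore.get(key);
        if (value != null) {
            return value;
        }
        for (int i = storeFiles.size() - 1; i >= 0; i--) {
            value = storeFiles.get(i).get(key);
            if (value != null) {
                return value;
            }
        }
        return null;
    }

    public int storeFileCount() { return storeFiles.size(); }

    public int walSize() { return wal.size(); }

    public static void main(String[] args) {
        WritePathSketch store = new WritePathSketch(2);
        store.put("a", "1");
        store.put("b", "2"); // second entry reaches the threshold and triggers a flush
        store.put("a", "3"); // newer value stays in the MemStore
        System.out.println("a=" + store.get("a") + ", b=" + store.get("b")
                + ", store files=" + store.storeFileCount() + ", log entries=" + store.walSize());
    }
}
```

The log exists so that edits still sitting in memory could be replayed after a crash; this sketch only records them and does not implement replay.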

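HBase access methods: see document 1.4 for details

thrift: serialization technology

Each Store corresponds to one column family

Are the 2 images there for backup? Do all files in HDFS need to be backed up? Do other places not need backup?

Impedance mismatch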

Redis tutorial | 菜鸟教程

http://www.runoob.com/redis/redis-tutorial.html

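About snapshots:

Copying one to someone else generates a log, which should be deleted; after copying one from someone else, the network configuration has to be adjusted.

The one installed now: the password is root

The teacher's opens directly; the user is fdq and the login is 111111

Muddled through with the professor and learned it in a complete mess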

Hadoop, HBase, MapReduce

Installing Hadoop - [ Hadoop study ]

http://www.kancloud.cn/zizhilong/hadoop/224101

虾皮工作室 (Xiapi Studio)

http://www.xiapistudio.com/

Typical Writable classes in Hadoop explained - wuyudong - 博客园

http://www.cnblogs.com/wuyudong/p/hadoop-writable.html

Hadoop study notes (1): implementing and summarizing the WordCount program - JD_Beatles - 博客园

http://www.cnblogs.com/pengyingzhi/p/5361008.html#3401994

The Hadoop WordCount example program explained, with examples - xwdreamer - 博客园

http://www.cnblogs.com/xwdreamer/archive/2011/01/04/2297049.html

Reading Hadoop WordCount - 犀利的代码总是很精炼 - ITeye

http://a123159521.iteye.com/blog/1226924

import java.io.IOException;

import java.net.URI;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

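/**

* Summary: how a Hadoop program processes data

*

* 1. The input files are read and split

* 2. TokenizerMapper.map() runs once for every line in a file and splits it into words

* 3. Each word is passed to KeyCombiner.reduce(), which sums its counts on the map side

* 4. After all words have been counted, the partial counts are merged and sent to IntSumReducer.reduce() for final processing

*/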

public class WordCount {

private static Configuration conf;

private static String hdfsPath = "hdfs://192.168.150.3:9000"; // HDFS host

private static Path inputPath = new Path(hdfsPath + "/tmp/wordcount/in"); // full HDFS input path

private static String outPathStr = "/tmp/wordcount/out"; // HDFS output path

private static Path outPath = new Path(hdfsPath + outPathStr); // full HDFS output path

/**

* Mapper

*

* The four type parameters: the first two are the input key/value types, the last two are the output key/value types. The output types here must match the input types of KeyCombiner.

*/

public static class TokenizerMapper extends Mapper<Object,Text,Text,IntWritable>{

/**

* one: the count for each word occurrence, always 1. It could also be created inline, as in context.write(word, new IntWritable(1))

*/

private final static IntWritable one=new IntWritable(1);

/**

* word: temporarily holds each word split out of the line

*/

private Text word =new Text();

/**

* @param key the offset of the line in the file; of little use here

* @param value the content of the current line

* @param context

*/

public void map(Object key,Text value,Context context) throws IOException,InterruptedException{

System.out.println("mapper --- line content:"+value);

StringTokenizer itr=new StringTokenizer(value.toString()); // split the line on whitespace

while (itr.hasMoreTokens()) { // true while more tokens remain

word.set(itr.nextToken()); // the next token, i.e. the text up to the next delimiter

//System.out.println("mapper --- split into word:"+word);

// emit as a key/value pair towards the reduce side

context.write(word, one);

}

}

}

/**

* Reducer

*

* The four type parameters: the first two are the input key/value types, the last two are the output key/value types

*

* The input types here must match the output types of KeyCombiner

*/

public static class IntSumReducer extends Reducer<Text,IntWritable,Text,IntWritable> {

/**

* result: holds the number of occurrences of the word

*/

private IntWritable result = new IntWritable();

/**

* @param key the word

* @param values the collection of counts for the word

* @param context

*/

public void reduce(Text key, Iterable<IntWritable> values,Context context) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) { // iterate over the counts

int getval = val.get(); // read the value

sum += getval; // accumulate

//System.out.println("reduce --- getval:"+getval);

}

System.out.println("reduce --- key:"+key +",sum:"+sum);

result.set(sum); // store the total

context.write(key, result); // write the total to the job output

}

}

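/**

* Combiner

*

* The four type parameters: the first two are the input key/value types, the last two are the output key/value types

*

* The input types here must match the output types of TokenizerMapper

*

* The output types here must match the input types of IntSumReducer

*/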

public static class KeyCombiner extends Reducer<Text,IntWritable,Text,IntWritable> {

/**

* result: holds the number of occurrences of the word

*/

private IntWritable result = new IntWritable();

/**

* @param key the word

* @param values the collection of counts for the word

* @param context

*/

public void reduce(Text key, Iterable<IntWritable> values,Context context) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) { // iterate over the counts

int getval = val.get(); // read the value

sum += getval; // accumulate

//System.out.println("reduce --- getval:"+getval);

}

System.out.println("combiner --- key:"+key+",sum:"+sum);

result.set(sum); // store the partial total

context.write(key, result); // pass the result on to IntSumReducer

}

}

/**

* Execution flow

*

* input files -> TokenizerMapper -> KeyCombiner -> IntSumReducer -> output files

*

* @param args

* @throws Exception

*/

public static void main(String[] args) throws Exception {

System.setProperty("hadoop.home.dir", "D:\\soft\\hadoop-2.6.4"); // backslashes must be escaped in a Java string

// delete the temporary output directory

WordCount.deleteDir();

conf = new Configuration();

Job job = Job.getInstance(conf); // get a job instance that uses conf

job.setJobName("word count"); // set the job name

job.setJarByClass(WordCount.class); // locate the job jar through this class

job.setMapperClass(TokenizerMapper.class); // class that does the map work

job.setCombinerClass(KeyCombiner.class); // class that does the combining

job.setReducerClass(IntSumReducer.class); // class that does the reduce work

job.setOutputKeyClass(Text.class); // class of the output key

job.setOutputValueClass(IntWritable.class); // class of the output value

FileInputFormat.addInputPath(job, inputPath); // input path

FileOutputFormat.setOutputPath(job, outPath); // where the output is persisted

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

/**

* Delete the temporary output directory

*/

private static void deleteDir(){

Configuration conf = new Configuration();

FileSystem fs;

try {

fs = FileSystem.get(URI.create(hdfsPath),conf);

Path p=new Path(outPathStr);

boolean a=fs.delete(p,true);

System.out.println(a);

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

System.out.println("delete Dir failed!");

}

}

}

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable>{

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

}

public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}
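The map, combine, reduce flow used by both WordCount listings can be traced without a cluster. The sketch below is a plain-Java imitation under simplifying assumptions: each input line stands in for the output of one map task, the combiner and the reducer share one summing function, and the class and method names are hypothetical rather than part of Hadoop.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

public class WordCountFlowSketch {

    // Map step: emit (word, 1) for every whitespace-separated token in the line.
    public static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<String, Integer>(itr.nextToken(), 1));
        }
        return pairs;
    }

    // Combine and reduce use the same logic: sum the counts for each key.
    public static Map<String, Integer> sumByKey(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> sums = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            sums.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return sums;
    }

    public static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> combined = new ArrayList<>();
        for (String line : lines) {
            // Combiner: pre-aggregate the pairs of one "map task" before they are merged.
            combined.addAll(sumByKey(map(line)).entrySet());
        }
        // Reducer: sum the partial counts from all map tasks.
        return sumByKey(combined);
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("hello hadoop hello", "hello hbase")));
    }
}
```

Summing is associative and commutative, which is why the second listing can reuse IntSumReducer as its combiner while the first listing defines a separate KeyCombiner with the same body.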

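tar jxvf is an extraction command

It extracts files compressed with bzip2

-j use bzip2 (*.bz2)

-x extract

-v print information while extracting

-f specify the archive file

See also the following

tar -cvf a.tar a create a tar archive of file a

tar -tvf a.tar list the files contained in the tar archive

tar -xvf a.tar extract the files from the tar archive

tar -rvf a.tar b append file b to the archive a.tar

tar -Avf a.tar c.tar append the archive c.tar to the archive a.tar

tar -zcvf a.tar.gz a create a gzip-compressed tar archive of file a

tar -ztvf a.tar.gz list the contents of the gzip-compressed tar archive

tar -zxvf aa.tar.gz extract the contents of aa.tar.gz

tar -jcvf aa.tar.bz2 aa create a bzip2-compressed tar archive of file aa

tar -jtvf aa.tar.bz2 list the contents of the bzip2-compressed tar archive

tar -jxvf aa.tar.bz2 extract the contents of aa.tar.bz2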

The StringTokenizer class - Techome - 新浪博客

http://blog.sina.com.cn/s/blog_45c6564d0100kmee.html

start-hbase.sh

stop-hbase.sh

status

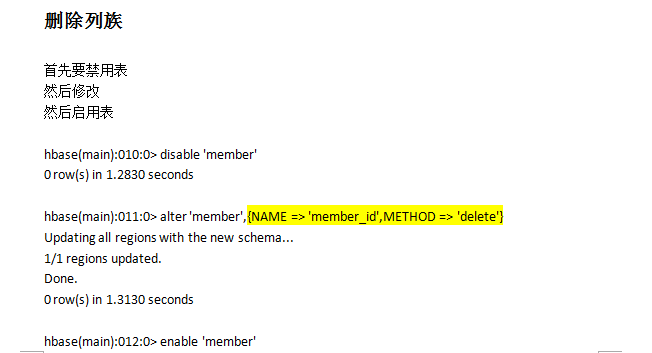

Deleting by a specified column family

11.22.2016

2016-11-22 17:33:08,101 INFO [main] util.ServerCommandLine: env:QTINC=/usr/lib64/qt-3.3/include

2016-11-22 17:33:08,101 INFO [main] util.ServerCommandLine: env:USER=hadoop

2016-11-22 17:33:08,101 INFO [main] util.ServerCommandLine: env:QTLIB=/usr/lib64/qt-3.3/lib

2016-11-22 17:33:08,101 INFO [main] util.ServerCommandLine: env:KDE_IS_PRELINKED=1

2016-11-22 17:33:08,101 INFO [main] util.ServerCommandLine: env:HBASE_SECURITY_LOGGER=INFO,RFAS

2016-11-22 17:33:08,101 INFO [main] util.ServerCommandLine: env:HOME=/home/hadoop

2016-11-22 17:33:08,101 INFO [main] util.ServerCommandLine: env:LESSOPEN=||/usr/bin/lesspipe.sh %s

2016-11-22 17:33:08,102 INFO [main] util.ServerCommandLine: env:HBASE_START_FILE=/tmp/hbase-hadoop-regionserver.autorestart

2016-11-22 17:33:08,102 INFO [main] util.ServerCommandLine: env:HBASE_LOGFILE=hbase-hadoop-regionserver-master.log

2016-11-22 17:33:08,102 INFO [main] util.ServerCommandLine: env:LANG=zh_CN.UTF-8

2016-11-22 17:33:08,102 INFO [main] util.ServerCommandLine: env:SSH_ASKPASS=/usr/libexec/openssh/gnome-ssh-askpass

2016-11-22 17:33:08,102 INFO [main] util.ServerCommandLine: env:HBASE_LOG_PREFIX=hbase-hadoop-regionserver-master

2016-11-22 17:33:08,102 INFO [main] util.ServerCommandLine: env:HBASE_IDENT_STRING=hadoop

2016-11-22 17:33:08,102 INFO [main] util.ServerCommandLine: vmName=Java HotSpot(TM) 64-Bit Server VM, vmVendor=Oracle Corporation, vmVersion=24.65-b04

2016-11-22 17:33:08,111 INFO [main] util.ServerCommandLine: vmInputArguments=[-Dproc_regionserver, -XX:OnOutOfMemoryError=kill -9 %p, -Xmx8G, -XX:+UseConcMarkSweepGC, -XX:PermSize=128m, -XX:MaxPermSize=128m, -Dhbase.log.dir=/home/hadoop/soft/hbase-1.2.4/logs, -Dhbase.log.file=hbase-hadoop-regionserver-master.log, -Dhbase.home.dir=/home/hadoop/soft/hbase-1.2.4, -Dhbase.id.str=hadoop, -Dhbase.root.logger=INFO,RFA, -Djava.library.path=/home/hadoop/soft/hadoop-2.6.4/lib/native, -Dhbase.security.logger=INFO,RFAS]

2016-11-22 17:33:08,594 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2016-11-22 17:33:09,651 INFO [main] regionserver.RSRpcServices: regionserver/master/192.168.150.3:16020 server-side HConnection retries=350

2016-11-22 17:33:11,094 INFO [main] ipc.SimpleRpcScheduler: Using deadline as user call queue, count=3

2016-11-22 17:33:11,261 INFO [main] ipc.RpcServer: regionserver/master/192.168.150.3:16020: started 10 reader(s) listening on port=16020

2016-11-22 17:33:12,096 INFO [main] impl.MetricsConfig: loaded properties from hadoop-metrics2-hbase.properties

2016-11-22 17:33:12,567 INFO [main] impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2016-11-22 17:33:12,567 INFO [main] impl.MetricsSystemImpl: HBase metrics system started

2016-11-22 17:33:16,916 INFO [main] fs.HFileSystem: Added intercepting call to namenode#getBlockLocations so can do block reordering using class class org.apache.hadoop.hbase.fs.HFileSystem$ReorderWALBlocks

2016-11-22 17:33:17,793 INFO [main] zookeeper.RecoverableZooKeeper: Process identifier=regionserver:16020 connecting to ZooKeeper ensemble=192.168.150.3:2181,192.168.150.4:2181,192.168.150.5:2181

2016-11-22 17:33:17,835 INFO [main] zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-11-22 17:33:17,835 INFO [main] zookeeper.ZooKeeper: Client environment:host.name=master

2016-11-22 17:33:17,835 INFO [main] zookeeper.ZooKeeper: Client environment:java.version=1.7.0_67

2016-11-22 17:33:17,835 INFO [main] zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

2016-11-22 17:33:17,835 INFO [main] zookeeper.ZooKeeper: Client environment:java.home=/usr/java/jdk1.7.0_67/jre

2016-11-22 17:33:17,835 INFO [main] zookeeper.ZooKeeper: Client environment:java.class.path=/home/hadoop/soft/hbase-1.2.4/conf:/usr/java/jdk1.7.0_67/lib/tools.jar:/home/hadoop/soft/hbase-1.2.4:/home/hadoop/soft/hbase-1.2.4/lib/activation-1.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/aopalliance-1.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/apacheds-i18n-2.0.0-M15.jar:/home/hadoop/soft/hbase-1.2.4/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/home/hadoop/soft/hbase-1.2.4/lib/api-asn1-api-1.0.0-M20.jar:/home/hadoop/soft/hbase-1.2.4/lib/api-util-1.0.0-M20.jar:/home/hadoop/soft/hbase-1.2.4/lib/asm-3.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/avro-1.7.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/aws-java-sdk-core-1.10.77.jar:/home/hadoop/soft/hbase-1.2.4/lib/aws-java-sdk-s3-1.11.34.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-beanutils-1.7.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-beanutils-core-1.8.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-cli-1.2.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-codec-1.9.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-collections-3.2.2.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-compress-1.4.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-configuration-1.6.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-daemon-1.0.13.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-digester-1.8.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-el-1.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-httpclient-3.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-io-2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-lang-2.6.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-logging-1.2.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-math-2.2.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-math3-3.1.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/commons-net-3.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/disruptor-3.3.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/findbugs-annotations-1.3.9-1.jar:/home/hadoop/soft/hbase-1.2.4/lib/guava-12.0.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/guice-3.0.jar:/home/hadoop/
soft/hbase-1.2.4/lib/guice-servlet-3.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-annotations-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-ant-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-archives-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-archives-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-archives-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-auth-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-aws-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-common-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-common-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-common-2.6.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-common-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-datajoin-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-datajoin-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-datajoin-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-distcp-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-distcp-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-distcp-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-extras-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-extras-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-extras-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-gridmix-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-gridmix-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-gridmix-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-hdfs-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-hdfs-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-hdfs-2.6.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-hdfs-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-hdfs-nfs-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-kms-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-app-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-
mapreduce-client-app-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-app-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-common-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-common-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-common-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-core-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-core-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-core-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-hs-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-hs-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-hs-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-hs-plugins-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-hs-plugins-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-hs-plugins-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-jobclient-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-jobclient-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-jobclient-2.6.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-jobclient-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-shuffle-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-shuffle-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-client-shuffle-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-examples-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-examples-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-mapreduce-examples-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-nfs-2.6.4.jar:/home/hadoop/soft/hbase-
1.2.4/lib/hadoop-openstack-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-rumen-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-rumen-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-rumen-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-sls-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-sls-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-sls-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-streaming-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-streaming-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-streaming-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-api-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-api-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-applications-distributedshell-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-applications-distributedshell-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-applications-distributedshell-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-applications-unmanaged-am-launcher-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-applications-unmanaged-am-launcher-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-applications-unmanaged-am-launcher-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-client-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-client-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-client-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-common-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-common-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-common-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-registry-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-applicationhistoryservice-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-applicationhistoryservice-2.6.4-sources.jar:/home/had
oop/soft/hbase-1.2.4/lib/hadoop-yarn-server-applicationhistoryservice-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-common-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-common-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-common-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-nodemanager-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-nodemanager-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-nodemanager-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-resourcemanager-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-resourcemanager-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-resourcemanager-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-tests-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-tests-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-tests-2.6.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-tests-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-web-proxy-2.6.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-web-proxy-2.6.4-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hadoop-yarn-server-web-proxy-2.6.4-test-sources.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-annotations-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-annotations-1.2.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-client-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-common-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-common-1.2.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-examples-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-external-blockcache-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-hadoop2-compat-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-hadoop-compat-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-it-1.2.4.jar:/home/hadoo
p/soft/hbase-1.2.4/lib/hbase-it-1.2.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-prefix-tree-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-procedure-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-protocol-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-resource-bundle-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-rest-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-server-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-server-1.2.4-tests.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-shell-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/hbase-thrift-1.2.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/htrace-core-3.1.0-incubating.jar:/home/hadoop/soft/hbase-1.2.4/lib/httpclient-4.2.5.jar:/home/hadoop/soft/hbase-1.2.4/lib/httpcore-4.4.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/jackson-core-asl-1.9.13.jar:/home/hadoop/soft/hbase-1.2.4/lib/jackson-jaxrs-1.9.13.jar:/home/hadoop/soft/hbase-1.2.4/lib/jackson-mapper-asl-1.9.13.jar:/home/hadoop/soft/hbase-1.2.4/lib/jackson-xc-1.9.13.jar:/home/hadoop/soft/hbase-1.2.4/lib/jamon-runtime-2.4.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/jasper-compiler-5.5.23.jar:/home/hadoop/soft/hbase-1.2.4/lib/jasper-runtime-5.5.23.jar:/home/hadoop/soft/hbase-1.2.4/lib/javax.inject-1.jar:/home/hadoop/soft/hbase-1.2.4/lib/java-xmlbuilder-0.4.jar:/home/hadoop/soft/hbase-1.2.4/lib/jaxb-api-2.2.2.jar:/home/hadoop/soft/hbase-1.2.4/lib/jaxb-impl-2.2.3-1.jar:/home/hadoop/soft/hbase-1.2.4/lib/jcodings-1.0.8.jar:/home/hadoop/soft/hbase-1.2.4/lib/jersey-client-1.9.jar:/home/hadoop/soft/hbase-1.2.4/lib/jersey-core-1.9.jar:/home/hadoop/soft/hbase-1.2.4/lib/jersey-guice-1.9.jar:/home/hadoop/soft/hbase-1.2.4/lib/jersey-json-1.9.jar:/home/hadoop/soft/hbase-1.2.4/lib/jersey-server-1.9.jar:/home/hadoop/soft/hbase-1.2.4/lib/jets3t-0.9.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/jettison-1.3.3.jar:/home/hadoop/soft/hbase-1.2.4/lib/jetty-6.1.26.jar:/home/hadoop/soft/hbase-1.2.4/lib/jetty-sslengine-6.1.26.jar:/home/hadoop/soft/hbase-1.2.4/lib/jetty-util-6.1.26.jar:/h
ome/hadoop/soft/hbase-1.2.4/lib/joni-2.1.2.jar:/home/hadoop/soft/hbase-1.2.4/lib/jruby-complete-1.6.8.jar:/home/hadoop/soft/hbase-1.2.4/lib/jsch-0.1.42.jar:/home/hadoop/soft/hbase-1.2.4/lib/jsp-2.1-6.1.14.jar:/home/hadoop/soft/hbase-1.2.4/lib/jsp-api-2.1-6.1.14.jar:/home/hadoop/soft/hbase-1.2.4/lib/junit-4.12.jar:/home/hadoop/soft/hbase-1.2.4/lib/leveldbjni-all-1.8.jar:/home/hadoop/soft/hbase-1.2.4/lib/libthrift-0.9.3.jar:/home/hadoop/soft/hbase-1.2.4/lib/log4j-1.2.17.jar:/home/hadoop/soft/hbase-1.2.4/lib/metrics-core-2.2.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/netty-all-4.0.23.Final.jar:/home/hadoop/soft/hbase-1.2.4/lib/paranamer-2.3.jar:/home/hadoop/soft/hbase-1.2.4/lib/protobuf-java-2.5.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/servlet-api-2.5-6.1.14.jar:/home/hadoop/soft/hbase-1.2.4/lib/servlet-api-2.5.jar:/home/hadoop/soft/hbase-1.2.4/lib/slf4j-api-1.7.7.jar:/home/hadoop/soft/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar:/home/hadoop/soft/hbase-1.2.4/lib/snappy-java-1.0.4.1.jar:/home/hadoop/soft/hbase-1.2.4/lib/spymemcached-2.11.6.jar:/home/hadoop/soft/hbase-1.2.4/lib/xmlenc-0.52.jar:/home/hadoop/soft/hbase-1.2.4/lib/xz-1.0.jar:/home/hadoop/soft/hbase-1.2.4/lib/zookeeper-3.4.6.jar:/home/hadoop/soft/hadoop-2.6.4/etc/hadoop:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jettison-1.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jsr305-1.3.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jsp-api-2.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-lang-2.6.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-collections-3.2.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-configuration-1.6.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/curator-client-2.6.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/asm-3.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jackson-xc-1.9.13
.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/gson-2.2.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/htrace-core-3.0.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/hadoop-annotations-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/httpclient-4.2.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/mockito-all-1.8.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-io-2.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/hadoop-auth-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jersey-server-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-httpclient-3.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jersey-json-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jsch-0.1.42.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/activation-1.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-cli-1.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jersey-core-1.9.jar:/home/hadoop/soft/hadoop-2.6.4
/share/hadoop/common/lib/paranamer-2.3.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/zookeeper-3.4.6.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/httpcore-4.2.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-el-1.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/xmlenc-0.52.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/hamcrest-core-1.3.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/guava-11.0.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-digester-1.8.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jetty-util-6.1.26.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jets3t-0.9.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/curator-framework-2.6.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-codec-1.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-logging-1.1.3.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/junit-4.11.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/stax-api-1.0-2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/log4j-1.2.17.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/avro-1.7.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/servlet-api-2.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/jetty-6.1.26.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-compress-1.4.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/commo
n/lib/slf4j-log4j12-1.7.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-math3-3.1.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/commons-net-3.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/netty-3.6.2.Final.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/xz-1.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/hadoop-nfs-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/hadoop-common-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/hadoop-common-2.6.4-tests.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/asm-3.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-io-2.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-el-1.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/guava-11.0.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar
:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/hadoop-hdfs-2.6.4-tests.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/hdfs/hadoop-hdfs-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jettison-1.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-lang-2.6.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-client-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/asm-3.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/javax.inject-1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-io-2.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-server-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/aopalliance-1.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-ht
tpclient-3.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-json-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/activation-1.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-cli-1.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-core-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/guice-3.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/guava-11.0.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-codec-1.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/log4j-1.2.17.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jline-0.9.94.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/servlet-api-2.5.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/jetty-6.1.26.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/lib/xz-1.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-registry-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-client-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.4.jar:/home/hadoop/soft/hadoop-2
.6.4/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-api-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-common-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-common-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/asm-3.2.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/javax.inject-1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/guice-3.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/junit-4.11.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapredu
ce/lib/jersey-guice-1.9.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/lib/xz-1.0.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.4-tests.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.4.jar:/home/hadoop/soft/hadoop-2.6.4/contrib/capacity-scheduler/*.jar

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:java.library.path=/home/hadoop/soft/hadoop-2.6.4/lib/native

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:os.name=Linux

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:os.arch=amd64

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:os.version=2.6.32-642.el6.x86_64

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:user.name=hadoop

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:user.home=/home/hadoop

2016-11-22 17:33:17,836 INFO [main] zookeeper.ZooKeeper: Client environment:user.dir=/home/hadoop/soft/hbase-1.2.4

2016-11-22 17:33:17,844 INFO [main] zookeeper.ZooKeeper: Initiating client connection, connectString=192.168.150.3:2181,192.168.150.4:2181,192.168.150.5:2181 sessionTimeout=90000 watcher=regionserver:160200x0, quorum=192.168.150.3:2181,192.168.150.4:2181,192.168.150.5:2181, baseZNode=/hbase

2016-11-22 17:33:17,953 INFO [main-SendThread(192.168.150.5:2181)] zookeeper.ClientCnxn: Opening socket connection to server 192.168.150.5/192.168.150.5:2181. Will not attempt to authenticate using SASL (unknown error)

2016-11-22 17:33:17,998 INFO [main-SendThread(192.168.150.5:2181)] zookeeper.ClientCnxn: Socket connection established to 192.168.150.5/192.168.150.5:2181, initiating session

2016-11-22 17:33:18,035 INFO [main-SendThread(192.168.150.5:2181)] zookeeper.ClientCnxn: Session establishment complete on server 192.168.150.5/192.168.150.5:2181, sessionid = 0x3588b8bd1a70002, negotiated timeout = 40000

2016-11-22 17:33:18,201 INFO [RpcServer.responder] ipc.RpcServer: RpcServer.responder: starting

2016-11-22 17:33:18,202 INFO [RpcServer.listener,port=16020] ipc.RpcServer: RpcServer.listener,port=16020: starting

2016-11-22 17:33:18,659 INFO [main] mortbay.log: Logging to org.slf4j.impl.Log4jLoggerAdapter(org.mortbay.log) via org.mortbay.log.Slf4jLog

2016-11-22 17:33:18,670 INFO [main] http.HttpRequestLog: Http request log for http.requests.regionserver is not defined

2016-11-22 17:33:18,717 INFO [main] http.HttpServer: Added global filter 'safety' (class=org.apache.hadoop.hbase.http.HttpServer$QuotingInputFilter)

2016-11-22 17:33:18,718 INFO [main] http.HttpServer: Added global filter 'clickjackingprevention' (class=org.apache.hadoop.hbase.http.ClickjackingPreventionFilter)

2016-11-22 17:33:18,726 INFO [main] http.HttpServer: Added filter static_user_filter (class=org.apache.hadoop.hbase.http.lib.StaticUserWebFilter$StaticUserFilter) to context regionserver

2016-11-22 17:33:18,726 INFO [main] http.HttpServer: Added filter static_user_filter (class=org.apache.hadoop.hbase.http.lib.StaticUserWebFilter$StaticUserFilter) to context static

2016-11-22 17:33:18,726 INFO [main] http.HttpServer: Added filter static_user_filter (class=org.apache.hadoop.hbase.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs

2016-11-22 17:33:18,810 INFO [main] http.HttpServer: Jetty bound to port 16030

2016-11-22 17:33:18,810 INFO [main] mortbay.log: jetty-6.1.26

2016-11-22 17:33:21,264 INFO [main] mortbay.log: Started SelectChannelConnector@0.0.0.0:16030

2016-11-22 17:33:21,631 INFO [regionserver/master/192.168.150.3:16020] zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x66bc473e connecting to ZooKeeper ensemble=192.168.150.3:2181,192.168.150.4:2181,192.168.150.5:2181

2016-11-22 17:33:21,632 INFO [regionserver/master/192.168.150.3:16020] zookeeper.ZooKeeper: Initiating client connection, connectString=192.168.150.3:2181,192.168.150.4:2181,192.168.150.5:2181 sessionTimeout=90000 watcher=hconnection-0x66bc473e0x0, quorum=192.168.150.3:2181,192.168.150.4:2181,192.168.150.5:2181, baseZNode=/hbase

2016-11-22 17:33:21,637 INFO [regionserver/master/192.168.150.3:16020-SendThread(192.168.150.4:2181)] zookeeper.ClientCnxn: Opening socket connection to server 192.168.150.4/192.168.150.4:2181. Will not attempt to authenticate using SASL (unknown error)

2016-11-22 17:33:21,641 INFO [regionserver/master/192.168.150.3:16020-SendThread(192.168.150.4:2181)] zookeeper.ClientCnxn: Socket connection established to 192.168.150.4/192.168.150.4:2181, initiating session

2016-11-22 17:33:21,648 INFO [regionserver/master/192.168.150.3:16020-SendThread(192.168.150.4:2181)] zookeeper.ClientCnxn: Session establishment complete on server 192.168.150.4/192.168.150.4:2181, sessionid = 0x2588b290c690004, negotiated timeout = 40000

2016-11-22 17:33:22,008 INFO [regionserver/master/192.168.150.3:16020] regionserver.HRegionServer: ClusterId : 3c1b7721-de8f-42a6-90a9-8c0a694da768

2016-11-22 17:33:22,216 INFO [regionserver/master/192.168.150.3:16020] regionserver.MemStoreFlusher: globalMemStoreLimit=3.2 G, globalMemStoreLimitLowMark=3.0 G, maxHeap=8.0 G

2016-11-22 17:33:22,241 INFO [regionserver/master/192.168.150.3:16020] regionserver.HRegionServer: CompactionChecker runs every 10sec

2016-11-22 17:33:22,362 INFO [regionserver/master/192.168.150.3:16020] regionserver.RegionServerCoprocessorHost: System coprocessor loading is enabled

2016-11-22 17:33:22,362 INFO [regionserver/master/192.168.150.3:16020] regionserver.RegionServerCoprocessorHost: Table coprocessor loading is enabled

2016-11-22 17:33:22,376 INFO [regionserver/master/192.168.150.3:16020] regionserver.HRegionServer: reportForDuty to master=master,16000,1479807189530 with port=16020, startcode=1479807192653

2016-11-22 17:33:22,996 WARN [regionserver/master/192.168.150.3:16020] regionserver.HRegionServer: reportForDuty failed; sleeping and then retrying.

2016-11-22 17:33:26,000 INFO [regionserver/master/192.168.150.3:16020] regionserver.HRegionServer: reportForDuty to master=master,16000,1479807189530 with port=16020, startcode=1479807192653

2016-11-22 17:33:26,084 INFO [regionserver/master/192.168.150.3:16020] hfile.CacheConfig: Allocating LruBlockCache size=3.20 GB, blockSize=64 KB

2016-11-22 17:33:26,103 INFO [regionserver/master/192.168.150.3:16020] hfile.CacheConfig: Created cacheConfig: blockCache=LruBlockCache{blockCount=0, currentSize=3520936, freeSize=3428966488, maxSize=3432487424, heapSize=3520936, minSize=3260862976, minFactor=0.95, multiSize=1630431488, multiFactor=0.5, singleSize=815215744, singleFactor=0.25}, cacheDataOnRead=true, cacheDataOnWrite=false, cacheIndexesOnWrite=false, cacheBloomsOnWrite=false, cacheEvictOnClose=false, cacheDataCompressed=false, prefetchOnOpen=false

2016-11-22 17:33:26,336 INFO [regionserver/master/192.168.150.3:16020] wal.WALFactory: Instantiating WALProvider of type class org.apache.hadoop.hbase.wal.DefaultWALProvider

2016-11-22 17:33:26,390 INFO [regionserver/master/192.168.150.3:16020] wal.FSHLog: WAL configuration: blocksize=128 MB, rollsize=121.60 MB, prefix=master%2C16020%2C1479807192653.default, suffix=, logDir=hdfs://192.168.150.3:9000/hbase/WALs/master,16020,1479807192653, archiveDir=hdfs://192.168.150.3:9000/hbase/oldWALs

2016-11-22 17:33:26,655 INFO [regionserver/master/192.168.150.3:16020] wal.FSHLog: Slow sync cost: 199 ms, current pipeline: []

2016-11-22 17:33:26,656 INFO [regionserver/master/192.168.150.3:16020] wal.FSHLog: New WAL /hbase/WALs/master,16020,1479807192653/master%2C16020%2C1479807192653.default.1479807206391

2016-11-22 17:33:26,742 INFO [regionserver/master/192.168.150.3:16020] regionserver.MetricsRegionServerWrapperImpl: Computing regionserver metrics every 5000 milliseconds

2016-11-22 17:33:26,761 INFO [regionserver/master/192.168.150.3:16020] regionserver.ReplicationSourceManager: Current list of replicators: [master,16020,1479807192653, slave1,16020,1479712512545, master,16020,1479712420627] other RSs: [master,16020,1479807192653, slave2,16020,1479813638869, slave1,16020,1479807188273]

2016-11-22 17:33:27,468 INFO [main-EventThread] replication.ReplicationTrackerZKImpl: /hbase/rs/slave2,16020,1479813638869 znode expired, triggering replicatorRemoved event

2016-11-22 17:33:27,490 INFO [SplitLogWorker-master:16020] regionserver.SplitLogWorker: SplitLogWorker master,16020,1479807192653 starting

2016-11-22 17:33:27,495 INFO [regionserver/master/192.168.150.3:16020] regionserver.HeapMemoryManager: Starting HeapMemoryTuner chore.

2016-11-22 17:33:27,518 INFO [regionserver/master/192.168.150.3:16020] regionserver.HRegionServer: Serving as master,16020,1479807192653, RpcServer on master/192.168.150.3:16020, sessionid=0x3588b8bd1a70002

2016-11-22 17:33:27,542 INFO [regionserver/master/192.168.150.3:16020] quotas.RegionServerQuotaManager: Quota support disabled
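The log above identifies the region server as `master,16020,1479807192653`. HBase server names take the form hostname,port,startcode, where the startcode is the server's start time in epoch milliseconds. The following is a minimal sketch that splits such a name and decodes the startcode. The class `ServerNameParts` is a hypothetical helper written for this illustration and is not part of the HBase API.

```java
import java.time.Instant;

// Hypothetical helper (not an HBase class): splits "host,port,startcode".
class ServerNameParts {
    final String host;
    final int port;
    final long startCode; // epoch milliseconds

    ServerNameParts(String host, int port, long startCode) {
        this.host = host;
        this.port = port;
        this.startCode = startCode;
    }

    // Parse a server name string as printed in the region server log.
    static ServerNameParts parse(String serverName) {
        String[] parts = serverName.split(",");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected host,port,startcode: " + serverName);
        }
        return new ServerNameParts(
                parts[0].trim(),
                Integer.parseInt(parts[1].trim()),
                Long.parseLong(parts[2].trim()));
    }

    // Interpret the startcode as a UTC instant.
    Instant startedAt() {
        return Instant.ofEpochMilli(startCode);
    }

    public static void main(String[] args) {
        ServerNameParts sn = parse("master,16020,1479807192653");
        System.out.println(sn.host + " port " + sn.port + " started " + sn.startedAt());
    }
}
```

For the name in this log, the decoded instant is 2016-11-22T09:33:12.653Z. If the host clock runs at UTC+8, that is 17:33:12 local time, a few seconds before the log lines shown here. The same decoding tells the stale entry `master,16020,1479712420627` in the replicator list apart from the current one: it carries an earlier startcode.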

[hadoop@master soft]$ hbase shell

2016-11-22 17:34:14,980 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/soft/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/soft/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.4, r67592f3d062743907f8c5ae00dbbe1ae4f69e5af, Tue Oct 25 18:10:20 CDT 2016

hbase(main):001:0> list

TABLE

0 row(s) in 0.3460 seconds

=> []

hbase(main):002:0> create 'scores','grad','course'

0 row(s) in 1.3900 seconds

=> Hbase::Table - scores

hbase(main):003:0> put 'scores','zkd','grad:','5'

0 row(s) in 0.3950 seconds

hbase(main):004:0> list

TABLE

scores

1 row(s) in 0.0220 seconds

=> ["scores"]

hbase(main):005:0> put 'scores','zkd','course:math','97'

0 row(s) in 0.0260 seconds

hbase(main):006:0> get 'scores','zkd'

COLUMN CELL

course:math timestamp=1479807433445, value=97

grad: timestamp=1479807365109, value=5

2 row(s) in 0.0730 seconds

hbase(main):007:0> scan 'scores'

ROW COLUMN+CELL

zkd column=course:math, timestamp=1479807433445, value=97

zkd column=grad:, timestamp=1479807365109, value=5

1 row(s) in 0.0450 seconds
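In the session above, `get` ends with "2 row(s)" while `scan` ends with "1 row(s)", although both show the same two cells of row `zkd`. The `get` footer in this shell version appears to count cells, whereas `scan` counts row keys. The toy model below is only an in-memory sketch of the row / column layout, written to make that distinction concrete. `ToyTable` is a hypothetical class, it does not use the HBase client API, and it ignores timestamps and versions.

```java
import java.util.Collections;
import java.util.Map;
import java.util.TreeMap;

// Toy in-memory model of a table; NOT the HBase client API.
class ToyTable {
    // row key -> ("family:qualifier" -> value); both levels are kept sorted.
    private final Map<String, Map<String, String>> rows = new TreeMap<>();

    // Store one cell, creating the row on first use.
    void put(String row, String column, String value) {
        rows.computeIfAbsent(row, k -> new TreeMap<>()).put(column, value);
    }

    // Return all cells of one row (empty if the row does not exist).
    Map<String, String> get(String row) {
        return rows.getOrDefault(row, Collections.emptyMap());
    }

    // Number of distinct row keys.
    int rowCount() {
        return rows.size();
    }

    // Total number of cells across all rows.
    int cellCount() {
        int n = 0;
        for (Map<String, String> cells : rows.values()) {
            n += cells.size();
        }
        return n;
    }

    public static void main(String[] args) {
        ToyTable scores = new ToyTable();
        scores.put("zkd", "grad:", "5");
        scores.put("zkd", "course:math", "97");
        System.out.println(scores.get("zkd"));
        System.out.println(scores.rowCount() + " row, " + scores.cellCount() + " cells");
    }
}
```

Because the inner map is sorted, `course:math` is listed before `grad:`, the same order the shell prints, even though `grad:` was written first.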

hbase(main):008:0> list

TABLE

scores

1 row(s) in 0.0180 seconds

=> ["scores"]

hbase(main):009:0> disable 'menmber

hbase(main):010:0' disable 'menmber'

hbase(main):011:0' disable 'member'

hbase(main):012:0'

hbase(main):013:0' disable 'scores'

hbase(main):014:0' '

SyntaxError: (hbase):10: syntax error, unexpected tIDENTIFIER

disable 'menmber'

^

hbase(main):015:0> disable 'scores'

0 row(s) in 2.3940 seconds

hbase(main):016:0> drop 'scores'

0 row(s) in 1.3230 seconds
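The session disables `scores` before dropping it because HBase only drops a table that is already disabled. The sketch below models that rule as a tiny state machine. `ToyCatalog` and its error messages are hypothetical and written for this illustration. They are not the HBase Admin API or real shell output.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of the table lifecycle; NOT the HBase Admin API.
class ToyCatalog {
    enum State { ENABLED, DISABLED }

    private final Map<String, State> tables = new HashMap<>();

    // Look up a table's state, failing for unknown tables.
    private State stateOf(String name) {
        State s = tables.get(name);
        if (s == null) {
            throw new IllegalArgumentException("Unknown table " + name);
        }
        return s;
    }

    // A newly created table starts out enabled.
    void create(String name) {
        if (tables.containsKey(name)) {
            throw new IllegalArgumentException("Table already exists: " + name);
        }
        tables.put(name, State.ENABLED);
    }

    void disable(String name) {
        stateOf(name);
        tables.put(name, State.DISABLED);
    }

    void enable(String name) {
        stateOf(name);
        tables.put(name, State.ENABLED);
    }

    // Dropping is only allowed once the table has been disabled.
    void drop(String name) {
        if (stateOf(name) != State.DISABLED) {
            throw new IllegalStateException("Table " + name + " must be disabled before drop");
        }
        tables.remove(name);
    }

    boolean exists(String name) {
        return tables.containsKey(name);
    }

    public static void main(String[] args) {
        ToyCatalog catalog = new ToyCatalog();
        catalog.create("scores");
        try {
            catalog.drop("scores"); // rejected: the table is still enabled
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        catalog.disable("scores");
        catalog.drop("scores");
        System.out.println("exists after drop: " + catalog.exists("scores"));
    }
}
```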

hbase(main):017:0> describe 'member'

ERROR: Unknown table member!

Here is some help for this command:

Describe the named table. For example:

hbase> describe 't1'

hbase> describe 'ns1:t1'

Alternatively, you can use the abbreviated 'desc' for the same thing.

hbase> desc 't1'

hbase> desc 'ns1:t1'

hbase(main):018:0> create 'member','member_id','address','info'

0 row(s) in 1.2870 seconds

=> Hbase::Table - member

hbase(main):019:0> list

TABLE

member

1 row(s) in 0.0120 seconds

=> ["member"]

hbase(main):020:0> describe 'member'

Table member is ENABLED

member

COLUMN FAMILIES DESCRIPTION

{NAME => 'address', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

{NAME => 'info', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

{NAME => 'member_id', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

3 row(s) in 0.0720 seconds

hbase(main):021:0> disable 'member'

0 row(s) in 2.3090 seconds

hbase(main):022:0> list

TABLE

member

1 row(s) in 0.0170 seconds

=> ["member"]

hbase(main):023:0> is_disabled 'member'

true

0 row(s) in 0.0220 seconds

hbase(main):024:0> is_disabled 'scores'

ERROR: Unknown table scores!

Here is some help for this command:

Is named table disabled? For example:

hbase> is_disabled 't1'

hbase> is_disabled 'ns1:t1'

hbase(main):025:0> enable 'member'

0 row(s) in 1.3500 seconds

hbase(main):026:0> create 'tmp_member','member'

0 row(s) in 1.2740 seconds

=> Hbase::Table - tmp_member

hbase(main):027:0> describe 'tmp_member'

Table tmp_member is ENABLED

tmp_member

COLUMN FAMILIES DESCRIPTION

{NAME => 'member', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}

1 row(s) in 0.0320 seconds

hbase(main):028:0> list

TABLE

member

tmp_member

2 row(s) in 0.0160 seconds

=> ["member", "tmp_member"]

hbase(main):029:0> diable 'tmp_member'

NoMethodError: undefined method `diable' for #<Object:0x64d4ebfb>

hbase(main):030:0> disable 'tmp_member'

0 row(s) in 2.2810 seconds

hbase(main):031:0> put 'member','xueba','info:age','25'

0 row(s) in 0.0250 seconds

hbase(main):032:0> put 'member','xueba','info:birthday','1989-06-19'

0 row(s) in 0.0070 seconds

hbase(main):033:0> put 'member','xueba','info:company','tecent'

0 row(s) in 0.0070 seconds

hbase(main):034:0> put 'member','xueba','address:contry','china'

0 row(s) in 0.0070 seconds

hbase(main):035:0> put 'member','xueba','address:province','guangdong'

0 row(s) in 0.0080 seconds

hbase(main):036:0> put 'member','xueba','address:city','shenzhen'

0 row(s) in 0.0080 seconds

hbase(main):037:0> put 'member','xiaoming','info:age','24'

0 row(s) in 0.0070 seconds

hbase(main):038:0> put 'member','xiaoming','info:birthday','1990-03-22'

0 row(s) in 0.0060 seconds

hbase(main):039:0> put 'member','xiaoming','info:company','tecent'

0 row(s) in 0.0120 seconds

hbase(main):040:0> put 'member','xiaoming','info:favorite','movie'

0 row(s) in 0.0070 seconds

hbase(main):041:0> put 'member','xiaoming','address:contry','china'

0 row(s) in 0.0060 seconds

hbase(main):042:0> put 'member','xiaoming','address:province','guangdong'

0 row(s) in 0.0070 seconds

hbase(main):043:0> put 'member','xiaoming','address:city','guangzhou'

0 row(s) in 0.0100 seconds

hbase(main):044:0> scan 'member'

ROW COLUMN+CELL

xiaoming column=address:city, timestamp=1479808315381, value=guangzhou

xiaoming column=address:contry, timestamp=1479808315320, value=china

xiaoming column=address:province, timestamp=1479808315354, value=guangdong

xiaoming column=info:age, timestamp=1479808315155, value=24

xiaoming column=info:birthday, timestamp=1479808315187, value=1990-03-22

xiaoming column=info:company, timestamp=1479808315230, value=tecent

xiaoming column=info:favorite, timestamp=1479808315283, value=movie

xueba column=address:city, timestamp=1479808315123, value=shenzhen

xueba column=address:contry, timestamp=1479808315056, value=china

xueba column=address:province, timestamp=1479808315089, value=guangdong

xueba column=info:age, timestamp=1479808314937, value=25

xueba column=info:birthday, timestamp=1479808314975, value=1989-06-19

xueba column=info:company, timestamp=1479808315013, value=tecent

2 row(s) in 0.0960 seconds
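In the scan output above, rows come back in sorted row-key order (xiaoming before xueba), even though xueba was written first. Within each row the cells are ordered by column name (address:* before info:*). The closing "2 row(s)" counts row keys, not the 13 cells listed. The sketch below mimics that layout with nested sorted maps from the Java standard library. `ToyScan` and its methods are hypothetical names, the data is a small subset of the session's, and plain `String` ordering stands in for HBase's byte-wise ordering, which agrees for these ASCII keys.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy stand-in for a table: row key -> (column "family:qualifier" -> value).
// Hypothetical illustration only; this is not the HBase client API.
final class ToyScan {
    private final TreeMap<String, TreeMap<String, String>> rows = new TreeMap<>();

    // Store one cell, analogous to: put 'member', row, column, value
    void put(String row, String column, String value) {
        rows.computeIfAbsent(row, k -> new TreeMap<>()).put(column, value);
    }

    // Return every cell, ordered by row key and then by column name.
    List<String> scan() {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, TreeMap<String, String>> row : rows.entrySet()) {
            for (Map.Entry<String, String> cell : row.getValue().entrySet()) {
                out.add(row.getKey() + " column=" + cell.getKey() + ", value=" + cell.getValue());
            }
        }
        return out;
    }

    // Number of distinct row keys, matching what the scan summary line counts.
    int rowCount() {
        return rows.size();
    }

    // A subset of the 'member' data, inserted in the same order as in the session.
    static ToyScan sample() {
        ToyScan t = new ToyScan();
        t.put("xueba", "info:age", "25");
        t.put("xueba", "address:city", "shenzhen");
        t.put("xiaoming", "info:age", "24");
        t.put("xiaoming", "address:city", "guangzhou");
        return t;
    }

    public static void main(String[] args) {
        ToyScan t = sample();
        t.scan().forEach(System.out::println);
        System.out.println(t.rowCount() + " row(s)");
    }
}
```

Running `main` prints the four cells with the xiaoming rows first, followed by `2 row(s)`. The model ignores timestamps and cell versions, which a real table also tracks.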

hbase(main):045:0> list

TABLE

member

tmp_member

2 row(s) in 0.0220 seconds

=> ["member", "tmp_member"]

hbase(main):046:0> create 'scores','grad','course'

0 row(s) in 2.3230 seconds

=> Hbase::Table - scores

hbase(main):047:0> put 'scores','zkd','grade','5'

ERROR: Unknown column family! Valid column names: course:*, grad:*

Here is some help for this command:

Put a cell 'value' at specified table/row/column and optionally

timestamp coordinates. To put a cell value into table 'ns1:t1' or 't1'

at row 'r1' under column 'c1' marked with the time 'ts1', do:

hbase> put 'ns1:t1', 'r1', 'c1', 'value'

hbase> put 't1', 'r1', 'c1', 'value'

hbase> put 't1', 'r1', 'c1', 'value', ts1

hbase> put 't1', 'r1', 'c1', 'value', {ATTRIBUTES=>{'mykey'=>'myvalue'}}

hbase> put 't1', 'r1', 'c1', 'value', ts1, {ATTRIBUTES=>{'mykey'=>'myvalue'}}

hbase> put 't1', 'r1', 'c1', 'value', ts1, {VISIBILITY=>'PRIVATE|SECRET'}

The same commands also can be run on a table reference. Suppose you had a reference

t to table 't1', the corresponding command would be:

hbase> t.put 'r1', 'c1', 'value', ts1, {ATTRIBUTES=>{'mykey'=>'myvalue'}}
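The put at prompt 047 fails because the column 'grade' does not belong to either family created for 'scores' (grad and course). Families are fixed when the table is created, while qualifiers after the colon can be introduced freely, which is why the next attempt with 'course:math' succeeds. The sketch below is a toy version of that check, not HBase's actual validation code. `ToyFamilies`, `familyOf` and `accepts` are hypothetical names.

```java
import java.util.Arrays;
import java.util.Set;
import java.util.TreeSet;

// Toy check of a column name against a table's declared column families.
// Hypothetical illustration only; this is not the HBase client API.
final class ToyFamilies {
    private final Set<String> families;

    // Families are fixed up front, as in: create 'scores','grad','course'
    ToyFamilies(String... families) {
        this.families = new TreeSet<>(Arrays.asList(families));
    }

    // The family is the part before the first ':'.
    // A name without ':' is treated as a bare family name.
    static String familyOf(String column) {
        int colon = column.indexOf(':');
        return colon < 0 ? column : column.substring(0, colon);
    }

    // A put is accepted only if the column's family was declared.
    boolean accepts(String column) {
        return families.contains(familyOf(column));
    }

    public static void main(String[] args) {
        ToyFamilies scores = new ToyFamilies("grad", "course");
        System.out.println("grade       -> " + scores.accepts("grade"));
        System.out.println("course:math -> " + scores.accepts("course:math"));
        System.out.println("grad:level  -> " + scores.accepts("grad:level"));
    }
}
```

Here `grade` is rejected while `course:math` and `grad:level` are accepted. The qualifier `level` is made up for the example.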

hbase(main):048:0> put 'scores','zkd','course:math','97'

0 row(s) in 0.0290 seconds

hbase(main):049:0> get 'scores','zkd'

COLUMN CELL

course:math timestamp=1479808523355, value=97

1 row(s) in 0.0310 seconds

hbase(main):050:0> scan

ERROR: wrong number of arguments (0 for 1)

Here is some help for this command:

Scan a table; pass table name and optionally a dictionary of scanner

specifications. Scanner specifications may include one or more of:

TIMERANGE, FILTER, LIMIT, STARTROW, STOPROW, ROWPREFIXFILTER, TIMESTAMP,

MAXLENGTH or COLUMNS, CACHE or RAW, VERSIONS, ALL_METRICS or METRICS

If no columns are specified, all columns will be scanned.

To scan all members of a column family, leave the qualifier empty as in

'col_family'.

The filter can be specified in two ways:

1. Using a filterString - more information on this is available in the

Filter Language document attached to the HBASE-4176 JIRA

2. Using the entire package name of the filter.

If you wish to see metrics regarding the execution of the scan, the

ALL_METRICS boolean should be set to true. Alternatively, if you would

prefer to see only a subset of the metrics, the METRICS array can be

defined to include the names of only the metrics you care about.

Some examples:

hbase> scan 'hbase:meta'

hbase> scan 'hbase:meta', {COLUMNS => 'info:regioninfo'}

hbase> scan 'ns1:t1', {COLUMNS => ['c1', 'c2'], LIMIT => 10, STARTROW => 'xyz'}

hbase> scan 't1', {COLUMNS => ['c1', 'c2'], LIMIT => 10, STARTROW => 'xyz'}

hbase> scan 't1', {COLUMNS => 'c1', TIMERANGE => [1303668804, 1303668904]}

hbase> scan 't1', {REVERSED => true}

hbase> scan 't1', {ALL_METRICS => true}

hbase> scan 't1', {METRICS => ['RPC_RETRIES', 'ROWS_FILTERED']}

hbase> scan 't1', {ROWPREFIXFILTER => 'row2', FILTER => "

(QualifierFilter (>=, 'binary:xyz')) AND (TimestampsFilter ( 123, 456))"}

hbase> scan 't1', {FILTER =>

org.apache.hadoop.hbase.filter.ColumnPaginationFilter.new(1, 0)}

hbase> scan 't1', {CONSISTENCY => 'TIMELINE'}

For setting the Operation Attributes

hbase> scan 't1', { COLUMNS => ['c1', 'c2'], ATTRIBUTES => {'mykey' => 'myvalue'}}

hbase> scan 't1', { COLUMNS => ['c1', 'c2'], AUTHORIZATIONS => ['PRIVATE','SECRET']}

For experts, there is an additional option -- CACHE_BLOCKS -- which

switches block caching for the scanner on (true) or off (false). By

default it is enabled. Examples:

hbase> scan 't1', {COLUMNS => ['c1', 'c2'], CACHE_BLOCKS => false}

Also for experts, there is an advanced option -- RAW -- which instructs the

scanner to return all cells (including delete markers and uncollected deleted

cells). This option cannot be combined with requesting specific COLUMNS.

Disabled by default. Example:

hbase> scan 't1', {RAW => true, VERSIONS => 10}

Besides the default 'toStringBinary' format, 'scan' supports custom formatting

by column. A user can define a FORMATTER by adding it to the column name in

the scan specification. The FORMATTER can be stipulated:

1. either as a org.apache.hadoop.hbase.util.Bytes method name (e.g, toInt, toString)

2. or as a custom class followed by method name: e.g. 'c(MyFormatterClass).format'.

Example formatting cf:qualifier1 and cf:qualifier2 both as Integers:

hbase> scan 't1', {COLUMNS => ['cf:qualifier1:toInt',

'cf:qualifier2:c(org.apache.hadoop.hbase.util.Bytes).toInt'] }

Note that you can specify a FORMATTER by column only (cf:qualifier). You cannot

specify a FORMATTER for all columns of a column family.

Scan can also be used directly from a table, by first getting a reference to a

table, like such:

hbase> t = get_table 't'

hbase> t.scan

Note in the above situation, you can still provide all the filtering, columns,

options, etc as described above.
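The help text lists STARTROW, STOPROW and LIMIT among the scanner specifications. By default an HBase scan range includes the start row and excludes the stop row. The sketch below reproduces that half-open range, plus a row limit, on a sorted map from the Java standard library. `ToyRangeScan`, `scanRows` and the sample row keys are hypothetical, and this is not the HBase `Scan` API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Toy range scan over sorted row keys: [startRow, stopRow) with a row limit.
// Hypothetical illustration only; this is not the HBase client API.
final class ToyRangeScan {

    // Return at most `limit` row keys, starting at startRow (inclusive)
    // and ending before stopRow (exclusive), in sorted order.
    static List<String> scanRows(TreeMap<String, String> table,
                                 String startRow, String stopRow, int limit) {
        List<String> out = new ArrayList<>();
        for (String row : table.subMap(startRow, true, stopRow, false).keySet()) {
            if (out.size() >= limit) {
                break;
            }
            out.add(row);
        }
        return out;
    }

    // Four made-up rows; the values are irrelevant for this example.
    static TreeMap<String, String> sample() {
        TreeMap<String, String> table = new TreeMap<>();
        table.put("row1", "a");
        table.put("row2", "b");
        table.put("row3", "c");
        table.put("xyz", "d");
        return table;
    }

    public static void main(String[] args) {
        // The stop row "xyz" itself is not returned.
        System.out.println(scanRows(sample(), "row2", "xyz", 10));
        // The limit cuts the result off after two rows.
        System.out.println(scanRows(sample(), "row1", "xyz", 2));
    }
}
```

In shell terms the first call corresponds roughly to `scan 't1', {STARTROW => 'row2', STOPROW => 'xyz', LIMIT => 10}` on a table holding those four rows.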

hbase(main):051:0> scan 'member'

ROW COLUMN+CELL

xiaoming column=address:city, timestamp=1479808315381, value=guangzhou

xiaoming column=address:contry, timestamp=1479808315320, value=china

xiaoming column=address:province, timestamp=1479808315354, value=guangdong

xiaoming column=info:age, timestamp=1479808315155, value=24

xiaoming column=info:birthday, timestamp=1479808315187, value=1990-03-22

xiaoming column=info:company, timestamp=1479808315230, value=tecent

xiaoming column=info:favorite, timestamp=1479808315283, value=movie

xueba column=address:city, timestamp=1479808315123, value=shenzhen

xueba column=address:contry, timestamp=1479808315056, value=china

xueba column=address:province, timestamp=1479808315089, value=guangdong

xueba column=info:age, timestamp=1479808314937, value=25

xueba column=info:birthday, timestamp=1479808314975, value=1989-06-19

xueba column=info:company, timestamp=1479808315013, value=tecent

2 row(s) in 0.1080 seconds

hbase(main):052:0> list

TABLE

member

scores

tmp_member

3 row(s) in 0.0150 seconds

=> ["member", "scores", "tmp_member"]

hbase(main):053:0> get 'member','xueba'

COLUMN CELL

address:city timestamp=1479808315123, value=shenzhen

address:contry timestamp=1479808315056, value=china

address:province timestamp=1479808315089, value=guangdong

info:age timestamp=1479808314937, value=25

info:birthday timestamp=1479808314975, value=1989-06-19

info:company timestamp=1479808315013, value=tecent

6 row(s) in 0.0310 seconds

hbase(main):054:0> list

TABLE

member

scores

tmp_member

3 row(s) in 0.0200 seconds

=> ["member", "scores", "tmp_member"]

hbase(main):057:0> list

TABLE

member

scores

tmp_member

3 row(s) in 0.0230 seconds