这篇博文是Model-Free Control的一部分,事实上SARSA和Q-learning with ϵ-greedy Exploration都是不依赖模型的控制的一部分,如果你想要全面的了解它们,建议阅读原文。

SARSA Algorithm

SARSA代表state,action,reward,next state,action taken in next state,算法在每次采样到该五元组时更新,所以得名SARSA。

1

:

S

e

t

1: Set

1: Set Initial

ϵ

epsilon

ϵ-greedy policy

π

,

t

=

0

pi,t=0

π,t=0, initial state

s

t

=

s

0

s_t=s_0

st=s0

2

:

T

a

k

e

a

t

∼

π

(

s

t

)

2: Take a_t sim pi(s_t)

2: Take at∼π(st) // Sample action from policy

3

:

O

b

s

e

r

v

e

(

r

t

,

s

t

+

1

)

3: Observe (r_t, s_{t+1})

3: Observe (rt,st+1)

4

:

l

o

o

p

4: loop

4: loop

5

:

T

a

k

e

5: quad Take

5: Take action

a

t

+

1

∼

π

(

s

t

+

1

)

a_{t+1}sim pi(s_{t+1})

at+1∼π(st+1)

6

:

O

b

s

e

r

v

e

(

r

t

+

1

,

s

t

+

2

)

6: quad Observe (r_{t+1},s_{t+2})

6: Observe (rt+1,st+2)

7

:

Q

(

s

t

,

a

t

)

←

Q

(

s

t

,

a

t

)

+

α

(

r

t

+

γ

Q

(

s

t

+

1

,

a

t

+

1

)

−

Q

(

s

t

,

a

t

)

)

7: quad Q(s_t,a_t) leftarrow Q(s_t,a_t)+alpha(r_t+gamma Q(s_{t+1},a_{t+1})-Q(s_t,a_t))

7: Q(st,at)←Q(st,at)+α(rt+γQ(st+1,at+1)−Q(st,at))

8

:

π

(

s

t

)

=

a

r

g

m

a

x

Q

(

s

t

,

a

)

w

.

p

r

o

b

1

−

ϵ

,

e

l

s

e

r

a

n

d

o

m

8: quad pi(s_t) = mathop{argmax} Q(s_t,a) w.prob 1-epsilon, else random

8: π(st)=argmax Q(st,a)w.prob 1−ϵ,else random

9

:

t

=

t

+

1

9: t=t+1

9: t=t+1

10

:

e

n

d

l

o

o

p

10: end loop

10:end loop

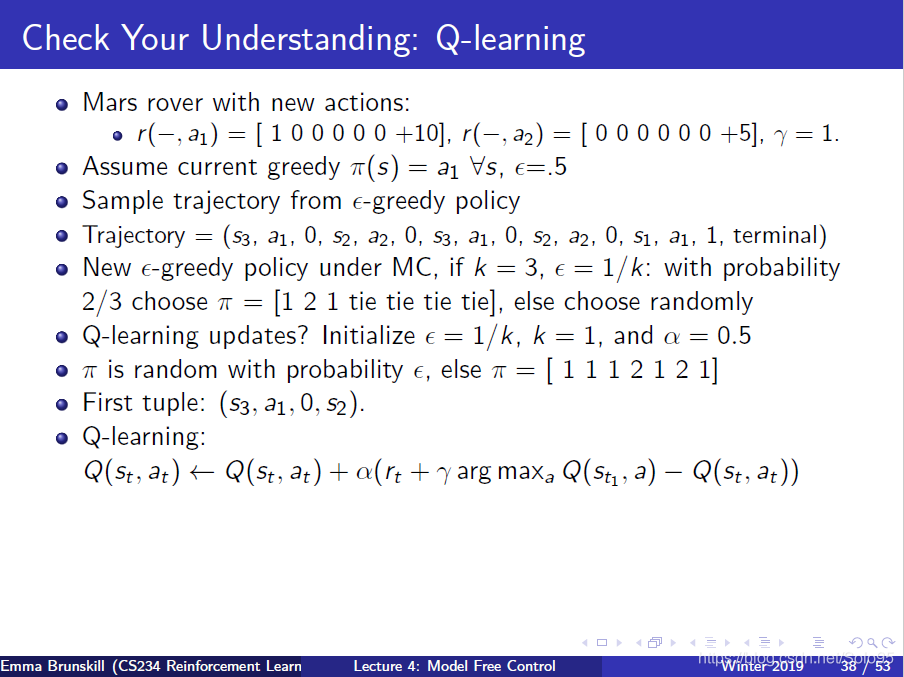

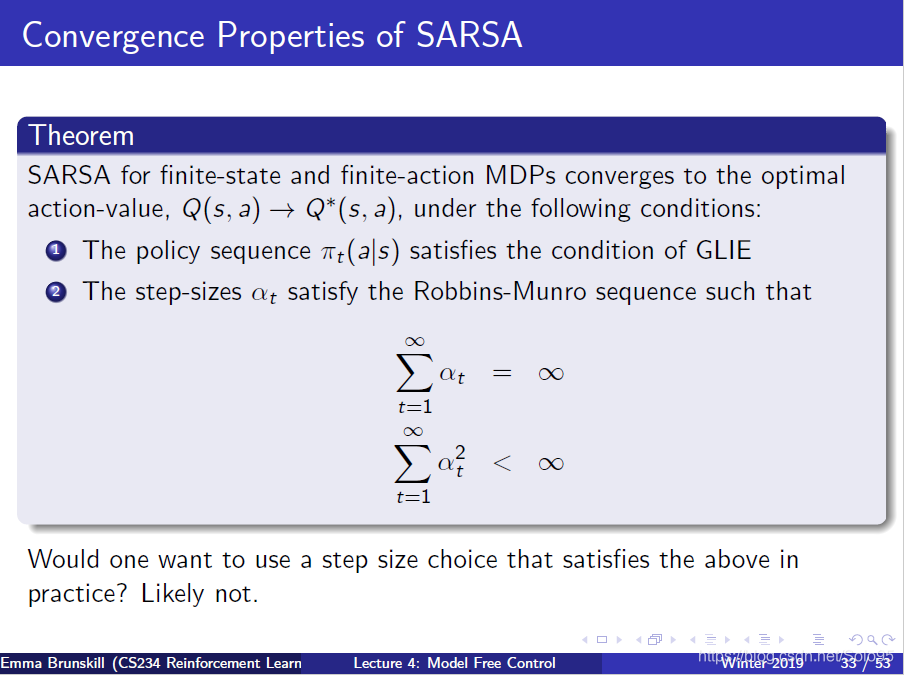

Q-learing: Learning the Optimal State-Action Value

我们能在不知道 π ∗ pi^* π∗的情况下估计最佳策略 π ∗ pi^* π∗的价值吗?

可以。使用Q-learning。

核心思想: 维护state-action Q值的估计并且使用它来bootstrap最佳未来动作的的价值。

回顾SARSA

Q

(

s

t

,

a

t

)

←

Q

(

s

t

,

a

t

)

+

α

(

(

r

t

+

γ

Q

(

s

t

+

1

,

a

t

+

1

)

)

−

Q

(

s

t

,

a

t

)

)

Q(s_t,a_t)leftarrow Q(s_t,a_t)+alpha((r_t+gamma Q(s_{t+1},a_{t+1}))-Q(s_t,a_t))

Q(st,at)←Q(st,at)+α((rt+γQ(st+1,at+1))−Q(st,at))

Q-learning

Q

(

s

t

,

a

t

)

←

Q

(

s

t

,

a

t

)

+

α

(

(

r

t

+

γ

m

a

x

a

′

Q

(

s

t

+

1

,

a

′

)

−

Q

(

s

t

,

a

t

)

)

)

Q(s_t,a_t)leftarrow Q(s_t,a_t)+alpha((r_t+gamma mathop{max}limits_{a'}Q(s_{t+1},a')-Q(s_t,a_t)))

Q(st,at)←Q(st,at)+α((rt+γa′maxQ(st+1,a′)−Q(st,at)))

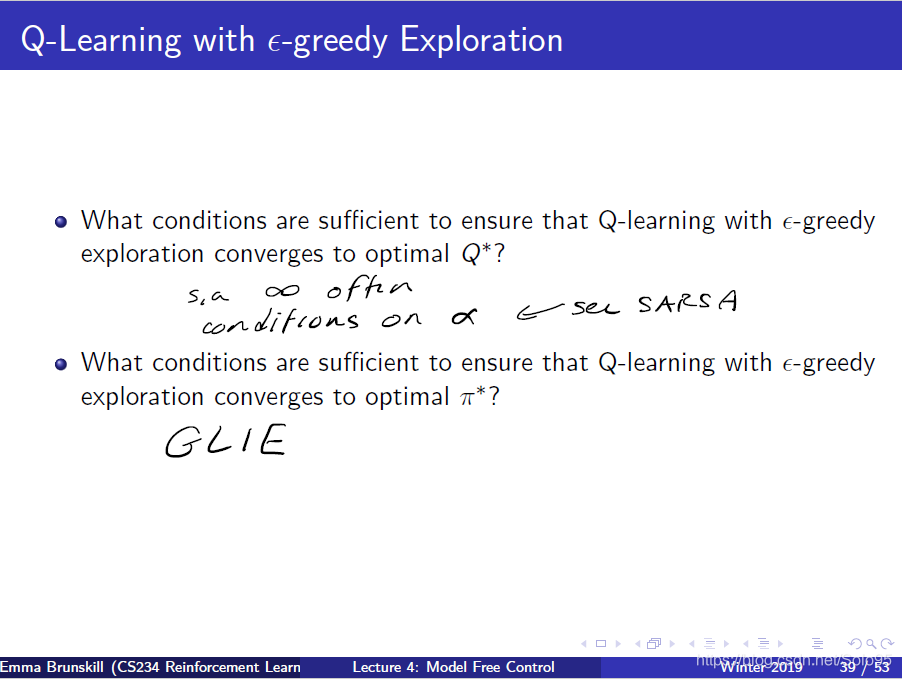

Off-Policy Control Using Q-learning

- 在上一节中假定了有某个策略 π b pi_b πb可以用来执行

- π b pi_b πb决定了实际获得的回报

- 现在在来考虑如何提升行为策略(policy improvement)

- 使行为策略 π b pi_b πb是对(w.r.t)当前的最佳 Q ( s , a ) Q(s,a) Q(s,a)估计的- ϵ epsilon ϵ-greedy策略

Q-learning with ϵ epsilon ϵ-greedy Exploration

1

:

I

n

t

i

a

l

i

z

e

Q

(

s

,

a

)

,

∀

s

∈

S

,

a

∈

A

t

=

0

,

1: Intialize Q(s,a), forall s in S, a in A t=0,

1: Intialize Q(s,a),∀s∈S,a∈A t=0, initial state

s

t

=

s

0

s_t=s_0

st=s0

2

:

S

e

t

π

b

2: Set pi_b

2: Set πb to be

ϵ

epsilon

ϵ-greedy w.r.t. Q$

3

:

l

o

o

p

3: loop

3: loop

4

:

T

a

k

e

a

t

∼

π

b

(

s

t

)

4: quad Take a_t simpi_b(s_t)

4: Take at∼πb(st) // simple action from policy

5

:

O

b

s

e

r

v

e

(

r

t

,

s

t

+

1

)

5: quad Observe (r_t, s_{t+1})

5: Observe (rt,st+1)

6

:

U

p

d

a

t

e

Q

6: quad Update Q

6: Update Q given

(

s

t

,

a

t

,

r

t

,

s

t

+

1

)

(s_t,a_t,r_t,s_{t+1})

(st,at,rt,st+1)

7

:

Q

(

s

r

,

a

r

)

←

Q

(

s

t

,

r

t

)

+

α

(

r

t

+

γ

m

a

x

a

Q

(

s

t

1

,

a

)

−

Q

(

s

t

,

a

t

)

)

7: quad Q(s_r,a_r) leftarrow Q(s_t,r_t)+alpha(r_t+gamma mathop{max}limits_{a}Q(s_{t1},a)-Q(s_t,a_t))

7: Q(sr,ar)←Q(st,rt)+α(rt+γamaxQ(st1,a)−Q(st,at))

8

:

P

e

r

f

o

r

m

8: quad Perform

8: Perform policy impovement:

s

e

t

π

b

set pi_b

set πb to be

ϵ

epsilon

ϵ-greedy w.r.t Q

9

:

t

=

t

+

1

9: quad t=t+1

9: t=t+1

10

:

e

n

d

l

o

o

p

10: end loop

10:end loop

如何初始化

Q

Q

Q重要吗?

无论怎样初始化

Q

Q

Q(设为0,随机初始化)都会收敛到正确值,但是在实际应用上非常重要,以最优化初始化形式初始化它非常有帮助。会在exploration细讲这一点。

例题