MapReduce Example: Inverted Index

Experiment Steps

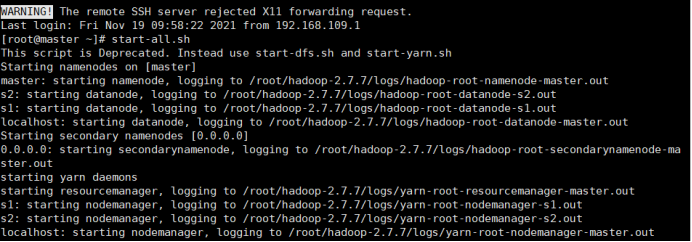

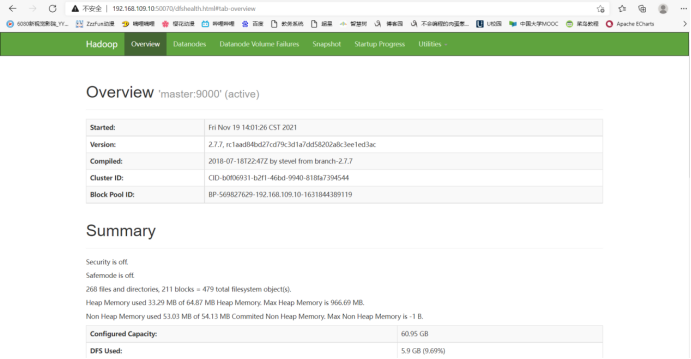

1. Start Hadoop

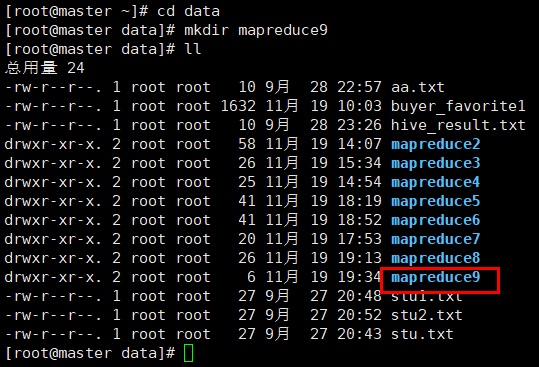

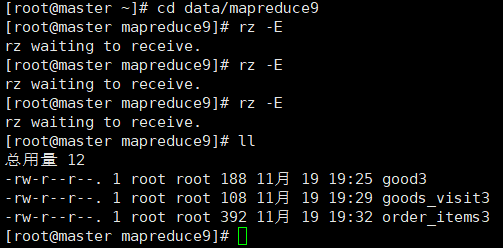

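2. Create the mapreduce9 directory

Create the /data/mapreduce9 directory on the local Linux filesystem.

3. Upload the files to Linux

(Generate the text files yourself and place them in a folder of your choice.)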

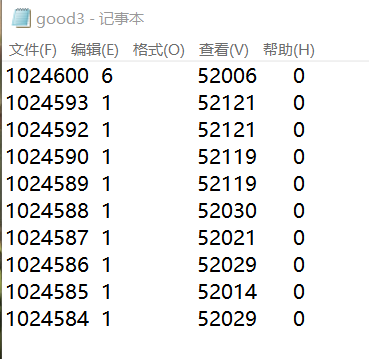

goods3

1024600 6 52006 0

1024593 1 52121 0

1024592 1 52121 0

1024590 1 52119 0

1024589 1 52119 0

1024588 1 52030 0

1024587 1 52021 0

1024586 1 52029 0

1024585 1 52014 0

1024584 1 52029 0

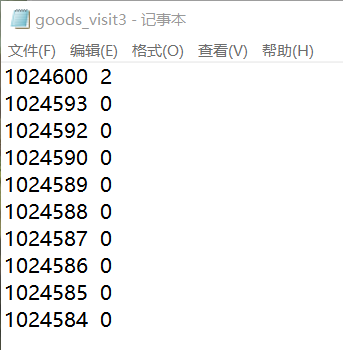

goods_visit3

1024600 2

1024593 0

1024592 0

1024590 0

1024589 0

1024588 0

1024587 0

1024586 0

1024585 0

1024584 0

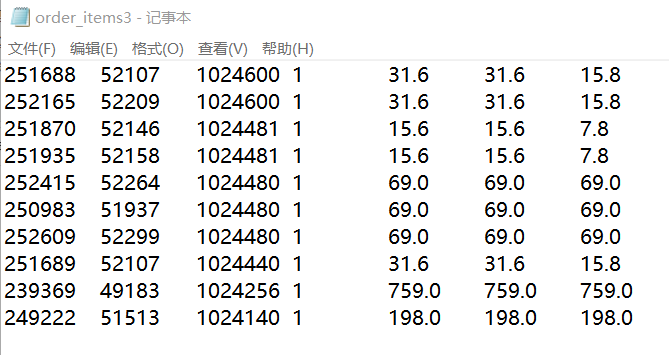

order_items3

251688 52107 1024600 1 31.6 31.6 15.8

252165 52209 1024600 1 31.6 31.6 15.8

251870 52146 1024481 1 15.6 15.6 7.8

251935 52158 1024481 1 15.6 15.6 7.8

252415 52264 1024480 1 69.0 69.0 69.0

250983 51937 1024480 1 69.0 69.0 69.0

252609 52299 1024480 1 69.0 69.0 69.0

251689 52107 1024440 1 31.6 31.6 15.8

239369 49183 1024256 1 759.0 759.0 759.0

249222 51513 1024140 1 198.0 198.0 198.0

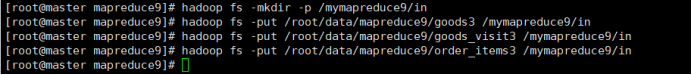

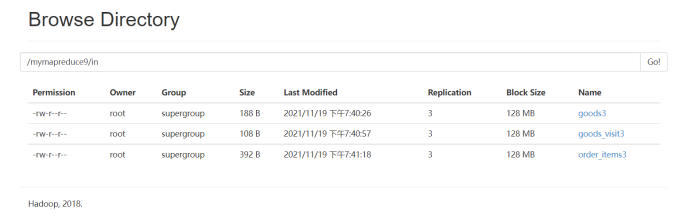
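The mapper in step 7 splits each line on a tab character, so the generated files need tab-delimited fields (the whitespace in the listings above may have been flattened to spaces during extraction; that is an assumption). One possible way to produce such a file is sketched below. The class name `SampleDataWriter`, the helper `writeTabDelimited`, and the temporary-directory target are all hypothetical and not part of the original experiment.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

public class SampleDataWriter {

    /** Joins each row's fields with a tab and writes one row per line. */
    static Path writeTabDelimited(Path file, String[][] rows) throws IOException {
        List<String> lines = new ArrayList<>();
        for (String[] row : rows) {
            lines.add(String.join("\t", row));
        }
        return Files.write(file, lines, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // First three rows of the goods_visit3 listing.
        String[][] goodsVisit = {
            {"1024600", "2"},
            {"1024593", "0"},
            {"1024592", "0"},
        };
        // Hypothetical location; copy the file to your own data directory afterwards.
        Path dir = Files.createTempDirectory("mapreduce9");
        Path file = writeTabDelimited(dir.resolve("goods_visit3"), goodsVisit);
        System.out.println("wrote " + file);
    }
}
```

The same helper could be reused for goods3 and order_items3 by passing their rows instead.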

4. Create a directory in HDFS

First create the /mymapreduce9/in directory on HDFS, then import the goods3, goods_visit3, and order_items3 files from the local /data/mapreduce9 directory into /mymapreduce9/in on HDFS.

hadoop fs -mkdir -p /mymapreduce9/in

hadoop fs -put /root/data/mapreduce9/goods3 /mymapreduce9/in

hadoop fs -put /root/data/mapreduce9/goods_visit3 /mymapreduce9/in

hadoop fs -put /root/data/mapreduce9/order_items3 /mymapreduce9/in

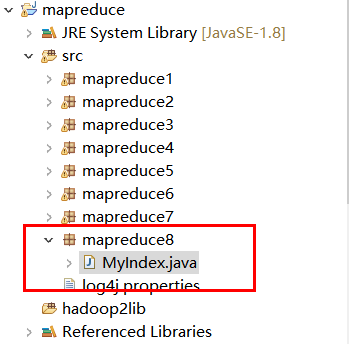

5. Create a Java Project

Create a new Java Project named mapreduce.

Under the mapreduce project, create a package named mapreduce8.

In the mapreduce8 package, create a class named MyIndex.

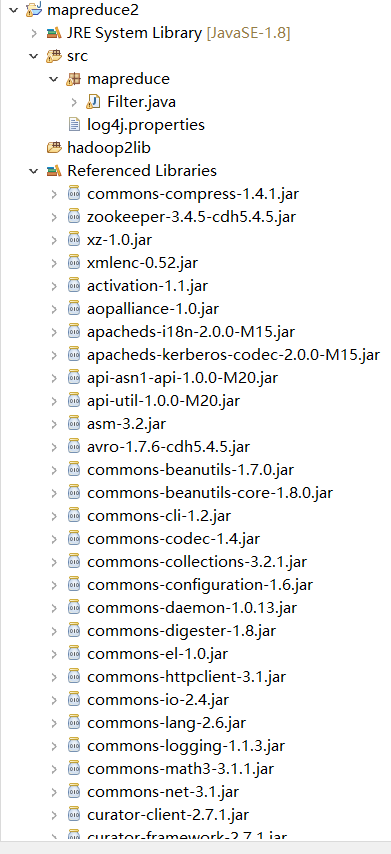

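6. Add the jar dependencies the project needs

Right-click the project and create a new folder named hadoop2lib to hold the jars the project needs.

Copy the jars from the hadoop2lib directory under /data/mapreduce2 into the project's hadoop2lib directory in Eclipse.

Note that I downloaded the hadoop2lib jars from the internet myself rather than with the command given in the lab tutorial.

Select all the jars in the project's hadoop2lib directory and add them to the Build Path.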

7. Write the program code

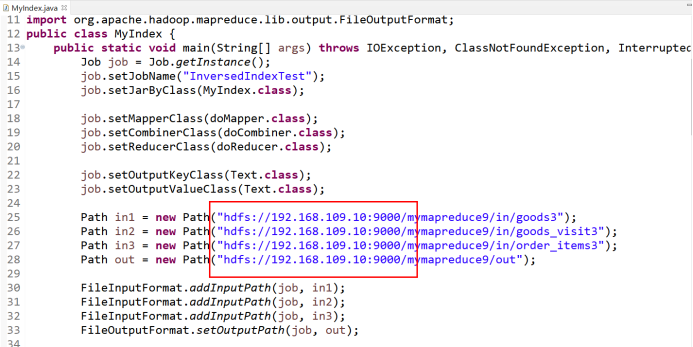

MyIndex.java

    package mapreduce8;

    import java.io.IOException;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class MyIndex {

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            Job job = Job.getInstance();
            job.setJobName("InversedIndexTest");
            job.setJarByClass(MyIndex.class);

            job.setMapperClass(doMapper.class);
            job.setCombinerClass(doCombiner.class);
            job.setReducerClass(doReducer.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            // Three input files and one output directory on HDFS.
            Path in1 = new Path("hdfs://192.168.109.10:9000/mymapreduce9/in/goods3");
            Path in2 = new Path("hdfs://192.168.109.10:9000/mymapreduce9/in/goods_visit3");
            Path in3 = new Path("hdfs://192.168.109.10:9000/mymapreduce9/in/order_items3");
            Path out = new Path("hdfs://192.168.109.10:9000/mymapreduce9/out");

            FileInputFormat.addInputPath(job, in1);
            FileInputFormat.addInputPath(job, in2);
            FileInputFormat.addInputPath(job, in3);
            FileOutputFormat.setOutputPath(job, out);

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }

        // Emits ("productId:fileName", "1") for every input line.
        public static class doMapper extends Mapper<Object, Text, Text, Text> {
            public static Text myKey = new Text();
            public static Text myValue = new Text();

            @Override
            protected void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                String filePath = ((FileSplit) context.getInputSplit()).getPath().toString();
                if (filePath.contains("goods")) {
                    // goods3 and goods_visit3: the product id is the first column.
                    String[] val = value.toString().split("\t");
                    int splitIndex = filePath.indexOf("goods");
                    myKey.set(val[0] + ":" + filePath.substring(splitIndex));
                } else if (filePath.contains("order")) {
                    // order_items3: the product id is the third column.
                    String[] val = value.toString().split("\t");
                    int splitIndex = filePath.indexOf("order");
                    myKey.set(val[2] + ":" + filePath.substring(splitIndex));
                }
                myValue.set("1");
                context.write(myKey, myValue);
            }
        }

        // Sums the counts per "productId:fileName" and re-keys the record as
        // (productId, "fileName:count"). Note: this design relies on the combiner
        // running exactly once per key, which Hadoop does not guarantee in general.
        public static class doCombiner extends Reducer<Text, Text, Text, Text> {
            public static Text myK = new Text();
            public static Text myV = new Text();

            @Override
            protected void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                for (Text value : values) {
                    sum += Integer.parseInt(value.toString());
                }
                int mysplit = key.toString().indexOf(":");
                myK.set(key.toString().substring(0, mysplit));
                myV.set(key.toString().substring(mysplit + 1) + ":" + sum);
                context.write(myK, myV);
            }
        }

        // Joins all "fileName:count" values of one product id into a single line.
        public static class doReducer extends Reducer<Text, Text, Text, Text> {
            public static Text myK = new Text();
            public static Text myV = new Text();

            @Override
            protected void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                StringBuilder myList = new StringBuilder();
                for (Text value : values) {
                    myList.append(value.toString()).append(";");
                }
                myK.set(key);
                myV.set(myList.toString());
                context.write(myK, myV);
            }
        }
    }
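To see how the map, combine, and reduce stages fit together without a cluster, the data flow can be traced in plain Java. This is only a sketch under stated assumptions: the class `InvertedIndexSketch` and its methods `mapKey` and `buildIndex` are hypothetical, the combine step is assumed to run exactly once over all map output, and a sorted in-memory map stands in for Hadoop's shuffle.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class InvertedIndexSketch {

    /** Map step: build the "productId:fileName" key, mirroring doMapper. */
    static String mapKey(String fileName, String line) {
        String[] fields = line.split("\t");
        // order_items3 keeps the product id in column 3; the goods files keep it in column 1.
        String id = fileName.contains("order") ? fields[2] : fields[0];
        return id + ":" + fileName;
    }

    /** Runs map, combine, and reduce in memory; returns productId -> posting list. */
    static Map<String, String> buildIndex(Map<String, List<String>> files) {
        // Map + combine: count occurrences of each "productId:fileName" key.
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<String>> file : files.entrySet()) {
            for (String line : file.getValue()) {
                counts.merge(mapKey(file.getKey(), line), 1, Integer::sum);
            }
        }
        // Re-key by product id (as doCombiner does) and join the
        // "fileName:count;" entries (as doReducer does).
        Map<String, String> index = new TreeMap<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            int split = e.getKey().indexOf(':');
            String id = e.getKey().substring(0, split);
            String posting = e.getKey().substring(split + 1) + ":" + e.getValue() + ";";
            index.merge(id, posting, String::concat);
        }
        return index;
    }

    public static void main(String[] args) {
        // The rows for product 1024600 from the three sample files, tab-delimited.
        Map<String, List<String>> files = new TreeMap<>();
        files.put("goods3", List.of("1024600\t6\t52006\t0"));
        files.put("goods_visit3", List.of("1024600\t2"));
        files.put("order_items3", List.of(
            "251688\t52107\t1024600\t1\t31.6\t31.6\t15.8",
            "252165\t52209\t1024600\t1\t31.6\t31.6\t15.8"));
        buildIndex(files).forEach((id, postings) -> System.out.println(id + "\t" + postings));
    }
}
```

Because the sketch sorts its intermediate keys, the entries for 1024600 come out as goods3, goods_visit3, order_items3; on a real cluster the order of entries within a line may differ.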

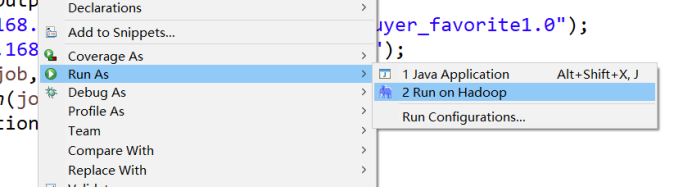

8. Run the code

In the MyIndex class file, right-click and choose Run As => Run on Hadoop to submit the MapReduce job to Hadoop.

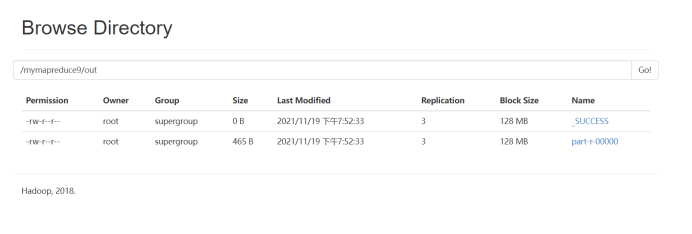

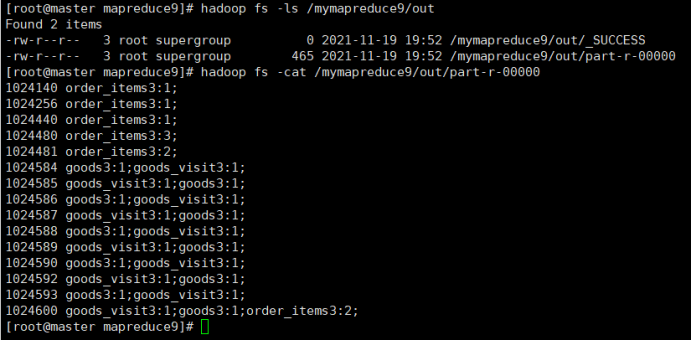

9. View the results

Once the job has finished, switch to the command line and check the results under /mymapreduce9/out in HDFS.

hadoop fs -ls /mymapreduce9/out

hadoop fs -cat /mymapreduce9/out/part-r-00000

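Figure 1 shows the output of my run; Figure 2 shows the expected result from the lab tutorial.

Comparing the two shows that the results are the same.

The results are as follows:

Product ID    table name:occurrence count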

1024140 order_items3:1;

1024256 order_items3:1;

1024440 order_items3:1;

1024480 order_items3:3;

1024481 order_items3:2;

1024584 goods3:1;goods_visit3:1;

1024585 goods_visit3:1;goods3:1;

1024586 goods3:1;goods_visit3:1;

1024587 goods_visit3:1;goods3:1;

1024588 goods3:1;goods_visit3:1;

1024589 goods_visit3:1;goods3:1;

1024590 goods3:1;goods_visit3:1;

1024592 goods_visit3:1;goods3:1;

1024593 goods3:1;goods_visit3:1;

1024600 goods_visit3:1;goods3:1;order_items3:2;
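The entries within a line do not always appear in the same order (compare 1024584 and 1024585 above), so checking two result files against each other as raw strings may report differences that are not real. A small sketch of an order-insensitive comparison follows; the class `PostingList` and the method `parse` are hypothetical names introduced only for illustration.

```java
import java.util.HashMap;
import java.util.Map;

public class PostingList {

    /** Parses "goods3:1;goods_visit3:1;" into {goods3=1, goods_visit3=1}. */
    static Map<String, Integer> parse(String postings) {
        Map<String, Integer> counts = new HashMap<>();
        for (String entry : postings.split(";")) {
            if (entry.isEmpty()) {
                continue; // skip empty pieces, e.g. when the input itself is empty
            }
            int split = entry.lastIndexOf(':');
            counts.put(entry.substring(0, split), Integer.parseInt(entry.substring(split + 1)));
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> mine = parse("goods3:1;goods_visit3:1;order_items3:2;");
        Map<String, Integer> expected = parse("goods_visit3:1;goods3:1;order_items3:2;");
        // Map equality ignores entry order, unlike comparing the raw strings.
        System.out.println(mine.equals(expected)); // prints "true"
    }
}
```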

(Browser screenshot goes here.)