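Container Cloud Technology

Installing the Docker Engine

Prepare two virtual machines running CentOS7.5_1804: one as the Docker master node and one as the Docker client

Basic environment configuration

Network interface configuration (master node)

Set the hostname of the Docker master node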

# hostnamectl set-hostname master

Configure the network interface

# vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=099334fe-751c-4dc4-b062-d421640ceb2e

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.7.10

NETMASK=255.255.255.0

GATEWAY=192.168.7.2

DNS1=114.114.114.114

Network interface configuration (slave node)

Set the hostname of the Docker client

# hostnamectl set-hostname slave

Configure the network interface

# vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=53bbedb7-248e-4110-bd80-82ca6371f016

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.7.20

NETMASK=255.255.255.0

GATEWAY=192.168.7.2

DNS1=114.114.114.114

Configure the YUM repository (both nodes)

Upload the provided archive Docker.tar.gz to the /root directory and extract it

# tar -zxvf Docker.tar.gz

Configure the local YUM repository

# vi /etc/yum.repos.d/local.repo

[kubernetes]

name=kubernetes

baseurl=file:///root/Docker

gpgcheck=0

enabled=1

Upgrade the system kernel (both nodes)

Docker CE supports 64-bit CentOS 7 and requires a kernel version of at least 3.10

# yum -y upgrade
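The kernel requirement above can be checked before installing anything. The following is a minimal sketch, assuming the release string has the usual `major.minor...` form printed by `uname -r`; the function name `kernel_at_least_3_10` is made up for illustration.

```shell
# Returns 0 if the kernel release string in $1 is at least 3.10.
kernel_at_least_3_10() {
    release="$1"
    major="${release%%.*}"        # text before the first dot
    rest="${release#*.}"          # text after the first dot
    minor="${rest%%[!0-9]*}"      # leading digits of the second field
    [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "${minor:-0}" -ge 10 ]; }
}

# Check the running kernel.
if kernel_at_least_3_10 "$(uname -r)"; then
    echo "kernel OK"
else
    echo "kernel too old for Docker CE"
fi
```

Only the first two version fields are compared, which is enough for a 3.10 threshold.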

Configure the firewall (both nodes)

Disable firewalld, flush the iptables rules, and disable SELinux

# systemctl stop firewalld && systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

# iptables -t filter -F

# iptables -t filter -X

# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# reboot

Enable IP forwarding (both nodes)

# vi /etc/sysctl.conf

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

# modprobe br_netfilter

# sysctl -p

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
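Note that `modprobe br_netfilter` loads the module only until the next reboot, and the two `net.bridge.*` keys exist only while the module is loaded. One common way to load a module at boot on systemd-based systems such as CentOS 7 is a file under /etc/modules-load.d. A minimal sketch follows; the helper name `persist_module` and the file name it produces are illustrative choices, not part of the original procedure.

```shell
# persist_module DIR MODULE
# Writes DIR/MODULE.conf containing the module name, which is the format
# systemd-modules-load reads from /etc/modules-load.d at boot.
persist_module() {
    dir="$1"
    module="$2"
    mkdir -p "$dir" && printf '%s\n' "$module" > "$dir/$module.conf"
}

# On a real node (as root) this would be:
#   persist_module /etc/modules-load.d br_netfilter
```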

Docker engine installation

Install dependencies (both nodes)

yum-utils provides the yum-config-manager utility; device-mapper-persistent-data and lvm2 are required by the devicemapper storage driver

# yum install -y yum-utils device-mapper-persistent-data lvm2

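Install docker-ce (both nodes)

Docker CE is the new name for the free Docker product. It contains the complete Docker platform and is well suited to developers and operations teams building containerized applications

Install a specific version of Docker CE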

# yum install -y docker-ce-18.09.6 docker-ce-cli-18.09.6 containerd.io

Start Docker (both nodes)

Start Docker and enable it at boot

# systemctl daemon-reload

# systemctl restart docker

# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

View Docker system information

# docker info

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 18.09.6

Storage Driver: overlay2

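Using a Docker Registry

Building a private registry

The official Docker Hub is a registry for public images: users can find the images they need there and can also push their own images to it. Docker publishes a Registry image on Docker Hub, which can be run directly as a container to provide a private registry service

Run the Registry (master node)

Run the Registry image as a container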

# ./image.sh

# docker run -d -v /opt/registry:/var/lib/registry -p 5000:5000 --restart=always --name registry registry:latest

dff76b9fb042ff1ea15741a50dc81e84d7afad4cc057c79bc16e370d2ce13c2a

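By default the Registry service stores uploaded images in /var/lib/registry inside the container. Mounting the host directory /opt/registry onto that path keeps the images in /opt/registry on the host

Check that it is running (master node)

List the running containers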

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

dff76b9fb042 registry:latest "/entrypoint.sh /etc…" About a minute ago Up About a minute 0.0.0.0:5000->5000/tcp registry

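Check the status

Once the Registry container is running, open http://192.168.7.10:5000/v2/ in a browser

Upload an image (master node)

Configure the private registry

# vi /etc/docker/daemon.json

{

"insecure-registries":["192.168.7.10:5000"]

}

# systemctl restart docker

Tag the image and push it to the private registry

# docker tag centos:latest 192.168.7.10:5000/centos:latest

# docker push 192.168.7.10:5000/centos:latest

The push refers to repository [192.168.7.10:5000/centos]

9e607bb861a7: Pushed

latest: digest: sha256:6ab380c5a5acf71c1b6660d645d2cd79cc8ce91b38e0352cbf9561e050427baf size: 529

List the repositories in the private registry

# curl http://192.168.7.10:5000/v2/_catalog

{"repositories":["centos"]}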

Pull the image (slave node)

Configure the private registry address

# vi /etc/docker/daemon.json

{

"insecure-registries":["192.168.7.10:5000"]

}

# systemctl restart docker
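Both nodes need the same `insecure-registries` entry, so the file can be generated instead of typed by hand. The following is a sketch under the assumption of a single registry address; `write_daemon_json` is a hypothetical helper, and it overwrites the target file, so any settings already in daemon.json would have to be merged manually.

```shell
# write_daemon_json REGISTRY FILE
# Writes a daemon.json that marks REGISTRY as an insecure (plain HTTP) registry.
write_daemon_json() {
    registry="$1"
    file="$2"
    printf '{\n  "insecure-registries": ["%s"]\n}\n' "$registry" > "$file"
}

# On a real node (as root), followed by a Docker restart:
#   write_daemon_json 192.168.7.10:5000 /etc/docker/daemon.json
#   systemctl restart docker
```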

Pull the image and check the result

# docker pull 192.168.7.10:5000/centos:latest

latest: Pulling from centos

729ec3a6ada3: Pull complete

Digest: sha256:6ab380c5a5acf71c1b6660d645d2cd79cc8ce91b38e0352cbf9561e050427baf

Status: Downloaded newer image for 192.168.7.10:5000/centos:latest

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.7.10:5000/centos latest 0f3e07c0138f 7 months ago 220MB

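Using Docker images and containers

Perform these steps on the Docker master node (master)

Basic image management and usage

An image can be produced in several ways:

(1) Create an image from scratch

(2) Download and use an existing image created by someone else

(3) Create a new image on top of an existing image

The contents of an image and the steps to create it can be described in a text file called a Dockerfile. Running docker build -f <Dockerfile> <build-context> builds a Docker image from it

List images

List the images on the local host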

# ./image.sh

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

httpd latest d3017f59d5e2 7 months ago 165MB

busybox latest 020584afccce 7 months ago 1.22MB

nginx latest 540a289bab6c 7 months ago 126MB

redis alpine 6f63d037b592 7 months ago 29.3MB

python 3.7-alpine b11d2a09763f 7 months ago 98.8MB

<none> <none> 4cda95efb0e4 7 months ago 80.6MB

192.168.7.10:5000/centos latest 0f3e07c0138f 7 months ago 220MB

centos latest 0f3e07c0138f 7 months ago 220MB

registry latest f32a97de94e1 14 months ago 25.8MB

swarm latest ff454b4a0e84 24 months ago 12.7MB

httpd 2.2.32 c51e86ea30d1 2 years ago 171MB

httpd 2.2.31 c8a7fb36e3ab 3 years ago 170MB

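REPOSITORY: the repository the image belongs to

TAG: the image tag

IMAGE ID: the image ID

CREATED: when the image was created

SIZE: the image size

Run a container

A repository can hold multiple TAGs, each representing a different version of that repository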

# docker run -i -t -d httpd:2.2.31 /bin/bash

be31c7adf30f88fc5d6c649311a5640601714483e8b9ba6f8db853c73fc11638

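-i: interactive mode (keep STDIN open)

-t: allocate a pseudo-terminal

-d: run in the background

httpd:2.2.31: the image name; the container is started from the httpd:2.2.31 image

/bin/bash: the interactive shell to run in the container

If no tag is specified, the image tagged latest is used by default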
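The default-tag rule can be illustrated with a small helper that prints the reference Docker would effectively use. This is a sketch of the rule, not Docker's own parser; `normalize_image` is a hypothetical name, and it assumes that a registry address with a port is always followed by a repository path.

```shell
# normalize_image REF
# Prints REF unchanged if it already has a tag or digest, otherwise appends
# ":latest". Only the last path component is inspected, so a registry port
# such as 192.168.7.10:5000 is not mistaken for a tag.
normalize_image() {
    ref="$1"
    last="${ref##*/}"     # e.g. "centos" or "httpd:2.2.31"
    case "$last" in
        *:*|*@*) printf '%s\n' "$ref" ;;
        *)       printf '%s\n' "$ref:latest" ;;
    esac
}

normalize_image httpd                       # prints httpd:latest
normalize_image httpd:2.2.31                # prints httpd:2.2.31
normalize_image 192.168.7.10:5000/centos    # prints 192.168.7.10:5000/centos:latest
```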

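Pull an image

When a container is started from an image that does not exist on the local host, Docker downloads the image automatically. To download an image in advance, use the docker pull command

Syntax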

# docker pull [OPTIONS] NAME[:TAG|@DIGEST]

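OPTIONS:

-a: pull all tagged images in the repository

--disable-content-trust: skip image verification (enabled by default)

Search for images

There are generally two ways to find an image: search on the Docker Hub website (https://hub.docker.com/), or use the docker search command

Syntax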

# docker search [OPTIONS] TERM

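OPTIONS:

--automated: list only automated-build images

--no-trunc: show the full image description

--filter=stars: list only images with at least the given number of stars

Search for java images with at least 10 stars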

# docker search --filter=stars=10 java

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

node Node.js is a JavaScript-based platform for s… 8863 [OK]

tomcat Apache Tomcat is an open source implementati… 2739 [OK]

openjdk OpenJDK is an open-source implementation of … 2265 [OK]

java Java is a concurrent, class-based, and objec… 1976 [OK]

ghost Ghost is a free and open source blogging pla… 1188 [OK]

couchdb CouchDB is a database that uses JSON for doc… 347 [OK]

jetty Jetty provides a Web server and javax.servle… 336 [OK]

groovy Apache Groovy is a multi-faceted language fo… 92 [OK]

lwieske/java-8 Oracle Java 8 Container - Full + Slim - Base… 46 [OK]

nimmis/java-centos This is docker images of CentOS 7 with diffe… 42 [OK]

fabric8/java-jboss-openjdk8-jdk Fabric8 Java Base Image (JBoss, OpenJDK 8) 28 [OK]

cloudbees/java-build-tools Docker image with commonly used tools to bui… 15 [OK]

frekele/java docker run --rm --name java frekele/java 12 [OK]

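NAME: the name of the image repository

DESCRIPTION: the image description

OFFICIAL: whether the image is published officially by Docker

STARS: similar to stars on GitHub; the number of users who have liked the image

AUTOMATED: whether the image is an automated build

Remove an image

Syntax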

# docker rmi [OPTIONS] IMAGE [IMAGE...]

OPTIONS:

-f: force removal

--no-prune: do not delete untagged parent images (they are deleted by default)

Force removal of the local busybox image

# docker rmi -f busybox:latest

Untagged: busybox:latest

Deleted: sha256:020584afccce44678ec82676db80f68d50ea5c766b6e9d9601f7b5fc86dfb96d

Deleted: sha256:1da8e4c8d30765bea127dc2f11a17bc723b59480f4ab5292edb00eb8eb1d96b1

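Basic container management and usage

A container is a lightweight, portable, self-contained software packaging technology that lets an application run in the same way almost anywhere. A container consists of two parts: the application itself and its dependencies

Run a container

Run the first container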

# docker run -it --rm -d -p 80:80 nginx:latest

d7ab2c1aa4511f5ffc76a9bba3ab736fb9817793c6bec5af53e1cfddfb0904cd

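-i: interactive mode (keep STDIN open)

-t: allocate a pseudo-terminal

--rm: remove the container automatically when it exits, which avoids wasting disk space

-p: port mapping

-d: run the container in the background

Process

In brief

(1) Download the Nginx image

(2) Start the container and map port 80 of the container to port 80 of the host

When docker run creates a container, Docker does the following

(1) Checks whether the specified image exists locally and downloads it from the public registry if it does not

(2) Creates and starts a container from the image

(3) Allocates a filesystem and mounts a read-write layer on top of the read-only image layers

(4) Bridges a virtual interface into the container from the bridge interface configured on the host

(5) Assigns the container an IP address from the address pool

(6) Runs the application specified by the user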

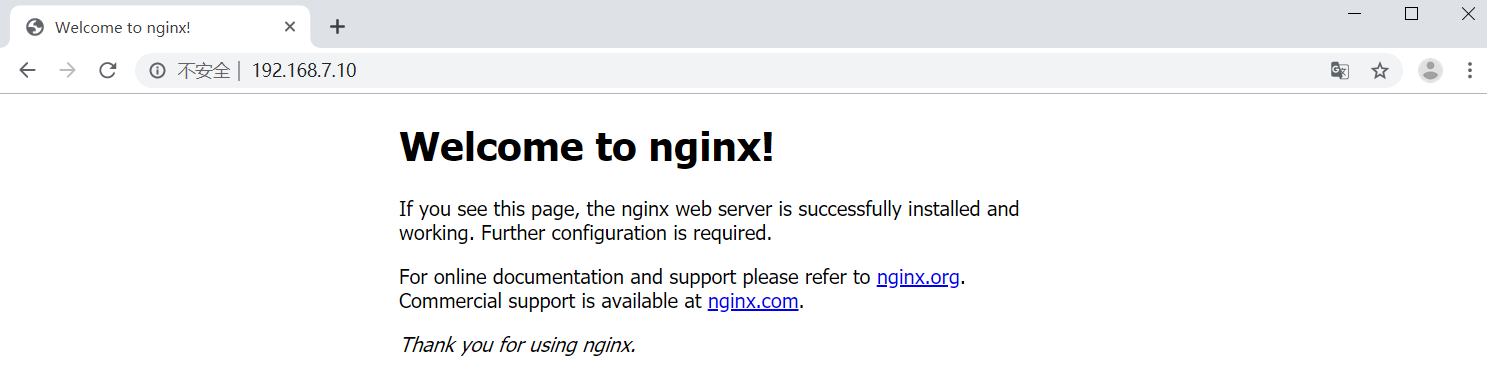

Verify that the container works

Open http://192.168.7.10 in a browser

Start containers

Syntax

# docker start [CONTAINER ID]

Start all Docker containers

# docker start $(docker ps -aq)

d7ab2c1aa451

be31c7adf30f

dff76b9fb042

Working with containers

List running containers

# docker ps        # or: docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d7ab2c1aa451 nginx:latest "nginx -g 'daemon of…" 8 minutes ago Up 8 minutes 0.0.0.0:80->80/tcp distracted_hoover

be31c7adf30f httpd:2.2.31 "/bin/bash" 26 minutes ago Up 26 minutes 80/tcp priceless_hellman

dff76b9fb042 registry:latest "/entrypoint.sh /etc…" 21 hours ago Up 44 minutes 0.0.0.0:5000->5000/tcp registry

List all containers

# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d7ab2c1aa451 nginx:latest "nginx -g 'daemon of…" 9 minutes ago Up 9 minutes 0.0.0.0:80->80/tcp distracted_hoover

be31c7adf30f httpd:2.2.31 "/bin/bash" 27 minutes ago Up 27 minutes 80/tcp priceless_hellman

dff76b9fb042 registry:latest "/entrypoint.sh /etc…" 21 hours ago Up About an hour 0.0.0.0:5000->5000/tcp registry

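Show detailed information about a container

# docker inspect [container ID or NAMES]

Show the resource usage of a container

# docker stats [container ID or NAMES]

View container logs

# docker logs [OPTIONS] [container ID or NAMES]

OPTIONS:

--details: show extra details

-f, --follow: follow the log output in real time

--since string: show logs since a timestamp or a relative time

--tail string: number of lines to show from the end of the logs (default: all)

-t, --timestamps: show timestamps

--until string: show logs before a timestamp or a relative time

Enter a container

Syntax

# docker exec -it [CONTAINER ID] bash

After entering the container, type exit or press Ctrl+D to leave it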

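Stop and remove containers

Remove a stopped container

# docker rm [CONTAINER ID]

Remove all stopped containers

# docker container prune

Remove unused volumes

# docker volume prune

Force removal of a running container

# docker rm -f [CONTAINER ID]

Stop all containers

# docker stop $(docker ps -aq)

Remove all containers

# docker rm $(docker ps -aq)

Stop the container process so that the container enters the stopped state

# docker container stop [CONTAINER ID]

Import/export containers

Export a container snapshot to a local file

Syntax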

# docker export [CONTAINER ID] > [tar file]

# docker export d7ab2c1aa451 > nginx.tar

# ll

总用量 1080192

-rw-------. 1 root root 1569 5月 28 02:19 anaconda-ks.cfg

drwxr-xr-x. 4 root root 34 10月 31 2019 Docker

-rw-r--r--. 1 root root 977776539 11月 4 2019 Docker.tar.gz

drwxr-xr-x. 2 root root 4096 10月 31 2019 images

-rwxr-xr-x. 1 root root 498 10月 31 2019 image.sh

drwxr-xr-x. 2 root root 40 11月 4 2019 jdk

-rw-r--r-- 1 root root 128325632 5月 28 17:30 nginx.tar

Import the container snapshot file back as an image

Syntax

# cat [tar file] | docker import - [name:tag]

# cat nginx.tar | docker import - nginx:test

sha256:743846df0ce06109d801cb4118e9e4d3082243d6323dbaa6efdcda74f4c000bf

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx test 743846df0ce0 17 seconds ago 125MB

httpd latest d3017f59d5e2 7 months ago 165MB

nginx latest 540a289bab6c 7 months ago 126MB

redis alpine 6f63d037b592 7 months ago 29.3MB

python 3.7-alpine b11d2a09763f 7 months ago 98.8MB

<none> <none> 4cda95efb0e4 7 months ago 80.6MB

centos latest 0f3e07c0138f 7 months ago 220MB

192.168.7.10:5000/centos latest 0f3e07c0138f 7 months ago 220MB

registry latest f32a97de94e1 14 months ago 25.8MB

swarm latest ff454b4a0e84 24 months ago 12.7MB

httpd 2.2.32 c51e86ea30d1 2 years ago 171MB

httpd 2.2.31 c8a7fb36e3ab 3 years ago 170MB

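When docker import loads a container snapshot into the local image store, all history and metadata are discarded; only the snapshot of the container's state at export time is kept

Building custom images

There are two main ways to build a custom image: docker commit and a Dockerfile

docker commit works like committing changes in a version control system: make changes in a container, then commit them as a new image

docker commit

Create a new image from a container

Syntax

# docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]

OPTIONS:

-a: author of the committed image

-c: apply Dockerfile instructions to the created image

-m: commit message

-p: pause the container during the commit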

List the running containers, then commit the nginx container as the image nginx:v1

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d7ab2c1aa451 nginx:latest "nginx -g 'daemon of…" 32 minutes ago Up 32 minutes 0.0.0.0:80->80/tcp distracted_hoover

be31c7adf30f httpd:2.2.31 "/bin/bash" About an hour ago Up About an hour 80/tcp priceless_hellman

dff76b9fb042 registry:latest "/entrypoint.sh /etc…" 22 hours ago Up About an hour 0.0.0.0:5000->5000/tcp registry

# docker commit d7ab2c1aa451 nginx:v1

sha256:0a18e29db3b007302cd0e0011b4e34a756ef44ce4939d51e599f986204ce1f34

# docker images nginx:v1

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v1 0a18e29db3b0 38 seconds ago 126MB

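Dockerfile

A Dockerfile is a text document that contains all the commands a user could run on the command line to assemble an image. Docker builds the image automatically by reading the instructions in the Dockerfile

Key points

Syntax

# docker build -f /path/to/a/Dockerfile .

A Dockerfile generally has four parts: base image information, maintainer information, image build instructions, and the instruction executed when the container starts. Lines beginning with "#" are comments

Main Dockerfile instructions:

FROM: specifies the base image; it must be the first instruction

MAINTAINER: maintainer information

RUN: a command executed while the image is being built

ADD: adds local files to the image; local tar archives are extracted automatically (archives fetched from a URL are not), and a remote URL can be used as the source, similar to wget

COPY: similar to ADD, but it never extracts archives and cannot fetch remote resources

CMD: the command run when a container is started from the image, not at build time

ENTRYPOINT: configures the container to run as an executable. Combined with CMD, the application itself can be left out of CMD so that CMD supplies only the default arguments

LABEL: adds metadata to the image

ENV: sets environment variables

EXPOSE: declares the ports used to communicate with the outside

VOLUME: declares directories for persistent data

WORKDIR: sets the working directory, similar to the cd command

USER: sets the user name or UID used to run the container; subsequent RUN instructions also run as this user. USER accepts a user name or UID, optionally combined with a group name or GID. When a service does not need administrator privileges, use this instruction to run it as an unprivileged user

ARG: defines variables that can be passed to the build at build time

ONBUILD: sets a trigger that runs when the image is used as the base of another build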
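How ENTRYPOINT and CMD combine is easier to see in a tiny example. The sketch below writes a throwaway Dockerfile in which ENTRYPOINT fixes the executable and CMD supplies only the default argument. The scratch directory, the image name `echo-demo`, and the choice of the busybox base image are assumptions made purely for illustration.

```shell
# Write a minimal Dockerfile into a scratch directory.
demo_dir="$(mktemp -d)"
cat > "$demo_dir/Dockerfile" <<'EOF'
FROM busybox
# ENTRYPOINT fixes the executable that every container runs.
ENTRYPOINT ["echo"]
# CMD supplies only the default argument passed to ENTRYPOINT.
CMD ["hello"]
EOF

echo "Dockerfile written to $demo_dir"

# On a host with Docker, the image could then be built and tried out:
#   docker build -t echo-demo "$demo_dir"
#   docker run --rm echo-demo          # runs: echo hello
#   docker run --rm echo-demo world    # runs: echo world (CMD is replaced)
```

Arguments given after the image name replace CMD but leave ENTRYPOINT in place, which is why only the argument changes between the two runs.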

Build preparation

Using centos:latest as the base image, install JDK 1.8 and build a new image named centos-jdk

Create a directory to hold the JDK package and the Dockerfile

# mkdir centos-jdk

# mv jdk-8u141-linux-x64.tar.gz ./centos-jdk/

# cd centos-jdk/

Write the Dockerfile

# vi Dockerfile

Contents

# CentOS with JDK 8

# Author kei

# Base image

FROM centos

# Maintainer

MAINTAINER kei

# Create a directory for the JDK files

RUN mkdir /usr/local/java

# Copy the JDK archive into the image; ADD extracts it automatically

ADD jdk-8u141-linux-x64.tar.gz /usr/local/java/

# Create a symbolic link

RUN ln -s /usr/local/java/jdk1.8.0_141 /usr/local/java/jdk

# Set environment variables

ENV JAVA_HOME /usr/local/java/jdk

ENV JRE_HOME ${JAVA_HOME}/jre

ENV CLASSPATH .:${JAVA_HOME}/lib:${JRE_HOME}/lib

ENV PATH ${JAVA_HOME}/bin:$PATH

Build the new image

# docker build -t="centos-jdk" .

Sending build context to Docker daemon 185.5MB

Step 1/9 : FROM centos

---> 0f3e07c0138f

Step 2/9 : MAINTAINER dockerzlnewbie

---> Running in 1a6a5c210531

Removing intermediate container 1a6a5c210531

---> 286d78e0b9bf

Step 3/9 : RUN mkdir /usr/local/java

---> Running in 2dbbac61b2cf

Removing intermediate container 2dbbac61b2cf

---> 369567834d80

Step 4/9 : ADD jdk-8u141-linux-x64.tar.gz /usr/local/java/

---> 8fb102032ae2

Step 5/9 : RUN ln -s /usr/local/java/jdk1.8.0_141 /usr/local/java/jdk

---> Running in d8301e932f7c

Removing intermediate container d8301e932f7c

---> 7c82ee6703c5

Step 6/9 : ENV JAVA_HOME /usr/local/java/jdk

---> Running in d8159a32efae

Removing intermediate container d8159a32efae

---> d270abf08fa2

Step 7/9 : ENV JRE_HOME ${JAVA_HOME}/jre

---> Running in 5206ba2ec963

Removing intermediate container 5206ba2ec963

---> a52dc52bae76

Step 8/9 : ENV CLASSPATH .:${JAVA_HOME}/lib:${JRE_HOME}/lib

---> Running in 41fbd969bd90

Removing intermediate container 41fbd969bd90

---> ff44f5f90877

Step 9/9 : ENV PATH ${JAVA_HOME}/bin:$PATH

---> Running in 7affe7505c82

Removing intermediate container 7affe7505c82

---> bdf402785277

Successfully built bdf402785277

Successfully tagged centos-jdk:latest

View the newly built image

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos-jdk latest bdf402785277 11 minutes ago 596MB

Run a container from the new image to verify that the JDK was installed successfully

# docker run -it centos-jdk /bin/bash

java -version

java version "1.8.0_141"

Java(TM) SE Runtime Environment (build 1.8.0_141-b15)

Java HotSpot(TM) 64-Bit Server VM (build 25.141-b15, mixed mode)

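Docker container orchestration

Prepare two virtual machines: one as the Swarm cluster manager node (master) and one as the Swarm cluster worker node (node). Both nodes already have their hostname and network interface configured and docker-ce installed

Container orchestration tools provide powerful solutions for coordinating the creation, management, and updating of containers across multiple hosts

Swarm is Docker's own orchestration tool. It is now fully integrated into Docker Engine and uses the standard API and networking

Deploying a Swarm cluster

Configure host name mapping (both nodes)

Edit /etc/hosts to map the host names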

# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.7.10 master

192.168.7.20 node

Configure time synchronization

Install the service (both nodes)

Install the chrony service

# yum install -y chrony

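master node

Edit /etc/chrony.conf: comment out the default NTP servers, use the local clock as the time source (local stratum 10), and allow other nodes to synchronize from this node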

# sed -i 's/^server/#&/' /etc/chrony.conf

# vi /etc/chrony.conf

local stratum 10

server master iburst

allow all

Enable chronyd at boot and restart it to start time synchronization

# systemctl enable chronyd && systemctl restart chronyd

worker node (node)

Edit /etc/chrony.conf and set the internal master node as the upstream NTP server

# sed -i 's/^server/#&/' /etc/chrony.conf

# echo server 192.168.7.10 iburst >> /etc/chrony.conf

Restart the service and enable it at boot

# systemctl enable chronyd && systemctl restart chronyd

Check synchronization (both nodes)

# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

==================================================================

^* master 10 6 77 7 +13ns[-2644ns] +/- 13us

Configure the Docker API (both nodes)

Enable the Docker API

# vi /lib/systemd/system/docker.service

Change the line

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

to

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock

# systemctl daemon-reload

# systemctl restart docker

# ./image.sh

Initialize the cluster (master node)

Create the Swarm cluster

# docker swarm init --advertise-addr 192.168.7.10

Swarm initialized: current node (jit2j1itocmsynhecj905vfwp) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-2oyrpgkp41z40zg0z6l0yppv6420vz18rr171kqv0mfsbiufii-c3ficc1qh782wo567uav16n3n 192.168.7.10:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

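The --advertise-addr option of the init command specifies the IP address that the manager node advertises. Other nodes must be able to reach the manager node at this address

The output contains three parts:

(1) The Swarm was created successfully and the current node (master) became a manager node

(2) The command to run to add a worker node

(3) The command to run to add a manager node

Join the node to the cluster (worker node)

Copy the docker swarm join command from the output above and run it to join the Swarm cluster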

# docker swarm join --token SWMTKN-1-2oyrpgkp41z40zg0z6l0yppv6420vz18rr171kqv0mfsbiufii-c3ficc1qh782wo567uav16n3n 192.168.7.10:2377

This node joined a swarm as a worker.

Verify the cluster (master node)

Check the status of each node

# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

jit2j1itocmsynhecj905vfwp * master Ready Active Leader 18.09.6

8mww97xnbfxfrbzqndplxv3vi node Ready Active 18.09.6

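Install Portainer (master node)

Portainer is a graphical management tool for Docker. It provides a status dashboard, quick deployment from application templates, basic operations on containers, images, networks, and volumes (including uploading and downloading images and creating containers), event log display, container console access, centralized management of Swarm clusters and services, and user login management and access control

# docker volume create portainer_data

portainer_data

# docker service create --name portainer --publish 9000:9000 --replicas=1 --constraint 'node.role == manager' --mount type=bind,src=//var/run/docker.sock,dst=/var/run/docker.sock --mount type=volume,src=portainer_data,dst=/data portainer/portainer -H unix:///var/run/docker.sock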

nfgx3xci88rdcdka9j9cowv8g

overall progress: 1 out of 1 tasks

1/1: running

verify: Service converged

Log in to Portainer

Open a browser and go to http://master_IP:9000 to reach the Portainer home page

Running a service

Run (master node)

Deploy a Service that runs the httpd image

# docker service create --name web_server httpd

List the Services in the Swarm

# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

2g18082sfqa9 web_server replicated 1/1 httpd:latest

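REPLICAS shows the current replica status. 1/1 means that the desired number of container replicas for web_server is 1 and that 1 replica is currently running, so the Service is fully deployed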
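The running/desired reading of the REPLICAS column can be turned into a simple check. A minimal sketch, assuming the value has the `running/desired` form shown above; `replicas_converged` is a hypothetical helper name.

```shell
# replicas_converged VALUE
# VALUE is a REPLICAS field such as "1/1" or "3/5".
# Succeeds when the running count equals the desired count.
replicas_converged() {
    running="${1%%/*}"    # part before the slash
    desired="${1##*/}"    # part after the slash
    [ "$running" -eq "$desired" ]
}

# On a manager node the value could be read with the CLI's format option:
#   replicas_converged "$(docker service ls --filter name=web_server --format '{{.Replicas}}')"

if replicas_converged "1/1"; then
    echo "service converged"
fi
```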

Check the state of each replica of the Service

# docker service ps web_server

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

4vtrynwddd7m web_server.1 httpd:latest node Running Running 27 minutes ago

The Service's only replica was scheduled on node, and its current state is Running

Scaling a service (master node)

Increase the number of replicas to 5

# docker service scale web_server=5

web_server scaled to 5

overall progress: 5 out of 5 tasks

1/5: running

2/5: running

3/5: running

4/5: running

5/5: running

verify: Service converged

View the replica details

# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

2g18082sfqa9 web_server replicated 5/5 httpd:latest

# docker service ps web_server

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

4vtrynwddd7m web_server.1 httpd:latest node Running Running 36 minutes ago

n3iscmvv9fh5 web_server.2 httpd:latest master Running Running about a minute ago

mur6cc8k6x7e web_server.3 httpd:latest node Running Running 3 minutes ago

rx52najc1txw web_server.4 httpd:latest master Running Running about a minute ago

jl0xjv427goz web_server.5 httpd:latest node Running Running 3 minutes ago
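To see at a glance how the replicas are spread across nodes, the NODE column can be counted. A small sketch, assuming one node name per input line; `count_tasks_per_node` is an illustrative helper, not a Docker command.

```shell
# Reads one node name per line on stdin and prints "NODE COUNT" for each
# node, sorted by node name.
count_tasks_per_node() {
    awk 'NF { count[$1]++ } END { for (n in count) print n, count[n] }' | sort
}

# On a manager node:
#   docker service ps web_server --filter desired-state=running --format '{{.Node}}' | count_tasks_per_node

# The five replicas listed above, by node:
printf 'node\nmaster\nnode\nmaster\nnode\n' | count_tasks_per_node
```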

Reduce the number of replicas

# docker service scale web_server=2

web_server scaled to 2

overall progress: 2 out of 2 tasks

1/2: running

2/2: running

verify: Service converged

# docker service ps web_server

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

4vtrynwddd7m web_server.1 httpd:latest node Running Running 40 minutes ago

n3iscmvv9fh5 web_server.2 httpd:latest master Running Running 5 minutes ago

Accessing a service (master node)

Check the container's network configuration

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

cde0d3489429 httpd:latest "httpd-foreground" 9 minutes ago Up 9 minutes 80/tcp web_server.2.n3iscmvv9fh590fx452ezu9hu

Expose the Service externally

# docker service update --publish-add 8080:80 web_server

web_server

overall progress: 2 out of 2 tasks

1/2: running

2/2: running

verify: Service converged

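Service data storage (master node)

Volume NFS shared storage mode: data is synchronized from the manager host to the worker hosts, and from the worker hosts into the containers

Install the NFS server, edit the NFS main configuration file, set permissions, and start the service

# yum install -y nfs-utils

Export the directory to the relevant subnet with read-write access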

# vi /etc/exports

/root/share 192.168.7.10/24(rw,async,insecure,anonuid=1000,anongid=1000,no_root_squash)

Create the shared directory and set its permissions

# mkdir -p /root/share

# chmod 777 /root/share

# exportfs -rv

exporting 192.168.7.10/24:/root/share

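Start the RPC service and enable it at boot

# systemctl start rpcbind

# systemctl enable rpcbind

Start the NFS service and enable it at boot

# systemctl start nfs

# systemctl enable nfs

Check that the NFS directory was exported successfully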

# cat /var/lib/nfs/etab

/root/share 192.168.7.10/24(rw,async,wdelay,hide,nocrossmnt,insecure,no_root_squash,no_all_squash,no_subtree_check,secure_locks,acl,no_pnfs,anonuid=1000,anongid=1000,sec=sys,rw,insecure,no_root_squash,no_all_squash)

Install the NFS client and start the services (worker node)

# yum install nfs-utils -y

# systemctl start rpcbind

# systemctl enable rpcbind

# systemctl start nfs

# systemctl enable nfs

Create the Docker volume (both nodes)

# docker volume create --driver local --opt type=nfs --opt o=addr=192.168.7.10,rw --opt device=:/root/share foo33

View the volumes

# docker volume ls

DRIVER VOLUME NAME

local foo33

local nfs-test

local portainer_data

View the volume details

# docker volume inspect foo33

[

{

"CreatedAt": "2020-5-31T07:36:47Z",

"Driver": "local",

"Labels": {},

"Mountpoint": "/var/lib/docker/volumes/foo33/_data",

"Name": "foo33",

"Options": {

"device": ":/root/share",

"o": "addr=192.168.7.10,rw",

"type": "nfs"

},

"Scope": "local"

}

]

Create and publish the service (master node)

# docker service create --name test-nginx-nfs --publish 80:80 --mount type=volume,source=foo33,destination=/app/share --replicas 3 nginx

otp60kfc3br7fz5tw4fymhtcy

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service converged

See which nodes the service tasks are running on

# docker service ps test-nginx-nfs

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

z661rc7h8rrn test-nginx-nfs.1 nginx:latest node Running Running about a minute ago

j2b9clk37kuc test-nginx-nfs.2 nginx:latest node Running Running about a minute ago

nqduca4andz0 test-nginx-nfs.3 nginx:latest master Running Running about a minute ago

Create an index.html file

# cd /root/share/

# touch index.html

# ll

total 0

-rw-r--r-- 1 root root 0 Oct 31 07:44 index.html

Check the mount on the host

# docker volume inspect foo33

[

{

"CreatedAt": "2020-5-31T07:44:49Z",

"Driver": "local",

"Labels": {},

"Mountpoint": "/var/lib/docker/volumes/foo33/_data",

"Name": "foo33",

"Options": {

"device": ":/root/share",

"o": "addr=192.168.7.10,rw",

"type": "nfs"

},

"Scope": "local"

}

]

# ls /var/lib/docker/volumes/foo33/_data

index.html

Check the directory inside the container

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a1bce967830e nginx:latest "nginx -g 'daemon of…" 6 minutes ago Up 6 minutes 80/tcp test-nginx-nfs.3.nqduca4andz0nsxus11nwd8qt

# docker exec -it a1bce967830e bash

root@a1bce967830e:/# ls app/share/

index.html

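Node scheduling (master node)

By default the master is also a worker node, so replicas run on the master as well. If you do not want Services to run on the master, set its availability to drain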

# docker node update --availability drain master

master

Check the current status of each node

# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

jit2j1itocmsynhecj905vfwp * master Ready Drain Leader 18.09.6

8mww97xnbfxfrbzqndplxv3vi node Ready Active 18.09.6

# docker service ps test-nginx-nfs

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

z661rc7h8rrn test-nginx-nfs.1 nginx:latest node Running Running 10 minutes ago

j2b9clk37kuc test-nginx-nfs.2 nginx:latest node Running Running 10 minutes ago

rawt8mtsstwd test-nginx-nfs.3 nginx:latest node Running Running 30 seconds ago

nqduca4andz0 \_ test-nginx-nfs.3 nginx:latest master Shutdown Shutdown 32 seconds ago

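The replica test-nginx-nfs.3 on master has been shut down, and a new test-nginx-nfs.3 replica was started on node to keep the replica count at 3

Deploying a native Kubernetes cloud platform

Deployment architecture

Kubernetes (K8S for short) is an open-source container cluster management system that automates the deployment, scaling, and maintenance of container clusters. It is both a container orchestration tool and a leading distributed-architecture solution based on container technology. Building on Docker, it provides containerized applications with deployment, resource scheduling, service discovery, and dynamic scaling, which makes large container clusters easier to manage

Node planning

Prepare two virtual machines, one master node and one worker node (node). Install CentOS_7.2.1511 on both and configure their network interfaces and hostnames

Basic environment configuration

Configure the YUM repository (both nodes)

Upload the provided archive K8S.tar.gz to the /root directory and extract it

# tar -zxvf K8S.tar.gz

Configure the local YUM repository

# cat /etc/yum.repos.d/local.repo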

[kubernetes]

name=kubernetes

baseurl=file:///root/Kubernetes

gpgcheck=0

enabled=1

Upgrade the system kernel (both nodes)

# yum upgrade -y

Configure host name mapping (both nodes)

Edit the /etc/hosts file

# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.7.10 master

192.168.7.20 node

Configure the firewall (both nodes)

Configure the firewall and SELinux

# systemctl stop firewalld && systemctl disable firewalld

# iptables -F

# iptables -X

# iptables -Z

# /usr/sbin/iptables-save

# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# reboot

Disable swap (both nodes)

# swapoff -a

# sed -i 's|^/dev/mapper/centos-swap|#&|' /etc/fstab
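The swap device name differs between installations, so matching on the filesystem type instead of the device path can be more robust. The following is a sketch under that assumption; `comment_out_swap` is a hypothetical helper, shown here taking the file path as an argument so that it can be tried on a copy of /etc/fstab first.

```shell
# comment_out_swap FSTAB
# Prefixes "#" to every uncommented line whose third field (filesystem type)
# is "swap" and leaves all other lines untouched.
comment_out_swap() {
    fstab="$1"
    tmp="$fstab.tmp.$$"
    sed -E 's|^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$|#\1|' \
        "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab" &&    # rewrite in place so ownership and mode are kept
    rm -f "$tmp"
}

# On a real node (as root), after "swapoff -a":
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap /etc/fstab
```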

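Configure time synchronization

Install the service (both nodes)

Install the chrony service

# yum install -y chrony

master node

Edit /etc/chrony.conf: comment out the default NTP servers, use the local clock as the time source (local stratum 10), and allow other nodes to synchronize from this node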

# sed -i 's/^server/#&/' /etc/chrony.conf

# vi /etc/chrony.conf

local stratum 10

server master iburst

allow all

Enable chronyd at boot and restart it to start time synchronization

# systemctl enable chronyd && systemctl restart chronyd

# timedatectl set-ntp true

worker node (node)

Edit /etc/chrony.conf to set the internal master node as the upstream NTP server, then restart the service and enable it at boot

# sed -i 's/^server/#&/' /etc/chrony.conf

# echo server 192.168.7.10 iburst >> /etc/chrony.conf

# systemctl enable chronyd && systemctl restart chronyd

Run the check (both nodes)

Run chronyc sources. If the output contains a line that begins with "^*", time synchronization has succeeded

# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

==================================================================

^* master 10 6 77 7 +13ns[-2644ns] +/- 13us
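The "^*" rule can be checked in a script instead of by eye. A minimal sketch; `chrony_synced` is a hypothetical helper that reads chronyc sources output on standard input.

```shell
# Succeeds if the text on stdin contains a line that starts with "^*",
# which chronyc uses to mark the currently selected time source.
chrony_synced() {
    grep -q '^\^\*'
}

# On a node:
#   chronyc sources | chrony_synced && echo "time is synchronized"

# Example with the output line shown above:
printf '%s\n' '^* master 10 6 77 7 +13ns[-2644ns] +/- 13us' | chrony_synced \
    && echo "time is synchronized"
```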

Configure IP forwarding (both nodes)

# vi /etc/sysctl.d/K8S.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

# modprobe br_netfilter

# sysctl -p /etc/sysctl.d/K8S.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Configure IPVS (both nodes)

# vi /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

# chmod 755 /etc/sysconfig/modules/ipvs.modules

# bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Check that the required kernel modules are loaded

# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 15053 0

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 139224 2 ip_vs,nf_conntrack_ipv4

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
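Rather than scanning the lsmod output by eye, the five modules loaded by ipvs.modules can be checked one by one. A sketch, assuming lsmod-style text with the module name in the first column; `missing_ipvs_modules` is an illustrative helper name.

```shell
# missing_ipvs_modules LSMOD_TEXT
# Prints the name of each required module that does not appear in the first
# column of LSMOD_TEXT. Prints nothing when all modules are loaded.
missing_ipvs_modules() {
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        printf '%s\n' "$1" |
            awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' ||
            echo "$mod"
    done
}

# On a node:
#   missing="$(missing_ipvs_modules "$(lsmod)")"
#   [ -z "$missing" ] && echo "all IPVS modules loaded" || echo "missing: $missing"
```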

Install the ipset and ipvsadm packages

# yum install -y ipset ipvsadm

Install Docker (both nodes)

Install Docker, start the Docker engine, and enable it at boot

# yum install -y yum-utils device-mapper-persistent-data lvm2

# yum install docker-ce-18.09.6 docker-ce-cli-18.09.6 containerd.io -y

# mkdir -p /etc/docker

# vi /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

# systemctl daemon-reload

# systemctl restart docker

# systemctl enable docker

# docker info |grep Cgroup

Cgroup Driver: systemd

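Installing the Kubernetes cluster

Install the tools (both nodes)

The kubelet communicates with the rest of the cluster and manages the lifecycle of the Pods and containers on its own node. kubeadm is the automated deployment tool for Kubernetes; it lowers the difficulty of deployment and improves efficiency. kubectl is the command-line management tool for Kubernetes clusters

Install the Kubernetes tools and start the kubelet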

# yum install -y kubelet-1.14.1 kubeadm-1.14.1 kubectl-1.14.1

# systemctl enable kubelet && systemctl start kubelet

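## It is normal for the kubelet to fail to start at this point; it starts successfully once the cluster has been initialized

Initialize the cluster (master node)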

# ./kubernetes_base.sh

# kubeadm init --apiserver-advertise-address 192.168.7.10 --kubernetes-version="v1.14.1" --pod-network-cidr=10.16.0.0/16 --image-repository=registry.aliyuncs.com/google_containers

[init] Using Kubernetes version: v1.14.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.18.4.33]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [10.18.4.33 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [10.18.4.33 127.0.0.1 ::1]

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 25.502670 seconds

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --experimental-upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: i9k9ou.ujf3blolfnet221b

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.7.10:6443 --token i9k9ou.ujf3blolfnet221b \
    --discovery-token-ca-cert-hash sha256:a0402e0899cf798b72adfe9d29ae2e9c20d5c62e06a6cc6e46c93371436919dc

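Initialization goes through the following 15 steps; the output of each step is prefixed with [step name]:

① [init]: Initializes the cluster with the specified version.

② [preflight]: Runs the pre-initialization checks and pulls the required Docker images.

③ [kubelet-start]: Generates the kubelet configuration file /var/lib/kubelet/config.yaml. The kubelet cannot start without this file, so a kubelet started before initialization actually fails to start.

④ [certificates]: Generates the certificates used by Kubernetes and stores them in /etc/kubernetes/pki.

⑤ [kubeconfig]: Generates the kubeconfig files in /etc/kubernetes; the components use the corresponding file to communicate with each other.

⑥ [control-plane]: Installs the Master components from the YAML files in /etc/kubernetes/manifests.

⑦ [etcd]: Installs the etcd service from /etc/kubernetes/manifests/etcd.yaml.

⑧ [wait-control-plane]: Waits for the Master components deployed by control-plane to start.

⑨ [apiclient]: Checks the status of the Master component services.

⑩ [upload-config]: Uploads the configuration to the cluster.

⑪ [kubelet]: Configures the kubelet through a ConfigMap.

⑫ [patchnode]: Records the CRI socket information on the Node object as an annotation.

⑬ [mark-control-plane]: Labels the current node with the master role and adds the NoSchedule taint, so that by default Pods are not scheduled onto the master node.

⑭ [bootstrap-token]: Generates the bootstrap token. Record it, because it is needed later when adding nodes to the cluster with kubeadm join.

⑮ [addons]: Installs the add-ons CoreDNS and kube-proxy.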

The last command in the output (kubeadm join) is used by other nodes to join the cluster
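The join command has a fixed shape, so it can be reassembled if the original output is no longer at hand. The following is a minimal sketch, not part of kubeadm: build_join_command is a hypothetical helper that checks the token against the bootstrap-token pattern (6 characters, a dot, 16 characters, lowercase letters and digits) and prints the command. The endpoint, token and hash are the sample values from the output above.

```shell
# Hypothetical helper, not part of kubeadm: assemble a "kubeadm join" command.
# $1 = API server endpoint, $2 = bootstrap token, $3 = CA certificate hash
build_join_command() {
    # Bootstrap tokens have the form <6 chars>.<16 chars>, lowercase letters and digits.
    if ! printf '%s\n' "$2" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "invalid bootstrap token: $2" >&2
        return 1
    fi
    # The hash is passed as sha256:<64 hex digits>.
    if ! printf '%s\n' "$3" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
        echo "invalid CA cert hash: $3" >&2
        return 1
    fi
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' "$1" "$2" "$3"
}

# Sample values copied from the kubeadm init output above.
build_join_command 192.168.7.10:6443 i9k9ou.ujf3blolfnet221b \
    sha256:a0402e0899cf798b72adfe9d29ae2e9c20d5c62e06a6cc6e46c93371436919dc
```

Bootstrap tokens expire (the default lifetime is 24 hours). If the token is no longer valid, running kubeadm token create --print-join-command on the master prints a fresh join command.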

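By default, kubectl looks for a file named config in the .kube directory under the home directory of the user running it. Configure the kubectl tool as follows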

# mkdir -p $HOME/.kube

# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# sudo chown $(id -u):$(id -g) $HOME/.kube/config
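The lookup described above can be sketched as a small function. This is an illustration only: kubeconfig_path is a hypothetical helper, and it assumes no --kubeconfig flag is passed. When the KUBECONFIG environment variable is set, kubectl uses it instead of the default file.

```shell
# Hypothetical helper: print the kubeconfig location kubectl would consult
# when no --kubeconfig flag is given.
kubeconfig_path() {
    if [ -n "${KUBECONFIG:-}" ]; then
        # KUBECONFIG may hold a colon-separated list of files.
        printf '%s\n' "$KUBECONFIG"
    else
        printf '%s\n' "$HOME/.kube/config"
    fi
}

kubeconfig_path
```

As root, an alternative to copying the file is to run export KUBECONFIG=/etc/kubernetes/admin.conf in the current shell.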

Check the cluster status

# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}
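For scripted checks, the table can be filtered instead of read by eye. A minimal sketch, assuming the column layout shown above (NAME in the first column, STATUS in the second); unhealthy_components is a hypothetical helper name.

```shell
# Hypothetical helper: read "kubectl get cs" output on stdin and print the
# name of every component whose STATUS column is not "Healthy".
unhealthy_components() {
    awk '$1 != "NAME" && NF > 0 && $2 != "Healthy" { print $1 }'
}

# Sample input copied from the output above; nothing is printed because
# every component is Healthy.
unhealthy_components <<'EOF'
NAME STATUS MESSAGE ERROR
scheduler Healthy ok
controller-manager Healthy ok
etcd-0 Healthy {"health":"true"}
EOF
```

Against a live cluster the usage would be: kubectl get cs | unhealthy_components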

Configure the network (master node)

Deploy the flannel network

# kubectl apply -f yaml/kube-flannel.yml

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-8686dcc4fd-v88br 0/1 Running 0 4m42s

coredns-8686dcc4fd-xf28r 0/1 Running 0 4m42s

etcd-master 1/1 Running 0 3m51s

kube-apiserver-master 1/1 Running 0 3m46s

kube-controller-manager-master 1/1 Running 0 3m48s

kube-flannel-ds-amd64-6hf4w 1/1 Running 0 24s

kube-proxy-r7njz 1/1 Running 0 4m42s

kube-scheduler-master 1/1 Running 0 3m37s
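In the listing above the two coredns Pods are Running but still show 0/1 in the READY column. A small filter makes such Pods easy to spot. This is a sketch under the assumption that the input is the namespace-scoped listing shown above (NAME first, READY second); not_ready_pods is a hypothetical helper name.

```shell
# Hypothetical helper: read "kubectl get pods -n <namespace>" output on stdin
# and print the name of every Pod whose READY column is not "n/n".
not_ready_pods() {
    awk '$1 != "NAME" && NF >= 2 {
        split($2, r, "/")              # READY looks like "ready/total"
        if (r[1] != r[2]) print $1
    }'
}

# Sample input: a subset of the listing above.
not_ready_pods <<'EOF'
NAME READY STATUS RESTARTS AGE
coredns-8686dcc4fd-v88br 0/1 Running 0 4m42s
coredns-8686dcc4fd-xf28r 0/1 Running 0 4m42s
etcd-master 1/1 Running 0 3m51s
kube-flannel-ds-amd64-6hf4w 1/1 Running 0 24s
EOF
```

Against a live cluster the usage would be: kubectl get pods -n kube-system | not_ready_pods. Rerunning it until it prints nothing is one way to wait for the network to settle before joining nodes.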

Join the cluster

Run on the master node

# ./kubernetes_base.sh

On the node, use the kubeadm join command to add the Node to the cluster

# kubeadm join 192.168.7.10:6443 --token qf4lef.d83xqvv00l1zces9 \
    --discovery-token-ca-cert-hash sha256:ec7c7db41a13958891222b2605065564999d124b43c8b02a3b32a6b2ca1a1c6c

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

On the master node, check the status of every node

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 4m53s v1.14.1

node Ready <none> 13s v1.14.1

Install the Dashboard (master node)

Install the Dashboard

# kubectl create -f yaml/kubernetes-dashboard.yaml

Create the administrator account

# kubectl create -f yaml/dashboard-adminuser.yaml

serviceaccount/kubernetes-dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

Check the status of all Pods

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-8686dcc4fd-8jqzh 1/1 Running 0 11m

coredns-8686dcc4fd-dkbhw 1/1 Running 0 11m

etcd-master 1/1 Running 0 11m

kube-apiserver-master 1/1 Running 0 11m

kube-controller-manager-master 1/1 Running 0 11m

kube-flannel-ds-amd64-49ssg 1/1 Running 0 7m56s

kube-flannel-ds-amd64-rt5j8 1/1 Running 0 7m56s

kube-proxy-frz2q 1/1 Running 0 11m

kube-proxy-xzq4t 1/1 Running 0 11m

kube-scheduler-master 1/1 Running 0 11m

kubernetes-dashboard-5f7b999d65-djgxj 1/1 Running 0 11m

Look up the port number

# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 15m

kubernetes-dashboard NodePort 10.102.195.101 <none> 443:30000/TCP 4m43s

Enter the address https://192.168.7.10:30000 in a browser to access the Kubernetes Dashboard
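The port in this address is the node port from the PORT(S) column of the kubernetes-dashboard service (443:30000/TCP). A minimal sketch of deriving the URL from that value; nodeport_url is a hypothetical helper and it handles a single port mapping only.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value such as
# "443:30000/TCP" and print an https URL for the given node address.
# $1 = node IP or host name, $2 = PORT(S) value of a NodePort service
nodeport_url() {
    port=${2#*:}        # drop the service port: "443:30000/TCP" -> "30000/TCP"
    port=${port%%/*}    # drop the protocol:     "30000/TCP"     -> "30000"
    printf 'https://%s:%s\n' "$1" "$port"
}

nodeport_url 192.168.7.10 443:30000/TCP
```

The node port can also be read directly from the API with kubectl get svc kubernetes-dashboard -n kube-system -o jsonpath='{.spec.ports[0].nodePort}'.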

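Click the "Accept the Risk and Continue" button to reach the Kubernetes Dashboard authentication page

Obtain the authentication token for accessing the Dashboard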

# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep kubernetes-dashboard-admin-token | awk '{print $1}')

Name: kubernetes-dashboard-admin-token-j5dvd

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard-admin

kubernetes.io/service-account.uid: 1671a1e1-cbb9-11e9-8009-ac1f6b169b00

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi1qNWR2ZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjE2NzFhMWUxLWNiYjktMTFlOS04MDA5LWFjMWY2YjE2OWIwMCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.u6ZaVO-WR632jpFimnXTk5O376IrZCCReVnu2Brd8QqsM7qgZNTHD191Zdem46ummglbnDF9Mz4wQBaCUeMgG0DqCAh1qhwQfV6gVLVFDjHZ2tu5yn0bSmm83nttgwMlOoFeMLUKUBkNJLttz7-aDhydrbJtYU94iG75XmrOwcVglaW1qpxMtl6UMj4-bzdMLeOCGRQBSpGXmms4CP3LkRKXCknHhpv-pqzynZu1dzNKCuZIo_vv-kO7bpVvi5J8nTdGkGTq3FqG6oaQIO-BPM6lMWFeLEUkwe-EOVcg464L1i6HVsooCESNfTBHjjLXZ0WxXeOOslyoZE7pFzA0qg
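The token is a JSON Web Token: three base64url-encoded segments separated by dots. Decoding the middle segment is a quick way to confirm which service account a token belongs to before pasting it into the browser. The following is a sketch, assuming GNU coreutils base64 is available; jwt_payload is a hypothetical helper and the sample token is made up for illustration.

```shell
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
# $1 = token of the form header.payload.signature
jwt_payload() {
    # Convert the base64url alphabet to the standard base64 alphabet.
    seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    # base64url omits padding; restore it so that base64 accepts the input.
    case $(( ${#seg} % 4 )) in
        2) seg="$seg==" ;;
        3) seg="$seg=" ;;
    esac
    printf '%s' "$seg" | base64 -d
    echo
}

# Made-up sample token whose payload is {"sub":"x"}.
jwt_payload 'e30.eyJzdWIiOiJ4In0.sig'
```

For the token shown above, the decoded claims should name the kube-system namespace and the kubernetes-dashboard-admin service account, matching the Annotations in the secret description.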

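Enter the token in the browser; after authentication you reach the Kubernetes console

Configure Kuboard (master node)

Kuboard is a free graphical management tool for Kubernetes that aims to help users get microservices running on Kubernetes quickly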

# kubectl create -f yaml/kuboard.yaml

deployment.apps/kuboard created

service/kuboard created

serviceaccount/kuboard-user created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-user created

serviceaccount/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer-node created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer-pvp created

ingress.extensions/kuboard created

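Enter the address http://192.168.7.10:31000 in a browser to reach the Kuboard authentication page

Enter the token in the Token text box to enter the Kuboard console

Configure the Kubernetes cluster

Enable IPVS (master node)

IPVS is load-balancing software that works at layer 4, the transport layer (IP + port)

Starting from the TCP SYN packet, IPVS tracks the state of every packet in a TCP connection, which guarantees that all packets of one connection reach the same backend

Depending on how request and response packets are handled, IPVS has the following 4 working modes:

① NAT mode

② DR (Direct Routing) mode

③ TUN (IP Tunneling) mode

④ FULLNAT mode

Depending on the return path of the response packets, these fall into the following 2 categories:

① Two-arm mode: requests, forwarding and responses travel the same path; the client and the IPVS director, and the IPVS director and the backend real server, are each connected by a request path and a return path.

② Triangle mode: the request, forwarding and return paths connect the client, the IPVS director and the backend real server into a triangle.

Edit the config.conf entry in the kube-system/kube-proxy ConfigMap and set mode: "ipvs"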

# kubectl edit cm kube-proxy -n kube-system

ipvs:

excludeCIDRs: null

minSyncPeriod: 0s

scheduler: ""

syncPeriod: 30s

kind: KubeProxyConfiguration

metricsBindAddress: 127.0.0.1:10249

mode: "ipvs" # change this line

nodePortAddresses: null

oomScoreAdj: -999

portRange: ""

resourceContainer: /kube-proxy

udpIdleTimeout: 250ms
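The same change can be made without an interactive editor. The following is a sketch only, assuming the field currently reads mode: "" (an empty value); set_ipvs_mode is a hypothetical helper that rewrites that one line and leaves other empty-string fields such as scheduler untouched.

```shell
# Hypothetical helper: rewrite an empty kube-proxy mode to "ipvs" in a
# ConfigMap dump read from stdin. Leading indentation is preserved.
set_ipvs_mode() {
    sed 's/^\([[:space:]]*mode:\)[[:space:]]*""[[:space:]]*$/\1 "ipvs"/'
}

# Sample input: a fragment of config.conf as it might look before the change.
set_ipvs_mode <<'EOF'
    scheduler: ""
    metricsBindAddress: 127.0.0.1:10249
    mode: ""
    nodePortAddresses: null
EOF
```

Against a live cluster the filter would sit in a pipeline such as: kubectl get cm kube-proxy -n kube-system -o yaml | set_ipvs_mode | kubectl replace -f -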

Restart kube-proxy (master node)

# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

pod "kube-proxy-bd68w" deleted

pod "kube-proxy-qq54f" deleted

pod "kube-proxy-z9rp4" deleted

View the logs

# kubectl logs kube-proxy-9zv5x -n kube-system

I1004 07:11:17.538141 1 server_others.go:177] Using ipvs Proxier. # IPVS is in use

W1004 07:11:17.538589 1 proxier.go:381] IPVS scheduler not specified, use rr by default

I1004 07:11:17.540108 1 server.go:555] Version: v1.14.1

I1004 07:11:17.555484 1 conntrack.go:52] Setting nf_conntrack_max to 524288

I1004 07:11:17.555827 1 config.go:102] Starting endpoints config controller

I1004 07:11:17.555899 1 controller_utils.go:1027] Waiting for caches to sync for endpoints config controller

I1004 07:11:17.555927 1 config.go:202] Starting service config controller

I1004 07:11:17.555965 1 controller_utils.go:1027] Waiting for caches to sync for service config controller

I1004 07:11:17.656090 1 controller_utils.go:1034] Caches are synced for service config controller

I1004 07:11:17.656091 1 controller_utils.go:1034] Caches are synced for endpoints config controller

The log contains the message "Using ipvs Proxier", which shows that IPVS mode is enabled
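This check can be automated by extracting the proxier name from the log. A minimal sketch, assuming the message has the form "Using <mode> Proxier" as in the log above; proxy_mode_from_log is a hypothetical helper name.

```shell
# Hypothetical helper: read kube-proxy log lines on stdin and print the
# proxier named in the first "Using <mode> Proxier" message.
# Returns 1 if no such message is found.
proxy_mode_from_log() {
    mode=$(sed -n 's/.*Using \([a-z]*\) Proxier.*/\1/p' | head -n 1)
    [ -n "$mode" ] && printf '%s\n' "$mode"
}

# Sample input: the first two log lines shown above.
proxy_mode_from_log <<'EOF'
I1004 07:11:17.538141 1 server_others.go:177] Using ipvs Proxier.
W1004 07:11:17.538589 1 proxier.go:381] IPVS scheduler not specified, use rr by default
EOF
```

Against a live cluster the usage would be along the lines of: kubectl logs -n kube-system -l k8s-app=kube-proxy --tail=-1 | proxy_mode_from_log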

Test IPVS (master node)

# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.0.1:30099 rr

TCP 172.17.0.1:30188 rr

TCP 172.17.0.1:30301 rr

TCP 172.17.0.1:31000 rr

Scheduling (master node)

View the default value of the Taints field

# kubectl describe node master

……

CreationTimestamp: Fri, 04 Oct 2020 06:02:45 +0000

Taints: node-role.kubernetes.io/master:NoSchedule // the effect is NoSchedule

Unschedulable: false

……

To use the K8S master as a worker Node as well, run the following command

# kubectl taint node master node-role.kubernetes.io/master-

node/master untainted

# kubectl describe node master

……

CreationTimestamp: Fri, 04 Oct 2020 06:02:45 +0000

Taints: <none> // the taint has been removed

Unschedulable: false

……
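To check the taint from a script instead of reading the full description, the Taints line can be extracted on its own. A minimal sketch, assuming a single taint printed on the Taints line as in the output above; node_taints is a hypothetical helper name.

```shell
# Hypothetical helper: read "kubectl describe node" output on stdin and
# print the value of the Taints field (first line only).
node_taints() {
    sed -n 's/^Taints:[[:space:]]*//p'
}

# Sample input: a fragment of the description shown before the taint was removed.
node_taints <<'EOF'
Taints:             node-role.kubernetes.io/master:NoSchedule
Unschedulable:      false
EOF
```

Against a live cluster the usage would be: kubectl describe node master | node_taints. To stop scheduling ordinary Pods onto the master again, the taint can be restored with kubectl taint node master node-role.kubernetes.io/master=:NoSchedule.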