Environment Series: Deploying Atlas 2.0.0 Compatible with CDH 6.2.0

What is Atlas?

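Atlas is a scalable and extensible set of core foundational governance services that enables enterprises to effectively meet their compliance requirements within Hadoop and allows integration with the whole enterprise data ecosystem.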

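Apache Atlas provides open metadata management and governance capabilities that let organizations build a catalog of their data assets, classify and govern those assets, and give data scientists, analysts and the data governance team collaboration capabilities around them.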

Without Atlas

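Table dependencies in a big data platform are hard to track, and metadata management (for example, a Hive lineage graph) has to be developed in-house.

There is no tool for querying table dependencies, which makes it hard to locate errors during business SQL development.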

- Table-to-table lineage

- Column-to-column lineage

1 Atlas Architecture and Principles

2 Installing and Using Atlas

Required components: HDFS, YARN, ZooKeeper, Kafka, HBase, Solr, Hive, and a Python 2.7 environment.

Maven 3.5.0 or later, JDK 1.8.0_151 or later, and Python 2.7 are required.

2.1 Download the 2.0.0 source package and open it in IDEA

2.2 Change the dependency versions in pom.xml to match CDH 6.2.0

<hadoop.version>3.0.0</hadoop.version>

<hbase.version>2.1.0</hbase.version>

<kafka.version>2.1.0</kafka.version>

<zookeeper.version>3.4.5</zookeeper.version>
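If you would rather script the version change than edit pom.xml in IDEA, the following is a minimal sketch using GNU sed. It assumes the four properties are defined in the root pom.xml with each tag on a single line; `set_pom_property` and `apply_cdh620_versions` are hypothetical helper names, not part of Atlas.

```bash
#!/usr/bin/env bash
# Sketch: align the Atlas 2.0.0 pom.xml versions with CDH 6.2.0.
# Assumes GNU sed (in-place -i without a suffix) and one tag per line.

# Replace the text between <name> and </name> on every matching line.
set_pom_property() {
  local pom="$1" name="$2" value="$3"
  sed -i "s|<${name}>[^<]*</${name}>|<${name}>${value}</${name}>|" "$pom"
}

# Apply the four version changes listed above (defaults to ./pom.xml).
apply_cdh620_versions() {
  local pom="${1:-pom.xml}"
  set_pom_property "$pom" hadoop.version 3.0.0
  set_pom_property "$pom" hbase.version 2.1.0
  set_pom_property "$pom" kafka.version 2.1.0
  set_pom_property "$pom" zookeeper.version 3.4.5
}
```

Run `apply_cdh620_versions pom.xml` from the source root and check the result with `grep -n 'version>' pom.xml` before building.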

2.3 Edit the configuration files

apache-atlas-2.0.0-sources/apache-atlas-sources-2.0.0/distro/src/conf/atlas-application.properties

#Integration: HBase settings

atlas.graph.storage.hostname=cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181

#Integration: Solr settings

atlas.graph.index.search.solr.zookeeper-url=cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181/solr

#Integration: Kafka settings

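#false: use the external Kafka (keep comments on their own line; a trailing "#..." would become part of the value)

atlas.notification.embedded=false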

atlas.kafka.zookeeper.connect=cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181

atlas.kafka.bootstrap.servers=cdh01.cm:9092,cdh02.cm:9092,cdh03.cm:9092

atlas.kafka.zookeeper.session.timeout.ms=60000

atlas.kafka.zookeeper.connection.timeout.ms=30000

atlas.kafka.enable.auto.commit=true

#Integration: other settings

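#Access address and port. Changing this value does not change the port the server listens on, which defaults to local port 21000 (set by atlas.server.http.port); note that 21000 conflicts with Impala

atlas.rest.address=http://cdh01.cm:21000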

#If enabled and set to true, the setup steps run when the server starts

atlas.server.run.setup.on.start=false

atlas.audit.hbase.zookeeper.quorum=cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181

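#Integration: add the Hive hook settings (append them at the end of the file)

#Every operation in Hive is captured by the hook, which sends a corresponding event to the Kafka topic Atlas subscribes to; Atlas then generates, stores and manages the metadata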

######### Hive Hook Configs #######

atlas.hook.hive.synchronous=false

atlas.hook.hive.numRetries=3

atlas.hook.hive.queueSize=10000

atlas.cluster.name=primary

#Configure username and password (optional)

#Enable or disable each of the three authentication methods (set each one to either true or false)

atlas.authentication.method.kerberos=true|false

atlas.authentication.method.ldap=true|false

atlas.authentication.method.file=true
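java.util.Properties only treats `#` or `!` at the start of a line as a comment, so a line such as `key=false #note` gives `key` the value `false #note`. Before packaging, a quick grep can list such lines. This is a minimal sketch assuming GNU grep; `find_trailing_comments` is a hypothetical helper name, and it only flags a `#` that follows whitespace.

```bash
#!/usr/bin/env bash
# Sketch: list property lines that carry a trailing "#" comment.
# java.util.Properties treats "#" or "!" as a comment only at line start,
# so anything after the value (including " #...") becomes part of the value.

find_trailing_comments() {
  local file="$1"
  # Skip blank lines and full-line comments, then look for whitespace + "#".
  grep -nE '^[[:space:]]*[^#![:space:]].*[[:space:]]#' "$file"
}
```

Run `find_trailing_comments atlas-application.properties`; no output means no property value carries a trailing comment.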

#vim users-credentials.properties (edit this file)

#>>> original file

#username=group::sha256-password

admin=ADMIN::8c6976e5b5410415bde908bd4dee15dfb167a9c873fc4bb8a81f6f2ab448a918

rangertagsync=RANGER_TAG_SYNC::e3f67240f5117d1753c940dae9eea772d36ed5fe9bd9c94a300e40413f1afb9d

#<<<

#>>> changed to username bigdata123, password bigdata123

#username=group::sha256-password

bigdata123=ADMIN::aa0336d976ba6db36f33f75a20f68dd9035b1e0e2315c331c95c2dc19b2aac13

rangertagsync=RANGER_TAG_SYNC::e3f67240f5117d1753c940dae9eea772d36ed5fe9bd9c94a300e40413f1afb9d

#<<<

#Compute the SHA-256 hash: echo -n "bigdata123"|sha256sum
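The whole `username=GROUP::sha256-password` entry can be generated in one step. This is a minimal sketch assuming GNU coreutils `sha256sum` is available; `atlas_credential` is a hypothetical function name. For example, `atlas_credential admin ADMIN admin` reproduces the default admin line shown above.

```bash
#!/usr/bin/env bash
# Sketch: build a users-credentials.properties entry of the form
#   username=GROUP::sha256(password)
# Uses sha256sum from GNU coreutils.

atlas_credential() {
  local user="$1" group="$2" password="$3" hash
  # printf '%s' avoids a trailing newline, like echo -n
  hash=$(printf '%s' "$password" | sha256sum | awk '{print $1}')
  printf '%s=%s::%s\n' "$user" "$group" "$hash"
}
```

`atlas_credential bigdata123 ADMIN bigdata123` prints the line to use in place of the admin entry.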

apache-atlas-2.0.0-sources/apache-atlas-sources-2.0.0/distro/src/conf/atlas-env.sh

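#Integration: add the HBase setting. The directory below is the HBase configuration directory inside Atlas; the cluster's HBase configuration is linked into it later (see 2.5.1)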

export HBASE_CONF_DIR=/usr/local/src/atlas/apache-atlas-2.0.0/conf/hbase/conf

#export MANAGE_LOCAL_HBASE=false (false: use the external ZooKeeper and HBase)

#export MANAGE_LOCAL_SOLR=false (false: use the external Solr)

#Tune the memory settings to match your production machines

export ATLAS_SERVER_OPTS="-server -XX:SoftRefLRUPolicyMSPerMB=0

-XX:+CMSClassUnloadingEnabled -XX:+UseConcMarkSweepGC

-XX:+CMSParallelRemarkEnabled -XX:+PrintTenuringDistribution

-XX:+HeapDumpOnOutOfMemoryError

-XX:HeapDumpPath=dumps/atlas_server.hprof

-Xloggc:logs/gc-worker.log -verbose:gc

-XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10

-XX:GCLogFileSize=1m -XX:+PrintGCDetails -XX:+PrintHeapAtGC

-XX:+PrintGCTimeStamps"

#Tuning for JDK 1.8 (the settings below require 16 GB of memory)

export ATLAS_SERVER_HEAP="-Xms15360m -Xmx15360m

-XX:MaxNewSize=5120m -XX:MetaspaceSize=100M

-XX:MaxMetaspaceSize=512m"

apache-atlas-2.0.0-sources/apache-atlas-sources-2.0.0/distro/src/conf/atlas-log4j.xml

#Uncomment the following block to enable the performance log

<appender name="perf_appender" class="org.apache.log4j.DailyRollingFileAppender">

<param name="file" value="${atlas.log.dir}/atlas_perf.log" />

<param name="datePattern" value="'.'yyyy-MM-dd" />

<param name="append" value="true" />

<layout class="org.apache.log4j.PatternLayout">

<param name="ConversionPattern" value="%d|%t|%m%n" />

</layout>

</appender>

<logger name="org.apache.atlas.perf" additivity="false">

<level value="debug" />

<appender-ref ref="perf_appender" />

</logger>

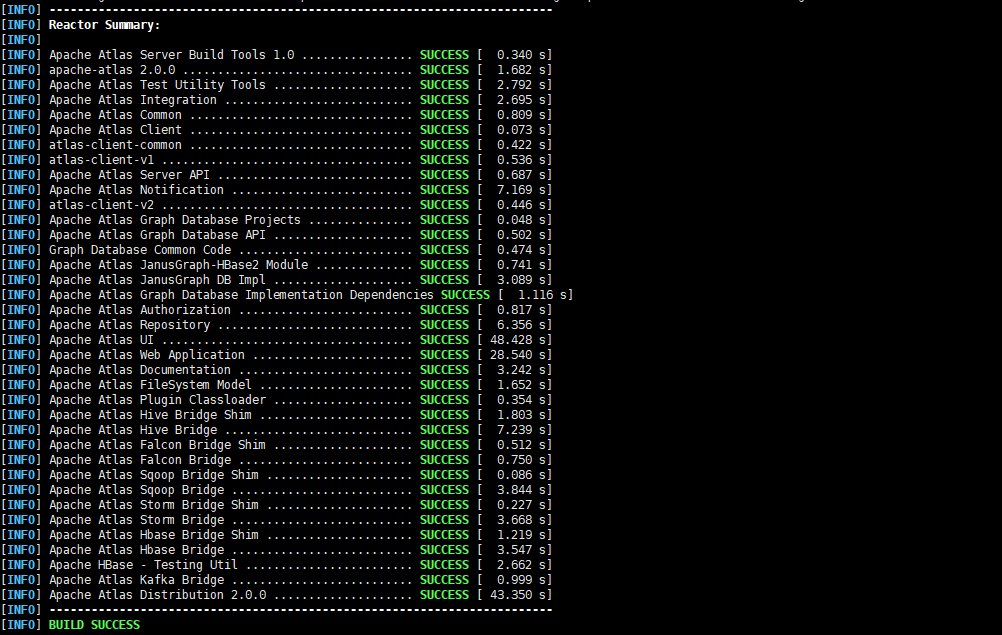

2.4 Build and package

export MAVEN_OPTS="-Xms2g -Xmx2g"

mvn clean -DskipTests install

mvn clean -DskipTests package -Pdist

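If the build fails, rerun it with the -X flag to print debug details and fix the specific cause; it is usually a network problem or a Node proxy issue.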

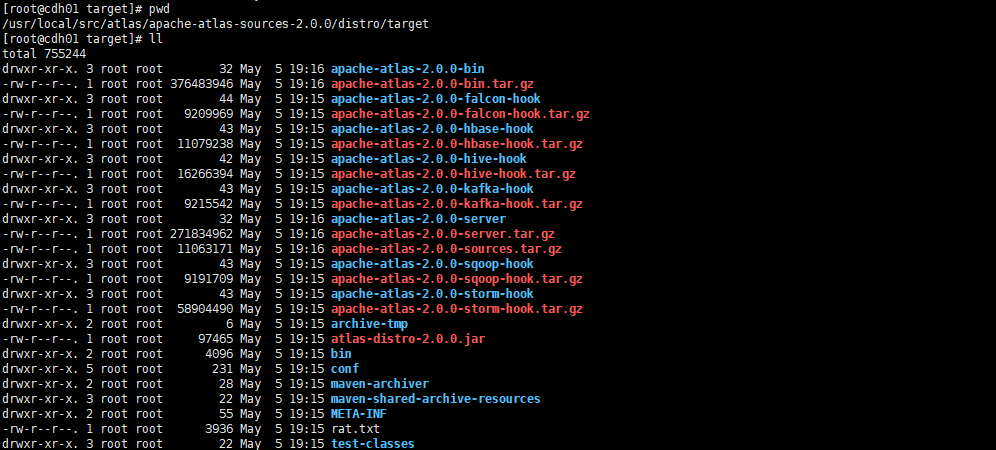

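The build creates the files used to install Apache Atlas under distro/target, including the apache-atlas-2.0.0-bin.tar.gz package used below.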

2.5 Create the installation directory and upload the package

mkdir /usr/local/src/atlas

cd /usr/local/src/atlas

#Copy apache-atlas-2.0.0-bin.tar.gz into the installation directory

tar -zxvf apache-atlas-2.0.0-bin.tar.gz

cd apache-atlas-2.0.0/conf

2.5.1 Integrate HBase

- Link the HBase cluster configuration into apache-atlas-2.0.0/conf/hbase (the linked path must match HBASE_CONF_DIR configured in atlas-env.sh above)

ln -s /etc/hbase/conf/ /usr/local/src/atlas/apache-atlas-2.0.0/conf/hbase/

#ln -s /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hbase/conf/ /usr/local/src/atlas/apache-atlas-2.0.0/conf/hbase/

2.5.2 Install and integrate Solr

http://archive.apache.org/dist/lucene/solr/7.5.0/

The official recommendation is 3 Solr nodes with 32 GB of memory.

- On all nodes

mkdir /usr/local/src/solr

cd /usr/local/src/solr

- On the master node, upload and extract the package

tar -zxvf solr-7.5.0.tgz

cd solr-7.5.0/

- On the master node, edit /usr/local/src/solr/solr-7.5.0/bin/solr.in.sh (e.g. with vim)

ZK_HOST="cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181"

SOLR_HOST="cdh01.cm"

SOLR_PORT=8983

- Distribute Solr from the master node

scp -r /usr/local/src/solr/solr-7.5.0 root@cdh02.cm:/usr/local/src/solr

scp -r /usr/local/src/solr/solr-7.5.0 root@cdh03.cm:/usr/local/src/solr

After distribution, set SOLR_HOST on each machine to that machine's own hostname.
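Editing SOLR_HOST on each node can be scripted. This is a minimal sketch assuming GNU sed and passwordless root SSH (the scp commands above already rely on root SSH access); `set_solr_host` and `fix_remote_solr_hosts` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: set SOLR_HOST on each node after the scp step.
# Assumes GNU sed and passwordless root SSH to every node.

SOLR_IN_SH=/usr/local/src/solr/solr-7.5.0/bin/solr.in.sh

# Rewrite the SOLR_HOST line (commented out or not) in a local solr.in.sh.
set_solr_host() {
  local file="$1" host="$2"
  sed -i "s|^#\?SOLR_HOST=.*|SOLR_HOST=\"${host}\"|" "$file"
}

# Apply the same edit remotely; each host gets its own name as SOLR_HOST.
fix_remote_solr_hosts() {
  local host
  for host in "$@"; do
    ssh "root@${host}" "sed -i 's|^#\\?SOLR_HOST=.*|SOLR_HOST=\"${host}\"|' ${SOLR_IN_SH}"
  done
}
```

For the two copies made above, run `fix_remote_solr_hosts cdh02.cm cdh03.cm` on the master node.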

- Integrate Solr with Atlas

Copy the apache-atlas-2.0.0/conf/solr directory to /usr/local/src/solr/solr-7.5.0/ on every Solr node, then rename it to atlas-solr.

scp -r /usr/local/src/atlas/apache-atlas-2.0.0/conf/solr/ root@cdh01.cm:/usr/local/src/solr/solr-7.5.0/

scp -r /usr/local/src/atlas/apache-atlas-2.0.0/conf/solr/ root@cdh02.cm:/usr/local/src/solr/solr-7.5.0/

scp -r /usr/local/src/atlas/apache-atlas-2.0.0/conf/solr/ root@cdh03.cm:/usr/local/src/solr/solr-7.5.0/

- On every Solr node, run the following and start Solr

cd /usr/local/src/solr/solr-7.5.0/

mv solr/ atlas-solr

#Create the atlas user and grant it ownership (all nodes)

useradd atlas && echo atlas | passwd --stdin atlas

chown -R atlas:atlas /usr/local/src/solr/

#Start Solr on all nodes (cloud mode)

su atlas

/usr/local/src/solr/solr-7.5.0/bin/solr start -c -z cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181 -p 8983

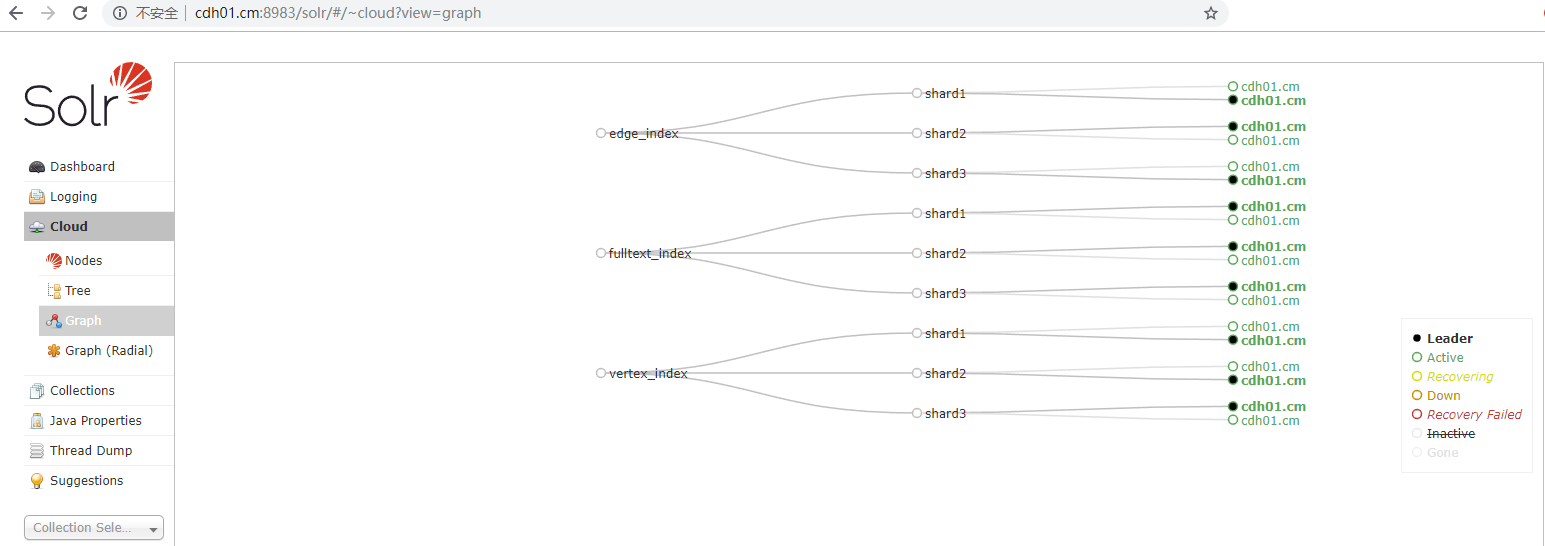

- Create the collections on any one Solr node

/usr/local/src/solr/solr-7.5.0/bin/solr create -c vertex_index -d /usr/local/src/solr/solr-7.5.0/atlas-solr -shards 3 -replicationFactor 2

/usr/local/src/solr/solr-7.5.0/bin/solr create -c edge_index -d /usr/local/src/solr/solr-7.5.0/atlas-solr -shards 3 -replicationFactor 2

/usr/local/src/solr/solr-7.5.0/bin/solr create -c fulltext_index -d /usr/local/src/solr/solr-7.5.0/atlas-solr -shards 3 -replicationFactor 2

#If creation fails, delete the collection with /usr/local/src/solr/solr-7.5.0/bin/solr delete -c ${collection_name}
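The three create commands differ only in the collection name, so they can run in a loop. This is a minimal sketch assuming the Solr and atlas-solr paths used in this guide; `create_atlas_collections`, `SOLR_BIN` and `ATLAS_SOLR_CONF` are hypothetical names, and the loop stops at the first failure so you can delete and retry.

```bash
#!/usr/bin/env bash
# Sketch: create the three Atlas index collections in one loop.
# SOLR_BIN and ATLAS_SOLR_CONF default to the paths used in this guide.

SOLR_BIN="${SOLR_BIN:-/usr/local/src/solr/solr-7.5.0/bin/solr}"
ATLAS_SOLR_CONF="${ATLAS_SOLR_CONF:-/usr/local/src/solr/solr-7.5.0/atlas-solr}"

create_atlas_collections() {
  local shards="${1:-3}" replicas="${2:-2}" name
  for name in vertex_index edge_index fulltext_index; do
    "$SOLR_BIN" create -c "$name" -d "$ATLAS_SOLR_CONF" \
      -shards "$shards" -replicationFactor "$replicas" || return 1
  done
}
```

Run it as the atlas user on one Solr node, e.g. `create_atlas_collections 3 2`.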

#Switch back to root to continue with the remaining configuration

su root

2.5.3 Integrate Kafka

- Create the Kafka topics

kafka-topics --zookeeper cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181 --create --replication-factor 3 --partitions 3 --topic _HOATLASOK

kafka-topics --zookeeper cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181 --create --replication-factor 3 --partitions 3 --topic ATLAS_ENTITIES

kafka-topics --zookeeper cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181 --create --replication-factor 3 --partitions 3 --topic ATLAS_HOOK
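The three topic commands can also be run in a loop. This is a minimal sketch assuming the CDH `kafka-topics` wrapper is on PATH; `create_atlas_topics`, `list_atlas_topics`, `KAFKA_TOPICS` and `ZK_QUORUM` are hypothetical names, and the topic names are taken unchanged from the commands above.

```bash
#!/usr/bin/env bash
# Sketch: create the topics used in this guide in one loop.
# KAFKA_TOPICS defaults to the CDH "kafka-topics" wrapper on PATH.

KAFKA_TOPICS="${KAFKA_TOPICS:-kafka-topics}"
ZK_QUORUM="cdh01.cm:2181,cdh02.cm:2181,cdh03.cm:2181"

create_atlas_topics() {
  local topic
  for topic in _HOATLASOK ATLAS_ENTITIES ATLAS_HOOK; do
    "$KAFKA_TOPICS" --zookeeper "$ZK_QUORUM" --create \
      --replication-factor 3 --partitions 3 --topic "$topic" || return 1
  done
}

# List the existing topics to confirm the result.
list_atlas_topics() {
  "$KAFKA_TOPICS" --zookeeper "$ZK_QUORUM" --list
}
```

After `create_atlas_topics`, `list_atlas_topics` should show all three names.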

2.5.4 Integrate Hive

- Add the atlas-application.properties configuration file to atlas-plugin-classloader-2.0.0.jar

#Run zip from this directory so the file is added at the top level of the jar

cd /usr/local/src/atlas/apache-atlas-2.0.0/conf

zip -u /usr/local/src/atlas/apache-atlas-2.0.0/hook/hive/atlas-plugin-classloader-2.0.0.jar atlas-application.properties

- Edit hive-site.xml

<property>

<name>hive.exec.post.hooks</name>

<value>org.apache.atlas.hive.hook.HiveHook</value>

</property>

- Edit the Gateway Client Environment Advanced Configuration Snippet (Safety Valve) for hive-env.sh

HIVE_AUX_JARS_PATH=/usr/local/src/atlas/apache-atlas-2.0.0/hook/hive

- 修改 HIVE_AUX_JARS_PATH

- Edit the HiveServer2 Advanced Configuration Snippet (Safety Valve) for hive-site.xml

<property>

<name>hive.exec.post.hooks</name>

<value>org.apache.atlas.hive.hook.HiveHook</value>

</property>

<property>

<name>hive.reloadable.aux.jars.path</name>

<value>/usr/local/src/atlas/apache-atlas-2.0.0/hook/hive</value>

</property>

- Edit the HiveServer2 Environment Advanced Configuration Snippet (Safety Valve)

HIVE_AUX_JARS_PATH=/usr/local/src/atlas/apache-atlas-2.0.0/hook/hive

- Copy the configured Atlas package to every Hive node, then restart the cluster

scp -r /usr/local/src/atlas/apache-atlas-2.0.0 root@cdh02.cm:/usr/local/src/atlas/

scp -r /usr/local/src/atlas/apache-atlas-2.0.0 root@cdh03.cm:/usr/local/src/atlas/

Restart the cluster.

- Copy the Atlas configuration file to /etc/hive/conf on every cluster node

scp /usr/local/src/atlas/apache-atlas-2.0.0/conf/atlas-application.properties root@cdh01.cm:/etc/hive/conf

scp /usr/local/src/atlas/apache-atlas-2.0.0/conf/atlas-application.properties root@cdh02.cm:/etc/hive/conf

scp /usr/local/src/atlas/apache-atlas-2.0.0/conf/atlas-application.properties root@cdh03.cm:/etc/hive/conf
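Both copy steps can be wrapped in loops over the node list. This is a minimal sketch assuming passwordless root SSH and the paths used in this guide; `push_atlas`, `push_atlas_conf` and `ATLAS_HOME` are hypothetical names. The `mkdir -p` is an added precaution so the target parent directory exists before the recursive copy.

```bash
#!/usr/bin/env bash
# Sketch: copy the Atlas directory and atlas-application.properties to nodes.
# Assumes passwordless root SSH; pass the target hostnames as arguments.

ATLAS_HOME=/usr/local/src/atlas/apache-atlas-2.0.0

# Copy the whole configured Atlas directory (hook jars included) to each host.
push_atlas() {
  local host
  for host in "$@"; do
    # Create the parent directory first so the copy lands where expected
    ssh "root@${host}" "mkdir -p /usr/local/src/atlas" || return 1
    scp -r "$ATLAS_HOME" "root@${host}:/usr/local/src/atlas/" || return 1
  done
}

# Copy only atlas-application.properties into each host's Hive conf directory.
push_atlas_conf() {
  local host
  for host in "$@"; do
    scp "$ATLAS_HOME/conf/atlas-application.properties" \
      "root@${host}:/etc/hive/conf" || return 1
  done
}
```

Typical order: `push_atlas cdh02.cm cdh03.cm`, restart the cluster, then `push_atlas_conf cdh01.cm cdh02.cm cdh03.cm`.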

2.6 Start Atlas

#Start

./bin/atlas_start.py

#Stop: ./bin/atlas_stop.py

Watch the logs for errors; the main log is logs/application.log.
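Besides watching application.log, you can poll the REST API until the server answers. This is a minimal sketch assuming curl is installed, file-based authentication is enabled, and Atlas listens on the default port 21000; `wait_for_atlas` is a hypothetical function name, and it checks the `/api/atlas/admin/version` endpoint.

```bash
#!/usr/bin/env bash
# Sketch: poll the Atlas REST API until the server answers or time runs out.
# Assumes curl and basic (file-based) authentication.

wait_for_atlas() {
  local url="${1:-http://cdh01.cm:21000}" user="${2:-admin}" pass="${3:-admin}"
  local timeout="${4:-300}" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # The version endpoint answers only once the server is fully started
    if curl -sf --max-time 10 -u "${user}:${pass}" \
        "${url}/api/atlas/admin/version" >/dev/null 2>&1; then
      echo "Atlas is up at ${url}"
      return 0
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "Atlas did not respond within ${timeout}s; check logs/application.log" >&2
  return 1
}
```

Example: `wait_for_atlas http://cdh01.cm:21000 bigdata123 bigdata123 600` if you changed the credentials as above.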

2.7 Import Hive metadata into Atlas

- Add the Hive environment variables on all nodes

vim /etc/profile

#>>>

#hive

export HIVE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive

export HIVE_CONF_DIR=/etc/hive/conf

export PATH=$HIVE_HOME/bin:$PATH

#<<<

source /etc/profile

- Run the Atlas import script

./bin/import-hive.sh

#Username: admin; password: admin (use your own if you changed them)

Now give it a try.