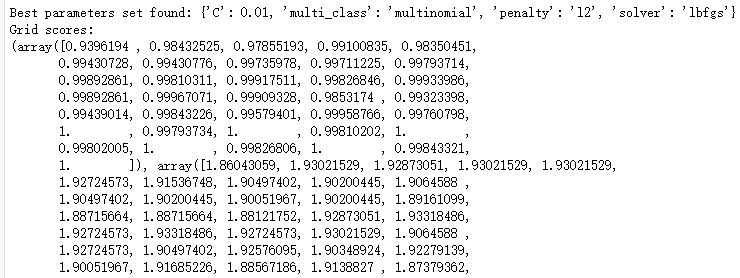

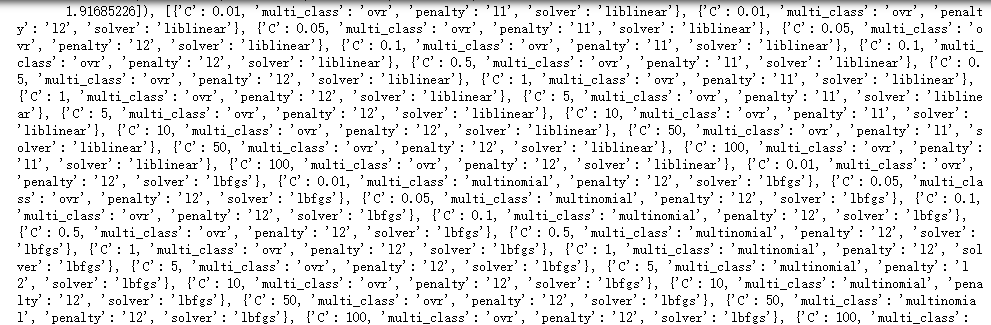

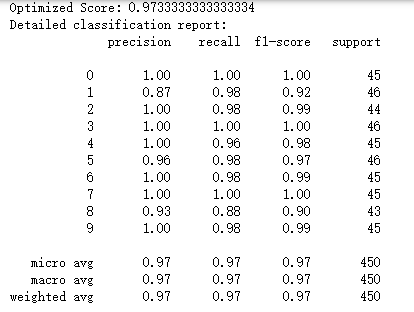

    import scipy
    from sklearn.datasets import load_digits
    from sklearn.metrics import classification_report
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import train_test_split
    from sklearn.model_selection import GridSearchCV, RandomizedSearchCV


    # Model selection: exhaustive (brute-force) hyperparameter search with GridSearchCV
    def test_GridSearchCV():
        '''
        Demonstrate GridSearchCV usage, with LogisticRegression as the classifier.
        The search mainly tunes the C, penalty, and multi_class parameters.
        '''
        # Load the data
        digits = load_digits()
        X_train, X_test, y_train, y_test = train_test_split(
            digits.data, digits.target,
            test_size=0.25, random_state=0, stratify=digits.target)

        # Parameter grid to search.
        # Note: multi_class is deprecated in recent scikit-learn releases, so this
        # grid assumes a version that still accepts it.
        tuned_parameters = [
            {'penalty': ['l1', 'l2'],
             'C': [0.01, 0.05, 0.1, 0.5, 1, 5, 10, 50, 100],
             'solver': ['liblinear'],
             'multi_class': ['ovr']},
            {'penalty': ['l2'],
             'C': [0.01, 0.05, 0.1, 0.5, 1, 5, 10, 50, 100],
             'solver': ['lbfgs'],
             'multi_class': ['ovr', 'multinomial']},
        ]

        # return_train_score=True is required for cv_results_ to contain
        # "mean_train_score"; without it the lookup below raises KeyError.
        clf = GridSearchCV(LogisticRegression(tol=1e-6), tuned_parameters,
                           cv=10, return_train_score=True)
        clf.fit(X_train, y_train)
        print("Best parameters set found:", clf.best_params_)

        print("Grid scores:")
        results = clf.cv_results_
        for params, mean_train, mean_test, std_test in zip(
                results["params"], results["mean_train_score"],
                results["mean_test_score"], results["std_test_score"]):
            # Mean CV score with a +/- two-standard-deviation band
            print("train %0.3f, test %0.3f (+/-%0.03f) for %s"
                  % (mean_train, mean_test, std_test * 2, params))

        print("Optimized Score:", clf.score(X_test, y_test))
        print("Detailed classification report:")
        y_true, y_pred = y_test, clf.predict(X_test)
        print(classification_report(y_true, y_pred))


    # Run the example
    test_GridSearchCV()
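Because the search is exhaustive, it helps to know how many models it will train before running it. The following is a minimal sketch, not part of the original listing, that counts the candidates in the same grid with `ParameterGrid` from `sklearn.model_selection`. The names `C_VALUES`, `n_candidates`, and `n_fits` are introduced here for illustration only, and the fold count of 10 is taken from the `cv=10` argument above.

```python
from sklearn.model_selection import ParameterGrid

# Same grid as in the listing above; C_VALUES is a helper name used only here.
C_VALUES = [0.01, 0.05, 0.1, 0.5, 1, 5, 10, 50, 100]
tuned_parameters = [
    {'penalty': ['l1', 'l2'], 'C': C_VALUES,
     'solver': ['liblinear'], 'multi_class': ['ovr']},
    {'penalty': ['l2'], 'C': C_VALUES,
     'solver': ['lbfgs'], 'multi_class': ['ovr', 'multinomial']},
]

# ParameterGrid expands each dict into its Cartesian product and
# concatenates the sub-grids, which is what GridSearchCV iterates over.
n_candidates = len(ParameterGrid(tuned_parameters))

# Each candidate is fitted once per cross-validation fold (cv=10 above).
n_fits = n_candidates * 10

print("candidates:", n_candidates)  # 2*9 + 9*2 = 36
print("cross-validation fits:", n_fits)  # 360
```

With the default `refit=True`, GridSearchCV fits the best candidate once more on the whole training set, so the total is one fit higher than the count printed here. Adding a value to any list multiplies the size of its sub-grid, which is why grids grow quickly.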