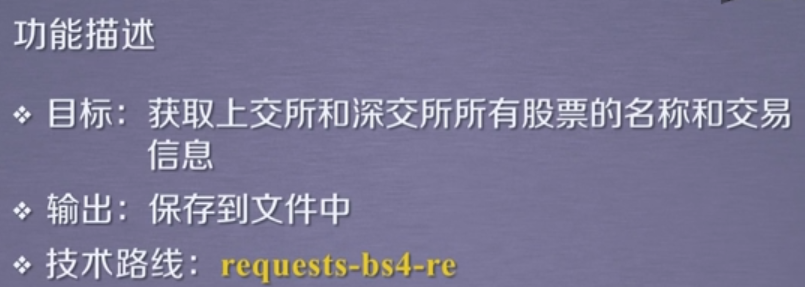

Method 1: crawl the data using requests, bs4, and re.

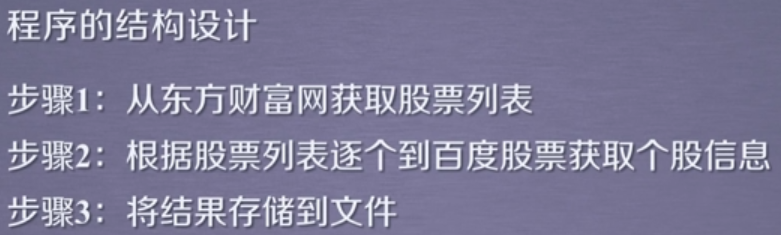

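Viewing the page source shows that the data on Eastmoney (东方财富网) is generated by JavaScript, so the script takes only the list of stock codes from Eastmoney and fetches each stock's details from Baidu Gupiao.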

Each stock's fields are stored in a dictionary, and the dictionary is then written to a file.
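The dictionary-then-file step can be sketched in isolation. This is a minimal sketch, not the script's own approach: it assumes one JSON object per line instead of `str(dict)`, so that the file can be parsed back later. The helper names `append_record` and `read_records` and the sample values are hypothetical.

```python
import json
import os
import tempfile


def append_record(path, record):
    """Append one stock record to `path` as a single JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        # ensure_ascii=False keeps Chinese keys such as '股票名称' readable.
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_records(path):
    """Read back every record written by append_record."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    # Hypothetical sample values, for illustration only.
    demo_path = os.path.join(tempfile.mkdtemp(), "stocks.jsonl")
    append_record(demo_path, {"股票名称": "示例股票", "今开": "10.00"})
    append_record(demo_path, {"股票名称": "另一只", "今开": "20.50"})
    print(read_records(demo_path))
```

Opening the file in append mode for each record mirrors the script: a crash partway through the crawl still leaves the records written so far on disk.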

    # CrawBaiduStocksA.py
    import re
    import traceback

    import requests
    from bs4 import BeautifulSoup


    def getHTMLText(url):
        """Return the page text, or an empty string if the request fails."""
        try:
            r = requests.get(url)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            return r.text
        except requests.RequestException:
            return ""


    def getStockList(lst, stockURL):
        """Collect stock codes (e.g. sh600000, sz000001) from the links on the list page."""
        html = getHTMLText(stockURL)
        soup = BeautifulSoup(html, 'html.parser')
        for a in soup.find_all('a'):
            try:
                href = a.attrs['href']
                # "sh" or "sz" followed by exactly six digits
                lst.append(re.findall(r"[s][hz]\d{6}", href)[0])
            except (KeyError, IndexError):
                # The link has no href, or the href contains no stock code.
                continue


    def getStockInfo(lst, stockURL, fpath):
        """Fetch each stock's page, parse its fields into a dict, and append it to fpath."""
        for stock in lst:
            url = stockURL + stock + ".html"
            html = getHTMLText(url)
            try:
                if html == "":
                    continue
                infoDict = {}
                soup = BeautifulSoup(html, 'html.parser')
                stockInfo = soup.find('div', attrs={'class': 'stock-bets'})

                name = stockInfo.find_all(attrs={'class': 'bets-name'})[0]
                infoDict.update({'股票名称': name.text.split()[0]})

                # <dt> elements hold the field names, <dd> elements the values.
                keyList = stockInfo.find_all('dt')
                valueList = stockInfo.find_all('dd')
                for i in range(len(keyList)):
                    key = keyList[i].text
                    val = valueList[i].text
                    infoDict[key] = val

                # One dictionary per line.
                with open(fpath, 'a', encoding='utf-8') as f:
                    f.write(str(infoDict) + '\n')
            except Exception:
                traceback.print_exc()
                continue


    def main():
        stock_list_url = 'https://quote.eastmoney.com/stocklist.html'
        stock_info_url = 'https://gupiao.baidu.com/stock/'
        output_file = 'D:/BaiduStockInfo.txt'
        slist = []
        getStockList(slist, stock_list_url)
        getStockInfo(slist, stock_info_url, output_file)


    if __name__ == "__main__":
        main()
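The stock-code matching used in `getStockList` can be checked offline, without any network request. The following is a minimal sketch under stated assumptions: `extract_stock_codes` is a hypothetical helper, and the sample hrefs are made up for illustration rather than taken from the real Eastmoney page.

```python
import re

# "sh" or "sz" followed by exactly six digits, e.g. sh600000.
STOCK_CODE = re.compile(r"s[hz]\d{6}")


def extract_stock_codes(hrefs):
    """Return the first stock code found in each href, skipping hrefs without one."""
    codes = []
    for href in hrefs:
        match = STOCK_CODE.search(href)
        if match:
            codes.append(match.group(0))
    return codes


if __name__ == "__main__":
    # Hypothetical hrefs, for illustration only.
    sample = [
        "http://quote.eastmoney.com/sh600000.html",
        "http://quote.eastmoney.com/sz000001.html",
        "http://quote.eastmoney.com/center/",
    ]
    print(extract_stock_codes(sample))
```

Without the backslash in `\d`, the pattern would look for a literal run of six `d` characters and match no stock link at all, so a check like this is a quick way to catch that mistake before crawling.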