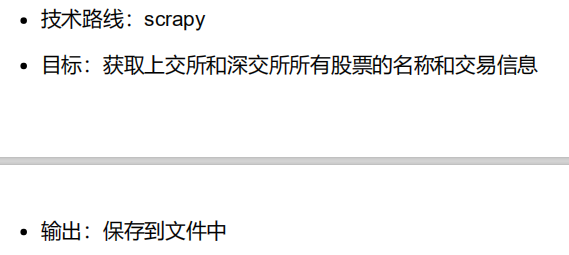

Feature description

Get the stock list from:

http://quote.eastmoney.com/stock_list.html

Get individual stock information from:

https://www.laohu8.com/stock/
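The spider connects these two sources by taking a six-digit stock code from each link on the Eastmoney list page and appending it to the Laohu8 base URL. Below is a minimal stdlib sketch of that step. It assumes list-page links look like the hypothetical `http://quote.eastmoney.com/sh600519.html`, and `build_stock_urls` is an illustrative helper, not part of the project:

```python
import re

LAOHU_BASE = "https://www.laohu8.com/stock/"


def build_stock_urls(hrefs):
    """Map every href that contains a 6-digit code to a Laohu8 stock page URL."""
    urls = []
    for href in hrefs:
        match = re.search(r"\d{6}", href)  # first run of six digits, if any
        if match:
            urls.append(LAOHU_BASE + match.group())
    return urls


# Hypothetical hrefs; the real link format on the list page may differ.
print(build_stock_urls([
    "http://quote.eastmoney.com/sh600519.html",
    "http://quote.eastmoney.com/center.html",
]))  # → ['https://www.laohu8.com/stock/600519']
```

Links without a code are skipped silently. This is the same effect the spider gets from catching the failed `re.findall(...)[0]` lookup.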

Steps

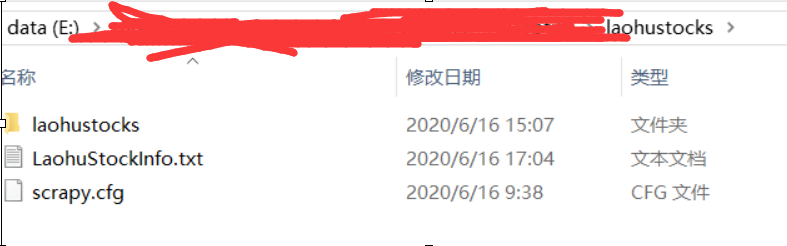

Step 1: Create the project and the Spider template

> scrapy startproject laohustocks

> cd laohustocks

> scrapy genspider stocks laohu

> Then edit spiders/stocks.py.

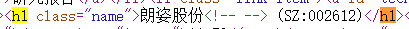

Step 2: Write the Spider

Configure the stocks.py file:

Modify how the returned pages are processed.

Modify how requests for newly discovered URLs are handled (stocks.py).

```python
# -*- coding: utf-8 -*-
import collections
import re

import scrapy


class StocksSpider(scrapy.Spider):
    name = 'stocks'
    # allowed_domains = ['laohu']  # left disabled: pages come from quote.eastmoney.com
    #                                and www.laohu8.com, and 'laohu' matches neither
    start_urls = ['http://quote.eastmoney.com/stock_list.html']

    def parse(self, response):
        # Every link on the list page; only those containing a 6-digit code are followed
        for href in response.css('a::attr(href)').extract():
            try:
                stock = re.findall(r'\d{6}', href)[0]
            except IndexError:
                continue  # link without a stock code
            url = 'https://www.laohu8.com/stock/' + stock
            yield scrapy.Request(url, callback=self.parse_stock)

    def parse_stock(self, response):
        # OrderedDict keeps the fields in the order they are inserted
        infodict = collections.OrderedDict()
        name = response.css('.name').extract()[0]
        print('name: ', name)
        # '股票名称' means "stock name": "<name text>-<code inside parentheses>"
        infodict.update({'股票名称': re.findall(r'>.*?<', name)[0][1:-1]
                         + '-' + re.findall(r'\(.*\)', name)[0][1:-1]})
        stocksinfo = response.css('.detail-data')
        keylist = stocksinfo.css('dt').extract()
        valuelist = stocksinfo.css('dd').extract()
        for i in range(len(keylist)):
            key = re.findall(r'>.*</dt>', keylist[i])[0][1:-5]
            try:
                value = re.findall(r'>.*</dd>', valuelist[i])[0][1:-5]
            except IndexError:
                value = '--'  # no matching <dd> for this <dt>
            print('key : value', key, value)
            infodict[key] = value
        yield infodict
```
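The stock-name line in `parse_stock` depends on two regexes: the first text node after a tag, and the text inside escaped parentheses `\(.*\)`. The sketch below checks that logic offline without running a crawl. The markup `<div class="name">Moutai<span>(600519)</span></div>` is a hypothetical stand-in for the real `.name` element, and `format_stock_name` is an illustrative helper:

```python
import re


def format_stock_name(name_html):
    """Turn '...>NAME<span>(CODE)</span>...' into 'NAME-CODE'.

    Mirrors the two regexes in parse_stock, but returns None when the markup
    does not match (the spider's version raises IndexError instead).
    """
    text = re.search(r">(.*?)<", name_html)   # first text node after a tag
    code = re.search(r"\((.*)\)", name_html)  # text inside the parentheses
    if not (text and code):
        return None
    return text.group(1) + "-" + code.group(1)


print(format_stock_name('<div class="name">Moutai<span>(600519)</span></div>'))
# → Moutai-600519
```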

The key selector lines in `parse_stock`:

```python
name = response.css('.name').extract()[0]
print('name: ', name)
stocksinfo = response.css('.detail-data')
keylist = stocksinfo.css('dt').extract()
valuelist = stocksinfo.css('dd').extract()
```
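The loop in `parse_stock` pairs the i-th `<dt>` with the i-th `<dd>` and falls back to `'--'` when a value is missing. The stdlib-only sketch below reproduces that pairing so the regexes can be tested on saved HTML fragments. `pair_details` and the sample markup are hypothetical:

```python
import re
from collections import OrderedDict


def pair_details(keylist, valuelist, missing="--"):
    """Zip <dt> keys with <dd> values by position; pad missing values."""
    info = OrderedDict()
    for i, dt_html in enumerate(keylist):
        key_match = re.search(r">(.*)</dt>", dt_html)
        if key_match is None:
            continue  # skip malformed <dt> entries
        value = missing
        if i < len(valuelist):
            value_match = re.search(r">(.*)</dd>", valuelist[i])
            if value_match:
                value = value_match.group(1)
        info[key_match.group(1)] = value
    return info


print(pair_details(["<dt>Open</dt>", "<dt>High</dt>"], ["<dd>1700.00</dd>"]))
```

Pairing by position assumes the page lists every `<dt>` with its `<dd>` in the same order. If a value in the middle is missing, later keys would pick up the wrong values, so spot-checking against the live page is worthwhile.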

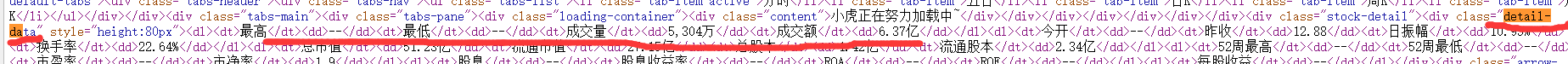

Step 3: Write the Item Pipelines

Configure the pipelines.py file.

Define the class that processes scraped items (Scrapy Items) (pipelines.py):

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


class LaohustocksPipeline(object):
    def process_item(self, item, spider):
        return item


class LaohustocksInfoPipeline(object):
    def open_spider(self, spider):
        # Opened once when the spider starts; utf-8 so Chinese text is written safely
        self.f = open('LaohuStockInfo.txt', 'w', encoding='utf-8')

    def close_spider(self, spider):
        self.f.close()

    def process_item(self, item, spider):
        try:
            # One item per line, written as the repr of a plain dict
            line = str(dict(item)) + '\n'
            self.f.write(line)
        except Exception:
            pass  # skip items that cannot be written
        return item
```
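If you want machine-readable output, a JSON Lines variant of the pipeline is one option. This is a sketch, not the author's pipeline. The class name `LaohustocksJsonLinesPipeline` and the output file name are hypothetical, and the class would need its own entry in `ITEM_PIPELINES`. Scrapy's built-in feed exports, e.g. `scrapy crawl stocks -o items.jl`, may cover this without any custom code.

```python
import json


class LaohustocksJsonLinesPipeline:
    """Hypothetical alternative to LaohustocksInfoPipeline: one JSON object per line."""

    output_path = "LaohuStockInfo.jsonl"  # hypothetical file name

    def open_spider(self, spider):
        # utf-8 so Chinese field names and values are written correctly on any OS
        self.f = open(self.output_path, "w", encoding="utf-8")

    def close_spider(self, spider):
        self.f.close()

    def process_item(self, item, spider):
        # ensure_ascii=False keeps Chinese text readable instead of \uXXXX escapes
        self.f.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item  # hand the item on to any later pipeline
```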

Step 4: Configure the ITEM_PIPELINES option (settings.py)

```python
# -*- coding: utf-8 -*-

# Scrapy settings for laohustocks project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'laohustocks'

SPIDER_MODULES = ['laohustocks.spiders']
NEWSPIDER_MODULE = 'laohustocks.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'laohustocks (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'laohustocks.middlewares.LaohustocksSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'laohustocks.middlewares.LaohustocksDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'laohustocks.pipelines.LaohustocksInfoPipeline': 300,
}  # must match your own pipeline classes and the order you want them to run

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```
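The integer attached to each pipeline controls the execution order. Scrapy passes items through pipelines from the lowest value to the highest, and values are customarily kept in the 0-1000 range. The sketch below illustrates that ordering rule. The second pipeline entry is hypothetical and only there for illustration, and `pipeline_order` is not a Scrapy function:

```python
ITEM_PIPELINES = {
    "laohustocks.pipelines.LaohustocksInfoPipeline": 300,
    # Hypothetical second pipeline, for illustration only
    "laohustocks.pipelines.LaohustocksJsonLinesPipeline": 400,
}


def pipeline_order(pipelines):
    """Return pipeline paths in the order Scrapy would run them (ascending value)."""
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


print(pipeline_order(ITEM_PIPELINES))
# → ['laohustocks.pipelines.LaohustocksInfoPipeline', 'laohustocks.pipelines.LaohustocksJsonLinesPipeline']
```

Because each `process_item` returns the item, a lower-numbered pipeline hands its output to the next one in this order.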

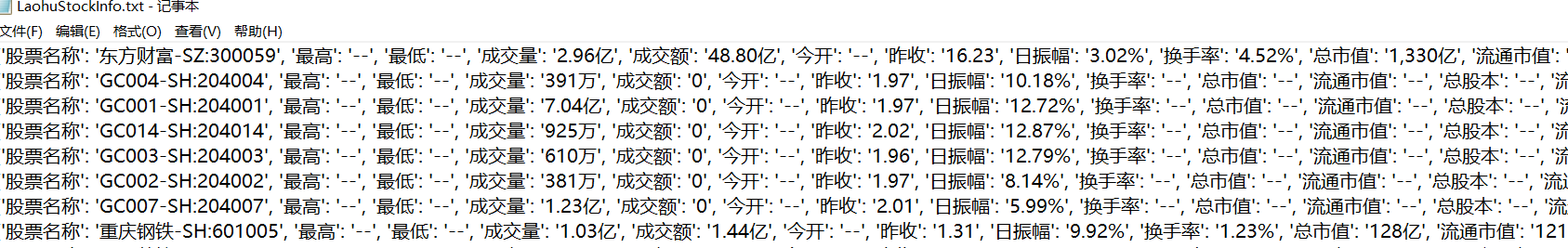

Part of the scraped data
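`LaohustocksInfoPipeline` writes each item as `str(dict(item))`, so every record is a Python dict literal rather than JSON. That means `ast.literal_eval`, not `json.loads`, is the safe way to load it back. The sketch below assumes one record per line and a UTF-8 file, and `load_stock_records` is a hypothetical helper:

```python
import ast


def load_stock_records(path):
    """Parse a file in which each non-empty line is str(dict) of one scraped item."""
    records = []
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if line:  # ignore blank lines
                # literal_eval only accepts literals, so it never executes code
                records.append(ast.literal_eval(line))
    return records
```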