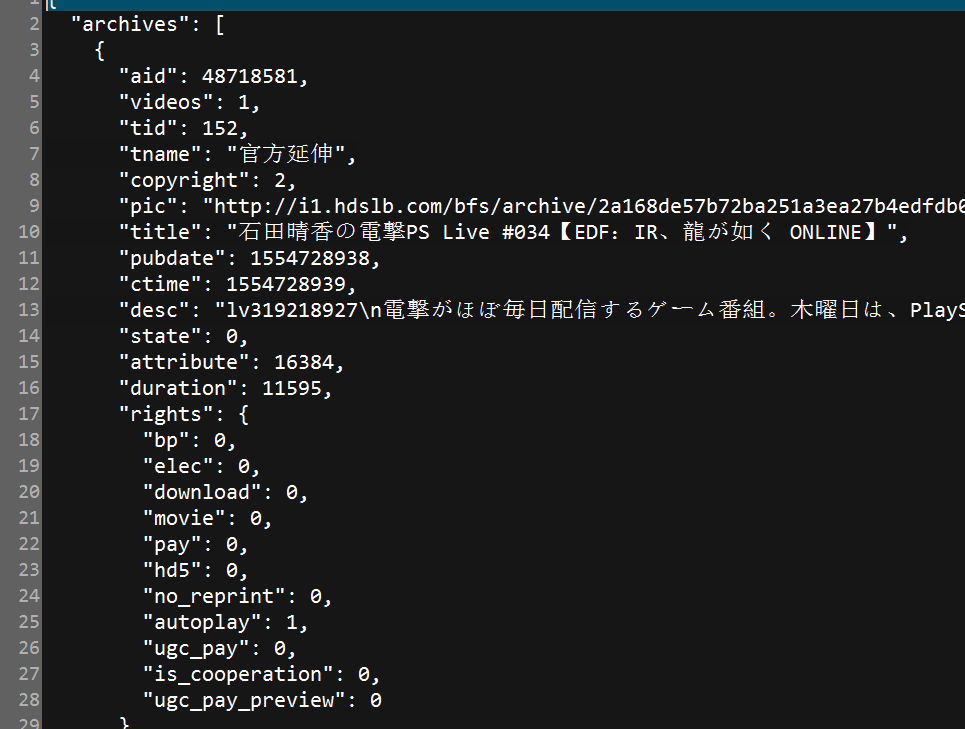

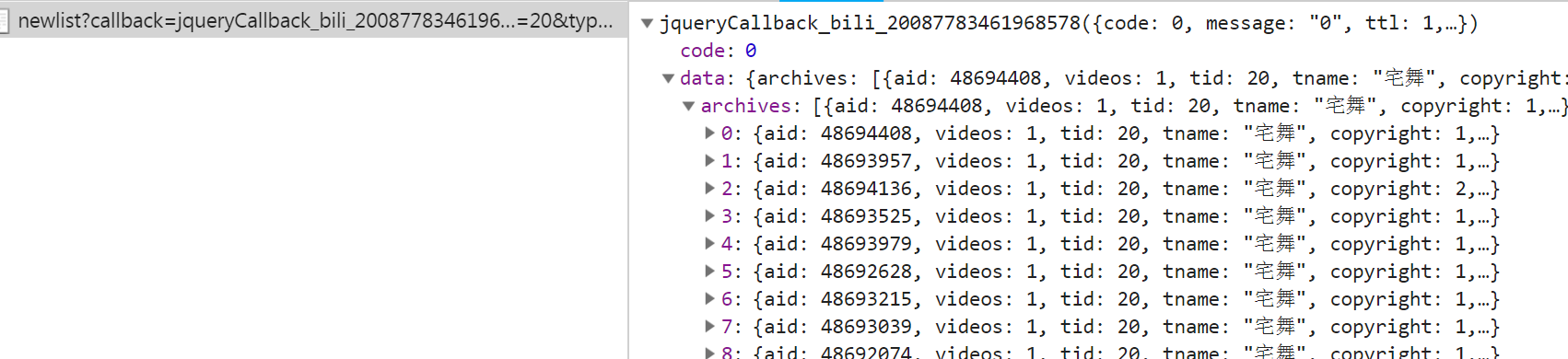

抓包时发现子菜单请求数据时一般需要rid,但的确存在一些如游戏->游戏赛事不使用rid,对于这种未进行处理,此外rid一般在主菜单的响应中,但有的如番剧这种,rid在子菜单的url中,此外返回的data中含有页数相关信息,可以据此定义爬取的页面数量

1 # -*- coding: utf-8 -*-

2 # @author: Tele

3 # @Time : 2019/04/08 下午 1:01

4 import requests

5 import json

6 import os

7 import re

8 import shutil

9 from lxml import etree

10

11

12 # 爬取每个菜单的前5页内容

13 class BiliSplider:

14 def __init__(self, save_dir, menu_list):

15 self.target = menu_list

16 self.url_temp = "https://www.bilibili.com/"

17 self.headers = {

18 "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36",

19 # "Cookie": "LIVE_BUVID=AUTO6715546997211617; buvid3=07192BD6-2288-4BA5-9259-8E0BF6381C9347193infoc; stardustvideo=1; CURRENT_FNVAL=16; sid=l0fnfa5e; rpdid=bfAHHkDF:cq6flbmZ:Ohzhw:1Hdog8",

20 }

21 self.proxies = {

22 "http": "http://61.190.102.50:15845"

23 }

24 self.father_dir = save_dir

25

26 def get_menu_list(self):

27 regex = re.compile("//")

28 response = requests.get(self.url_temp, headers=self.headers)

29 html_element = etree.HTML(response.content)

30 nav_menu_list = html_element.xpath("//div[@id='primary_menu']/ul[@class='nav-menu']/li/a")

31

32 menu_list = list()

33 for item in nav_menu_list:

34 menu = dict()

35 title = item.xpath("./*/text()")

36 menu["title"] = title[0] if len(title) > 0 else None

37 href = item.xpath("./@href")

38 menu["href"] = "https://" + regex.sub("", href[0]) if len(href) > 0 else None

39

40 # 子菜单

41 submenu_list = list()

42 sub_nav_list = item.xpath("./../ul[@class='sub-nav']/li")

43 if len(sub_nav_list) > 0:

44 for sub in sub_nav_list:

45 submenu = dict()

46 sub_title = sub.xpath("./a/span/text()")

47 submenu["title"] = sub_title[0] if len(sub_title) > 0 else None

48 sub_href = sub.xpath("./a/@href")

49 submenu["href"] = "https://" + regex.sub("", sub_href[0]) if len(sub_href) > 0 else None

50 submenu_list.append(submenu)

51 menu["submenu_list"] = submenu_list if len(submenu_list) > 0 else None

52 menu_list.append(menu)

53 return menu_list

54

55 # rid=tid

56 def parse_index_url(self, url):

57 result_list = list()

58 # 正则匹配

59 regex = re.compile("<script>window.__INITIAL_STATE__=(.*)</script>")

60 response = requests.get(url, headers=self.headers)

61 result = regex.findall(response.content.decode())

62 temp = re.compile("(.*);(function").findall(result[0]) if len(result) > 0 else None

63 sub_list = json.loads(temp[0])["config"]["sub"] if temp else list()

64 if len(sub_list) > 0:

65 for sub in sub_list:

66 # 一些子菜单没有rid,需要请求不同的url,暂不处理

67 if "tid" in sub:

68 if sub["tid"]:

69 sub_menu = dict()

70 sub_menu["rid"] = sub["tid"] if sub["tid"] else None

71 sub_menu["title"] = sub["name"] if sub["name"] else None

72 result_list.append(sub_menu)

73 else:

74 pass

75

76 return result_list

77

78 # 最新动态 region?callback

79 # 数据 newlist?callback

80 def parse_sub_url(self, item):

81 self.headers["Referer"] = item["referer"]

82 url_pattern = "https://api.bilibili.com/x/web-interface/newlist?rid={}&type=0&pn={}&ps=20"

83

84 # 每个菜单爬取前5页

85 for i in range(1, 6):

86 data = dict()

87 url = url_pattern.format(item["rid"], i)

88 print(url)

89 try:

90 response = requests.get(url, headers=self.headers, proxies=self.proxies, timeout=10)

91 except:

92 return

93 if response.status_code == 200:

94 data["content"] = json.loads(response.content.decode())["data"]

95 data["title"] = item["title"]

96 data["index"] = i

97 data["menu"] = item["menu"]

98 # 保存数据

99 self.save_data(data)

100 else:

101 print("请求超时") # 一般是403,被封IP了

102

103 def save_data(self, data):

104 if len(data["content"]) == 0:

105 return

106 parent_path = self.father_dir + "/" + data["menu"] + "/" + data["title"]

107 if not os.path.exists(parent_path):

108 os.makedirs(parent_path)

109 file_dir = parent_path + "/" + "第" + str(data["index"]) + "页.txt"

110

111 # 保存

112 with open(file_dir, "w", encoding="utf-8") as file:

113 file.write(json.dumps(data["content"], ensure_ascii=False, indent=2))

114

115 def run(self):

116 # 清除之前保存的数据

117 if os.path.exists(self.father_dir):

118 shutil.rmtree(self.father_dir)

119

120 menu_list = self.get_menu_list()

121 menu_info = list()

122 # 获得目标菜单信息

123 # 特殊列表,一些菜单的rid必须从子菜单的url中获得

124 special_list = list()

125 for menu in menu_list:

126 for t in self.target:

127 if menu["title"] == t:

128 if menu["title"] == "番剧" or menu["title"] == "国创" or menu["title"] == "影视":

129 special_list.append(menu)

130 menu_info.append(menu)

131 break

132

133 # 目标菜单的主页

134 if len(menu_info) > 0:

135 for info in menu_info:

136 menu_index_url = info["href"]

137 # 处理特殊列表

138 if info in special_list:

139 menu_index_url = info["submenu_list"][0]["href"]

140 # 获得rid

141 result_list = self.parse_index_url(menu_index_url)

142 print(result_list)

143 if len(result_list) > 0:

144 for item in result_list:

145 # 大菜单

146 item["menu"] = info["title"]

147 item["referer"] = menu_index_url

148 # 爬取子菜单

149 self.parse_sub_url(item)

150

151

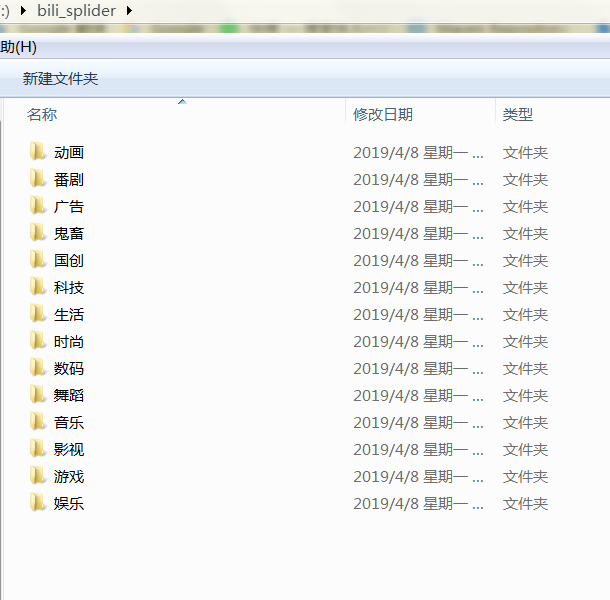

152 def main():

153 target = ["动画", "番剧", "国创", "音乐", "舞蹈", "游戏", "科技", "数码", "生活", "鬼畜", "时尚", "广告", "娱乐", "影视"]

154 splider = BiliSplider("f:/bili_splider", target)

155 splider.run()

156

157

158 if __name__ == '__main__':

159 main()

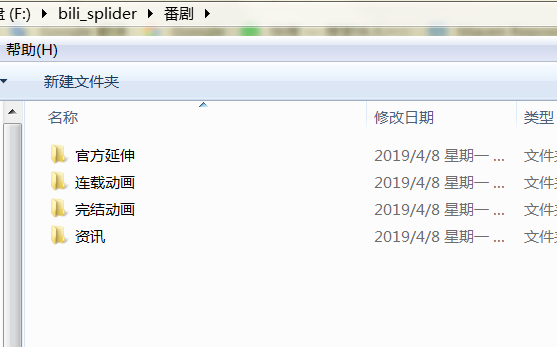

可以看到番剧少了新番时间表与番剧索引,因为这两个请求不遵循https://api.bilibili.com/x/web-interface/newlist?rid={}&type=0&pn={}&ps=20的格式,类似的不再赘述