Kubernetes Basic Operations

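Kubernetes (k8s) follows a classic one-to-many model: one main management node, the master, and many worker nodes. k8s can also be configured with several management nodes; a cluster with two or more of them is called highly available. k8s is made up of many components. In a kubeadm-style deployment like the one used here, most of them each run in their own Docker container and reach one another over a dedicated virtual network.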

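Basic Cluster Operations

- Cluster: a cluster has one Master and several Nodes.

- Node: usually one physical machine serves as one Node; a virtual machine works too.

- Pod: a group of one or more containers (Docker containers here); a Node runs one or more Pods.

- Deployment: one release of an application; it manages one or more Pods.

- Service: the exposed service, with built-in load distribution.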

- View the cluster nodes

    [root@kubernetes-master-01 ~]# kubectl get nodes
    NAME                   STATUS   ROLES    AGE     VERSION
    kubernetes-master-01   Ready    master   3m44s   v1.19.0
    kubernetes-node-01     Ready    <none>   2m21s   v1.19.0
    kubernetes-node-02     Ready    <none>   2m15s   v1.19.0

- View the system components

    [root@kubernetes-master-01 ~]# kubectl get pods -n kube-system
    NAME                                           READY   STATUS    RESTARTS   AGE
    coredns-7df6d7c9f9-w5b9r                       1/1     Running   0          3m10s
    coredns-7df6d7c9f9-zxlx4                       1/1     Running   0          3m10s
    etcd-kubernetes-master-01                      1/1     Running   0          3m20s
    kube-apiserver-kubernetes-master-01            1/1     Running   0          3m20s
    kube-controller-manager-kubernetes-master-01   1/1     Running   0          3m20s
    kube-flannel-ds-amd64-9vqc2                    1/1     Running   0          2m7s
    kube-flannel-ds-amd64-d5v74                    1/1     Running   0          2m1s
    kube-flannel-ds-amd64-f424g                    1/1     Running   0          2m45s
    kube-proxy-5g5vs                               1/1     Running   0          3m10s
    kube-proxy-h67k7                               1/1     Running   0          2m1s
    kube-proxy-thb9g                               1/1     Running   0          2m7s
    kube-scheduler-kubernetes-master-01            1/1     Running   0          3m20s

- Join a master node

    kubeadm join 172.16.0.55:8443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:777cdc282d7988dccb109dc95d781bc2f6aa3fb145b6adc54b8499d49c12ebb9 \
        --control-plane --certificate-key 4f1c28f81b1ba8a7518002f47aa9fb99c233092a7cc9d468d7cfd436bdb44c0f

- Join a worker node

    kubeadm join 172.16.0.55:8443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:777cdc282d7988dccb109dc95d781bc2f6aa3fb145b6adc54b8499d49c12ebb9

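Pod: the Smallest Unit in k8s

A Pod is the smallest and simplest unit that Kubernetes creates or deploys. A Pod represents a process running on the cluster.

A Pod encapsulates an application container (or several containers), storage resources, a unique network IP, and the policy options that govern how the containers run. A Pod is a unit of deployment: a single instance of an application in Kubernetes, made up of either one container or a few containers that share resources.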

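Pod Basics

Each Pod runs a single instance of an application. To scale an application horizontally (that is, to run several instances), use multiple Pods, one per instance.

The containers in a Pod are automatically placed together on one Node in the cluster. They can share resources, networking, and dependencies, and they are scheduled as a unit.

- Single-container Pod: the most common usage.

- Multi-container Pod: Kubernetes guarantees that all containers of the Pod run on the same physical or virtual host. This is a relatively advanced usage and is generally not recommended unless the applications are very tightly coupled. The containers in a Pod share the IP address and port range and can reach one another through localhost.

- Ports used by different containers in the same Pod must not conflict.

A Pod does not guarantee that it keeps running on its own, because it can be hit by physical Node failures, network partitions, and so on. Overall high availability comes from the Kubernetes cluster rescheduling Pods onto other Nodes. We normally do not create Pods directly but manage them through a Controller; still, understanding Pods helps a great deal in getting familiar with controllers.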

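Network

Each Pod is assigned its own IP address, and all containers in the Pod share one network namespace, including the IP address and network ports. Containers inside a Pod can talk to one another over localhost. When they communicate with anything outside the Pod, they must coordinate how they use the shared network resources (such as ports).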
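To make the shared network namespace concrete, here is a minimal sketch that writes a two-container Pod manifest. The file name, the Pod name `localhost-demo`, and the container names are hypothetical and not part of the original setup; the second container simply polls the first over localhost.

```shell
# Write a hypothetical two-container Pod manifest (all names are illustrative).
cat > pod-localhost.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: localhost-demo
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
  - name: probe
    image: busybox
    # Same network namespace as "web", so localhost:80 reaches nginx.
    command: ['sh', '-c', 'while true; do wget -q -O- http://localhost:80 >/dev/null && echo ok; sleep 5; done']
EOF
```

After `kubectl apply -f pod-localhost.yaml`, the output of `kubectl logs localhost-demo -c probe` should show whether the probe container reaches nginx. If both containers tried to listen on port 80, the second one would fail to bind, which is the port conflict mentioned earlier.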

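Storage

A Pod can specify a set of shared storage volumes. All containers in the Pod can access these volumes, which lets them share data. Volumes are also used to persist data in a Pod so that nothing is lost when one of its containers has to restart.

Benefits of Pods

- As a service unit that can run on its own, the Pod makes application deployment simpler and, through a higher level of abstraction, makes deployment management much more convenient.

- As the smallest application instance, a Pod can run independently, so it is easy to deploy, to scale out and in horizontally, to schedule, and to allocate resources for.

- Containers in a Pod share the same data and network address space, and resources are managed and allocated uniformly across Pods.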

Basic Pod Operations

- Create a Pod

    [root@kubernetes-master-01 ~]# kubectl run django --image=alvinos/django:v1
    pod/django created

- List Pods

    [root@kubernetes-master-01 ~]# kubectl get pods
    NAME     READY   STATUS    RESTARTS   AGE
    django   1/1     Running   0          2m9s

- Test the Pod

    [root@kubernetes-master-01 ~]# curl 10.244.2.2/index
    主机名:django,版本:v1

- Look up the Pod IP

    [root@kubernetes-master-01 ~]# kubectl get pods -o wide
    NAME     READY   STATUS    RESTARTS   AGE     IP           NODE                 NOMINATED NODE   READINESS GATES
    django   1/1     Running   0          3m19s   10.244.2.2   kubernetes-node-02   <none>           <none>

Creating a Pod from a Configuration File

A Pod's configuration has several important parts: apiVersion, kind, metadata, spec, and status. apiVersion and kind are fairly fixed and status is the runtime state, so the parts that matter most are metadata and spec.

- A classic Pod example

    apiVersion: v1
    kind: Pod
    metadata:
      name: first-pod
      labels:
        app: bash
        tir: backend
    spec:
      containers:
      - name: bash-container
        image: busybox
        command: ['sh', '-c', 'echo Hello Kubernetes! && sleep 10']

- Result

    [root@kubernetes-master-01 ~]# vim pod.yaml
    [root@kubernetes-master-01 ~]# kubectl apply -f pod.yaml
    pod/first-pod created
    [root@kubernetes-master-01 ~]# kubectl get pods
    NAME        READY   STATUS    RESTARTS   AGE
    django      1/1     Running   0          21m
    first-pod   1/1     Running   0          32s
    [root@kubernetes-master-01 ~]# kubectl logs first-pod
    Hello Kubernetes!

- View the Pod's runtime information

    [root@kubernetes-master-01 ~]# kubectl describe pod first-pod
    Name:         first-pod
    Namespace:    default
    Priority:     0
    Node:         kubernetes-node-01/172.16.0.53
    Start Time:   Sat, 05 Sep 2020 17:39:17 +0800
    Labels:       app=bash
                  tir=backend
    Annotations:  <none>
    Status:       Running
    IP:           10.244.1.2
    IPs:
      IP:  10.244.1.2
    Containers:
      bash-container:
        Container ID:  docker://de1374c6bb241df68a8e983cdf3acf4093d354da1fe8e6a819e886a27d43edaa
        Image:         busybox
        Image ID:      docker-pullable://busybox@sha256:c3dbcbbf6261c620d133312aee9e858b45e1b686efbcead7b34d9aae58a37378
        Port:          <none>
        Host Port:     <none>
        Command:
          sh
          -c
          echo Hello Kubernetes! && sleep 10
        State:          Running
          Started:      Sat, 05 Sep 2020 17:40:59 +0800
        Last State:     Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Sat, 05 Sep 2020 17:40:11 +0800
          Finished:     Sat, 05 Sep 2020 17:40:21 +0800
        Ready:          True
        Restart Count:  3
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-ldlxt (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      default-token-ldlxt:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-ldlxt
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason     Age                 From                         Message
      ----     ------     ----                ----                         -------
      Normal   Scheduled  108s                default-scheduler            Successfully assigned default/first-pod to kubernetes-node-01
      Normal   Pulled     89s                 kubelet, kubernetes-node-01  Successfully pulled image "busybox" in 17.675119792s
      Normal   Pulled     77s                 kubelet, kubernetes-node-01  Successfully pulled image "busybox" in 1.107122896s
      Normal   Pulled     54s                 kubelet, kubernetes-node-01  Successfully pulled image "busybox" in 1.690204423s
      Warning  BackOff    33s (x3 over 66s)   kubelet, kubernetes-node-01  Back-off restarting failed container
      Normal   Pulling    22s (x4 over 106s)  kubelet, kubernetes-node-01  Pulling image "busybox"
      Normal   Pulled     7s                  kubelet, kubernetes-node-01  Successfully pulled image "busybox" in 15.376057413s
      Normal   Created    6s (x4 over 89s)    kubelet, kubernetes-node-01  Created container bash-container
      Normal   Started    6s (x4 over 89s)    kubelet, kubernetes-node-01  Started container bash-container

The restart count and the BackOff events are expected here: the container's command exits after `sleep 10`, so the kubelet keeps restarting it.

- View the Pod's configuration fields

    [root@kubernetes-master-01 ~]# kubectl explain pod
    KIND:     Pod
    VERSION:  v1

    DESCRIPTION:
         Pod is a collection of containers that can run on a host. This resource is
         created by clients and scheduled onto hosts.

    FIELDS:
       apiVersion   <string>
         APIVersion defines the versioned schema of this representation of an
         object. Servers should convert recognized schemas to the latest internal
         value, and may reject unrecognized values. More info:
         https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

       kind <string>
         Kind is a string value representing the REST resource this object
         represents. Servers may infer this from the endpoint the client submits
         requests to. Cannot be updated. In CamelCase. More info:
         https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

       metadata     <Object>
         Standard object's metadata. More info:
         https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

       spec <Object>
         Specification of the desired behavior of the pod. More info:
         https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

       status       <Object>
         Most recently observed status of the pod. This data may not be up to date.
         Populated by the system. Read-only. More info:
         https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

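Configuring Pod Labels

Label is another core concept in Kubernetes. A Label is a key=value pair whose key and value are chosen by the user. Labels can be attached to all kinds of resource objects, such as Node, Pod, Service, and RC. A resource object can carry any number of Labels, and the same Label can be added to any number of resource objects. Labels are usually set when the object is defined, and they can also be added or removed dynamically after the object is created.

By binding one or more Labels to resource objects we can group resources along several dimensions, which makes resource allocation, scheduling, configuration, deployment, and other management work flexible and convenient. Examples: deploying different versions of an application to different environments, or monitoring and analyzing applications (logging, monitoring, alerting). Some commonly used labels:

- Release labels: "release" : "stable" , "release" : "canary"

- Environment labels: "environment" : "dev" , "environment" : "production"

- Tier labels: "tier" : "frontend" , "tier" : "backend" , "tier" : "middleware"

- Partition labels: "partition" : "customerA" , "partition" : "customerB"

- Quality-control labels: "track" : "daily" , "track" : "weekly"

A Label works like the "tags" we already know: defining a Label on a resource object tags it, and afterwards a Label Selector can query and filter the resource objects that carry certain Labels. In this way Kubernetes provides a simple, general object query mechanism similar to SQL.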

- View labels

    [root@kubernetes-master-01 ~]# kubectl get pods --show-labels
    NAME     READY   STATUS    RESTARTS   AGE   LABELS
    django   1/1     Running   0          27m   run=django

- Add a label

    [root@kubernetes-master-01 ~]# kubectl label pod django env=test
    pod/django labeled
    [root@kubernetes-master-01 ~]# kubectl get pods --show-labels
    NAME     READY   STATUS    RESTARTS   AGE   LABELS
    django   1/1     Running   0          28m   env=test,run=django

- Remove a label

    [root@kubernetes-master-01 ~]# kubectl label pod django env-
    pod/django labeled
    [root@kubernetes-master-01 ~]# kubectl get pods --show-labels
    NAME     READY   STATUS    RESTARTS   AGE   LABELS
    django   1/1     Running   0          29m   run=django

- Filter Pods by label

    [root@kubernetes-master-01 ~]# kubectl get pods -l run=django --show-labels
    NAME     READY   STATUS    RESTARTS   AGE   LABELS
    django   1/1     Running   0          30m   run=django

- Delete Pods by label

    [root@kubernetes-master-01 ~]# kubectl delete pod -l run=django
    pod "django" deleted
    [root@kubernetes-master-01 ~]# kubectl get pods -l run=django
    No resources found in default namespace.

Replication Controller

Replication Controller is abbreviated rc. A Replication Controller ensures that a specified number of Pod replicas are running at any time. If there are more Pods than specified, the RC terminates the extra ones; if there are fewer, it creates new ones, so the count always stays at the defined value.

- Guarantees the number of Pods

- Monitors the number of Pods across nodes

- Create an RC

    [root@kubernetes-master-01 ~]# vim rc.yaml
    [root@kubernetes-master-01 ~]# cat rc.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nginx
    spec:
      replicas: 3
      selector:
        app: nginx
      template:
        metadata:
          name: nginx
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
    [root@kubernetes-master-01 ~]# kubectl apply -f rc.yaml
    replicationcontroller/nginx created
    [root@kubernetes-master-01 ~]# kubectl get rc
    NAME    DESIRED   CURRENT   READY   AGE
    nginx   3         3         0       5s
    [root@kubernetes-master-01 ~]# kubectl get pods
    NAME          READY   STATUS              RESTARTS   AGE
    nginx-cfp5l   0/1     ContainerCreating   0          10s
    nginx-p546l   0/1     ContainerCreating   0          10s
    nginx-vn9ng   0/1     ContainerCreating   0          10s

- Test that the RC recreates Pods automatically

    [root@kubernetes-master-01 ~]# kubectl get pods
    NAME          READY   STATUS    RESTARTS   AGE
    nginx-hhnw4   1/1     Running   0          16s
    nginx-p546l   1/1     Running   0          3m31s
    nginx-vn9ng   1/1     Running   0          3m31s
    [root@kubernetes-master-01 ~]# kubectl delete pod nginx-p546l
    pod "nginx-p546l" deleted
    [root@kubernetes-master-01 ~]# kubectl get pods
    NAME          READY   STATUS              RESTARTS   AGE
    nginx-hhnw4   1/1     Running             0          35s
    nginx-phbh9   0/1     ContainerCreating   0          5s
    nginx-vn9ng   1/1     Running             0          3m50s

As the output shows, after one Pod was deleted the RC automatically created a replacement.

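Service

For a service deployed in a container to be reachable from outside, you would normally set up port mapping. In K8s that approach is not practical: a Pod's IP changes every time the Pod is created or restarted, and one node may host many Pods, so mapping ports by hand does not work. A Service acts as a VIP (virtual IP) in front of our Pods.

The Three Service Modes

A Service can run in three modes (the proxy modes of kube-proxy), which are in effect three successive generations of the Service implementation.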

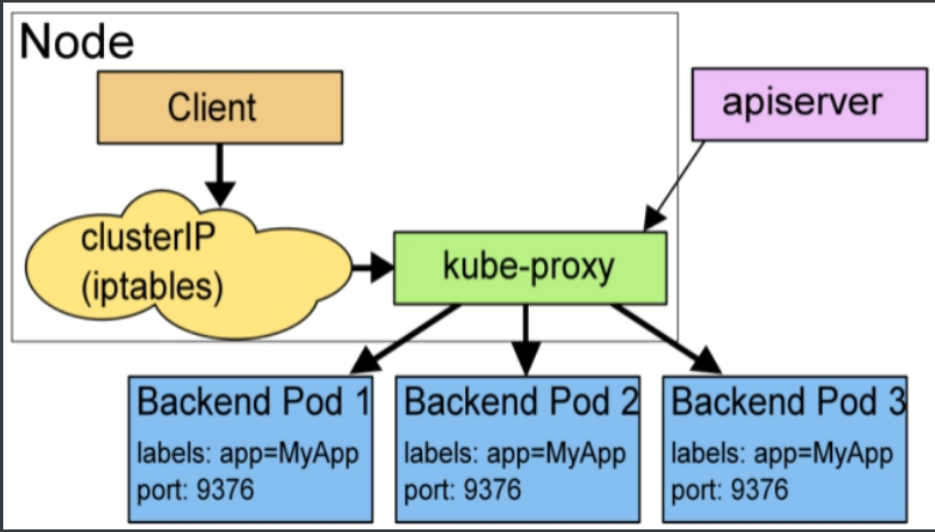

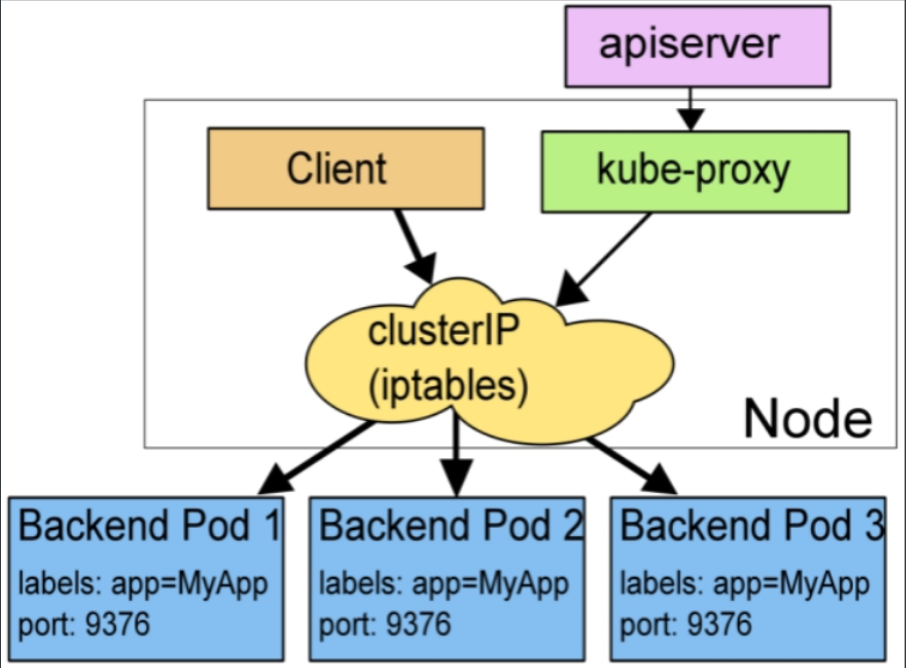

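userspace

When a client wants to reach a Pod, the request first hits the Service rules in the local node's kernel space, which hand it to kube-proxy listening on a designated socket. kube-proxy processes the request and picks a Server Pod, then passes the request back to the Service rules in kernel space, which forward it to that Server Pod. Every request therefore crosses between kernel space and user space.

iptables

The request is forwarded to the Pod directly by iptables rules in the kernel and no longer passes through kube-proxy.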

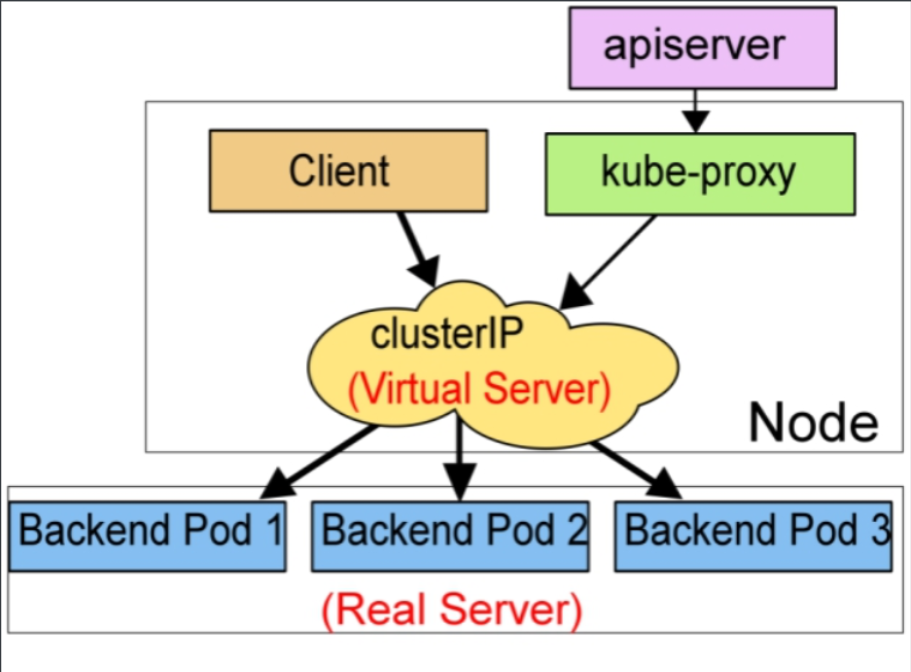

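ipvs

The ipvs rules in the kernel accept the Client Pod's request directly and process it; after the kernel has encapsulated the packet, it is sent straight to the chosen Server Pod.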
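As a rough illustration of how a mode is selected, the sketch below writes a kube-proxy configuration fragment with the `mode` field set to ipvs. The file name is hypothetical, and the fragment is a minimal sketch rather than a complete kube-proxy configuration.

```shell
# Write a minimal, illustrative KubeProxyConfiguration fragment.
cat > kube-proxy-mode.yaml <<'EOF'
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
# Accepted values include "iptables" and "ipvs"; an empty string selects the platform default.
mode: "ipvs"
EOF
```

On a kubeadm cluster the same `mode` field lives in the `kube-proxy` ConfigMap in the `kube-system` namespace, so editing it there and restarting the kube-proxy Pods is the usual route. ipvs mode also needs the IPVS kernel modules loaded on every node.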

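The Four Service Types

NodePort

With this kind of Service, clients inside the cluster can use the ClusterIP, and external users can reach the instances through each Node individually at NodeIP:NodePort.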

    [root@kubernetes-master-01 ~]# vim svc.yaml
    [root@kubernetes-master-01 ~]# cat svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 80
        nodePort: 30001
      selector:
        app: nginx
    ---
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nginx
    spec:
      replicas: 3
      selector:
        app: nginx
      template:
        metadata:
          name: nginx
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: alvinos/django:v1
            ports:
            - containerPort: 80
    [root@kubernetes-master-01 ~]# kubectl apply -f svc.yaml
    service/nginx created
    replicationcontroller/nginx configured
    [root@kubernetes-master-01 ~]# kubectl get all
    NAME              READY   STATUS              RESTARTS   AGE
    pod/nginx-47s6q   0/1     ContainerCreating   0          13s
    pod/nginx-kd6d6   1/1     Running             0          13s
    pod/nginx-t2vqz   1/1     Running             0          13s

    NAME                          DESIRED   CURRENT   READY   AGE
    replicationcontroller/nginx   3         3         2       13s

    NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        106m
    service/nginx        NodePort    10.102.211.15   <none>        80:30001/TCP   13s

- Test the connection

    [root@kubernetes-master-01 ~]# while true; do curl 10.102.211.15/index; echo ''; sleep 1; done
    主机名:nginx-47s6q,版本:v1
    主机名:nginx-47s6q,版本:v1
    主机名:nginx-t2vqz,版本:v1
    主机名:nginx-47s6q,版本:v1
    主机名:nginx-47s6q,版本:v1
    主机名:nginx-t2vqz,版本:v1
    主机名:nginx-47s6q,版本:v1

LoadBalancer

Attaches the IP of an external load balancer to our k8s Service.

- Create the LoadBalancer

    [root@kubernetes-master-01 ~]# cat > svc.yaml <<EOF
    apiVersion: v1
    kind: Service
    metadata:
      name: loadbalancer
    spec:
      type: LoadBalancer
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: nginx
    EOF

- View it

    [root@kubernetes-node-01 ~]# kubectl get svc
    NAME           TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)        AGE
    kubernetes     ClusterIP      10.0.0.1      <none>         443/TCP        110d
    loadbalancer   LoadBalancer   10.0.129.18   81.71.12.240   80:30346/TCP   11s

HeadLess Service

A headless Service is a special ClusterIP-type Service that has no cluster IP (clusterIP: None).

- Create it

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: headless-service
    spec:
      selector:
        app: nginx
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
      clusterIP: None

- View it

    [root@kubernetes-master-01 ~]# kubectl apply -f headless.yaml
    deployment.apps/nginx-deployment created
    service/headless-service created
    [root@kubernetes-master-01 ~]# kubectl get svc
    NAME               TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    headless-service   ClusterIP   None         <none>        80/TCP    4s
    kubernetes         ClusterIP   10.96.0.1    <none>        443/TCP   119m
    [root@kubernetes-master-01 ~]# kubectl describe svc headless-service
    Name:              headless-service
    Namespace:         default
    Labels:            <none>
    Annotations:       <none>
    Selector:          app=nginx
    Type:              ClusterIP
    IP:                None
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         10.244.1.5:80,10.244.1.6:80,10.244.2.6:80 + 2 more...
    Session Affinity:  None
    Events:            <none>

Nginx Ingress

Nginx Ingress turns newly added Ingress resources into Nginx configuration and makes it take effect.

- Components

  - Ingress Controller: converts newly added Ingress configuration into nginx configuration and applies it.

  - Ingress resource: abstracts Nginx configuration into Ingress configuration, which is easier to use.

- How it works

The ingress controller talks to the Kubernetes API and dynamically watches for changes to the Ingress rules in the cluster. It reads them and, following its own rules, looks up the Pods managed by the corresponding Service. It then generates Nginx configuration for the nginx-ingress-controller Pod, which runs an Nginx server: the controller writes the generated configuration to /etc/nginx/nginx.conf and reloads Nginx so that it takes effect. This solves per-domain configuration and dynamic updates.

- Advantages

  - Services are configured dynamically

  - Fewer unnecessary ports are exposed

- Install

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.35.0/deploy/static/provider/baremetal/deploy.yaml

- Check the installation

    [root@kubernetes-master-01 ~]# kubectl get pods -n ingress-nginx
    NAME                                        READY   STATUS      RESTARTS   AGE
    ingress-nginx-admission-create-nn7mh        0/1     Completed   0          14s
    ingress-nginx-admission-patch-vwdxb         0/1     Completed   0          14s
    ingress-nginx-controller-77f4649599-dtrrm   1/1     Running     0          14s

- Test

    [root@kubernetes-master-01 ~]# curl 10.96.191.89
    <html>
    <head><title>404 Not Found</title></head>
    <body>
    <center><h1>404 Not Found</h1></center>
    <hr><center>nginx/1.19.2</center>
    </body>
    </html>
    # The 404 appears because no backend service has been started yet

A Classic Nginx Ingress Example

- Create the Deployment

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: ingress-deployment
      namespace: default
      labels:
        app: deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: pod
      template:
        metadata:
          labels:
            app: pod
        spec:
          containers:
          - name: ingress-pod
            image: nginx
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
              name: http

View the deployment result

    [root@kubernetes-master-01 ~]# kubectl get deployments.apps -l app=deployment
    NAME                 READY   UP-TO-DATE   AVAILABLE   AGE
    ingress-deployment   3/3     3            3           4m37s
    [root@kubernetes-master-01 ~]# kubectl get pods -l app=pod
    NAME                                  READY   STATUS    RESTARTS   AGE
    ingress-deployment-5f6798968b-8zsn8   1/1     Running   0          4m46s
    ingress-deployment-5f6798968b-bls6q   1/1     Running   0          4m46s
    ingress-deployment-5f6798968b-h6hgg   1/1     Running   0          4m46s

- Create the Service

    kind: Service
    apiVersion: v1
    metadata:
      name: ingress-service
      namespace: default
      labels:
        app: svc
    spec:
      type: ClusterIP
      selector:
        app: pod
      ports:
      - port: 80
        targetPort: 80
        name: http

View the deployment result

    [root@kubernetes-master-01 ~]# kubectl get svc -l app=svc
    NAME              TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    ingress-service   ClusterIP   10.111.19.36   <none>        80/TCP    5m38s

- Create the Ingress

    kind: Ingress
    apiVersion: extensions/v1beta1
    metadata:
      name: ingress-ingress
      namespace: default
      annotations:
        kubernetes.io/ingress.class: "nginx"
    spec:
      rules:
      - host: www.test.com
        http:
          paths:
          - path: /
            backend:
              serviceName: ingress-service
              servicePort: 80

View the deployment result

    [root@kubernetes-master-01 ~]# kubectl get ingress
    NAME              CLASS    HOSTS          ADDRESS       PORTS   AGE
    ingress-ingress   <none>   www.test.com   172.16.0.53   80      6m36s
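The `extensions/v1beta1` Ingress API used above works on the v1.19 cluster shown here but was removed in Kubernetes 1.22. As a hedged sketch, the same rule written against `networking.k8s.io/v1` (available from v1.19) might look as follows; the file name is hypothetical, and `pathType` is a field the v1 API requires that the original manifest did not have.

```shell
# Write an illustrative networking.k8s.io/v1 version of the same Ingress rule.
cat > ingress-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-ingress
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /
        pathType: Prefix        # required in v1; Prefix matches / and everything below it
        backend:
          service:
            name: ingress-service
            port:
              number: 80
EOF
```

The backend moves from `serviceName`/`servicePort` to the nested `service.name` and `service.port.number` fields; everything else carries over unchanged.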

- Test

    # Add a hosts entry
    127.0.0.1 www.test.com

    [root@kubernetes-master-01 ~]# curl www.test.com
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

Deployment

An rc and a service are associated through a selector, but during an rc rolling upgrade the selector may change, so after the upgrade the service and the rc can lose their association and the application becomes unreachable. A Deployment upgrades differently: it creates a new ReplicaSet (the successor to the rc) and then creates the Pods under it, which leaves the Service unaffected.

- A classic example

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80

View the deployment result

    [root@kubernetes-master-01 ~]# vim deployment.yaml
    [root@kubernetes-master-01 ~]# kubectl apply -f deployment.yaml
    deployment.apps/nginx-deployment configured
    [root@kubernetes-master-01 ~]# kubectl get deployments.apps -l app=nginx
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   2/3     1            2           71m
    [root@kubernetes-master-01 ~]# kubectl get pods -l app=nginx
    NAME                                READY   STATUS              RESTARTS   AGE
    nginx-deployment-6b5fc5c798-452cf   0/1     ContainerCreating   0          12s
    nginx-deployment-6b5fc5c798-7f4nc   0/1     ContainerCreating   0          12s
    nginx-deployment-6b5fc5c798-jnqhc   0/1     ContainerCreating   0          12s

Rolling Upgrade

- Create

    [root@kubernetes-master-01 ~]# kubectl apply -f de.yaml
    deployment.apps/nginx-deployment created
    [root@kubernetes-master-01 ~]# kubectl get deployments.apps -l app=nginx
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   3/3     3            3           15s

- Upgrade

    [root@kubernetes-master-01 ~]# kubectl set image deployment/nginx-deployment nginx=alvinos/django:v2
    deployment.apps/nginx-deployment image updated
    [root@kubernetes-master-01 ~]# kubectl get pods -l app=nginx -w
    NAME                                READY   STATUS        RESTARTS   AGE
    nginx-deployment-566c49dbc7-47t6k   1/1     Terminating   0          6m47s
    nginx-deployment-566c49dbc7-54lmk   1/1     Terminating   0          6m47s
    nginx-deployment-566c49dbc7-zfrvg   1/1     Terminating   0          6m47s
    nginx-deployment-69d86bb564-8728c   1/1     Running       0          14s
    nginx-deployment-69d86bb564-d67ng   1/1     Running       0          32s
    nginx-deployment-69d86bb564-rn2r9   1/1     Running       0          9s
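How quickly old Pods are replaced during such an upgrade can be tuned in the Deployment itself. The following is a minimal sketch, assuming the same `nginx-deployment` as above; the file name and the chosen `maxSurge`/`maxUnavailable` values are illustrative, not taken from the original setup.

```shell
# Write an illustrative Deployment with an explicit rolling-update strategy.
cat > deployment-rolling.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # at most one Pod above the desired replica count
      maxUnavailable: 0  # no Pod may be unavailable during the update
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF
```

With these values a new Pod has to become ready before an old one is taken away, which trades upgrade speed for availability. Progress can be followed with `kubectl rollout status deployment/nginx-deployment`.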

Rollback

- View the rollout history

    [root@kubernetes-master-01 ~]# kubectl rollout history deployment nginx-deployment
    deployment.apps/nginx-deployment
    REVISION  CHANGE-CAUSE
    1         kubectl apply --filename=de.yaml --record=true
    2         kubectl apply --filename=de.yaml --record=true
    3         kubectl apply --filename=de.yaml --record=true

- Roll back to the previous revision

    [root@kubernetes-master-01 ~]# kubectl rollout undo deployment nginx-deployment
    deployment.apps/nginx-deployment rolled back
    [root@kubernetes-master-01 ~]# kubectl rollout history deployment nginx-deployment
    deployment.apps/nginx-deployment
    REVISION  CHANGE-CAUSE
    1         kubectl apply --filename=de.yaml --record=true
    3         kubectl apply --filename=de.yaml --record=true
    4         kubectl apply --filename=de.yaml --record=true

- Roll back to a specific revision

    [root@kubernetes-master-01 ~]# kubectl rollout undo deployment nginx-deployment --to-revision=3
    deployment.apps/nginx-deployment rolled back
    [root@kubernetes-master-01 ~]# kubectl rollout history deployment nginx-deployment
    deployment.apps/nginx-deployment
    REVISION  CHANGE-CAUSE
    1         kubectl apply --filename=de.yaml --record=true
    4         kubectl apply --filename=de.yaml --record=true
    5         kubectl apply --filename=de.yaml --record=true

Scaling Out and In

- patch

    [root@kubernetes-master-01 ~]# kubectl patch deployments.apps nginx-deployment -p '{"spec":{"replicas": 5}}'
    deployment.apps/nginx-deployment patched
    [root@kubernetes-master-01 ~]# kubectl get deployments.apps nginx-deployment
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   5/5     5            5           5m21s

- scale

    [root@kubernetes-master-01 ~]# kubectl scale deployment nginx-deployment --replicas=10
    deployment.apps/nginx-deployment scaled
    [root@kubernetes-master-01 ~]# kubectl get deployments.apps nginx-deployment
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   5/10    10           5           5m57s

Deploying the Dashboard

    [root@kubernetes-master-01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.4/aio/deploy/recommended.yaml
    [root@kubernetes-master-01 ~]# sed -i 's#kubernetesui/dashboard#registry.cn-hangzhou.aliyuncs.com/k8sos/dashboard#g' recommended.yaml
    [root@kubernetes-master-01 ~]# sed -i 's#kubernetesui/metrics-scraper#registry.cn-hangzhou.aliyuncs.com/k8sos/metrics-scraper#g' recommended.yaml
    [root@kubernetes-master-01 ~]# kubectl apply -f recommended.yaml
    namespace/kubernetes-dashboard created
    serviceaccount/kubernetes-dashboard created
    service/kubernetes-dashboard created
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-csrf created
    secret/kubernetes-dashboard-key-holder created
    configmap/kubernetes-dashboard-settings created
    role.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    service/dashboard-metrics-scraper created
    deployment.apps/dashboard-metrics-scraper created

View the deployment result

    [root@kubernetes-master-01 ~]# kubectl get all -n kubernetes-dashboard
    NAME                                             READY   STATUS              RESTARTS   AGE
    pod/dashboard-metrics-scraper-55f5746456-j5thm   0/1     ContainerCreating   0          15s
    pod/kubernetes-dashboard-68784c9d-rffmb          1/1     Running             0          16s

    NAME                                TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    service/dashboard-metrics-scraper   ClusterIP   10.102.154.91   <none>        8000/TCP   16s
    service/kubernetes-dashboard        ClusterIP   10.111.190.51   <none>        443/TCP    16s

    NAME                                        READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/dashboard-metrics-scraper   0/1     1            0           15s
    deployment.apps/kubernetes-dashboard        1/1     1            1           16s

    NAME                                                   DESIRED   CURRENT   READY   AGE
    replicaset.apps/dashboard-metrics-scraper-55f5746456   1         1         0       15s
    replicaset.apps/kubernetes-dashboard-68784c9d          1         1         1       16s

Persistent Storage

Pods are made of containers, and when a container crashes or stops, its data is lost with it. So when we build a Kubernetes cluster we have to think about storage, and volumes exist precisely so that Pods can keep data. There are many volume types; the four we use most are emptyDir, hostPath, NFS, and cloud storage.

emptyDir

An emptyDir volume is created when the Pod is assigned to a node. Kubernetes allocates a directory on the node automatically, so there is no need to specify a directory on the host. The directory starts out empty, and when the Pod is removed from the node the data in the emptyDir is deleted permanently. emptyDir volumes are mainly for temporary directories that an application does not need to keep.

- A classic example

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: test-volume-deployment
      namespace: default
      labels:
        app: test-volume-deployment
    spec:
      selector:
        matchLabels:
          app: test-volume-pod
      template:
        metadata:
          labels:
            app: test-volume-pod
        spec:
          containers:
          - name: nginx
            image: busybox
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
              name: http
            - containerPort: 443
              name: https
            volumeMounts:
            - mountPath: /data/
              name: empty
            command: ['/bin/sh', '-c', 'while true; do echo $(date) >> /data/index.html; sleep 2; done']
          - name: os
            imagePullPolicy: IfNotPresent
            image: busybox
            volumeMounts:
            - mountPath: /data/
              name: empty
            # Inner quotes are double quotes so the YAML single-quoted string stays valid
            command: ['/bin/sh', '-c', 'while true; do echo "busybox" >> /data/index.html; sleep 2; done']
          volumes:
          - name: empty
            emptyDir: {}

View the deployment status

    [root@kubernetes-master-01 ~]# kubectl get pods -l app=test-volume-pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    test-volume-deployment-66ccd5586b-s7j26   2/2     Running   0          4m13s

hostPath

A hostPath volume maps a file or directory from the node's file system into the Pod. When using a hostPath volume you can also set the type field; the supported values are DirectoryOrCreate, Directory, FileOrCreate, File, Socket, CharDevice, and BlockDevice.

- A classic example

    apiVersion: v1
    kind: Pod
    metadata:
      name: vol-hostpath
      namespace: default
    spec:
      volumes:
      - name: html
        hostPath:
          path: /data/pod/volume1/
          type: DirectoryOrCreate
      containers:
      - name: myapp
        image: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/

- View the deployment result

    # Run on node-02
    [root@kubernetes-node-02 volume1]# echo "index" > index.html

    # Run on master-01
    [root@kubernetes-master-01 data]# kubectl get pods vol-hostpath -o wide
    NAME           READY   STATUS    RESTARTS   AGE   IP            NODE                 NOMINATED NODE   READINESS GATES
    vol-hostpath   1/1     Running   0          66s   10.244.2.34   kubernetes-node-02   <none>           <none>
    [root@kubernetes-master-01 data]# curl 10.244.2.34
    index

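PV and PVC

A PersistentVolume (PV) is a piece of network storage in the cluster that an administrator has provisioned. It is a cluster resource, just as a node is a cluster resource. PVs are volume plugins like Volumes, but their lifecycle is independent of any single Pod that uses the PV. This API object captures the details of the storage implementation, be it NFS, iSCSI, or a cloud-provider-specific storage system.

A PersistentVolumeClaim (PVC) is a user's request for storage. The usage pattern: a Pod defines a volume of type PVC, and the claim states the size it needs directly. The PVC must be bound to a matching PV; it requests from the PVs according to its definition, and the PVs themselves are created from the underlying storage. PV and PVC are storage resources abstracted by Kubernetes.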

nfs

An nfs volume lets us mount an existing share into a Pod. Unlike emptyDir, which is deleted together with the Pod, an nfs share is not deleted when the Pod goes away; it is merely unmounted. This means NFS lets us prepare data in advance and pass it between Pods, and one nfs share can be mounted and written by several Pods at once.

- Install nfs on every node that needs it

    yum install nfs-utils.x86_64 -y

- Configure the nfs exports (run here on kubernetes-master-01)

    [root@kubernetes-master-01 nfs]# mkdir -p /nfs/v{1..5}
    [root@kubernetes-master-01 nfs]# cat > /etc/exports <<EOF
    /nfs/v1 172.16.0.0/16(rw,no_root_squash)
    /nfs/v2 172.16.0.0/16(rw,no_root_squash)
    /nfs/v3 172.16.0.0/16(rw,no_root_squash)
    /nfs/v4 172.16.0.0/16(rw,no_root_squash)
    /nfs/v5 172.16.0.0/16(rw,no_root_squash)
    EOF
    [root@kubernetes-master-01 nfs]# exportfs -arv
    exporting 172.16.0.0/16:/nfs/v5
    exporting 172.16.0.0/16:/nfs/v4
    exporting 172.16.0.0/16:/nfs/v3
    exporting 172.16.0.0/16:/nfs/v2
    exporting 172.16.0.0/16:/nfs/v1
    [root@kubernetes-master-01 nfs]# showmount -e
    Export list for kubernetes-master-01:
    /nfs/v5 172.16.0.0/16
    /nfs/v4 172.16.0.0/16
    /nfs/v3 172.16.0.0/16
    /nfs/v2 172.16.0.0/16
    /nfs/v1 172.16.0.0/16

- Create Pods that use nfs

    [root@kubernetes-master-01 ~]# kubectl get pods -l app=nfs
    NAME                   READY   STATUS    RESTARTS   AGE
    nfs-5f56db5995-9shkg   1/1     Running   0          24s
    nfs-5f56db5995-ht7ww   1/1     Running   0          24s
    [root@kubernetes-master-01 ~]# echo "index" > /nfs/v1/index.html
    [root@kubernetes-master-01 ~]# kubectl exec -it nfs-5f56db5995-ht7ww -- bash
    root@nfs-5f56db5995-ht7ww:/# cd /usr/share/nginx/html/
    root@nfs-5f56db5995-ht7ww:/usr/share/nginx/html# ls
    index.html
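The manifest that produced these Pods is not shown. A minimal sketch that would be consistent with the output (a Deployment named `nfs`, label `app=nfs`, two replicas, the share mounted at the nginx document root) could look like this. The file name is hypothetical, and the server address `172.16.0.50` is an assumption taken from the PV definitions in this document; replace it with the address of your NFS server.

```shell
# Write an illustrative Deployment that mounts the /nfs/v1 export directly.
cat > nfs-deployment.yaml <<'EOF'
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nfs
  template:
    metadata:
      labels:
        app: nfs
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: html
        nfs:
          path: /nfs/v1
          server: 172.16.0.50   # assumed NFS server address
EOF
```

Because both replicas mount the same export, a file written to `/nfs/v1` on the server shows up in every Pod, which is what the `ls` in the output above demonstrates.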

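PV Access Modes

| Access mode | Description |
|---|---|
| ReadWriteOnce (RWO) | Read-write, but can be mounted by a single node only. |
| ReadOnlyMany (ROX) | Read-only; can be mounted by many nodes. |
| ReadWriteMany (RWX) | Read-write by many nodes: the storage can be shared in read-write mode across nodes. |

Not every kind of storage supports all three modes. The shared mode (RWX) in particular is still supported by few backends; NFS is the common choice. A PVC is usually bound to a PV based on two criteria: the storage size and the access mode.

PV Reclaim Policies

| Policy | Description |
|---|---|
| Retain | No cleanup; the Volume is kept and must be cleaned up manually. |
| Recycle | Deletes the data, i.e. rm -rf /thevolume/* (supported only by NFS and HostPath). |
| Delete | Deletes the storage resource, for example an AWS EBS volume (supported only by AWS EBS, GCE PD, Azure Disk, and Cinder). |

The Four PV States

| State | Description |
|---|---|
| Available | Available for use. |
| Bound | Already bound to a PVC. |
| Released | The PVC has been released, but the reclaim policy has not run yet. |
| Failed | An error occurred. |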

Creating PVs

    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: pv001
      labels:
        app: pv001
    spec:
      nfs:
        path: /nfs/v2
        server: 172.16.0.50
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 2Gi
    ---
    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: pv002
      labels:
        app: pv002
    spec:
      nfs:
        path: /nfs/v3
        server: 172.16.0.50
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 5Gi
      persistentVolumeReclaimPolicy: Delete
    ---
    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: pv003
      labels:
        app: pv003
    spec:
      nfs:
        path: /nfs/v4
        server: 172.16.0.50
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 10Gi
    ---
    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: pv004
      labels:
        app: pv004
    spec:
      nfs:
        path: /nfs/v5
        server: 172.16.0.50
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 20Gi

Using a PV through a PVC

    [root@kubernetes-master-01 ~]# kubectl apply -f pv.yaml
    persistentvolume/pv001 created
    persistentvolume/pv002 created
    persistentvolume/pv003 created
    persistentvolume/pv004 created
    [root@kubernetes-master-01 ~]# kubectl get pv
    NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    pv001   2Gi        RWO,RWX        Retain           Available                                   10s
    pv002   5Gi        RWO,RWX        Delete           Available                                   10s
    pv003   10Gi       RWO,RWX        Retain           Available                                   10s
    pv004   20Gi       RWO,RWX        Retain           Available                                   10s
    [root@kubernetes-master-01 ~]# cat > pvc.yaml <<EOF
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc
      namespace: default
    spec:
      accessModes:
      - "ReadWriteMany"
      resources:
        requests:
          storage: "6Gi"
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nfs
      template:
        metadata:
          labels:
            app: nfs
        spec:
          containers:
          - name: nginx
            imagePullPolicy: IfNotPresent
            image: nginx
            volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html/
          volumes:
          - name: html
            persistentVolumeClaim:
              claimName: pvc # name of the PVC
    EOF
    [root@kubernetes-master-01 ~]# kubectl apply -f pvc.yaml
    persistentvolumeclaim/pvc created
    deployment.apps/nfs configured
    [root@kubernetes-master-01 ~]# kubectl get pvc
    NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    pvc    Bound    pv003    10Gi       RWO,RWX                       8s
    [root@kubernetes-master-01 ~]# kubectl get pv
    NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM         STORAGECLASS   REASON   AGE
    pv001   2Gi        RWO,RWX        Retain           Available                                         4m18s
    pv002   5Gi        RWO,RWX        Delete           Available                                         4m18s
    pv003   10Gi       RWO,RWX        Retain           Bound       default/pvc                           4m18s
    pv004   20Gi       RWO,RWX        Retain           Available                                         4m18s
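Why did the 6Gi claim bind to pv003 (10Gi) rather than pv004 (20Gi)? As far as the binding behavior goes, the claim is matched to the smallest PV that still satisfies it. The following toy calculation, not the real controller logic, reproduces that choice for the four PVs above; it assumes access modes and storage class already match, which holds here since every PV offers RWX and none sets a class.

```shell
# PV inventory from the listing above: name and capacity in Gi.
pvs='pv001 2
pv002 5
pv003 10
pv004 20'
request=6   # the PVC asks for 6Gi

# Keep the smallest PV whose capacity is at least the requested size.
best=$(printf '%s\n' "$pvs" | awk -v req="$request" '
  $2 >= req && (name == "" || $2 < cap) { name = $1; cap = $2 }
  END { print name }')
echo "chosen PV: $best"
```

pv001 and pv002 are too small, and pv004 is larger than necessary, so the sketch prints `chosen PV: pv003`, matching the `kubectl get pvc` output. It also explains why the claim reports a capacity of 10Gi: a PVC gets the whole PV, not just the amount it asked for.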

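StorageClass

A large Kubernetes cluster may have thousands of PVCs, which means the operations team has to create that many PVs in advance. As projects evolve, new PVCs keep arriving, so operators must keep adding new PVs that meet the requirements; otherwise new Pods fail to be created because their PVC cannot bind to a PV. Moreover, the amount of space requested through a PVC often does not capture everything an application needs from its storage, and different applications may have different performance requirements, such as read/write speed and concurrency. To address this, Kubernetes introduces another resource object: StorageClass. With StorageClass definitions, an administrator can classify storage resources into types, such as fast storage and slow storage. From the StorageClass description the characteristics of each kind of storage are easy to see, so storage can be requested according to what the application needs.

Defining a StorageClass

Every StorageClass contains the fields provisioner, parameters, and reclaimPolicy, which are used when a PersistentVolume belonging to the class needs to be dynamically provisioned.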

- Create a StorageClass

[root@kubernetes-master-01 ~]# cat > sc.yaml <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage-class
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes/nfs-storage-class
parameters:
  archiveOnDelete: "false"  # do not archive the volume's data when its PVC is deleted
EOF

[root@kubernetes-master-01 ~]# kubectl apply -f sc.yaml
storageclass.storage.k8s.io/nfs-storage-class created

[root@kubernetes-master-01 ~]# kubectl get sc
NAME                          PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-storage-class (default)   kubernetes/nfs-storage-class   Delete          Immediate           false                  5s

- Create a PVC, together with the NFS provisioner, its RBAC rules, and a StorageClass

[root@kubernetes-master-01 ~]# cat > sc.yaml <<EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get", "create", "update", "delete"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
subjects:
  - kind: ServiceAccount
    # must match the serviceAccountName used by the provisioner Deployment
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-provisioner
  apiGroup: rbac.authorization.k8s.io

---

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      # this ServiceAccount must already exist in the namespace
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/kubeapps/quay-nfs-client-provisioner
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: pri/nfs  # must match the provisioner field of the StorageClass
            - name: NFS_SERVER
              value: 172.16.0.50
            - name: NFS_PATH
              value: /nfs/v8
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.16.0.50
            path: /nfs/v8

---

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: nfs
provisioner: pri/nfs

---

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-sc
spec:
  accessModes:
    - ReadWriteOnce
    - ReadWriteMany
  storageClassName: "nfs"
  resources:
    requests:
      storage: 1Gi
EOF

[root@kubernetes-master-01 ~]# kubectl apply -f sc.yaml

storageclass.storage.k8s.io/nfs-storage-class created

deployment.apps/nfs-client-provisioner created

persistentvolumeclaim/claim1 created

[root@kubernetes-master-01 ~]# kubectl get pvc -o wide
NAME     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE   VOLUMEMODE
pvc-sc   Bound    pvc-33a45e8b-e178-4c6b-b64c-fe28073e0a30   1Gi        RWO,RWX        nfs            24s   Filesystem

[root@kubernetes-master-01 ~]# kubectl get deployments.apps
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
nfs-client-provisioner   1/1     1            1           118s
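
Once the claim is Bound, a workload can mount it the same way as a statically bound claim. The sketch below is an illustration only and not part of the original walkthrough: the Pod name `pvc-sc-demo` is hypothetical, while `pvc-sc` is the claim created above and the mount path mirrors the earlier nginx Deployment.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pvc-sc-demo            # hypothetical name
  namespace: default
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
  volumes:
    - name: html
      persistentVolumeClaim:
        claimName: pvc-sc      # the dynamically provisioned claim
```

Files written under the mount path should end up in a subdirectory that the provisioner creates beneath the exported NFS path.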