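My company needed to crawl the housing developments of every city in the country and extract their latitude and longitude. A crawl of this scale is usually a good fit for the Scrapy framework, but I had just finished learning Go, and Go's http library is convenient to use, so I tried doing it in Go instead.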

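1. The program crawls the city the user asks for. The flag package parses the command-line arguments: the province and city to crawl, how long to wait between pages (a slower crawl is friendlier to the site), and whether to print the crawled records.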

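func init() {
	// The defaults stay in Chinese because they are matched against the site's text.
	flag.StringVar(&p, "p", "湖北", "province")
	flag.StringVar(&c, "c", "武汉", "city")
	flag.IntVar(&t, "t", 5, "seconds to wait between pages")
	flag.BoolVar(&r, "r", true, "print crawled records")
	flag.Parse()
}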
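
Calling flag.Parse inside init runs before main, which can get in the way of `go test`, since the testing package registers its own flags later. Below is a minimal sketch of the same four options parsed from an explicit argument slice. The `options` struct and the `parseOptions` function are illustrative names, not part of the project.

```go
package main

import (
	"flag"
	"fmt"
	"os"
)

// options mirrors the four command-line flags described above.
// The struct and function names are illustrative, not taken from the project.
type options struct {
	Province string
	City     string
	Interval int  // seconds to wait between pages
	Print    bool // whether to print each record
}

// parseOptions parses args with a private FlagSet, so nothing is
// registered on the global flag.CommandLine.
func parseOptions(args []string) (options, error) {
	var o options
	fs := flag.NewFlagSet("crawler", flag.ContinueOnError)
	fs.StringVar(&o.Province, "p", "湖北", "province")
	fs.StringVar(&o.City, "c", "武汉", "city")
	fs.IntVar(&o.Interval, "t", 5, "seconds to wait between pages")
	fs.BoolVar(&o.Print, "r", true, "print each record")
	if err := fs.Parse(args); err != nil {
		return o, err
	}
	if o.Interval < 0 {
		return o, fmt.Errorf("interval must not be negative, got %d", o.Interval)
	}
	return o, nil
}

func main() {
	opts, err := parseOptions(os.Args[1:])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	fmt.Printf("%+v\n", opts)
}
```

Returning a struct instead of writing to package-level variables also makes the values easy to pass to the crawl functions explicitly.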

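2. Check that the province and city entered by the user are valid, that is, that the site has a record for them. Elements are located with the htmlquery library using XPath syntax.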

func CheckCityExist() bool {
	rsp, err := engine.GetRsp("https://www.ke.com/city/")
	if err != nil {
		log.Fatalln("failed to request the city list: ", err)
	}
	defer rsp.Body.Close()
	doc, err := htmlquery.Parse(rsp.Body)
	if err != nil {
		log.Fatalln("failed to parse the city list: ", err)
	}
	retLis := htmlquery.Find(doc, `//div[@class="city_province"]`)
	for _, i := range retLis {
		// Skip province blocks that have no title node.
		titleNode := htmlquery.FindOne(i, `./div[@class="city_list_tit c_b"]`)
		if titleNode == nil {
			continue
		}
		pr := strings.TrimSpace(htmlquery.InnerText(titleNode))
		if p == pr {
			ct := htmlquery.FindOne(i, fmt.Sprintf(`./ul/li[@class="CLICKDATA"]/a[text()="%s"]`, c))
			if ct != nil {
				ctURL = htmlquery.SelectAttr(ct, "href")
				if ctURL != "" {
					// The href carries no scheme, so prepend one.
					ctURL = "https:" + ctURL
					return true
				}
			}
		}
	}
	return false
}

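3. Each city has two crawl sources: developments (loupan, new homes) and residential communities (xiaoqu, resale homes). GetCityURL finds the root path of each and stores them in the struct pp.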

func GetCityURL(u string) {
	rsp, err := engine.GetRsp(u)
	if err != nil {
		log.Fatalln("failed to request the city page: ", err)
	}
	defer rsp.Body.Close()
	doc, err := htmlquery.Parse(rsp.Body)
	if err != nil {
		log.Fatalln("failed to parse the city page: ", err)
	}
	louPan := htmlquery.FindOne(doc, `//li[@class="CLICKDATA"]/a[text()="新房"]`) // new homes
	xiaoQu := htmlquery.FindOne(doc, `//li[@class="CLICKDATA"]/a[text()="小区"]`) // resale homes
	lp := htmlquery.SelectAttr(louPan, "href")
	if lp == "" {
		// Fall back to the alternative page layout.
		louPan2 := htmlquery.FindOne(doc, `//li[@class="new-link-list"]/a[text()="新房"]`) // new homes
		lp = htmlquery.SelectAttr(louPan2, "href")
		lp = strings.TrimRight(lp, "/")
	}
	// Leave lp empty when no link was found, so ParseCityLoupan can skip the city.
	if lp != "" && !strings.HasPrefix(lp, "http") {
		lp = "https:" + lp
	}
	pp.Loupan = lp
	pp.Xiaoqu = htmlquery.SelectAttr(xiaoQu, "href")
	pp.Province = p
	pp.City = c
}
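
Steps 2 and 3 both patch up hrefs by hand (prepending "https:", trimming a trailing slash). A small helper can keep those rules in one place. This is a sketch only: `normalizeURL` is a hypothetical function, and the hostnames in main are made up for the example.

```go
package main

import (
	"fmt"
	"strings"
)

// normalizeURL is a hypothetical helper, not part of the project.
// It turns a scheme-less href such as "//city.example.com/loupan/"
// into an absolute https URL without a trailing slash.
// An empty href stays empty so callers can detect a missing link.
func normalizeURL(href string) string {
	href = strings.TrimSpace(href)
	if href == "" {
		return ""
	}
	if strings.HasPrefix(href, "//") {
		href = "https:" + href
	}
	return strings.TrimRight(href, "/")
}

func main() {
	// Example inputs only; the hosts are placeholders.
	for _, h := range []string{"//city.example.com/loupan/", "https://city.example.com/xiaoqu/", ""} {
		fmt.Printf("%q -> %q\n", h, normalizeURL(h))
	}
}
```

Note that the article's code keeps the trailing slash on the xiaoqu URL and later checks for the "xiaoqu/" suffix, so a helper like this would need that check adjusted before it could be applied there.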

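4. Crawl the developments and parse the fields. The site returns development data as JSON, 10 records per request, which is easy to parse. Note that past a certain page the site starts returning 200 records per request, all of them duplicates, so the program detects this and ends the loop.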

func ProcessLoupanData(data *utils.JData, pp *utils.CityMeta, r bool) {
	// Fill in the fields the database needs and store each record.
	for _, item := range data.Data.List {
		item.CityName = pp.City
		item.Province = pp.Province
		db.InsertRow(&item, true)
		if !r {
			continue
		}
		fmt.Println("cover", item.CoverPic)
		fmt.Println("province", item.Province)
		fmt.Println("city", item.CityName)
		fmt.Println("district", item.District)
		fmt.Println("address", item.Address)
		fmt.Println("business circle", item.CircleName)
		fmt.Println("name", item.Loupan)
		fmt.Println("average price", item.AvePrice)
		fmt.Println("price unit", item.PriceUnit)
		fmt.Println("sale status", item.Status)
		fmt.Println("area", item.Area)
		fmt.Println("room type", item.RoomType)
		fmt.Println("longitude", item.LNG)
		fmt.Println("latitude", item.LAT)
		fmt.Println(strings.Repeat("#", 50))
	}
}
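
The article does not show utils.JData, but the code implies its shape: Data.Total is a string (it goes through strconv.Atoi) and Data.List is a slice of records. A minimal sketch of decoding such a payload follows. The struct names, the JSON keys, and the sample payload are all assumptions made for illustration; the site's real keys may differ.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strconv"
)

// listing and response are assumed shapes for illustration only;
// the real utils.JData and the site's JSON keys may differ.
type listing struct {
	Title     string `json:"title"`
	Longitude string `json:"longitude"`
	Latitude  string `json:"latitude"`
}

type response struct {
	Data struct {
		Total string    `json:"total"` // a string in the payload, hence strconv.Atoi
		List  []listing `json:"list"`
	} `json:"data"`
}

// decodePage parses one page and returns its records with the reported total.
func decodePage(body []byte) ([]listing, int, error) {
	var r response
	if err := json.Unmarshal(body, &r); err != nil {
		return nil, 0, fmt.Errorf("decode page: %w", err)
	}
	total, err := strconv.Atoi(r.Data.Total)
	if err != nil {
		return nil, 0, fmt.Errorf("parse total %q: %w", r.Data.Total, err)
	}
	return r.Data.List, total, nil
}

func main() {
	// Made-up payload in the assumed shape.
	sample := []byte(`{"data":{"total":"23","list":[{"title":"Example Garden","longitude":"114.30","latitude":"30.59"}]}}`)
	items, total, err := decodePage(sample)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(total, len(items), items[0].Title)
}
```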

func ParseCityLoupan(r bool, t int, pp *utils.CityMeta) {
	// Crawl the new-home (development) listings.
	if pp.Loupan == "" {
		log.Printf("%s-%s has no new-home records", pp.Province, pp.City)
		return
	}
	url := pp.Loupan + "/pg1/?_t=1"
	b, err := engine.Download(url)
	if err != nil {
		log.Printf("request for the first new-home page failed: %v", err)
		return
	}
	var data utils.JData
	err = json.Unmarshal(*b, &data)
	if err != nil {
		log.Printf("failed to parse new-home page 1: %v\n", err)
		return
	}
	total, err := strconv.Atoi(data.Data.Total)
	if err != nil {
		log.Fatalln("cannot parse the new-home total", data.Data.Total, err)
	}
	ProcessLoupanData(&data, pp, r)
	// 10 records per page; the loop runs while page < pages, hence the +1.
	pages := int(math.Ceil(float64(total)/10)) + 1
	count := 0
	for page := 2; page < pages; page++ {
		var data utils.JData
		time.Sleep(time.Second * time.Duration(t))
		url := fmt.Sprintf("%s/pg%d/?_t=1", pp.Loupan, page)
		b, err := engine.Download(url)
		if err != nil {
			log.Printf("request for page %d failed\n", page)
			continue
		}
		err = json.Unmarshal(*b, &data)
		if err != nil {
			log.Printf("failed to parse page %d\n", page)
			continue
		}
		// More than 10 records means the site has started returning duplicates.
		if len(data.Data.List) > 10 {
			count++
		}
		if count == 2 {
			fmt.Println("duplicate pages detected, stopping")
			break
		}
		ProcessLoupanData(&data, pp, r)
	}
}
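
The paging arithmetic and the stop rule above can be isolated into two small functions, which makes them easy to check without touching the network. This is a sketch under the article's stated numbers (10 records per page, stop on the second oversized page); `pageCount` and `shouldStop` are illustrative names.

```go
package main

import "fmt"

// pageCount returns how many pages are needed for total records,
// using integer ceiling division instead of math.Ceil on floats.
func pageCount(total, perPage int) int {
	if total <= 0 || perPage <= 0 {
		return 0
	}
	return (total + perPage - 1) / perPage
}

// shouldStop mirrors the article's heuristic: a page with more than
// perPage records counts as an oversized duplicate page, and the crawl
// stops once limit such pages have been seen.
// It returns the updated counter and whether to stop.
func shouldStop(oversized, pageLen, perPage, limit int) (int, bool) {
	if pageLen > perPage {
		oversized++
	}
	return oversized, oversized >= limit
}

func main() {
	fmt.Println(pageCount(95, 10), pageCount(100, 10), pageCount(31, 30))

	// Simulated page sizes: two normal pages, then oversized duplicates.
	oversized := 0
	for i, n := range []int{10, 10, 200, 200, 200} {
		var stop bool
		oversized, stop = shouldStop(oversized, n, 10, 2)
		if stop {
			fmt.Printf("stopping at simulated page %d\n", i+1)
			break
		}
	}
}
```

As in the article's loop, the first oversized page is still processed and only the second one ends the crawl; the database-level deduplication in step 7 absorbs the repeated rows.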

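5. Crawl the residential communities and parse the fields. The site returns community listings as HTML, so the fields are extracted with XPath through the htmlquery library.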

func ParsePageRegionXiaoqu(r bool, url string, areaName string, p string, c string) {
	rsp, err := engine.GetRsp(url)
	if err != nil {
		log.Printf("failed to crawl %s\n", url)
		return
	}
	defer rsp.Body.Close()
	doc, err := htmlquery.Parse(rsp.Body)
	if err != nil {
		log.Printf("failed to parse %s: %v\n", url, err)
		return
	}
	items := htmlquery.Find(doc, `//li[contains(@class, "xiaoquListItem")]`)
	for _, item := range items {
		var data utils.MData
		titleNode := htmlquery.FindOne(item, `.//a[@class="maidian-detail"]`)
		title := htmlquery.SelectAttr(titleNode, "title")
		if title == "" {
			continue
		}
		// Use a relative path, as for the title node, and skip items without a business circle.
		circleNode := htmlquery.FindOne(item, `.//a[@class="bizcircle"]`)
		if circleNode == nil {
			continue
		}
		circle := strings.TrimSpace(htmlquery.InnerText(circleNode))
		if circle == "" {
			continue
		}
		// The listing has no coordinates, so geocode "district name".
		lng, lat := engine.BaiduAPI(c, areaName+" "+title)
		if lng == 0 || lat == 0 {
			continue
		}
		data.Province = p
		data.CityName = c
		data.Loupan = title
		data.District = areaName
		data.CircleName = circle
		data.Address = circle + title
		data.LNG = strconv.FormatFloat(lng, 'f', 6, 64)
		data.LAT = strconv.FormatFloat(lat, 'f', 6, 64)
		if r {
			fmt.Println("cover", data.CoverPic)
			fmt.Println("province", data.Province)
			fmt.Println("city", data.CityName)
			fmt.Println("district", data.District)
			fmt.Println("address", data.Address)
			fmt.Println("business circle", data.CircleName)
			fmt.Println("name", data.Loupan)
			fmt.Println("average price", data.AvePrice)
			fmt.Println("price unit", data.PriceUnit)
			fmt.Println("sale status", data.Status)
			fmt.Println("area", data.Area)
			fmt.Println("room type", data.RoomType)
			fmt.Println("longitude", data.LNG)
			fmt.Println("latitude", data.LAT)
			fmt.Println(strings.Repeat("#", 50))
		}
		db.InsertRow(&data, false)
	}
}

func ParseRegionXiaoqu(r bool, t int, baseURL string, areaName string, p string, c string) {
	// Fetch page 1 first to read the district's community total.
	page := 1
	url := baseURL + strconv.Itoa(page)
	rsp, err := engine.GetRsp(url)
	if err != nil {
		log.Printf("failed to crawl %s\n", url)
		return
	}
	defer rsp.Body.Close()
	doc, err := htmlquery.Parse(rsp.Body)
	if err != nil {
		log.Printf("failed to parse %s: %v\n", url, err)
		return
	}
	totalNode := htmlquery.FindOne(doc, `//h2[contains(@class, "total")]/span`)
	if totalNode == nil {
		log.Printf("no community total found on %s\n", url)
		return
	}
	total, err := strconv.Atoi(strings.TrimSpace(htmlquery.InnerText(totalNode)))
	if err != nil {
		log.Printf("community total is not a valid number: %v\n", err)
		return
	}
	// The code assumes 30 communities per page.
	pages := int(math.Ceil(float64(total) / 30))
	for page := 1; page <= pages; page++ {
		time.Sleep(time.Second * time.Duration(t))
		url := baseURL + strconv.Itoa(page)
		ParsePageRegionXiaoqu(r, url, areaName, p, c)
	}
}

// ParseCityXiaoqu crawls the resale-home (community) listings of a city.
func ParseCityXiaoqu(r bool, t int, pp *utils.CityMeta) {
	if !strings.HasSuffix(pp.Xiaoqu, "xiaoqu/") {
		log.Printf("%s-%s has no community records", pp.Province, pp.City)
		return
	}
	url := pp.Xiaoqu + "pg1/"
	b, err := engine.Download(url)
	if err != nil {
		log.Printf("request for the first community page failed: %v", err)
		return
	}
	// Group 1 is the district path ending in "pg", group 2 is the district name.
	pt := regexp.MustCompile(`href="/xiaoqu/([a-z0-9]{2,20}/pg)1".*?>(.*?)</a>`)
	retLis := pt.FindAllStringSubmatch(string(*b), -1)
	mp := make(map[string]string) // district URLs after deduplication
	for _, v := range retLis {
		mp[pp.Xiaoqu+v[1]] = v[2]
	}
	for aURL, aName := range mp {
		fmt.Println("current district", aURL, aName)
		ParseRegionXiaoqu(r, t, aURL, aName, pp.Province, pp.City)
	}
}
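
The district discovery in ParseCityXiaoqu is plain regular-expression matching plus a map for deduplication, so it can be tried on a literal string. The sketch below reuses the article's pattern; the HTML fragment, the base URL, and the `extractDistricts` name are made up for the example.

```go
package main

import (
	"fmt"
	"regexp"
	"sort"
)

// districtLink matches anchors such as <a href="/xiaoqu/jiangan/pg1" ...>江岸</a>.
// Group 1 is the district path ending in "pg", group 2 is the link text.
var districtLink = regexp.MustCompile(`href="/xiaoqu/([a-z0-9]{2,20}/pg)1".*?>(.*?)</a>`)

// extractDistricts returns a map from paging base URL to district name.
// Using a map drops links that appear more than once on the page.
func extractDistricts(base, html string) map[string]string {
	out := make(map[string]string)
	for _, m := range districtLink.FindAllStringSubmatch(html, -1) {
		out[base+m[1]] = m[2]
	}
	return out
}

func main() {
	// The HTML fragment and host below are made up for the example.
	page := `<a href="/xiaoqu/jiangan/pg1" title="a">江岸</a>
<a href="/xiaoqu/wuchang/pg1" title="b">武昌</a>
<a href="/xiaoqu/jiangan/pg1" title="a">江岸</a>`
	districts := extractDistricts("https://example.com/xiaoqu/", page)

	urls := make([]string, 0, len(districts))
	for u := range districts {
		urls = append(urls, u)
	}
	sort.Strings(urls) // map iteration order is random, so sort for stable output
	for _, u := range urls {
		fmt.Println(u, districts[u])
	}
}
```

Each key ends in "pg", which is why ParseRegionXiaoqu can build a page URL by appending the page number directly.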

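6. Whether crawling developments or communities, the returned data may lack coordinates. In that case the Baidu API is called to look up the latitude and longitude for the address (this requires a Baidu developer account).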

func BaiduAPI(city string, addr string) (lng, lat float64) {
	// Call the Baidu API to geocode the address.
	if useIdx >= len(utils.AK) {
		log.Fatalln("no usable ak left")
	}
	params := url.Values{}
	params.Set("address", addr)
	params.Set("output", "json")
	params.Set("city", city)
	params.Set("ak", utils.AK[useIdx])
	toURL := apiURL + "?" + params.Encode()
	req, err := http.NewRequest("GET", toURL, nil)
	if err != nil {
		return 0, 0
	}
	req.Header.Set("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.135 Safari/537.36")
	rsp, err := client.Do(req)
	if err != nil {
		return 0, 0
	}
	defer rsp.Body.Close()
	b, err := ioutil.ReadAll(rsp.Body)
	if err != nil {
		return 0, 0
	}
	var ret apiRet
	err = json.Unmarshal(b, &ret)
	if err != nil {
		return 0, 0
	}
	// This message means the daily quota is exhausted: switch to the next ak and retry.
	if ret.Msg == "天配额超限,限制访问" {
		log.Println(utils.AK[useIdx], ret.Msg)
		useIdx++
		return BaiduAPI(city, addr)
	}
	return ret.Result.Location.LNG, ret.Result.Location.LAT
}

7. Store the data in MySQL, using the MD5 hash of the coordinates to deduplicate.

func generateID(data *utils.MData) string {
	md := md5.New()
	md.Write([]byte(strings.Join([]string{data.LNG, data.LAT}, "")))
	ret := md.Sum(nil)
	return fmt.Sprintf("%x", ret)
}
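
generateID joins longitude and latitude with an empty separator before hashing. When the two strings do not have a fixed number of decimals, different pairs can concatenate to the same text, for example ("1.2", "34.5") and ("1.23", "4.5"). A minimal sketch of a variant with a separator follows; `coordID` is an illustrative name, and switching to it would change the IDs of rows already stored.

```go
package main

import (
	"crypto/md5"
	"encoding/hex"
	"fmt"
)

// coordID hashes longitude and latitude into a 32-character hex ID.
// The "," separator is an addition to the article's version: it keeps
// pairs such as ("1.2", "34.5") and ("1.23", "4.5") from producing
// the same input string before hashing.
func coordID(lng, lat string) string {
	sum := md5.Sum([]byte(lng + "," + lat))
	return hex.EncodeToString(sum[:])
}

func main() {
	a := coordID("1.2", "34.5")
	b := coordID("1.23", "4.5")
	fmt.Println(len(a), a != b)
}
```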

// InsertRow stores one record, skipping coordinates that were already inserted.
func InsertRow(data *utils.MData, checkGeo bool) {
	// If either coordinate is empty, geocode the address through the Baidu API.
	if data.LNG == "" || data.LAT == "" {
		if !checkGeo {
			return
		}
		if data.CityName == "" || data.Address == "" || data.Loupan == "" {
			return
		}
		lng, lat := engine.BaiduAPI(data.CityName, fmt.Sprintf("%s %s", data.Address, data.Loupan))
		if lng == 0 || lat == 0 {
			return
		}
		data.LNG = strconv.FormatFloat(lng, 'f', 6, 64)
		data.LAT = strconv.FormatFloat(lat, 'f', 6, 64)
	}
	iid := generateID(data)
	if _, ok := mp[iid]; ok {
		// Skip duplicate coordinates.
		return
	}
	mp[iid] = ""
	sqlStr := "insert into lbs(id, province, city, region, avenue, community_name, community_addr, longitude, dimension, status) values (?,?,?,?,?,?,?,?,?,?)"
	_, err := sdb.Exec(sqlStr, iid, data.Province, data.CityName, data.District, data.CircleName, data.Loupan, data.Address, data.LNG, data.LAT, 0)
	if err != nil {
		fmt.Printf("insert failed, err:%v\n", err)
		return
	}
}

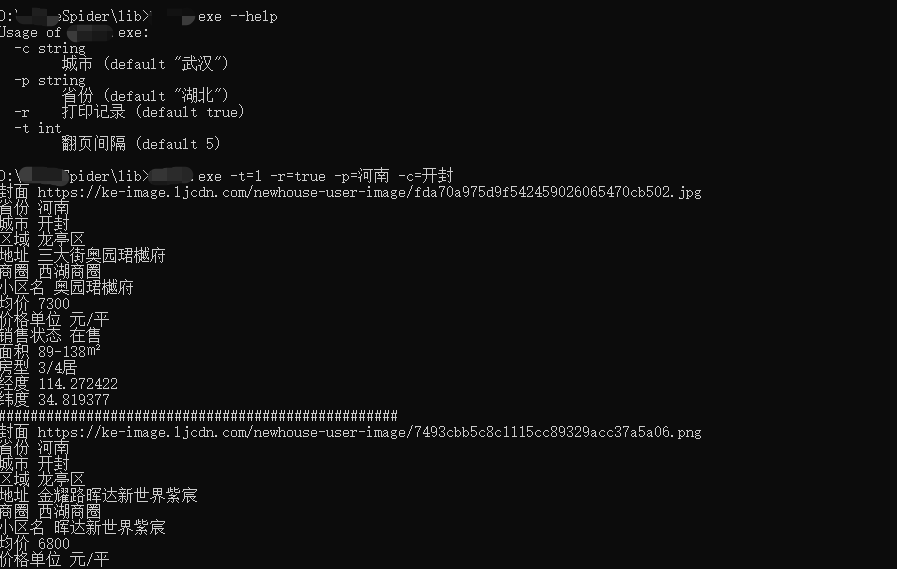

编译后执行后效果如下:

The complete code is available in the GitHub project: https://github.com/Tarantiner/golang_beike