1.读取

源代码:

#读取文件 file_path=r'D:PycharmProjects201706120186罗奕涛dataSMSSpamCollection' sms=open(file_path,'r',encoding='utf-8') sms_data=[] sms_label=[] csv_reader=csv.reader(sms,delimiter=' ') for line in csv_reader: sms_label.append(line[0]) sms_data.append(preprocessing(line[1]))#对每封邮件做预处理 sms.close() print(sms_label) print(sms_data)

2.数据预处理

源代码:

import csv import nltk from nltk.corpus import stopwords from nltk.stem import WordNetLemmatizer print(nltk.__doc__)#输出版本号 def get_wordnet_pos(treebank_tag):#根据词性,生成还原参数pos if treebank_tag.startswith('J'): return nltk.corpus.wordnet.ADJ elif treebank_tag.startswith('V'): return nltk.corpus.wordnet.VERB elif treebank_tag.startswith('N'): return nltk.corpus.wordnet.NOUN elif treebank_tag.startswith('R'): return nltk.corpus.wordnet.ADV else: return nltk.corpus.wordnet.NOUN #预处理 def preprocessing(text): tokens = [word for sent in nltk.sent_tokenize(text) for word in nltk.word_tokenize(sent)]#分词 stops = stopwords.words("english")#停用词 tokens = [token for token in tokens if token not in stops]#去掉停用词 tokens = [token.lower() for token in tokens if len(token) >= 3]#将大写字母变为小写 tag=nltk.pos_tag(tokens)#词性 lmtzr = WordNetLemmatizer() tokens = [lmtzr.lemmatize(token,pos=get_wordnet_pos(tag[i][1])) for i,token in enumerate(tokens)] preprocessed_text = ''.join(tokens) return preprocessed_text

3.数据划分—训练集和测试集数据划分

from sklearn.model_selection import train_test_split

x_train,x_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=0, stratify=y_train)

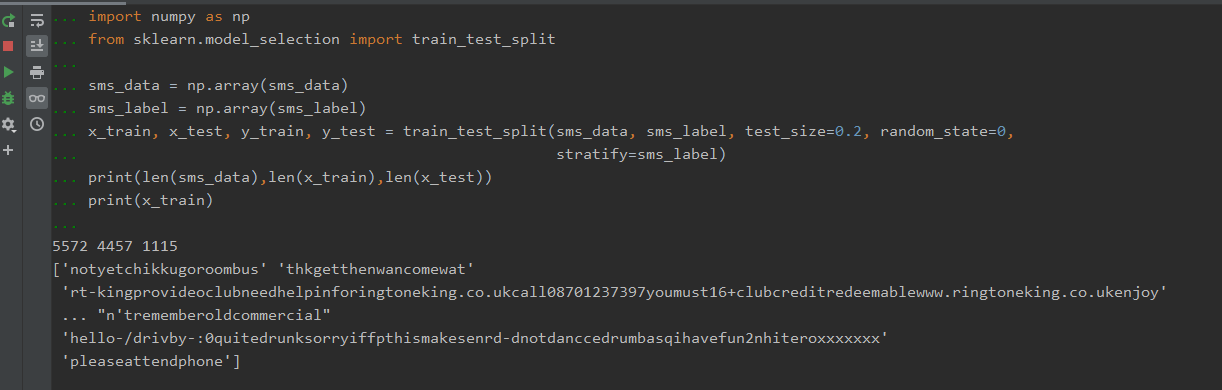

源代码:

# 按0.8:0.2比例分为训练集和测试集 import numpy as np from sklearn.model_selection import train_test_split sms_data = np.array(sms_data) sms_label = np.array(sms_label) x_train, x_test, y_train, y_test = train_test_split(sms_data, sms_label, test_size=0.2, random_state=0, stratify=sms_label) print(len(sms_data),len(x_train),len(x_test)) print(x_train)

结果:

4.文本特征提取

sklearn.feature_extraction.text.CountVectorizer

sklearn.feature_extraction.text.TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf2 = TfidfVectorizer()

观察邮件与向量的关系

向量还原为邮件

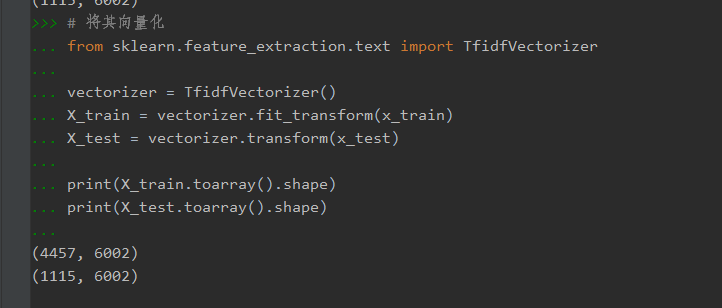

源代码:

# 将其向量化 from sklearn.feature_extraction.text import TfidfVectorizer vectorizer = TfidfVectorizer() X_train = vectorizer.fit_transform(x_train) X_test = vectorizer.transform(x_test) print(X_train.toarray().shape) print(X_test.toarray().shape)

结果:

4.模型选择

from sklearn.naive_bayes import MultinomialNB

from sklearn.naive_bayes import MultinomialNB

说明为什么选择这个模型?

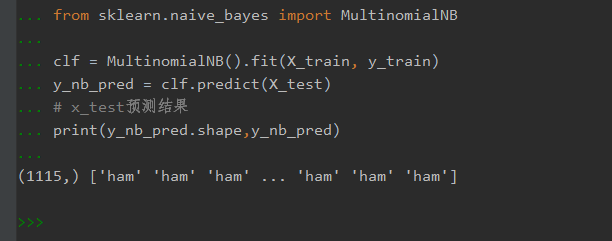

多项式朴素贝叶斯分类器适用于具有离散特征的分类(例如,用于文本分类的字数统计)

源代码:

from sklearn.naive_bayes import MultinomialNB clf = MultinomialNB().fit(X_train, y_train) y_nb_pred = clf.predict(X_test) # x_test预测结果 print(y_nb_pred.shape,y_nb_pred)

结果:

5.模型评价:混淆矩阵,分类报告

from sklearn.metrics import confusion_matrix

confusion_matrix = confusion_matrix(y_test, y_predict)

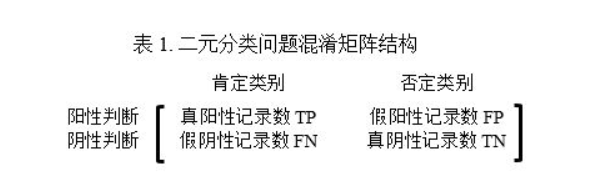

说明混淆矩阵的含义

混淆矩阵是一个2 × 2的情形分析表,显示以下四组记录的数目:作出正确判断的肯定记录(真阳性)、作出错误判断的肯定记录(假阴性)、作出正确判断的否定记录(真阴性)以及作出错误判断的否定记录(假阳性)

from sklearn.metrics import classification_report

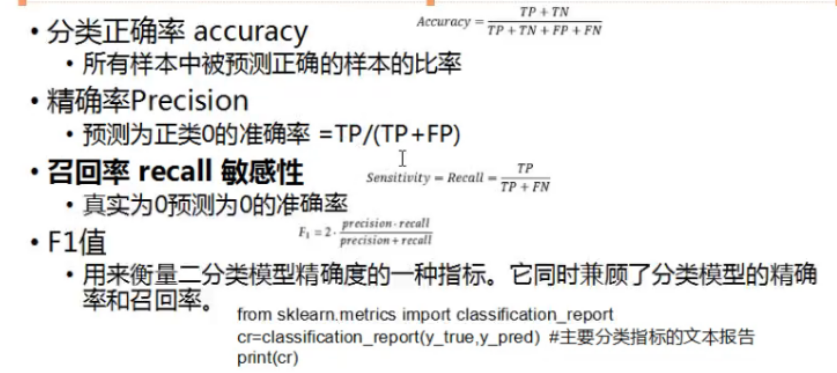

说明准确率、精确率、召回率、F值分别代表的意义

源代码:

from sklearn.metrics import confusion_matrix from sklearn.metrics import classification_report # 混淆矩阵 cm = confusion_matrix(y_test, y_nb_pred) print('nb_confusion_matrix:') print(cm) # 主要分类指标的文本报告 cr = classification_report(y_test, y_nb_pred) print('nb_classification_report:') print(cr)

结果:

6.比较与总结

如果用CountVectorizer进行文本特征生成,与TfidfVectorizer相比,效果如何?

CountVectorizer只能转化英文的,不能转化中文的,因为是靠空格识别的。