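A few days ago I wrote a post in which a network written in PyTorch was converted to a .wts file and rebuilt in TensorRT with the C++ API (see the earlier post). Writing the network by hand with the C++ API is time-consuming, so in this post I take the same network, convert it to ONNX format, and call the ONNX model from Python, experimenting with the export parameters, such as whether the input is declared static or dynamic. The post has two parts: first, exporting and calling a static ONNX model; second, exporting and calling a dynamic one.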

I. Exporting a static ONNX model

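Call torch.onnx.export directly on the model loaded from the .pth file. input_names assigns names to the graph's input nodes in order, and the input name chosen here, input_data, must be the same name used as the feed key when the ONNX model is run. For a model with several inputs, i.e. forward(input1, input2), pass the inputs as the tuple (input1, input2) and give one name per input in input_names, as follows: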

    torch.onnx.export(
        model,
        (input1, input2),
        "./trynet.onnx",
        verbose=True,
        input_names=input_names,
        output_names=output_names,
    )
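To illustrate how the names line up, here is a minimal sketch that derives input_names from the parameter names of forward and builds a runtime feed dictionary with the same keys. It uses only the standard library. TwoInputNet, names_from_forward and the placeholder values are hypothetical stand-ins, and nothing here calls torch or onnxruntime.

```python
import inspect


class TwoInputNet:
    """Hypothetical stand-in for a model whose forward takes two inputs."""

    def forward(self, input1, input2):
        return input1, input2


def names_from_forward(model):
    # The signature of a bound method already excludes `self`.
    return list(inspect.signature(model.forward).parameters)


model = TwoInputNet()
input_names = names_from_forward(model)

# Placeholder values standing in for the tensors passed as (input1, input2).
example_args = ("tensor_for_input1", "tensor_for_input2")

# The runtime feed must use exactly the names given at export time.
feed = dict(zip(input_names, example_args))
print(input_names)
print(feed)
```

Deriving the names in one place is only one possible convention. The point is that the list passed as input_names and the keys of the feed dictionary come from the same source, so they cannot drift apart.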

The static case is simple, so here is the code directly:

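    import torch


    def covert2onnx():
        # Static ONNX export: the input shape is fixed to that of input_data,
        # so feeding a different shape at inference time raises an error.
        input_data = torch.randn(2, 3, 224, 224).cuda()
        model = torch.load(r'E:\data_utils\TensorrtModel\trynet.pth').cuda()
        # Names are assigned in order; the first one, "input_data", names the image input.
        input_names = ["input_data"] + ["called_%d" % i for i in range(2)]
        output_names = ["output_data"]
        torch.onnx.export(
            model,
            input_data,
            "./trynet.onnx",
            verbose=True,
            input_names=input_names,
            output_names=output_names,
        )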

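The ONNX model can be called with the code below. Note the following points:

① The input and output names must be the same as the names fixed when exporting to ONNX.

② The input must keep the static shape used at export time.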
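Both points can be checked before calling the model. The following is a minimal sketch, assuming the expected names and shapes are written down by hand. validate_feed is a hypothetical helper, not part of onnxruntime, and it works on plain shape tuples so that it runs without any model file.

```python
def validate_feed(expected, feed_shapes):
    """Check feed names and shapes against what a static model expects.

    expected:    dict of input name -> fixed shape chosen at export time
    feed_shapes: dict of input name -> shape of the array about to be fed
    """
    missing = sorted(set(expected) - set(feed_shapes))
    unexpected = sorted(set(feed_shapes) - set(expected))
    if missing or unexpected:
        raise ValueError(
            f"input names differ: missing={missing}, unexpected={unexpected}"
        )
    for name, shape in expected.items():
        if tuple(feed_shapes[name]) != tuple(shape):
            raise ValueError(
                f"{name}: expected shape {tuple(shape)}, got {tuple(feed_shapes[name])}"
            )


expected = {"input_data": (2, 3, 224, 224)}

# Same name and same shape as at export time: passes silently.
validate_feed(expected, {"input_data": (2, 3, 224, 224)})

# A different batch size violates point ②.
try:
    validate_feed(expected, {"input_data": (1, 3, 224, 224)})
except ValueError as err:
    print(err)
```

In real use, the expected dictionary could probably be filled from the name and shape attributes of the entries returned by session.get_inputs(), rather than typed by hand.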

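    import onnxruntime
    import torch


    def runonnx():
        # Same shape as the input used at export time (static model).
        image = torch.randn(2, 3, 224, 224).cuda()
        session = onnxruntime.InferenceSession("./trynet.onnx")
        session.get_modelmeta()
        # onnxruntime takes NumPy arrays keyed by input name.
        output2 = session.run(['output_data'], {"input_data": image.cpu().numpy()})
        print(output2[0].shape)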

II. Exporting a dynamic ONNX model

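Dynamic export is almost the same as static export, so I will not explain the shared parts again. "Dynamic" simply means flexible input: being able to change the batch size and the image height and width. To allow this, set the dynamic_axes parameter, whose form is shown in the code below. The key input_data is the input name, and the value {0: 'batch_size', 2 : 'in_width', 3: 'int_height'} is a dictionary mapping each axis index that should be dynamic to a label; only the axes listed in the dictionary become dynamic. The labels are just names: for an NCHW input, axis 2 is the height and axis 3 is the width, whatever they are called. output_data can be specified in the same way as input_data.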
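As a rough illustration of what such a dictionary expresses, the sketch below builds a dynamic_axes mapping for both the input and the output, then checks a concrete shape against a declared shape in which dynamic axes are represented by their labels. build_dynamic_axes and shape_is_compatible are hypothetical helpers, and the labels "height" and "width" are my own choice. The code is pure Python and does not call torch or onnxruntime.

```python
def build_dynamic_axes(tensor_names, axis_labels):
    # Give every listed tensor its own copy of the axis -> label mapping.
    return {name: dict(axis_labels) for name in tensor_names}


def shape_is_compatible(declared, actual):
    # Integer dims must match exactly; labelled (string) dims accept any size.
    if len(declared) != len(actual):
        return False
    return all(isinstance(d, str) or d == a for d, a in zip(declared, actual))


dynamic_axes = build_dynamic_axes(
    ["input_data", "output_data"],
    {0: "batch_size", 2: "height", 3: "width"},
)
print(dynamic_axes)

# Declared input shape after a dynamic export: only the channel axis stays fixed.
declared_input = ["batch_size", 3, "height", "width"]
print(shape_is_compatible(declared_input, (2, 3, 280, 224)))
print(shape_is_compatible(declared_input, (2, 1, 280, 224)))
```

The resulting dictionary has the form expected by the dynamic_axes argument of torch.onnx.export. Any axis left out of it, here the channel axis, keeps the size of the example input.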

    import torch


    def covert2onnx_dynamic():
        # Dynamic ONNX export: the input shape (e.g. batch_size) can change at inference time.
        input_data = torch.randn(1, 3, 224, 224).cuda()
        model = torch.load(r'E:\data_utils\TensorrtModel\trynet.pth').cuda()
        input_names = ["input_data"] + ["called_%d" % i for i in range(2)]
        output_names = ["output_data"]
        torch.onnx.export(
            model,
            input_data,
            "./trynet.onnx",
            verbose=True,
            input_names=input_names,
            output_names=output_names,
            # Axis index -> label; the labels are arbitrary names for the dynamic axes.
            dynamic_axes={"input_data": {0: 'batch_size', 2 : 'in_width', 3: 'int_height'}, }
        )
        # With dynamic_axes={"input_data": {0: "batch_size"}, "output_data": {0: "batch_size"}}
        # only batch_size can be changed.

The full code for the static and dynamic exports is as follows, for reference:

    from torch import nn
    import struct
    from torchsummary import summary
    import torch
    import torchvision


    class trynet(nn.Module):
        def __init__(self):
            super(trynet, self).__init__()
            self.cov1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1)
            self.cov2 = nn.Conv2d(64, 2, kernel_size=3, stride=1, padding=1)

        def forward(self, x):
            x = self.cov1(x)
            x = self.cov2(x)
            return x


    def infer():
        # print('cuda device count: ', torch.cuda.device_count())
        net = torch.load(r'E:\data_utils\TensorrtModel\trynet.pth')
        # net = torch.load('./vgg.pth')
        net = net.cuda()
        net = net.eval()
        print('model: ', net)
        # print('state dict: ', net.state_dict().keys())
        tmp = torch.ones(1, 3, 24, 24).cuda()
        print('input: ', tmp)
        out = net(tmp)
        print('output:', out)
        # summary(net, (3, 224, 224))
        # return
        # Write the weights to a .wts file: one line per tensor, values as big-endian float hex.
        with open("./trynet.wts", 'w') as f:
            f.write("{}\n".format(len(net.state_dict().keys())))
            for k, v in net.state_dict().items():
                print('key: ', k)
                print('value: ', v.shape)
                vr = v.reshape(-1).cpu().numpy()
                f.write("{} {}".format(k, len(vr)))
                for vv in vr:
                    f.write(" ")
                    f.write(struct.pack(">f", float(vv)).hex())
                f.write("\n")


    def main():
        # Build the network and save the whole model to a .pth file.
        print('cuda device count: ', torch.cuda.device_count())
        net = trynet()
        net = net.eval()
        net = net.cuda()
        tmp = torch.ones(1, 3, 24, 24).cuda()
        out = net(tmp)
        print('vgg out:', out.shape)
        torch.save(net, "./trynet.pth")


    def covert2onnx():
        # Static ONNX export: the input shape is fixed to that of input_data,
        # so feeding a different shape at inference time raises an error.
        input_data = torch.randn(2, 3, 224, 224).cuda()
        model = torch.load(r'E:\data_utils\TensorrtModel\trynet.pth').cuda()
        input_names = ["input_data"] + ["called_%d" % i for i in range(2)]
        output_names = ["output_data"]
        torch.onnx.export(
            model,
            input_data,
            "./trynet.onnx",
            verbose=True,
            input_names=input_names,
            output_names=output_names,
        )


    def covert2onnx_dynamic():
        # Dynamic ONNX export: the input shape (e.g. batch_size) can change at inference time.
        input_data = torch.randn(1, 3, 224, 224).cuda()
        model = torch.load(r'E:\data_utils\TensorrtModel\trynet.pth').cuda()
        input_names = ["input_data"] + ["called_%d" % i for i in range(2)]
        output_names = ["output_data"]
        torch.onnx.export(
            model,
            input_data,
            "./trynet.onnx",
            verbose=True,
            input_names=input_names,
            output_names=output_names,
            # Axis index -> label; the labels are arbitrary names for the dynamic axes.
            dynamic_axes={"input_data": {0: 'batch_size', 2 : 'in_width', 3: 'int_height'}, }
        )
        # With dynamic_axes={"input_data": {0: "batch_size"}, "output_data": {0: "batch_size"}}
        # only batch_size can be changed.


    if __name__ == '__main__':
        # main()
        # infer()
        # covert2onnx()
        covert2onnx_dynamic()

The code for calling the ONNX model is as follows:

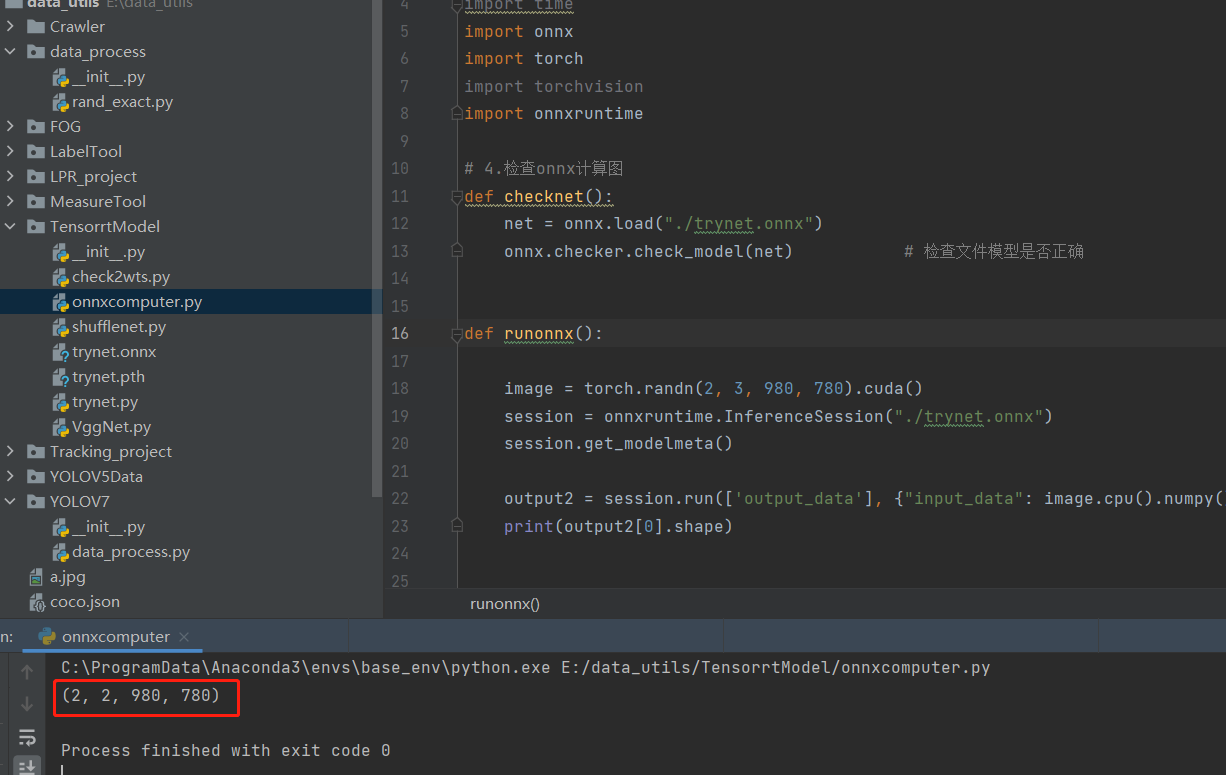

    import time
    import onnx
    import torch
    import torchvision
    import onnxruntime


    # Check the ONNX computation graph
    def checknet():
        net = onnx.load("./trynet.onnx")
        onnx.checker.check_model(net)  # verify that the model file is well-formed


    def runonnx():
        # 280 x 224 differs from the 224 x 224 used at export, so this relies on the dynamic export.
        image = torch.randn(2, 3, 280, 224).cuda()
        session = onnxruntime.InferenceSession("./trynet.onnx")
        session.get_modelmeta()
        output2 = session.run(['output_data'], {"input_data": image.cpu().numpy()})
        print(output2[0].shape)


    if __name__ == '__main__':
        runonnx()

Part of the output is shown below: