Volume

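After a Pod is created, the data inside its containers is ephemeral: when a container is shut down, its data disappears with it. To keep container data for the long term, you need a volume.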

A Kubernetes volume gives containers the ability to mount external storage.

To use a volume in a Pod, you set two things: the volume source (spec.volumes) and the mount point (spec.containers[].volumeMounts).
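A minimal sketch of how the two settings fit together. It assumes an NFS export; the server address and path are borrowed from the NFS provisioner example later in this document, and the Pod name and volume name are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-demo              # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:                # mount point: where the volume appears inside the container
    - name: data                 # must match a name listed under spec.volumes
      mountPath: /usr/share/nginx/html
  volumes:                       # volume source: where the data actually lives
  - name: data
    nfs:
      server: 192.168.1.63       # assumed NFS server address
      path: /ifs/kubernetes      # assumed exported directory
```

The volume name is the only link between the two sections, so a typo there is the first thing to check when a Pod fails to start with a mount error.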

Volume types supported by Kubernetes:

https://v1-17.docs.kubernetes.io/docs/concepts/storage/volumes/

• awsElasticBlockStore

• azureDisk

• azureFile

• cephfs

• cinder

• configMap

• csi

• downwardAPI

• emptyDir

• fc (fibre channel)

• flexVolume

• flocker

• gcePersistentDisk

• gitRepo (deprecated)

• glusterfs

• hostPath

• iscsi

• local

• nfs

• persistentVolumeClaim

• projected

• portworxVolume

• quobyte

• rbd

• scaleIO

• secret

• storageos

• vsphereVolume

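These types can be roughly grouped as follows:

1 Local volumes: usable only on the current node, not across nodes: hostPath, emptyDir

2 Network volumes: reachable from any node: nfs, rbd, cephfs, glusterfs

3 Public cloud volumes: awsElasticBlockStore, azureDisk

4 Kubernetes resources: secret, configMap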

emptyDir

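An emptyDir volume creates a directory on the node that hosts the Pod and mounts it into the Pod's containers. When the Pod is deleted, the volume is deleted with it.

Use case: sharing data between the containers of one Pod.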
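The sharing use case can be sketched as a Pod with two containers that mount the same emptyDir volume. This is an illustrative sketch, not taken from the original notes: the Pod name, container names, busybox image, and file path are all assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo            # hypothetical name
spec:
  containers:
  - name: writer
    image: busybox
    # append a timestamp to a file in the shared directory every 5 seconds
    command: ["sh", "-c", "while true; do date >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: shared
      mountPath: /data
  - name: reader
    image: busybox
    # make sure the file exists, then follow what the writer appends to it
    command: ["sh", "-c", "touch /data/out.txt; tail -f /data/out.txt"]
    volumeMounts:
    - name: shared
      mountPath: /data
  volumes:
  - name: shared
    emptyDir: {}                 # created with the Pod, removed when the Pod is deleted
```

With this running, `kubectl logs emptydir-demo -c reader` should print the timestamps that the writer container produces, which shows that both containers see the same directory.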

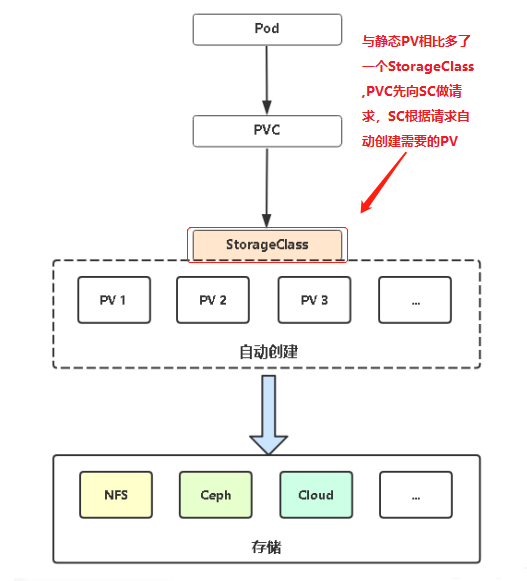

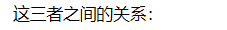

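2 Implementing dynamic PVs

Drawbacks of static PVCs:

PVs have to be created manually in advance, and the PV that a PVC ends up bound to may be larger than what the PVC requested. Dynamic PVCs solve this problem: when a PVC needs a PV, a PV that matches the PVC's request is created automatically.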
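The size mismatch can be sketched with one pre-created PV and one smaller claim. This is a hedged illustration: the object names and sizes are hypothetical, the NFS server and path are the ones used elsewhere in this document, and it assumes the cluster has no default StorageClass (otherwise the claim could be provisioned dynamically instead).

```yaml
# A PV created by hand, ahead of time
apiVersion: v1
kind: PersistentVolume
metadata:
  name: static-pv-10g            # hypothetical name
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  nfs:
    server: 192.168.1.63         # assumed NFS server address
    path: /ifs/kubernetes        # assumed exported directory
---
# A claim that asks for only half of that
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-pvc-5g            # hypothetical name
spec:
  # no storageClassName: assumes no default StorageClass exists in the cluster
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```

If this PV is the only candidate, the claim binds to the whole 10Gi volume, and `kubectl get pvc` reports a capacity of 10Gi even though only 5Gi was requested. A PV is bound to at most one claim, so the remainder cannot be handed to another PVC.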

Characteristics of dynamic PVCs:

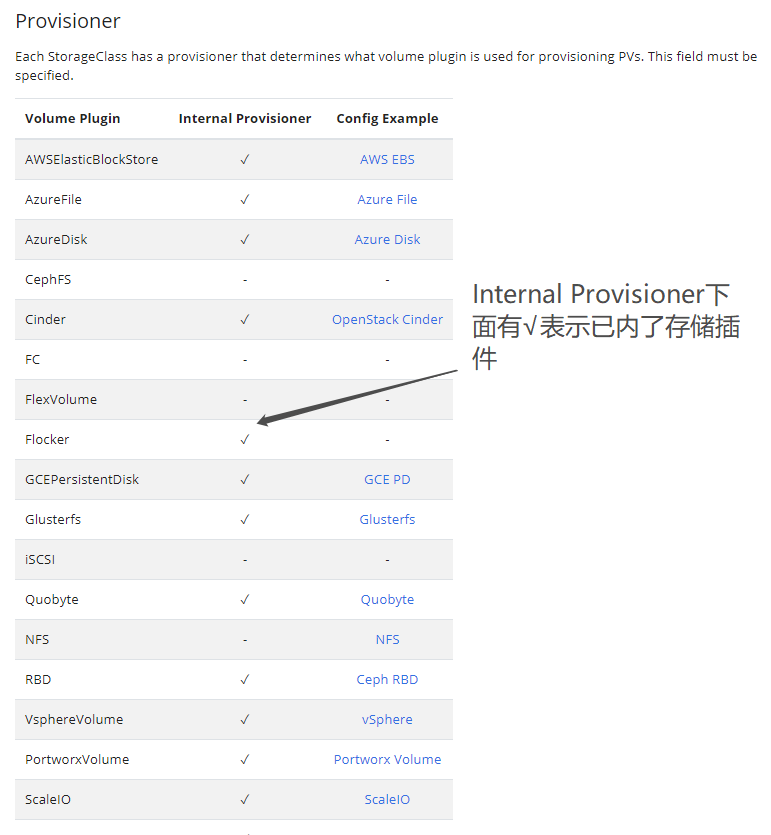

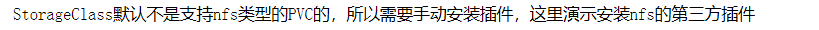

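The mechanism is built around the StorageClass API object. A StorageClass declares a storage plugin (the provisioner), and that plugin is what creates the PVs. Not every storage type supports automatic creation through a StorageClass.

Storage types supported by StorageClass: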

https://kubernetes.io/docs/concepts/storage/storage-classes/

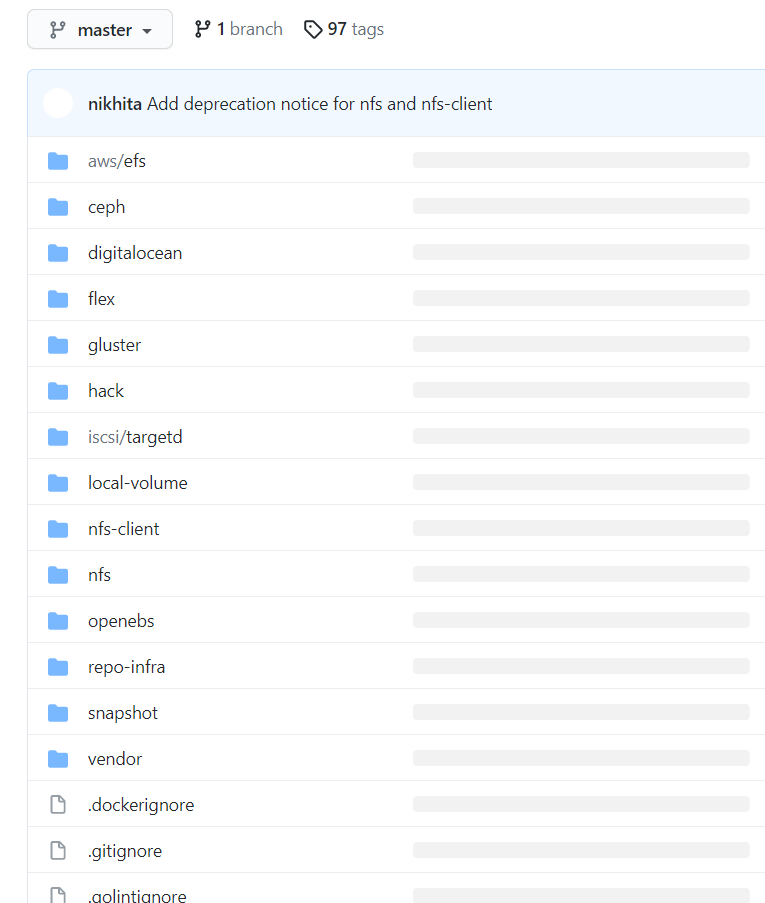

If a storage type in the table above does not support dynamic PVs but your application has to use it, consider a third-party storage plugin:

https://github.com/kubernetes-retired/external-storage/

    [root@master NFS]# rz -E
    rz waiting to receive.
    [root@master NFS]# ls
    nfs-client.zip
    [root@master NFS]# unzip nfs-client.zip
    [root@master NFS]# cd nfs-client/
    [root@master nfs-client]# ls
    class.yaml  deployment.yaml  rbac.yaml
    [root@master nfs-client]#
    # class.yaml defines the StorageClass resource
    # the image in deployment.yaml is responsible for creating PVs automatically
    # rbac.yaml grants the provisioner in the Deployment permission to access the Kubernetes API

class.yaml walkthrough:

    [root@master nfs-client]# cat class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage    # name of the StorageClass resource
    provisioner: fuseim.pri/ifs    # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "true"      # "true" means the data in a PV is archived (backed up) automatically when the PV is deleted

deployment.yaml walkthrough:

    [root@master nfs-client]# cat deployment.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs      # must match the provisioner in class.yaml
                - name: NFS_SERVER
                  value: 192.168.1.63        # address of the NFS server
                - name: NFS_PATH
                  value: /ifs/kubernetes     # directory exported by the NFS server
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.1.63
                path: /ifs/kubernetes

rbac.yaml walkthrough:

    [root@master nfs-client]# cat rbac.yaml
    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: nfs-client-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

    [root@master nfs-client]# kubectl apply -f .
    storageclass.storage.k8s.io/managed-nfs-storage created
    serviceaccount/nfs-client-provisioner created
    deployment.apps/nfs-client-provisioner created
    serviceaccount/nfs-client-provisioner unchanged
    clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
    clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
    role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    [root@master nfs-client]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-j4vgl   1/1     Running   0          22s
    [root@master nfs-client]# kubectl get sc    # check the StorageClass name; the PVC has to reference it
    NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  53m
    [root@master nfs-client]#

    [root@master ~]# vim deployment3.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: web
      name: web
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: web
      strategy: {}
      template:
        metadata:
          labels:
            app: web
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
            volumeMounts:
            - name: data
              mountPath: /usr/share/nginx/html
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: my-pvc2
    ---
    # The PVC manifest is kept in the same file so that both objects can be applied together.
    # The difference between a static and a dynamic PVC is whether storageClassName is set.
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc2
    spec:
      storageClassName: "managed-nfs-storage"   # must match the NAME shown by kubectl get sc above
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 9Gi
    [root@master ~]#
    [root@master ~]# kubectl apply -f deployment3.yaml
    deployment.apps/web created
    persistentvolumeclaim/my-pvc2 created
    [root@master ~]#

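- Check the status of the Pod, PV, and PVC. The output below shows that the PV and the PVC were both created and are bound to each other: Kubernetes created the PVC first, a matching PV was then created for it, and the two were bound automatically. On the NFS server, the shared directory /ifs/kubernetes now contains an automatically created subdirectory, default-my-pvc2-pvc-e7bbe866-2a62-4269-9c54-a4a411e93e08. Both actions, creating the PV and creating the directory on the NFS share, are carried out by the provisioner that the StorageClass points to (the nfs-client-provisioner Pod).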

    [root@master ~]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-zfl8t   1/1     Running   0          95s
    web-748845d84d-tlrr6                      1/1     Running   0          95s
    [root@master ~]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS          REASON   AGE
    pvc-e7bbe866-2a62-4269-9c54-a4a411e93e08   9Gi        RWX            Delete           Bound    default/my-pvc2   managed-nfs-storage            93s
    [root@master ~]# kubectl get pvc
    NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    my-pvc2   Bound    pvc-e7bbe866-2a62-4269-9c54-a4a411e93e08   9Gi        RWX            managed-nfs-storage   2m10s
    [root@master ~]#
    [root@node2 kubernetes]# pwd
    /ifs/kubernetes
    [root@node2 kubernetes]# ls
    default-my-pvc2-pvc-e7bbe866-2a62-4269-9c54-a4a411e93e08
    [root@node2 kubernetes]#

- Create some data inside the container and check whether it is persisted to that directory.

    [root@master ~]# kubectl exec -it web-748845d84d-tlrr6 -- bash
    root@web-748845d84d-tlrr6:/# touch /usr/share/nginx/html/abc.txt
    root@web-748845d84d-tlrr6:/# ls /usr/share/nginx/html/
    abc.txt
    root@web-748845d84d-tlrr6:/#
    [root@node2 kubernetes]# ls default-my-pvc2-pvc-e7bbe866-2a62-4269-9c54-a4a411e93e08/
    abc.txt
    [root@node2 kubernetes]#

- If the Deployment is scaled up to 3 replicas, all three Pods share the same data.

    [root@master ~]# kubectl scale deploy web --replicas=3
    deployment.apps/web scaled
    [root@master ~]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-zfl8t   1/1     Running   0          40m
    web-748845d84d-2t48t                      1/1     Running   0          114s
    web-748845d84d-6twgl                      1/1     Running   0          5m30s
    web-748845d84d-t5tbm                      1/1     Running   0          114s
    [root@master ~]# kubectl exec -it web-748845d84d-t5tbm -- bash
    root@web-748845d84d-t5tbm:/# ls /usr/share/nginx/html/
    abc.txt
    root@web-748845d84d-t5tbm:/#

- After the Deployment and the PVC are deleted, the corresponding Pods, PV, and PVC are all removed, but the data is archived in a separate directory.

    [root@master ~]# kubectl delete -f PV-PVC/dynamic-pvc/deployment3-pvc-sc.yaml
    deployment.apps "web" deleted
    persistentvolumeclaim "my-pvc2" deleted
    [root@master ~]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-zfl8t   1/1     Running   0          23m
    [root@master ~]# kubectl get pvc
    No resources found in default namespace.
    [root@master ~]# kubectl get pv
    No resources found in default namespace.
    [root@master ~]#
    [root@node2 kubernetes]# ls
    archived-default-my-pvc2-pvc-e7bbe866-2a62-4269-9c54-a4a411e93e08
    [root@node2 kubernetes]# ls archived-default-my-pvc2-pvc-e7bbe866-2a62-4269-9c54-a4a411e93e08/
    abc.txt
    [root@node2 kubernetes]#
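The archived- directory appears because class.yaml sets archiveOnDelete to "true". As a hedged sketch of the alternative, a second StorageClass could turn archiving off. The class name below is hypothetical, and the assumption that "false" makes the provisioner remove the directory instead of renaming it should be checked against the provisioner version you deploy.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage-noarchive   # hypothetical name
provisioner: fuseim.pri/ifs             # same provisioner as class.yaml
parameters:
  archiveOnDelete: "false"              # assumed: data is removed, not archived, when the PV is deleted
```

A PVC would select this behaviour by putting the new class name in its storageClassName field, so archiving and non-archiving claims can coexist on the same NFS share.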