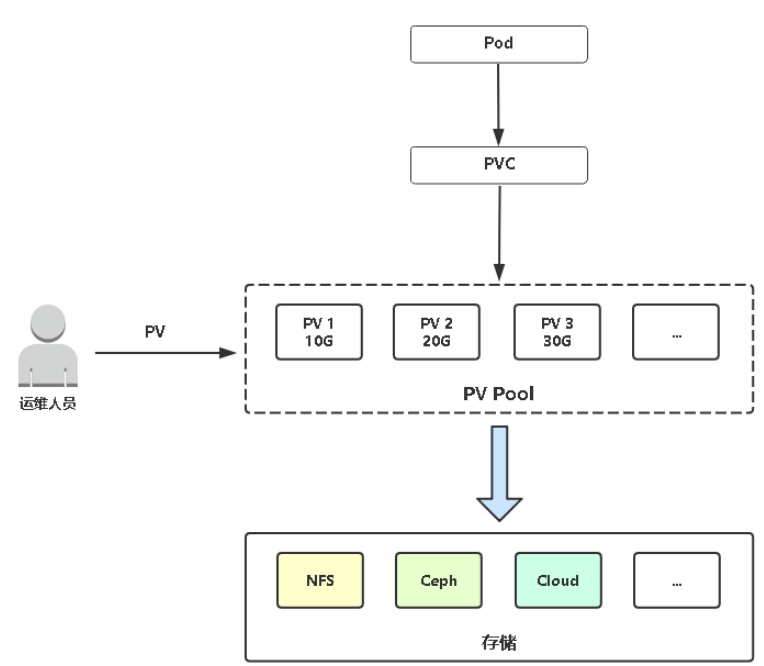

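PersistentVolume (PV)

Abstracts how storage is created and consumed, so that storage can be managed as a cluster resource.

PersistentVolumeClaim (PVC)

Lets users request storage without having to care about the implementation details of the underlying volume.

Implementing static PVs:

Storage plugins that Kubernetes supports for persistent volumes: https://kubernetes.io/docs/concepts/storage/persistent-volumes/

First, configure the PVs: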

    # Create pv01, pv02 and pv03 under /ifs/kubernetes/
    [root@node2 ~]# cd /ifs/kubernetes/
    [root@node2 kubernetes]# mkdir pv01
    [root@node2 kubernetes]# mkdir pv02
    [root@node2 kubernetes]# mkdir pv03

    # Create three PVs from pv.yaml
    # Access modes:
    #   ReadWriteMany (RWX): read-write by many nodes; use case: shared data, file systems
    #   ReadWriteOnce (RWO): read-write by a single node; use case: independent data, block devices
    #   ReadOnlyMany  (ROX): read-only by many nodes; use case: shared data, file systems
    [root@master ~]# cat pv.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv01                      # name of the PV
    spec:
      capacity:                       # capacity of 5Gi
        storage: 5Gi
      accessModes:                    # access mode is ReadWriteMany
        - ReadWriteMany
      nfs:                            # the volume type is NFS
        path: /ifs/kubernetes/pv01    # exported path on the NFS server
        server: 192.168.1.63          # address of the NFS server
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv02
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteMany
      nfs:
        path: /ifs/kubernetes/pv02
        server: 192.168.1.63
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv03
    spec:
      capacity:
        storage: 20Gi
      accessModes:
        - ReadWriteMany
      nfs:
        path: /ifs/kubernetes/pv03
        server: 192.168.1.63

    [root@master ~]# kubectl apply -f pv.yaml
    persistentvolume/pv01 created
    persistentvolume/pv02 created
    persistentvolume/pv03 created
    [root@master ~]# kubectl get pv
    NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    pv01   5Gi        RWX            Retain           Available                                   11s
    pv02   10Gi       RWX            Retain           Available                                   11s
    pv03   20Gi       RWX            Retain           Available                                   11s
    [root@master ~]#

Using a static PVC from a Deployment

    # Start a Pod through a Deployment; the Pod's data is persisted through a PVC.
    # The Deployment and the PVC are linked by the claim name my-pvc.
    # The two manifests are usually kept in the same file.
    [root@master ~]# cat deployment2.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: web
      name: web
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: web
      strategy: {}
      template:
        metadata:
          labels:
            app: web
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
            volumeMounts:
            - name: data
              mountPath: /usr/share/nginx/html
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: my-pvc
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 8Gi
    [root@master ~]#

    [root@master ~]# kubectl apply -f deployment2.yaml
    deployment.apps/web created
    persistentvolumeclaim/my-pvc created
    [root@master ~]# kubectl get pod
    NAME                   READY   STATUS    RESTARTS   AGE
    web-7d74df4646-fr88j   1/1     Running   0          15s
    [root@master ~]# kubectl get pvc
    NAME     STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    my-pvc   Bound    pv02     10Gi       RWX                           27s
    [root@master ~]#

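The relationship between PVCs and PVs

PVs and PVCs bind one-to-one. The PVC named my-pvc ends up bound to pv02, and a PVC can bind to only one PV.

Which PV a PVC binds to is decided by matching criteria. The criteria are:

1 Capacity

2 Access modes

3 Labels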
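The three criteria above can be sketched as a small matching function. This is only an illustrative model, not the Kubernetes controller code: the `PV` and `PVC` classes and `match_pv` are hypothetical names, and the "smallest volume that fits" rule is a simplification that ignores other factors such as `storageClassName`.

```python
from dataclasses import dataclass, field


@dataclass
class PV:
    name: str
    capacity_gi: int
    access_modes: frozenset
    labels: dict = field(default_factory=dict)
    status: str = "Available"


@dataclass
class PVC:
    name: str
    request_gi: int
    access_modes: frozenset
    selector: dict = field(default_factory=dict)


def match_pv(claim, volumes):
    """Return the smallest Available PV that satisfies the claim, or None."""
    candidates = [
        pv for pv in volumes
        if pv.status == "Available"
        and pv.capacity_gi >= claim.request_gi           # 1: capacity
        and claim.access_modes <= pv.access_modes        # 2: access modes
        and all(pv.labels.get(k) == v                    # 3: labels
                for k, v in claim.selector.items())
    ]
    return min(candidates, key=lambda pv: pv.capacity_gi, default=None)


# The three PVs and the claim from this article.
rwx = frozenset({"RWX"})
pvs = [PV("pv01", 5, rwx), PV("pv02", 10, rwx), PV("pv03", 20, rwx)]
claim = PVC("my-pvc", 8, rwx)

chosen = match_pv(claim, pvs)
print(chosen.name if chosen else None)  # pv02
```

Under this model the 8Gi claim skips pv01 (too small) and prefers pv02 over pv03 because it is the smaller of the two volumes that fit, which agrees with the `kubectl get pvc` output shown earlier.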

    [root@master ~]# kubectl get pv
    NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM            STORAGECLASS   REASON   AGE
    pv01   5Gi        RWX            Retain           Available                                            67s
    pv02   10Gi       RWX            Retain           Bound       default/my-pvc                           67s
    pv03   20Gi       RWX            Retain           Available                                            67s

    # After a PVC is deleted, the data in its PV is not deleted.
    # For pv02 in the output below: once my-pvc is deleted, the data in pv02
    # still exists and can be removed or backed up.
    # pv02 itself cannot be claimed again, because its status does not return
    # to Available on its own.
    # If the data in pv02 is definitely no longer needed, delete pv02 manually.
    # What happens to the backing storage after the claim is deleted is
    # decided by the RECLAIM POLICY, which can be:
    #   Delete:  delete the PV and its data (not recommended)
    #   Recycle: scrub the data but keep the PV (deprecated)
    #   Retain:  keep the PV and its data (recommended)

    [root@master ~]# kubectl delete -f deployment2.yaml
    deployment.apps "web" deleted
    persistentvolumeclaim "my-pvc" deleted
    [root@master ~]# kubectl get pv,pvc
    NAME                    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM            STORAGECLASS   REASON   AGE
    persistentvolume/pv01   5Gi        RWX            Retain           Available                                            86m
    persistentvolume/pv02   10Gi       RWX            Retain           Released    default/my-pvc                           86m
    persistentvolume/pv03   20Gi       RWX            Retain           Available                                            86m
    [root@master ~]#
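The three reclaim policies can be summarised as a lookup of what is left once the bound claim is deleted. The sketch below is a simplified model for illustration; `on_claim_deleted` and its result keys are hypothetical names, and what "deleting the data" really means depends on the storage plugin (some provisioners archive instead).

```python
def on_claim_deleted(policy):
    """Describe what remains of a PV after its bound PVC is deleted."""
    outcomes = {
        # PV object stays, data stays, PV is not claimable until an admin intervenes.
        "Retain": {"pv_kept": True, "pv_phase": "Released", "data_kept": True},
        # PV object is removed and the backend is asked to remove the data.
        "Delete": {"pv_kept": False, "pv_phase": None, "data_kept": False},
        # Deprecated: data is scrubbed and the PV becomes claimable again.
        "Recycle": {"pv_kept": True, "pv_phase": "Available", "data_kept": False},
    }
    return outcomes[policy]


# pv02 in this article uses Retain, so it ends up Released with its data intact.
print(on_claim_deleted("Retain")["pv_phase"])  # Released
```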

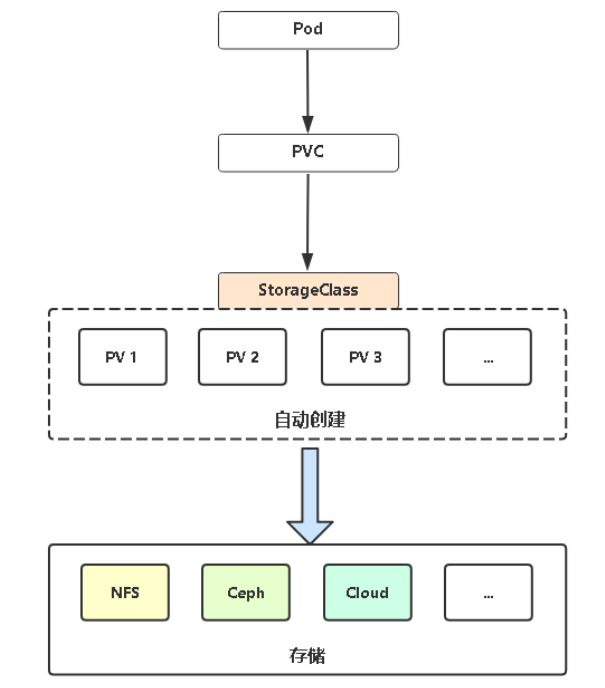

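Implementing dynamic PVCs:

Drawbacks of static PVCs:

PVs have to be created manually in advance, and the PV a PVC binds to may be larger than what the PVC requested (above, my-pvc requested 8Gi but was bound to the 10Gi pv02).

Dynamic PVCs solve this problem: when a PVC needs a PV, a PV that matches the request is created automatically.

A StorageClass is what creates PVs automatically. Not every storage type supports automatic creation through a StorageClass.

For the list of storage types that StorageClass supports, see:

https://kubernetes.io/docs/concepts/storage/storage-classes/

If a storage type is not supported by default but you still need dynamic PVCs, the available plugins are listed here:

https://github.com/kubernetes-retired/external-storage/

The following example configures dynamic PVCs backed by NFS.

StorageClass does not support NFS-backed PVCs out of the box, so the plugin has to be installed manually.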

    [root@master NFS]# rz -E
    rz waiting to receive.
    [root@master NFS]# ls
    nfs-client.zip
    [root@master NFS]# unzip nfs-client.zip
    [root@master NFS]# cd nfs-client/
    [root@master nfs-client]# ls
    class.yaml  deployment.yaml  rbac.yaml

    # Define the StorageClass resource
    [root@master nfs-client]# cat class.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage    # name of the StorageClass resource
    provisioner: fuseim.pri/ifs    # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "true"

    # Run the provisioner application as a container
    [root@master nfs-client]# cat deployment.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs      # must match the provisioner in class.yaml
                - name: NFS_SERVER
                  value: 192.168.1.63        # address of the NFS server
                - name: NFS_PATH
                  value: /ifs/kubernetes
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.1.63
                path: /ifs/kubernetes

    # rbac.yaml authorizes the application in the nfs-client-provisioner
    # container to access the Kubernetes API, so that it can watch for newly
    # created PVCs and create PVs for them automatically.
    [root@master nfs-client]# cat rbac.yaml
    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: nfs-client-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

    [root@master nfs-client]# kubectl apply -f .
    storageclass.storage.k8s.io/managed-nfs-storage created
    serviceaccount/nfs-client-provisioner created
    deployment.apps/nfs-client-provisioner created
    serviceaccount/nfs-client-provisioner unchanged
    clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
    clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
    role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    [root@master nfs-client]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-j4vgl   1/1     Running   0          22s
    [root@master nfs-client]#

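As shown in the figure above, if the storage type a PVC requests is one that Kubernetes supports by default, managed-nfs-storage is not needed.

The managed-nfs-storage class is served by the application running in the container defined in deployment.yaml.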

    [root@master nfs-client]# kubectl get sc
    NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  53m
    [root@master nfs-client]#

Using a dynamic PVC through the Deployment in deployment3.yaml

    [root@master ~]# vim deployment3.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: web
      name: web
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: web
      strategy: {}
      template:
        metadata:
          labels:
            app: web
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
            volumeMounts:
            - name: data
              mountPath: /usr/share/nginx/html
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: my-pvc2
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc2
    spec:
      storageClassName: "managed-nfs-storage"   # must match the name shown by kubectl get sc above
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 9Gi

    # Create the Pod and the dynamic PVC
    [root@master ~]# kubectl apply -f deployment3.yaml
    deployment.apps/web created
    persistentvolumeclaim/my-pvc2 created
    [root@master ~]#
    [root@master ~]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-j4vgl   1/1     Running   1          17h
    web-748845d84d-fcclv                      1/1     Running   0          54s
    [root@master ~]#
    [root@master ~]# kubectl get pv,pvc    # a 9Gi PV is created automatically
    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM             STORAGECLASS          REASON   AGE
    persistentvolume/pv01                                       5Gi        RWX            Retain           Available                                                    22h
    persistentvolume/pv02                                       10Gi       RWX            Retain           Released    default/my-pvc                                   22h
    persistentvolume/pv03                                       20Gi       RWX            Retain           Available                                                    22h
    persistentvolume/pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9   9Gi        RWX            Delete           Bound       default/my-pvc2   managed-nfs-storage            28s

    NAME                            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    persistentvolumeclaim/my-pvc2   Bound    pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9   9Gi        RWX            managed-nfs-storage   28s
    [root@master ~]#

    # The directory default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9
    # is created automatically under the exported NFS directory
    [root@node2 ~]# cd /ifs/kubernetes/
    [root@node2 kubernetes]# ls
    a.txt  default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9  index.html  pv01  pv02  pv03
    [root@node2 kubernetes]#
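The name of the automatically created directory appears to follow the pattern namespace, PVC name, PV name, joined by hyphens. The short sketch below reproduces the name seen in the listing above; `provisioned_dir` is a hypothetical helper written for illustration, not a function of the provisioner itself.

```python
def provisioned_dir(namespace, pvc_name, pv_name):
    """Build the per-claim directory name: <namespace>-<pvc name>-<pv name>."""
    return "-".join([namespace, pvc_name, pv_name])


# Values taken from the kubectl get pv,pvc output in this article.
name = provisioned_dir(
    "default",
    "my-pvc2",
    "pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9",
)
print(name)  # default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9
```

Knowing the pattern makes it easy to locate the data of a given claim on the NFS server from the CLAIM and NAME columns of `kubectl get pv`.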

Verifying the shared NFS directory

    # Create a file inside the container to check that the data is persisted to this directory
    [root@master ~]# kubectl exec -it web-748845d84d-fcclv -- bash
    root@web-748845d84d-fcclv:/# cd /usr/share/nginx/html/
    root@web-748845d84d-fcclv:/usr/share/nginx/html# touch abc.txt
    root@web-748845d84d-fcclv:/usr/share/nginx/html# ls
    abc.txt
    root@web-748845d84d-fcclv:/usr/share/nginx/html#
    [root@node2 kubernetes]# cd default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9/
    [root@node2 default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9]# ls
    abc.txt
    [root@node2 default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9]#

    # Scale the Deployment to 3 replicas and check from another replica that the data is shared
    [root@master ~]# kubectl scale deploy web --replicas=3
    deployment.apps/web scaled
    [root@master ~]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-j4vgl   1/1     Running   1          17h
    web-748845d84d-87dh8                      1/1     Running   0          9s
    web-748845d84d-fcclv                      1/1     Running   0          32m
    web-748845d84d-pmbdf                      1/1     Running   0          9s

    # Look inside one of the other Pods
    [root@master ~]# kubectl exec -it web-748845d84d-pmbdf -- bash
    root@web-748845d84d-pmbdf:/# cd /usr/share/nginx/html/
    root@web-748845d84d-pmbdf:/usr/share/nginx/html# ls
    abc.txt
    root@web-748845d84d-pmbdf:/usr/share/nginx/html#

Verifying the reclaim policy of a dynamically provisioned PV

    # Delete the Pod through its controller and check the externally persisted data.
    # Once the Deployment and PVC are deleted, the matching PV is removed as well.
    [root@master ~]# kubectl delete -f deployment3.yaml
    deployment.apps "web" deleted
    persistentvolumeclaim "my-pvc2" deleted
    [root@master ~]#
    [root@master ~]# kubectl get pod
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-7676dc9cfc-j4vgl   1/1     Running   1          18h
    [root@master ~]#
    [root@master ~]# kubectl get pv,pvc
    NAME                    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM            STORAGECLASS   REASON   AGE
    persistentvolume/pv01   5Gi        RWX            Retain           Available                                            23h
    persistentvolume/pv02   10Gi       RWX            Retain           Released    default/my-pvc                           23h
    persistentvolume/pv03   20Gi       RWX            Retain           Available                                            23h
    [root@master ~]#

    # The externally persisted directory default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9/
    # is automatically archived as archived-default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9
    [root@node2 kubernetes]# ls
    archived-default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9  a.txt  index.html  pv01  pv02  pv03
    [root@node2 kubernetes]#
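The archiving seen above corresponds to `archiveOnDelete: "true"` in class.yaml. The sketch below imitates that behaviour on a temporary local directory so it can be run anywhere. It is a model under stated assumptions, not the provisioner's code: `reclaim_dir` is a hypothetical helper, and the assumption that a false value removes the directory outright is based on the provisioner's documented behaviour rather than on anything shown in this article.

```python
import os
import shutil
import tempfile


def reclaim_dir(nfs_root, dir_name, archive_on_delete):
    """Archive (rename with an 'archived-' prefix) or delete a claim's directory."""
    src = os.path.join(nfs_root, dir_name)
    if archive_on_delete:
        dst = os.path.join(nfs_root, "archived-" + dir_name)
        os.rename(src, dst)      # data is kept under the new name
        return dst
    shutil.rmtree(src)           # assumed behaviour when archiveOnDelete is "false"
    return None


with tempfile.TemporaryDirectory() as root:
    # Stand-in for /ifs/kubernetes with the claim's directory and one file in it.
    name = "default-my-pvc2-pvc-cfa1317b-67eb-4e36-8c4d-75e7c075e2a9"
    os.mkdir(os.path.join(root, name))
    open(os.path.join(root, name, "abc.txt"), "w").close()

    reclaim_dir(root, name, archive_on_delete=True)

    remaining = sorted(os.listdir(root))
    archived_files = sorted(os.listdir(os.path.join(root, "archived-" + name)))

print(remaining)
print(archived_files)  # ['abc.txt']
```

With archiving enabled, the file written from the Pod (abc.txt) survives under the renamed directory, so the data can still be backed up or inspected after the PV is gone.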