最近在做基于图像的室内定位方面的研究,于是使用到了百度最新的室内数据库Image-based Localization (IBL) 。由于该数据库给出的数据是每幅图像和其对应相机的内外参数和光心投影方向,所以我需要先求出其6DOF预估姿态。再利用PoseNet网络对其实现基于图像的定位估计。好了,问题就很明确了:

(1)根据图像和激光雷达参数的3D点云实现2D-3D的匹配,找到每张图像上的至少四个特征点。即找到至少4个二维像素和3D点云点的对应点。

(2)根据这四组对应点和相机内外参数估计相机6DOF,即相机姿态。

今天先实现第二个问题。很幸运网上有这样几篇博客已经将相机位姿整个过程讲的比较清楚了http://www.cnblogs.com/singlex/p/pose_estimation_1.html。

但这篇文章是由c++写的,我在python上简单的对其进行了验证。

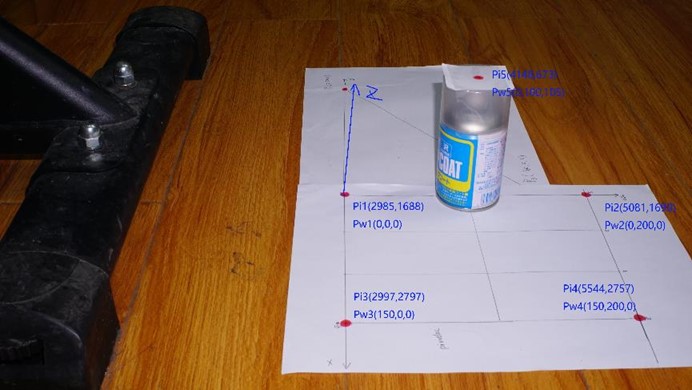

这是这张图给出的数据。

import cv2 import numpy as np import math object_3d_points = np.array(([0, 0, 0], [0, 200, 0], [150, 0, 0], [150, 200, 0]), dtype=np.double) object_2d_point = np.array(([2985, 1688], [5081, 1690], [2997, 2797], [5544, 2757]), dtype=np.double) camera_matrix = np.array(([6800.7, 0, 3065.8], [0, 6798.1, 1667.6], [0, 0, 1.0]), dtype=np.double) dist_coefs = np.array([-0.189314, 0.444657, -0.00116176, 0.00164877, -2.57547], dtype=np.double) # 求解相机位姿 found, rvec, tvec = cv2.solvePnP(object_3d_points, object_2d_point, camera_matrix, dist_coefs) rotM = cv2.Rodrigues(rvec)[0] camera_postion = -np.matrix(rotM).T * np.matrix(tvec) print(camera_postion.T) # 验证根据博客http://www.cnblogs.com/singlex/p/pose_estimation_1.html提供方法求解相机位姿 # 计算相机坐标系的三轴旋转欧拉角,旋转后可以转出世界坐标系。旋转顺序z,y,x thetaZ = math.atan2(rotM[1, 0], rotM[0, 0])*180.0/math.pi thetaY = math.atan2(-1.0*rotM[2, 0], math.sqrt(rotM[2, 1]**2 + rotM[2, 2]**2))*180.0/math.pi thetaX = math.atan2(rotM[2, 1], rotM[2, 2])*180.0/math.pi # 相机坐标系下值 x = tvec[0] y = tvec[1] z = tvec[2] # 进行三次旋转 def RotateByZ(Cx, Cy, thetaZ): rz = thetaZ*math.pi/180.0 outX = math.cos(rz)*Cx - math.sin(rz)*Cy outY = math.sin(rz)*Cx + math.cos(rz)*Cy return outX, outY def RotateByY(Cx, Cz, thetaY): ry = thetaY*math.pi/180.0 outZ = math.cos(ry)*Cz - math.sin(ry)*Cx outX = math.sin(ry)*Cz + math.cos(ry)*Cx return outX, outZ def RotateByX(Cy, Cz, thetaX): rx = thetaX*math.pi/180.0 outY = math.cos(rx)*Cy - math.sin(rx)*Cz outZ = math.sin(rx)*Cy + math.cos(rx)*Cz return outY, outZ (x, y) = RotateByZ(x, y, -1.0*thetaZ) (x, z) = RotateByY(x, z, -1.0*thetaY) (y, z) = RotateByX(y, z, -1.0*thetaX) Cx = x*-1 Cy = y*-1 Cz = z*-1 # 输出相机位置 print(Cx, Cy, Cz) # 输出相机旋转角 print(thetaX, thetaY, thetaZ) # 对第五个点进行验证 Out_matrix = np.concatenate((rotM, tvec), axis=1) pixel = np.dot(camera_matrix, Out_matrix) pixel1 = np.dot(pixel, np.array([0, 100, 105, 1], dtype=np.double)) pixel2 = pixel1/pixel1[2] print(pixel2)

输出结果

[[ 528.66321122 -2.88452091 358.60341802]]

[ 528.66321122] [-2.88452091] [ 358.60341802]

178.3558701005234 56.02221316618043 88.63218026484252

[ 4.15960851e+03 6.73694373e+02 1.00000000e+00]

验证结果证明确实python代码6行就求解出了相机6DOF位姿估计,厉害!通过验证第5个点[0, 100, 105]对应像素点[4159.6, 673.69]和真实像素位置[4146, 673]相差不大。