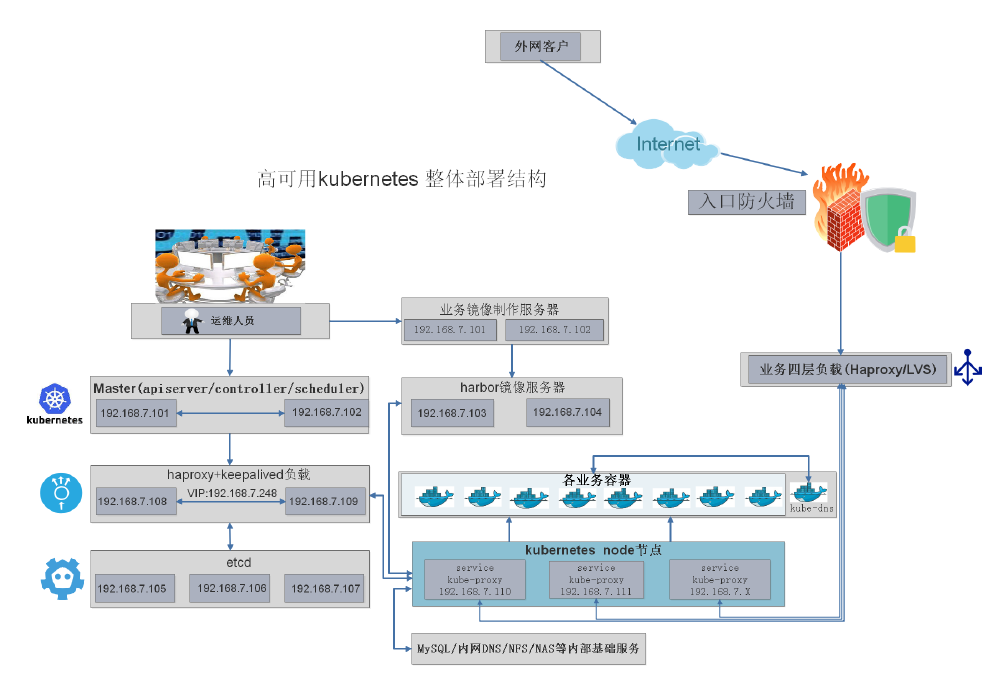

I. Basic cluster environment setup

Architecture diagram:

Server list:

1. Install a minimal Ubuntu system

1. Change the kernel boot parameters to rename the network interface from ens33 to eth0

root@ubuntu:~# vim /etc/default/grub
GRUB_CMDLINE_LINUX="net.ifnames=0 biosdevname=0"
root@ubuntu:~# update-grub   # regenerate the GRUB config; the new interface name takes effect after a reboot

2. Set a static IP address

root@node4:~# vim /etc/netplan/50-cloud-init.yaml

network:
  version: 2
  renderer: networkd
  ethernets:
    eth0:
      dhcp4: no
      addresses: [192.168.7.110/24]
      gateway4: 192.168.7.2
      nameservers:
        addresses: [192.168.7.2]

3. Apply the IP configuration and reboot to test it:

root@node4:~# netplan apply

4. Point the apt sources in /etc/apt/sources.list at the Aliyun mirror for Ubuntu 18.04 (bionic); reference: https://developer.aliyun.com/mirror/ubuntu?spm=a2c6h.13651102.0.0.53322f701347Pq

deb http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ bionic main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ bionic-security main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ bionic-updates main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ bionic-proposed main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ bionic-backports main restricted universe multiverse

5. Install common tools

# apt-get update   # refresh the package index
# apt-get purge ufw lxd lxd-client lxcfs lxc-common   # remove packages that are not needed
# apt-get install iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc openssh-server iotop unzip zip

6. Reboot the system.

# reboot

7. To allow root to log in over SSH, switch to root with sudo and edit /etc/ssh/sshd_config:

$ sudo su
root@node4:~# vim /etc/ssh/sshd_config
# allow root to log in
PermitRootLogin yes
# skip reverse DNS lookups so SSH clients such as Xshell connect without delay
UseDNS no
root@node4:~# systemctl restart sshd   # after restarting sshd, root can log in
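Editing sshd_config by hand works, but the same two settings are needed on every host. A minimal sketch of a scripted edit, assuming bash and GNU sed; `set_config_option` is a hypothetical helper, not part of OpenSSH:

```shell
#!/usr/bin/env bash
# set_config_option FILE KEY VALUE  (hypothetical helper, not part of OpenSSH)
# Replaces an existing "KEY ..." line, commented out or not, with "KEY VALUE";
# appends "KEY VALUE" if the key does not appear at all. Uses GNU sed -i.
set_config_option() {
  local file=$1 key=$2 value=$3
  if grep -Eq "^[#[:space:]]*${key}[[:space:]]" "$file"; then
    sed -i -E "s|^[#[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, run `set_config_option /etc/ssh/sshd_config PermitRootLogin yes` and `set_config_option /etc/ssh/sshd_config UseDNS no`, then `sshd -t && systemctl restart sshd`; `sshd -t` checks the configuration syntax first, so a typo does not lock you out.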

II. Install and configure HAProxy and Keepalived

1. Configure keepalived

1. Install HAProxy and Keepalived

root@node5:~# apt-get install haproxy keepalived -y

2. Find the sample keepalived configuration and copy it into /etc/keepalived to edit.

root@node5:~# find / -name "keepalived.*"
/usr/share/doc/keepalived/samples/keepalived.conf.sample
root@node5:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.sample /etc/keepalived/keepalived.conf   # copy the sample into /etc/keepalived/ as keepalived.conf

3. Edit keepalived.conf

root@node5:/etc/keepalived# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
   notification_email {
     acassen
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    interface eth0
    virtual_router_id 50
    nopreempt
    priority 100
    advert_int 1
    virtual_ipaddress {
        # VIP 192.168.7.248, added as the alias eth0:0
        192.168.7.248 dev eth0 label eth0:0
    }
}

4. Restart keepalived and enable it at boot

root@node5:/etc/keepalived# systemctl restart keepalived
root@node5:/etc/keepalived# systemctl enable keepalived

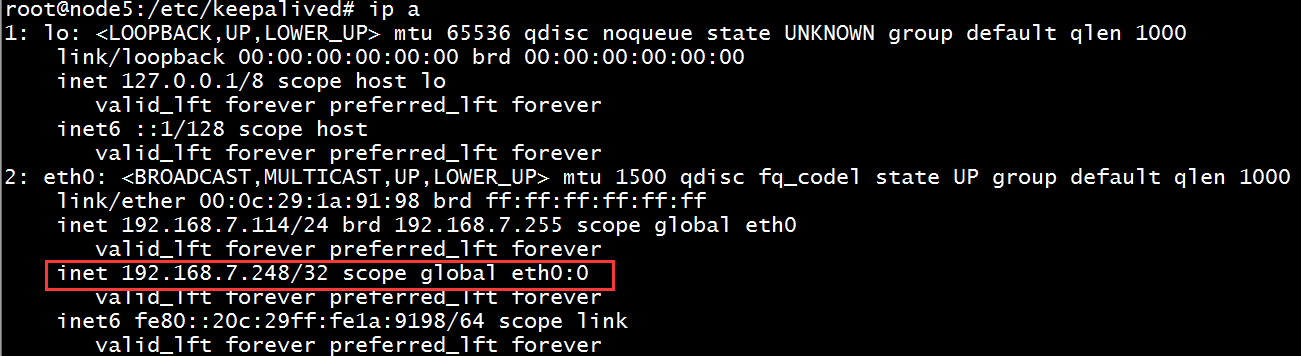

5. Confirm that the VIP has been created
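To tell which keepalived host currently holds the VIP (for example after stopping keepalived on one of them), the address list can be checked from a script. A sketch assuming bash and awk; `vip_present` is a hypothetical helper that parses `ip -o -4 addr show` output:

```shell
#!/usr/bin/env bash
# vip_present VIP  (hypothetical helper)
# Reads the output of `ip -o -4 addr show` on stdin and succeeds (exit 0)
# if VIP is one of the IPv4 addresses listed, e.g. on the alias eth0:0.
vip_present() {
  awk -v vip="$1" '
    $3 == "inet" {
      split($4, addr, "/")          # "192.168.7.248/32" -> "192.168.7.248"
      if (addr[1] == vip) found = 1
    }
    END { exit !found }'
}
```

Usage: `ip -o -4 addr show | vip_present 192.168.7.248 && echo "this host holds the VIP"`.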

2. Configure HAProxy

1. Edit the HAProxy configuration so the VIP's port 6443 is forwarded to port 6443 on the master

# vim /etc/haproxy/haproxy.cfg
listen k8s-api-server-6443
    bind 192.168.7.248:6443
    mode tcp
    server 192.168.7.110 192.168.7.110:6443 check inter 2000 fall 3 rise 5

2. Restart HAProxy and enable it at boot

root@node5:~# systemctl restart haproxy
root@node5:~# systemctl enable haproxy

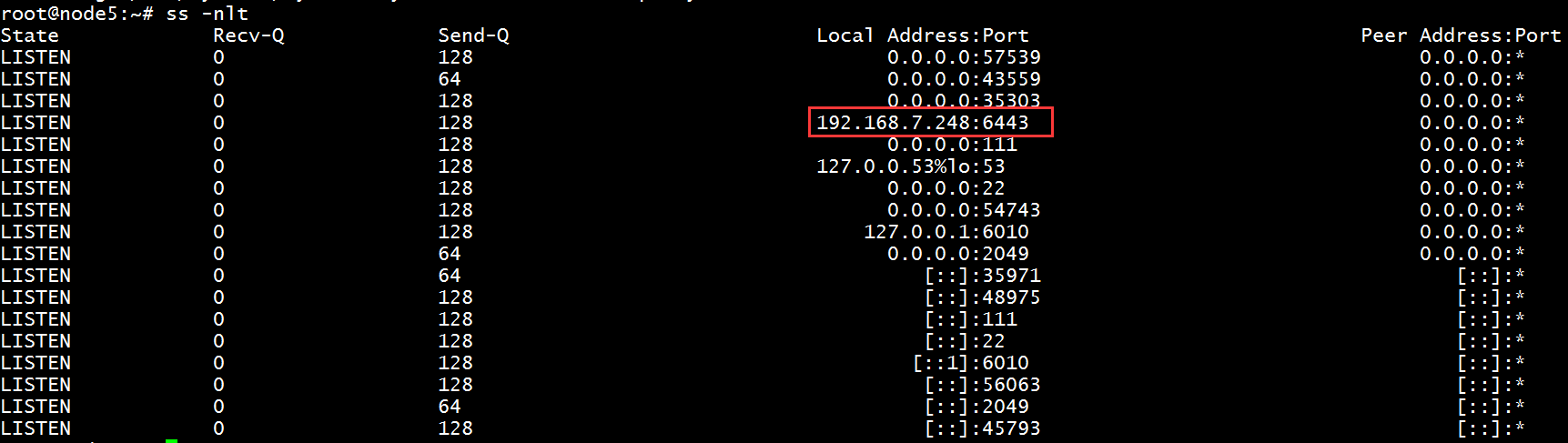

3. Check the listening ports
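The `listen` section grows by one `server` line per master (the second master is added at the end of this article). A sketch that prints the section from a list of master IPs, assuming bash; `render_haproxy_listen` is hypothetical and reuses the health-check values above (`inter 2000 fall 3 rise 5`):

```shell
#!/usr/bin/env bash
# render_haproxy_listen VIP PORT MASTER_IP...  (hypothetical helper)
# Prints a TCP "listen" section that forwards VIP:PORT to PORT on every master,
# with the same health-check settings as the hand-written config.
render_haproxy_listen() {
  local vip=$1 port=$2 ip
  shift 2
  printf 'listen k8s-api-server-%s\n' "$port"
  printf '    bind %s:%s\n' "$vip" "$port"
  printf '    mode tcp\n'
  for ip in "$@"; do
    printf '    server %s %s:%s check inter 2000 fall 3 rise 5\n' "$ip" "$ip" "$port"
  done
}
```

After pasting the output of `render_haproxy_listen 192.168.7.248 6443 192.168.7.110` into /etc/haproxy/haproxy.cfg, `haproxy -c -f /etc/haproxy/haproxy.cfg` validates the file before `systemctl restart haproxy`.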

III. Configure the Docker apt repository and install docker-ce (required on the master, etcd, Harbor and worker node hosts)

1. Aliyun mirror instructions: https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.53322f70mkWLiO

# step 1: install the required system tools
sudo apt-get update
sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common
# step 2: install the GPG key
curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
# Step 3: add the repository
sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
# Step 4: update the index and install Docker-CE
sudo apt-get -y update
sudo apt-get -y install docker-ce

# To install a specific version of Docker-CE:
# Step 1: list the available Docker-CE versions:
# apt-cache madison docker-ce
#   docker-ce | 17.03.1~ce-0~ubuntu-xenial | https://mirrors.aliyun.com/docker-ce/linux/ubuntu xenial/stable amd64 Packages
#   docker-ce | 17.03.0~ce-0~ubuntu-xenial | https://mirrors.aliyun.com/docker-ce/linux/ubuntu xenial/stable amd64 Packages
# Step 2: install the chosen version (VERSION is e.g. 17.03.1~ce-0~ubuntu-xenial from the list above):
# sudo apt-get -y install docker-ce=[VERSION]

2. Install docker-compose

root@node3:~# apt-get install docker-compose -y

3. Start Docker and enable it at boot.

# systemctl start docker
# systemctl enable docker

IV. Configure the Harbor registry

1. Download Harbor from the official site, upload it to /usr/local/src on the Linux host, extract it and configure it

root@node3:/usr/local/src# tar xvf harbor-offline-installer-v1.7.5.tgz
root@node3:/usr/local/src# cd harbor
root@node3:/usr/local/src/harbor# vim harbor.cfg
# local domain name used to access Harbor
hostname = harbor.struggle.net
# serve the web UI over HTTPS
ui_url_protocol = https
# admin login password
harbor_admin_password = 123456
# path to the certificate
ssl_cert = /usr/local/src/harbor/certs/harbor-ca.crt
# path to the private key
ssl_cert_key = /usr/local/src/harbor/certs/harbor-ca.key

Edit /etc/hosts so the domain name resolves to the Harbor server's IP address:

# vim /etc/hosts
192.168.7.112 harbor.struggle.net

Change the hostname to harbor.struggle.net:

# hostnamectl set-hostname harbor.struggle.net

2. Create the SSL certificate files

root@node3:~# touch .rnd   # create .rnd in root's home directory first; otherwise openssl cannot issue the certificate
root@node3:~# cd /usr/local/src/harbor/
root@node3:/usr/local/src/harbor# mkdir certs
root@node3:/usr/local/src/harbor# openssl genrsa -out /usr/local/src/harbor/certs/harbor-ca.key   # generate the private key
root@node3:/usr/local/src/harbor# openssl req -x509 -new -nodes -key /usr/local/src/harbor/certs/harbor-ca.key -subj "/CN=harbor.struggle.net" -days 7120 -out /usr/local/src/harbor/certs/harbor-ca.crt   # issue the self-signed certificate

3. Install Harbor

# cd /usr/local/src/harbor/
# ./install.sh

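4. After Harbor is installed, add harbor.struggle.net to the hosts file on your local Windows machine (C:\Windows\System32\drivers\etc\hosts) so the web UI can be opened by domain name.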
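The two openssl commands in step 2 can be wrapped so they always write the key and certificate to the paths that harbor.cfg expects. A sketch assuming bash and the openssl CLI; `make_self_signed_cert` is a hypothetical wrapper, and the 2048-bit key size is an assumption (the step above uses the openssl default). Like the original, it sets only the CN; Docker builds using Go 1.15 or later reject CN-only certificates by default, and with OpenSSL 1.1.1+ a subjectAltName can be added to the `openssl req` call with `-addext "subjectAltName=DNS:harbor.struggle.net"`.

```shell
#!/usr/bin/env bash
# make_self_signed_cert DIR CN [DAYS]  (hypothetical wrapper around the two openssl commands)
# Writes DIR/harbor-ca.key and DIR/harbor-ca.crt, a self-signed certificate for CN.
make_self_signed_cert() {
  local dir=$1 cn=$2 days=${3:-7120}
  mkdir -p "$dir" || return 1
  openssl genrsa -out "$dir/harbor-ca.key" 2048 2>/dev/null || return 1
  openssl req -x509 -new -nodes -key "$dir/harbor-ca.key" \
    -subj "/CN=${cn}" -days "$days" -out "$dir/harbor-ca.crt" || return 1
  # Print the certificate subject and expiry date as a quick sanity check
  openssl x509 -in "$dir/harbor-ca.crt" -noout -subject -enddate
}
```

Usage: `make_self_signed_cert /usr/local/src/harbor/certs harbor.struggle.net 7120`.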

V. Install docker-ce on the two master hosts

1. Configure the docker-ce apt repository and install docker-ce as described above; Aliyun mirror instructions: https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.53322f70mkWLiO

2. Start Docker

# systemctl start docker
# systemctl enable docker

3. Create a directory for Harbor's certificate (harbor-ca.crt) on the master; the certificate is copied into it in the next step

root@node1:~# mkdir /etc/docker/certs.d/harbor.struggle.net -p

4. On the Harbor server, copy the certificate into the directory created on the master

root@harbor:/usr/local/src/harbor/certs# scp harbor-ca.crt 192.168.7.110:/etc/docker/certs.d/harbor.struggle.net

5. Restart Docker on the master

root@node1:~# systemctl restart docker

6. Edit /etc/hosts on the master so the Harbor domain name resolves:

192.168.7.112 harbor.struggle.net

7. Log in to Harbor from the master; "Login Succeeded" means the login worked.

root@node1:/etc/docker/certs.d/harbor.struggle.net# docker login harbor.struggle.net
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

8. In Harbor, create a project named baseimages, then pull an image to test whether pushing works

Test pushing an image from the master to Harbor.

Pull a test image on the master:

root@node1:~# docker pull alpine

Tag the image to be pushed:

root@node1:~# docker tag alpine:latest harbor.struggle.net/baseimages/alpine:latest   # tag the image for the baseimages project

Push the tagged image:

root@node1:~# docker push harbor.struggle.net/baseimages/alpine:latest

The push succeeded.
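The pull, tag and push sequence above is repeated for every image mirrored into Harbor (the pause image in section VII follows the same pattern). A sketch that wraps it, assuming bash and that `docker login harbor.struggle.net` has already succeeded; `mirror_image`, `mirror_images` and the `DOCKER` override variable are hypothetical:

```shell
#!/usr/bin/env bash
# mirror_image SRC DEST  (hypothetical helper)
# Pulls SRC, tags it as DEST and pushes DEST, stopping at the first failure.
# Set DOCKER=echo to print the commands instead of running them.
mirror_image() {
  local src=$1 dest=$2 docker=${DOCKER:-docker}
  "$docker" pull "$src" &&
    "$docker" tag "$src" "$dest" &&
    "$docker" push "$dest"
}

# mirror_images: reads "SRC DEST" pairs from stdin; blank lines and # comments are skipped
mirror_images() {
  local src dest
  while read -r src dest; do
    [[ -z "$src" || "$src" == \#* ]] && continue
    mirror_image "$src" "$dest" || return 1
  done
}
```

For example, `printf '%s %s\n' alpine:latest harbor.struggle.net/baseimages/alpine:latest | mirror_images`; prefixing the call with `DOCKER=echo` prints the commands without executing them.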

Install dependencies on every node

Install Python 2.7 on all of the hosts above and create a symlink:

Reference: https://github.com/easzlab/kubeasz/blob/master/docs/setup/00-planning_and_overall_intro.md

# The commands in the referenced documentation are run as root by default
# apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y
# apt-get install python2.7 -y
# ln -s /usr/bin/python2.7 /usr/bin/python   # create the symlink

On CentOS 7, run the following instead:

# The commands in the referenced documentation are run as root by default
# yum update
# yum install python -y   # install Python

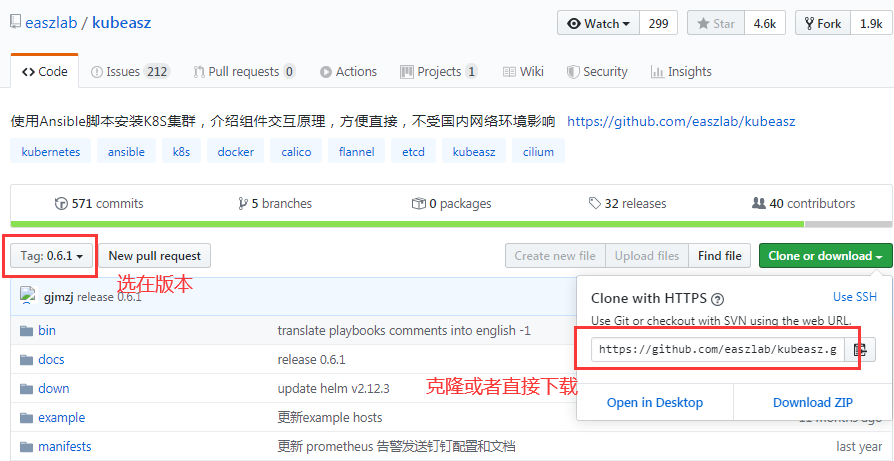

On the two master nodes, clone the kubeasz Ansible project (or download it and upload it to the Linux host)

Reference: https://github.com/easzlab/kubeasz/tree/0.6.0

root@k8s-master2:~# apt-get install ansible -y   # install ansible on both master nodes
root@k8s-master2:~# apt-get install git -y   # install git on both master nodes
root@k8s-master1:~# git clone -b 0.6.1 https://github.com/easzlab/kubeasz.git   # clone the specified release of the project

VI. Prepare the Ansible hosts file on the master

Move the default /etc/ansible/hosts file aside, move the project files into /etc/ansible, and copy the example inventory into place as /etc/ansible/hosts:

root@k8s-master1:/etc/ansible# mv /etc/ansible/hosts /opt/   # move Ansible's default hosts file out of the way
root@k8s-master1:/etc/ansible# mv ~/kubeasz/* /etc/ansible/   # move the project files (cloned into root's home directory above) into this directory
root@k8s-master1:/etc/ansible# cd /etc/ansible/
root@k8s-master1:/etc/ansible# cp example/hosts.m-masters.example ./hosts   # copy the multi-master example here as the hosts file
root@k8s-master1:/etc/ansible# vim hosts   # edit the hosts file

Edit the copied example hosts file:

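# Deploy node: usually the node that runs the Ansible playbooks
# NTP_ENABLED (=yes/no) controls whether chrony time sync is installed on the cluster
[deploy]
192.168.7.110 NTP_ENABLED=no   # IP of the master node

# Provide NODE_NAME for each etcd member; the etcd cluster must have an odd number of nodes (1, 3, 5, 7, ...)
[etcd]
192.168.7.113 NODE_NAME=etcd1   # only one etcd host for now, so list just one

[new-etcd] # reserved group, used later when adding etcd nodes
#192.168.1.x NODE_NAME=etcdx

[kube-master]
192.168.7.110   # IP of the master node

[new-master] # reserved group, used later when adding master nodes
#192.168.1.5

[kube-node]
192.168.7.115   # IP of the worker node

[new-node] # reserved group, used later when adding worker nodes
#192.168.1.xx

# NEW_INSTALL: yes installs a new Harbor, no uses an existing Harbor server
# If you do not use a domain name, set HARBOR_DOMAIN=""
[harbor]
#192.168.1.8 HARBOR_DOMAIN="harbor.yourdomain.com" NEW_INSTALL=no

# Load balancers, installs haproxy+keepalived (more than 2 nodes are supported; 2 are usually enough)
#[lb]
#192.168.1.1 LB_ROLE=backup
#192.168.1.2 LB_ROLE=master

# [Optional] external load balancer, e.g. for forwarding services exposed via NodePort in your own environment
[ex-lb]
#192.168.1.6 LB_ROLE=backup EX_VIP=192.168.1.250
#192.168.1.7 LB_ROLE=master EX_VIP=192.168.1.250

[all:vars]
# --------- main cluster parameters ---------
# Deployment mode: allinone, single-master, multi-master
DEPLOY_MODE=multi-master

# Cluster major version; supported: v1.8, v1.9, v1.10, v1.11, v1.12, v1.13
K8S_VER="v1.13"   # set the version

# MASTER_IP is the VIP of the LB nodes. The kubeasz example has the VIP listen on 8443 to keep it
# apart from the default apiserver port; here HAProxy runs on its own host and listens on 192.168.7.248:6443
# On a public cloud, use the internal address and listening port of the cloud load balancer
MASTER_IP="192.168.7.248"   # the VIP address
KUBE_APISERVER="https://{{ MASTER_IP }}:6443"   # port 6443

# Cluster network plugin; supported: calico, flannel, kube-router, cilium
CLUSTER_NETWORK="calico"   # use calico

# Service CIDR; must not overlap with existing internal networks
SERVICE_CIDR="10.20.0.0/16"   # Service network

# Pod CIDR (Cluster CIDR); must not overlap with existing internal networks
CLUSTER_CIDR="172.20.0.0/16"   # Pod network

# NodePort range
NODE_PORT_RANGE="20000-60000"   # service port range

# IP of the kubernetes Service (pre-allocated, usually the first IP in SERVICE_CIDR)
CLUSTER_KUBERNETES_SVC_IP="10.20.0.1"   # must fall within SERVICE_CIDR (10.20.0.0/16)

# Cluster DNS Service IP (pre-allocated from SERVICE_CIDR)
CLUSTER_DNS_SVC_IP="10.20.254.254"   # must also fall within SERVICE_CIDR

# Cluster DNS domain
CLUSTER_DNS_DOMAIN="linux36.local."   # DNS domain

# Username and password for cluster basic auth
BASIC_AUTH_USER="admin"
BASIC_AUTH_PASS="123456"

# --------- additional parameters ---------
# Default binary directory
bin_dir="/usr/bin"   # binary path

# Certificate directory
ca_dir="/etc/kubernetes/ssl"   # keep the default

# Deployment directory, i.e. the Ansible working directory; changing it is not recommended
base_dir="/etc/ansible"   # keep the default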
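Two rules stated in the comments above are easy to break silently: etcd needs an odd number of members, and CLUSTER_KUBERNETES_SVC_IP and CLUSTER_DNS_SVC_IP must fall inside SERVICE_CIDR, which must not overlap CLUSTER_CIDR. A sketch of pre-flight checks, assuming bash and awk; all function names are hypothetical and are not part of kubeasz:

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight checks for the inventory (not part of kubeasz).

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_bounds NET/LEN -> "FIRST LAST" as integers
cidr_bounds() {
  local base len=${1#*/} mask
  base=$(ip_to_int "${1%/*}")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# ip_in_cidr IP NET/LEN: succeeds if IP lies inside the network
ip_in_cidr() {
  local n first last
  n=$(ip_to_int "$1")
  read -r first last <<< "$(cidr_bounds "$2")"
  (( n >= first && n <= last ))
}

# cidrs_overlap NET1/LEN NET2/LEN: succeeds if the two ranges share any address
cidrs_overlap() {
  local f1 l1 f2 l2
  read -r f1 l1 <<< "$(cidr_bounds "$1")"
  read -r f2 l2 <<< "$(cidr_bounds "$2")"
  (( f1 <= l2 && f2 <= l1 ))
}

# count_group_hosts INVENTORY GROUP: counts uncommented host lines under [GROUP]
count_group_hosts() {
  awk -v g="[$2]" '
    /^\[/ { in_group = ($1 == g); next }
    in_group && $0 !~ /^[ \t]*([#;]|$)/ { n++ }
    END { print n + 0 }' "$1"
}
```

For the values above, `(( $(count_group_hosts /etc/ansible/hosts etcd) % 2 == 1 ))`, `ip_in_cidr 10.20.254.254 10.20.0.0/16` and `! cidrs_overlap 10.20.0.0/16 172.20.0.0/16` should all succeed.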

On the master, set up password-less SSH login and distribute the Harbor certificate to every host (not needed for the Harbor and HAProxy hosts):

root@k8s-master1:~# ssh-keygen   # first generate a key pair on the master

root@k8s-master1:~# apt-get install sshpass   # install sshpass

root@k8s-master1:~# vim scp.sh   # write a script that distributes the public key

#!/bin/bash
# Target hosts
IP="
192.168.7.110
192.168.7.111
192.168.7.113
192.168.7.114
192.168.7.115
"
for node in ${IP}; do
    # "centos" is the root password on the target hosts
    sshpass -p centos ssh-copy-id -o StrictHostKeyChecking=no ${node}
    if [ $? -eq 0 ]; then
        ssh ${node} "mkdir -p /etc/docker/certs.d/harbor.struggle.net"
        scp /etc/docker/certs.d/harbor.struggle.net/harbor-ca.crt ${node}:/etc/docker/certs.d/harbor.struggle.net/harbor-ca.crt
        ssh ${node} "systemctl restart docker"
        echo "${node} key copy succeeded"
    else
        echo "${node} key copy failed"
    fi
done

Run scp.sh:

# bash scp.sh
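Before running the playbooks, it is worth confirming that scp.sh left every host reachable without a password and holding the Harbor certificate. A sketch assuming bash and OpenSSH; `check_nodes` and the `SSH` override variable are hypothetical:

```shell
#!/usr/bin/env bash
# check_nodes HOST...  (hypothetical helper)
# Logs in to each host non-interactively and checks that the Harbor certificate
# exists. BatchMode=yes makes ssh fail instead of prompting for a password,
# so "ok" means key-based login works. Set SSH=true to dry-run without connecting.
check_nodes() {
  local ssh_cmd=${SSH:-ssh} node rc=0
  local cert=/etc/docker/certs.d/harbor.struggle.net/harbor-ca.crt
  for node in "$@"; do
    if "$ssh_cmd" -n -o BatchMode=yes -o ConnectTimeout=5 "$node" "test -f ${cert}"; then
      echo "${node} ok"
    else
      echo "${node} FAILED"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_nodes 192.168.7.110 192.168.7.111 192.168.7.113 192.168.7.114 192.168.7.115`; a FAILED line means that host needs scp.sh rerun or manual attention.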

VII. Deploy the cluster with Ansible

1. Environment initialization

1. Upload the k8s 1.13.5 binary package (k8s.1-13-5.tar.gz) to the Linux host and extract it in /etc/ansible/bin

root@k8s-master1:/etc/ansible/bin# cd /etc/ansible/bin
root@k8s-master1:/etc/ansible/bin# tar xvf k8s.1-13-5.tar.gz   # extract the k8s package
root@k8s-master1:/etc/ansible/bin# mv bin/* .   # the archive contains its own bin/ directory, so move its contents up into this directory

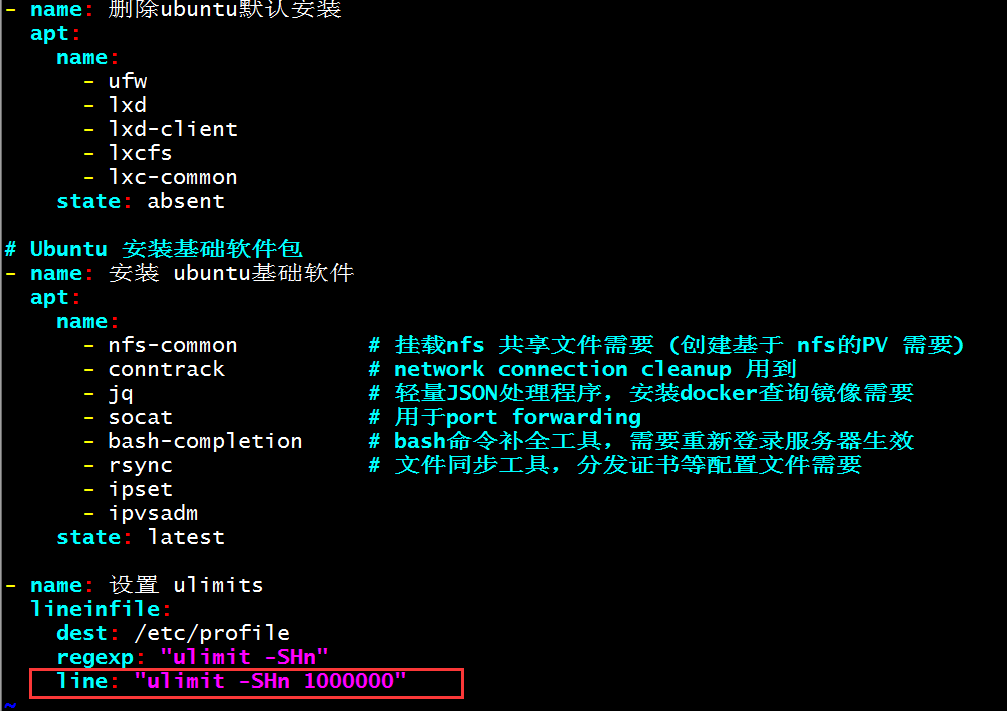

2. Edit the soft and hard limits template

root@k8s-master1:/etc/ansible# vim roles/prepare/templates/30-k8s-ulimits.conf.j2
* soft nofile 1000000
* hard nofile 1000000
* soft nproc 1000000
* hard nproc 1000000

3. Add a kernel parameter

root@k8s-master1:/etc/ansible# vim roles/prepare/templates/95-k8s-sysctl.conf.j2
# allow processes to bind to IP addresses not currently assigned to this host, such as a VIP
net.ipv4.ip_nonlocal_bind = 1

4. Raise the limit in the Debian task (the default is 65536; small and medium deployments can leave it unchanged)

root@k8s-master1:/etc/ansible# vim roles/prepare/tasks/debian.yml
line: "ulimit -SHn 1000000"

5. Change to /etc/ansible and run the playbook with ansible-playbook

root@k8s-master1:/etc/ansible/bin# cd ..
root@k8s-master1:/etc/ansible# ansible-playbook 01.prepare.yml

2. Deploy the etcd cluster

1. The bundled etcd and etcdctl are version 3.3.10; replace them with version 3.2.24

root@k8s-master1:/etc/ansible/bin# ./etcd --version
etcd Version: 3.3.10
Git SHA: 27fc7e2
Go Version: go1.10.4
Go OS/Arch: linux/amd64
root@k8s-master1:/etc/ansible/bin# ./etcdctl --version
etcdctl version: 3.3.10
API version: 2

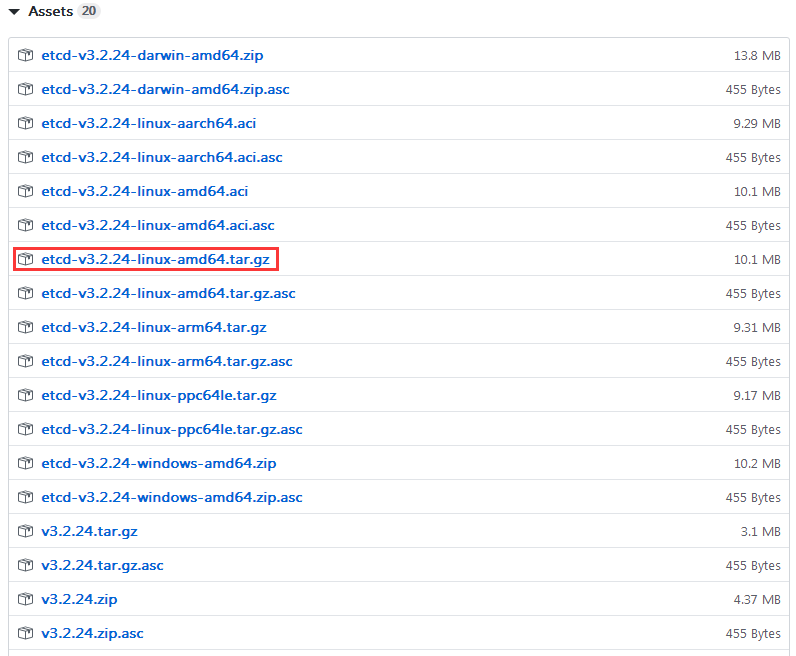

Download the etcd 3.2.24 release from GitHub: https://github.com/etcd-io/etcd/releases?after=v3.3.12

2. Extract the downloaded etcd 3.2.24 package and upload its binaries to the target directory

root@k8s-master1:/etc/ansible# mv bin/etcd* /opt/   # move the old etcd and etcdctl binaries to /opt
root@k8s-master1:/etc/ansible# cd bin
root@k8s-master1:/etc/ansible/bin# chmod +x etcd*   # upload the new etcd and etcdctl here first, then make them executable
root@k8s-master1:/etc/ansible/bin# cd ..
root@k8s-master1:/etc/ansible# bin/etcd --version   # verify the version
etcd Version: 3.2.24
Git SHA: 420a45226
Go Version: go1.8.7
Go OS/Arch: linux/amd64
root@k8s-master1:/etc/ansible# bin/etcdctl --version   # verify the version
etcdctl version: 3.2.24
API version: 2

3. Deploy etcd

root@k8s-master1:/etc/ansible# ansible-playbook 02.etcd.yml

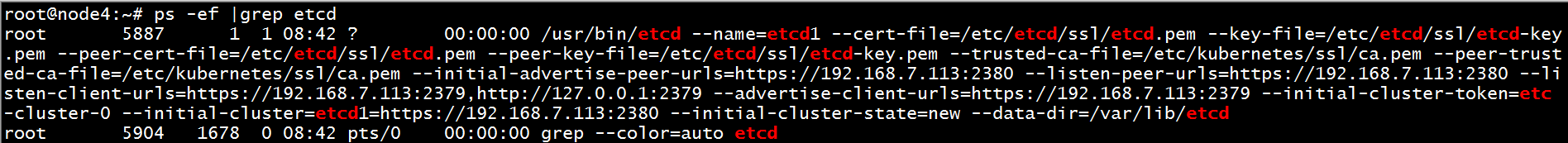

4. After etcd is deployed, check on the etcd server that the etcd process is running

5. Verify the etcd service on each etcd server

export NODE_IPS="192.168.7.113"   # etcd member IPs, space-separated; only one in this setup
for ip in ${NODE_IPS}; do
  ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://${ip}:2379 \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/etcd/ssl/etcd.pem \
    --key=/etc/etcd/ssl/etcd-key.pem \
    endpoint health
done
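The version checks in steps 1 and 2 compare the `--version` output by eye. A sketch that makes the check scriptable, assuming bash and awk; `parse_version` and `check_version` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# parse_version: prints the version number from the first "... version: X" line on stdin
# (matches both "etcd Version: 3.2.24" and "etcdctl version: 3.2.24").
parse_version() {
  awk 'tolower($0) ~ /version:/ { print $NF; exit }'
}

# check_version EXPECTED: reads `--version` output on stdin and fails on a mismatch
check_version() {
  local expected=$1 got
  got=$(parse_version)
  if [[ "$got" == "$expected" ]]; then
    echo "ok: ${got}"
  else
    echo "expected ${expected}, got ${got:-nothing}" >&2
    return 1
  fi
}
```

Usage from /etc/ansible: `bin/etcd --version | check_version 3.2.24 && bin/etcdctl --version | check_version 3.2.24`.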

3. Deploy the master

1. On the master, pull the pause image and push it to Harbor so that every node can pull it during the ansible-playbook run; pulling it from the overseas registry may fail.

root@k8s-master1:/etc/ansible# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1   # pull from the Aliyun mirror instead of the overseas registry
root@k8s-master1:/etc/ansible# docker login harbor.struggle.net   # log in to Harbor
Authenticating with existing credentials...
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
root@k8s-master1:/etc/ansible# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1 harbor.struggle.net/baseimages/pause-amd64:3.1   # tag the image
root@k8s-master1:/etc/ansible# docker push harbor.struggle.net/baseimages/pause-amd64:3.1   # push the image to Harbor

2. Edit the configuration file

root@k8s-master1:/etc/ansible# grep PROXY_MODE roles/ -R

roles/kube-node/defaults/main.yml:PROXY_MODE: "iptables"

roles/kube-node/templates/kube-proxy.service.j2: --proxy-mode={{ PROXY_MODE }}

root@k8s-master1:/etc/ansible# vim roles/kube-node/defaults/main.yml

SANDBOX_IMAGE: "harbor.struggle.net/baseimages/pause-amd64:3.1"   # point the sandbox image at the local Harbor

3. Deploy the master

root@k8s-master1:/etc/ansible# ansible-playbook 04.kube-master.yml

4. Deploy the worker nodes

root@k8s-master1:/etc/ansible# ansible-playbook 05.kube-node.yml

5. Deploy the Calico network plugin

The Calico package for the matching version can be downloaded from GitHub: https://github.com/projectcalico/calico/releases/download/v3.3.2/release-v3.3.2.tgz

1. Set the Calico version in the role defaults to the version you downloaded

root@k8s-master1:/etc/ansible# vim roles/calico/defaults/main.yml
# Supported calico versions: [v3.2.x] [v3.3.x] [v3.4.x]
calico_ver: "v3.3.2"   # set to the calico version you downloaded

2. Upload the downloaded Calico package to the Linux host and extract it

root@k8s-master1:/opt# rz
root@k8s-master1:/opt# tar xvf calico-release-v3.3.2.tgz
root@k8s-master1:/opt# cd /opt/release-v3.3.2/images/
root@k8s-master1:/opt/release-v3.3.2/images# ll
total 257720
drwxrwxr-x 2 liu liu      110 Dec  4  2018 ./
drwxrwxr-x 5 liu liu       66 Dec  4  2018 ../
-rw------- 1 liu liu 75645952 Dec  4  2018 calico-cni.tar
-rw------- 1 liu liu 56801280 Dec  4  2018 calico-kube-controllers.tar
-rw------- 1 liu liu 76076032 Dec  4  2018 calico-node.tar
-rw------- 1 liu liu 55373824 Dec  4  2018 calico-typha.tar

3. Load the images from the extracted package and push them to the local Harbor

root@k8s-master1:/opt/release-v3.3.2/images# docker login harbor.struggle.net   # log in to the Harbor registry
Authenticating with existing credentials...
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
root@k8s-master1:/opt/release-v3.3.2/images# docker load -i calico-node.tar   # load calico-node.tar into the local Docker image store
root@k8s-master1:/opt/release-v3.3.2/images# docker tag calico/node:v3.3.2 harbor.struggle.net/baseimages/calico-node:v3.3.2   # tag calico-node
root@k8s-master1:/opt/release-v3.3.2/images# docker push harbor.struggle.net/baseimages/calico-node:v3.3.2   # push the tagged calico-node to Harbor
root@k8s-master1:/opt/release-v3.3.2/images# docker load -i calico-kube-controllers.tar   # load calico-kube-controllers
root@k8s-master1:/opt/release-v3.3.2/images# docker tag calico/kube-controllers:v3.3.2 harbor.struggle.net/baseimages/calico-kube-controllers:v3.3.2   # tag calico-kube-controllers
root@k8s-master1:/opt/release-v3.3.2/images# docker push harbor.struggle.net/baseimages/calico-kube-controllers:v3.3.2   # push the tagged image to Harbor
root@k8s-master1:/opt/release-v3.3.2/images# docker load -i calico-cni.tar   # load calico-cni
root@k8s-master1:/opt/release-v3.3.2/images# docker tag calico/cni:v3.3.2 harbor.struggle.net/baseimages/calico-cni:v3.3.2   # tag calico-cni
root@k8s-master1:/opt/release-v3.3.2/images# docker push harbor.struggle.net/baseimages/calico-cni:v3.3.2   # push the tagged image to Harbor

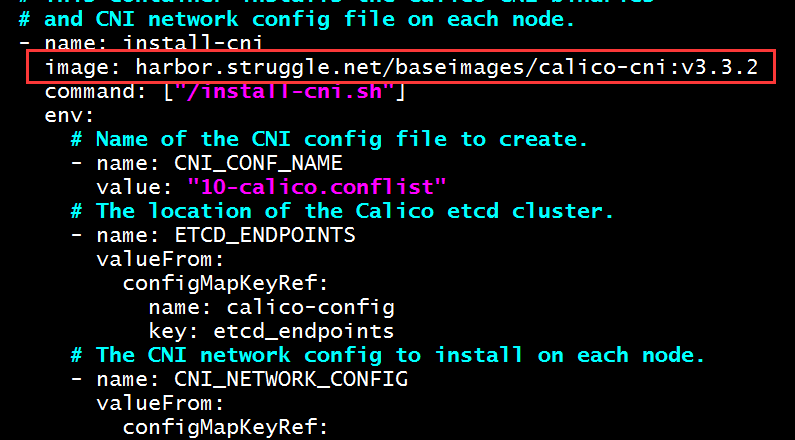

4. Edit the Calico template on the master so all image paths point to the local registry

root@k8s-master1:/etc/ansible# vim roles/calico/templates/calico-v3.3.yaml.j2

- name: calico-node
  image: harbor.struggle.net/baseimages/calico-node:v3.3.2   # point the image at the local registry

- name: install-cni
  image: harbor.struggle.net/baseimages/calico-cni:v3.3.2   # point the image at the local registry

- name: calico-kube-controllers
  image: harbor.struggle.net/baseimages/calico-kube-controllers:v3.3.2   # point the image at the local registry

5. Replace calicoctl with the version matching the Calico release (v3.3.2)

root@k8s-master1:/etc/ansible/bin# cd /opt/release-v3.3.2/bin/
root@k8s-master1:/opt/release-v3.3.2/bin# cp calicoctl /etc/ansible/bin   # copy the v3.3.2 binary into /etc/ansible/bin
root@k8s-master1:/opt/release-v3.3.2/bin# cd -
/etc/ansible/bin
root@k8s-master1:/etc/ansible/bin# ./calicoctl version   # calicoctl now matches the calico version set above
Client Version: v3.3.2
Build date: 2018-12-03T15:10:51+0000
Git commit: 594fd84e
no etcd endpoints specified

6. On every worker node, add the Harbor domain to /etc/hosts; without it the nodes cannot pull images from Harbor

# vim /etc/hosts
192.168.7.112 harbor.struggle.net

7. On the master, run the network playbook with ansible-playbook.

root@k8s-master1:/etc/ansible/bin# cd ..
root@k8s-master1:/etc/ansible# ansible-playbook 06.network.yml
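Step 3 repeats the same load, tag and push sequence for each of the three Calico images. A sketch of that loop, assuming bash, the image tarballs extracted under /opt/release-v3.3.2/images, and an existing `docker login harbor.struggle.net`; `push_calico_images` and the `DOCKER`/`REGISTRY` override variables are hypothetical:

```shell
#!/usr/bin/env bash
# push_calico_images [IMAGE_DIR] [VERSION]  (hypothetical helper)
# Loads calico-{node,kube-controllers,cni}.tar from IMAGE_DIR, retags each
# calico/<name>:<version> as $REGISTRY/calico-<name>:<version> and pushes it,
# following the naming used in step 3. Set DOCKER=echo for a dry run.
push_calico_images() {
  local dir=${1:-.} version=${2:-v3.3.2} name target
  local docker=${DOCKER:-docker} registry=${REGISTRY:-harbor.struggle.net/baseimages}
  for name in node kube-controllers cni; do
    target="${registry}/calico-${name}:${version}"
    "$docker" load -i "${dir}/calico-${name}.tar" || return 1
    "$docker" tag "calico/${name}:${version}" "$target" || return 1
    "$docker" push "$target" || return 1
  done
}
```

Usage: `push_calico_images /opt/release-v3.3.2/images v3.3.2`; with `DOCKER=echo` in front it only prints the nine commands.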

The basic Kubernetes setup is now complete. Verify the Calico status on the nodes:

root@k8s-master1:/etc/ansible# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------+-------------------+-------+----------+-------------+
| PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+---------------+-------------------+-------+----------+-------------+
| 192.168.7.115 | node-to-node mesh | up    | 13:19:33 | Established |
+---------------+-------------------+-------+----------+-------------+
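Instead of reading the BGP table by eye, the `calicoctl node status` output can be checked from a script. A sketch assuming bash and awk, relying on the table layout shown above (INFO is the last column); `all_peers_established` is hypothetical:

```shell
#!/usr/bin/env bash
# all_peers_established: reads `calicoctl node status` output on stdin and succeeds
# only if at least one BGP peer is listed and every peer's INFO is "Established".
all_peers_established() {
  awk -F'|' '
    $2 ~ /[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+/ {   # data rows start with a peer IP
      peers++
      info = $6
      gsub(/^[ \t]+|[ \t]+$/, "", info)
      if (info != "Established") { bad++; print "not established:" $2 }
    }
    END { exit !(peers > 0 && bad == 0) }'
}
```

Usage: `calicoctl node status | all_peers_established`.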

Add a new worker node from the master

1. Add the Harbor domain to /etc/hosts on the new worker node

root@node5:~# vim /etc/hosts
192.168.7.112 harbor.struggle.net

2. Edit the hosts inventory in /etc/ansible and run the add-node playbook

root@k8s-master1:/etc/ansible# vim hosts
[kube-node]
192.168.7.115

[new-node] # reserved group, used later when adding worker nodes
192.168.7.114   # the new worker node
root@k8s-master1:/etc/ansible# vim 20.addnode.yml
- docker   # if the new node already has Docker installed, delete this line so Docker is not installed again
root@k8s-master1:/etc/ansible# ansible-playbook 20.addnode.yml

3. On the master, confirm that the new node is Ready

root@k8s-master1:/etc/ansible# kubectl get node
NAME            STATUS   ROLES   AGE     VERSION
192.168.7.111   Ready    node    2m3s    v1.13.5
192.168.7.114   Ready    node    29m     v1.13.5
192.168.7.115   Ready    node    4h25m   v1.13.5
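The same `192.168.7.112 harbor.struggle.net` line is added to /etc/hosts on Harbor, the masters and every node, including the new node in step 1 above. A sketch of an idempotent version that does not duplicate the line when rerun, assuming bash and awk; `ensure_hosts_entry` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# ensure_hosts_entry IP NAME [FILE]  (hypothetical helper)
# Appends "IP NAME" to FILE (default /etc/hosts) unless a line already maps
# IP to NAME, so it is safe to run more than once on the same host.
ensure_hosts_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  if awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage (as root on each host): `ensure_hosts_entry 192.168.7.112 harbor.struggle.net`.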

Add a new master node from the master

1. On the new master node, add the Harbor domain to /etc/hosts (alternatively, change its DNS setting to use the Windows host's DNS server).

# vim /etc/hosts
192.168.7.112 harbor.struggle.net

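2. Edit the hosts file in /etc/ansible to add the new host 192.168.7.111. Because Docker was already installed on this master earlier, remove the Docker installation step from the playbook.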

root@k8s-master1:/etc/ansible# vim hosts
[kube-master]
192.168.7.110

[new-master] # reserved group, used later when adding master nodes
192.168.7.111   # the new master
root@k8s-master1:/etc/ansible# vim 21.addmaster.yml
- docker   # Docker is already installed on this master, so comment this line out

3. Run the playbook

root@k8s-master1:/etc/ansible# ansible-playbook 21.addmaster.yml

4. Check the status of the new master; output like the following means it was added successfully:

root@k8s-master1:/etc/ansible# kubectl get node
NAME            STATUS                     ROLES    AGE     VERSION
192.168.7.110   Ready,SchedulingDisabled   master   5h4m    v1.13.5
192.168.7.111   Ready,SchedulingDisabled   master   22m     v1.13.5
192.168.7.114   Ready                      node     49m     v1.13.5
192.168.7.115   Ready                      node     4h45m   v1.13.5
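The `kubectl get node` output can also be checked from a script, for example after each add-node or add-master run. A sketch assuming bash and awk and the default column layout (STATUS in the second column); `all_nodes_ready` is hypothetical:

```shell
#!/usr/bin/env bash
# all_nodes_ready: reads `kubectl get node` output on stdin and succeeds only if
# at least one node is listed and every node's STATUS starts with "Ready"
# ("Ready,SchedulingDisabled" on the masters counts as ready).
all_nodes_ready() {
  awk '
    NR == 1 && $1 == "NAME" { next }        # skip the header row
    NF >= 2 {
      total++
      split($2, status, ",")
      if (status[1] != "Ready") { bad++; print "not ready: " $1 }
    }
    END { exit !(total > 0 && bad == 0) }'
}
```

Usage: `kubectl get node | all_nodes_ready`.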

Reconfigure HAProxy

Add the new master to the HAProxy backend for high availability: if one master goes down, the other keeps serving requests.

root@node5:~# vim /etc/haproxy/haproxy.cfg
listen k8s-api-server-6443
    bind 192.168.7.248:6443
    mode tcp
    server 192.168.7.110 192.168.7.110:6443 check inter 2000 fall 3 rise 5
    server 192.168.7.111 192.168.7.111:6443 check inter 2000 fall 3 rise 5

root@node5:~# systemctl restart haproxy
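Once both masters are behind HAProxy, a quick test is to confirm that the API server port answers through the VIP and on each master. A sketch assuming bash (for its `/dev/tcp` redirection) and coreutils `timeout`; `port_open` is a hypothetical helper that only checks TCP reachability, not API server health:

```shell
#!/usr/bin/env bash
# port_open HOST PORT [SECONDS]  (hypothetical helper)
# Succeeds if a TCP connection to HOST:PORT opens within the timeout.
# Uses bash's /dev/tcp redirection, so it must run under bash, not sh.
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

Usage: `port_open 192.168.7.248 6443 && echo "VIP ok"`, and `for m in 192.168.7.110 192.168.7.111; do port_open "$m" 6443 || echo "$m:6443 unreachable"; done`.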