Cluster plan

s101(master+slave)

s102(master+slave)

s103(slave)

Distribute the tarball to every node

[centos@s101 /home/centos]$xsync.sh flink-1.10.1-bin-scala_2.12.tgz

Extract the tarball on every node, then create the symlink and sync it

xcall.sh tar -zxvf /home/centos/flink-1.10.1-bin-scala_2.12.tgz -C /soft/

[centos@s101 /soft]$ln -s flink-1.10.1/ flink

[centos@s101 /soft]$xsync.sh flink
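xsync.sh and xcall.sh are the author's own helper scripts. They are not part of Flink or Hadoop, and their contents are not shown here. The following is a minimal sketch of what such helpers commonly do. It assumes passwordless SSH, rsync on every node, and the three hosts from the plan above. The function names `xcall`/`xsync` and the `DRY_RUN` switch are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical stand-ins for the author's xcall.sh / xsync.sh helpers.
# Assumptions: passwordless SSH between nodes, rsync installed everywhere,
# and the host list below. Set DRY_RUN=1 to print commands instead of running them.

HOSTS=(s101 s102 s103)

# run_or_print: execute a command, or only echo it when DRY_RUN=1
run_or_print() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# xcall: run the same command on every host over SSH
xcall() {
  local h
  for h in "${HOSTS[@]}"; do
    run_or_print ssh "$h" "$*"
  done
}

# xsync: copy a file or directory to the same absolute path on every other host
xsync() {
  local src dir name h self
  src="$1"
  dir="$(cd "$(dirname "$src")" && pwd -P)"   # absolute parent directory
  name="$(basename "$src")"
  self="${HOSTNAME%%.*}"                      # short name of the local host
  for h in "${HOSTS[@]}"; do
    [ "$h" = "$self" ] && continue            # skip the node we are on
    # -a preserves permissions/times and recreates symlinks as symlinks
    run_or_print rsync -a "$dir/$name" "$h:$dir/"
  done
}
```

For example, `DRY_RUN=1 xcall mkdir -p /soft/flink/tmp` prints the three ssh commands without running them. In this sketch, rsync -a recreates a symlink such as /soft/flink as a symlink on the target instead of copying the directory it points to.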

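Note

Add the Hadoop jar dependency. The Flink 1.10 binary does not bundle Hadoop, so without this jar Flink cannot use the hdfs:// paths configured below and reports an error.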

[centos@s101 /home/centos]$cp flink-shaded-hadoop-2-uber-2.7.5-10.0.jar /soft/flink/lib/

[centos@s101 /soft/flink/lib]$cd /soft/flink/lib/

[centos@s101 /soft/flink/lib]$xsync.sh flink-shaded-hadoop-2-uber-2.7.5-10.0.jar
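You can confirm that the jar arrived on every node by running a small check on each one, for example through xcall.sh. The function below is a hypothetical helper. It only checks whether the lib directory contains a file matching flink-shaded-hadoop-2-uber-*.jar:

```shell
#!/usr/bin/env bash
# Hypothetical check: does a Flink lib directory contain a flink-shaded-hadoop uber jar?
# Returns 0 and prints the jar path if found, otherwise prints an error and returns 1.
has_shaded_hadoop() {
  local libdir="${1:-/soft/flink/lib}"
  local jar
  for jar in "$libdir"/flink-shaded-hadoop-2-uber-*.jar; do
    # If nothing matches, the glob stays literal, so test that the file really exists
    if [ -f "$jar" ]; then
      echo "found: $jar"
      return 0
    fi
  done
  echo "missing flink-shaded-hadoop-2-uber jar in $libdir" >&2
  return 1
}
```

Running `has_shaded_hadoop` with no argument checks /soft/flink/lib.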

Edit the configuration files

[centos@s101 /soft/flink/conf]$nano flink-conf.yaml

# Hostname (or IP address) of the master (JobManager)
jobmanager.rpc.address: s101
# Temporary directory for files created by each TaskManager
taskmanager.tmp.dirs: /soft/flink/tmp
# Use the filesystem state backend to store snapshots
state.backend: filesystem
# Default: none. Directory for checkpoint data files and metadata
state.checkpoints.dir: hdfs://s101:8020/flink/flink-checkpoints
# Default: none. Default directory for savepoints
state.savepoints.dir: hdfs://s101:8020/flink/flink-checkpoints
# The high-availability mode. Possible options are 'NONE' or 'zookeeper'.
# Use ZooKeeper for high availability
high-availability: zookeeper
# Store JobManager metadata in HDFS; it holds everything needed to recover a JobManager
high-availability.storageDir: hdfs://s101:8020/flink/ha/
high-availability.zookeeper.quorum: s101:2181,s102:2181,s103:2181
# Root ZooKeeper node under which all cluster nodes are placed
high-availability.zookeeper.path.root: /flink
# Custom cluster ID
high-availability.cluster-id: /starFlinkCluster
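Flink reads flink-conf.yaml line by line. Anything after `#` is a comment, and every other non-empty line must have the form `key: value`, with a space after the colon. A line written as `key:/value` is not split into a key and a value, and a key defined twice (for example a repeated `high-availability` entry) is easy to miss. The following is a small lint sketch, a hypothetical helper and not a Flink tool, that flags both problems:

```shell
#!/usr/bin/env bash
# Hypothetical lint for flink-conf.yaml: reports lines without a ": " separator
# and keys that appear more than once. Exits non-zero if anything is found.
lint_flink_conf() {
  awk '
    {
      line = $0
      sub(/#.*/, "", line)          # drop comments
      sub(/^[ \t]+/, "", line)      # trim leading whitespace
      sub(/[ \t]+$/, "", line)      # trim trailing whitespace
      if (line == "") next
      i = index(line, ": ")
      if (i == 0) {
        printf "line %d: missing \": \" separator: %s\n", NR, line
        bad = 1
        next
      }
      key = substr(line, 1, i - 1)
      sub(/[ \t]+$/, "", key)
      if (key in seen) {
        printf "line %d: duplicate key %s (first on line %d)\n", NR, key, seen[key]
        bad = 1
      } else {
        seen[key] = NR
      }
    }
    END { exit bad }
  ' "${1:-/soft/flink/conf/flink-conf.yaml}"
}
```

Run `lint_flink_conf` before distributing the conf directory. If it prints nothing and exits 0, every line parses as a single key/value pair.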

[centos@s101 /soft/flink/conf]$nano masters

s101:8081

s102:8081

[centos@s101 /soft/flink/conf]$nano slaves

s101

s102

s103
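start-cluster.sh reads conf/masters, one `host:webui-port` per line, and starts a JobManager (standalonesession) on each listed host. It also reads conf/slaves, one host per line, and starts a TaskManager (taskexecutor) on each of those. This is why s101 and s102 each run both daemons in the startup log further down. The following hypothetical helper only reads the two files and previews that plan. It starts nothing:

```shell
#!/usr/bin/env bash
# Hypothetical preview of the daemons start-cluster.sh should launch,
# based on the masters and slaves files in a Flink conf directory.
plan_daemons() {
  local conf="${1:-/soft/flink/conf}" line host port
  while IFS= read -r line || [ -n "$line" ]; do
    line="${line%%#*}"                         # strip comments
    line="${line//[[:space:]]/}"               # strip whitespace
    [ -z "$line" ] && continue
    host="${line%%:*}"
    port="${line#*:}"
    [ "$port" = "$line" ] && port="(default)"  # no ":port" given
    echo "jobmanager  $host webui-port=$port"
  done < "$conf/masters"
  while IFS= read -r line || [ -n "$line" ]; do
    line="${line%%#*}"
    line="${line//[[:space:]]/}"
    [ -z "$line" ] && continue
    echo "taskmanager $line"
  done < "$conf/slaves"
}
```

With the files above, the preview lists two JobManagers (s101, s102) and three TaskManagers (s101, s102, s103).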

Create the temporary directory on all nodes

mkdir /soft/flink/tmp

Distribute the configuration

[centos@s101 /soft/flink]$scp -r /soft/flink/conf s102:/soft/flink/

[centos@s101 /soft/flink]$scp -r /soft/flink/conf s103:/soft/flink/

Edit the configuration file on s102

[centos@s102 /soft/flink/conf]$nano flink-conf.yaml

# Hostname (or IP address) of the master (JobManager)

jobmanager.rpc.address: s102
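The whole conf directory was copied from s101, so on s102 only jobmanager.rpc.address needs to change. The following is a non-interactive sketch that does the same edit with sed instead of nano. The function name is hypothetical, and `-i.bak` is used so it works with both GNU and BSD sed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set jobmanager.rpc.address in a flink-conf.yaml,
# equivalent to the manual nano edit on s102. Other keys are left untouched.
set_rpc_address() {
  local host="$1" conf="${2:-/soft/flink/conf/flink-conf.yaml}"
  if grep -q '^jobmanager\.rpc\.address:' "$conf"; then
    # Replace the whole line that starts with the key, then drop sed's backup file
    sed -i.bak "s/^jobmanager\.rpc\.address:.*/jobmanager.rpc.address: ${host}/" "$conf" \
      && rm -f "$conf.bak"
  else
    # Key not present yet: append it
    echo "jobmanager.rpc.address: ${host}" >> "$conf"
  fi
}
```

On s102 this would be run as `set_rpc_address s102`.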

YARN-based environment: start Hadoop first

Start HDFS and YARN:

start-dfs.sh

start-yarn.sh

Start the YARN web proxy server:

yarn-daemon.sh start proxyserver

Start the Flink cluster

[centos@s101 /soft/flink/bin]$./start-cluster.sh

Starting HA cluster with 2 masters.

Starting standalonesession daemon on host s101.

Starting standalonesession daemon on host s102.

Starting taskexecutor daemon on host s101.

Starting taskexecutor daemon on host s102.

Starting taskexecutor daemon on host s103.
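You can also confirm from the command line that the three TaskManagers started above have registered. The JobManager's REST API listens on the same port as the web UI. The following sketch assumes curl is installed and that `GET /overview` returns a JSON object with a `taskmanagers` field. The helper names and the default URL are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical check against Flink's REST API (GET /overview on the web UI port).
# JSON is parsed with sed to avoid extra dependencies.

# taskmanager_count: read /overview JSON on stdin, print the "taskmanagers" value
taskmanager_count() {
  sed -n 's/.*"taskmanagers":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# check_cluster: compare the registered TaskManager count with the expected one
check_cluster() {
  local url="${1:-http://s101:8081}" expected="${2:-3}" n
  n="$(curl -fsS "$url/overview" | taskmanager_count)"
  if [ "$n" = "$expected" ]; then
    echo "OK: $n TaskManagers registered"
  else
    echo "expected $expected TaskManagers, got '${n}'" >&2
    return 1
  fi
}
```

`check_cluster http://s101:8081 3` returns 0 only when all three TaskManagers from conf/slaves are registered.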

Check the cluster in the web UI

http://192.168.17.101:8081/#/job-manager/config

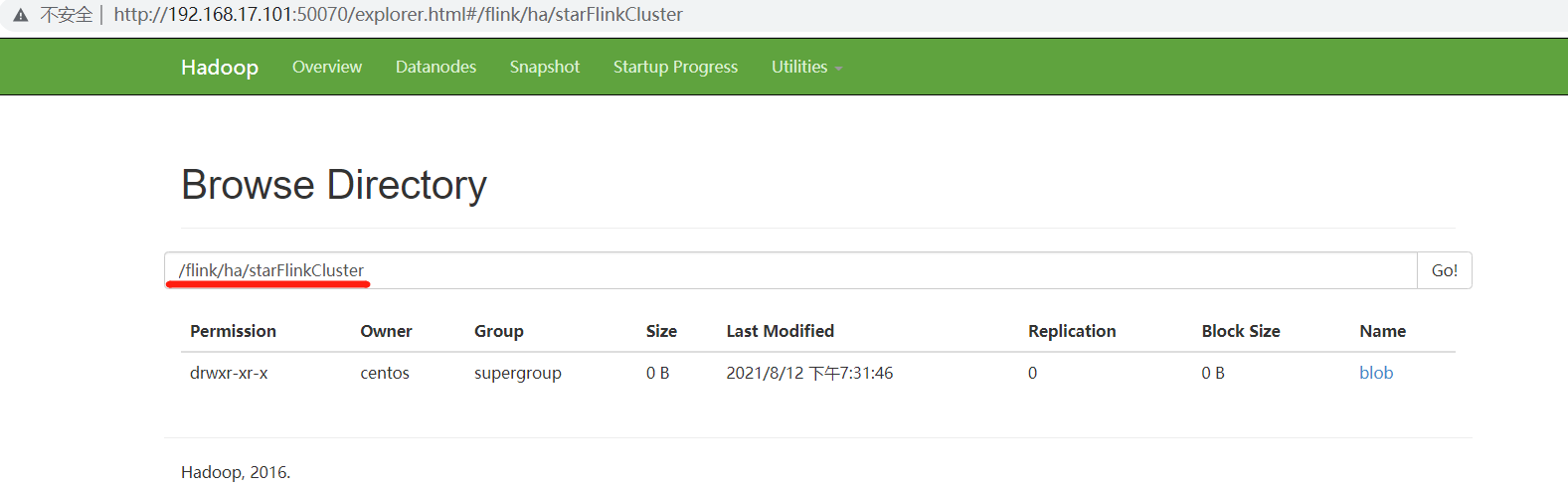

A directory for Flink's data is created in HDFS.