Supplement: download the Hadoop source code

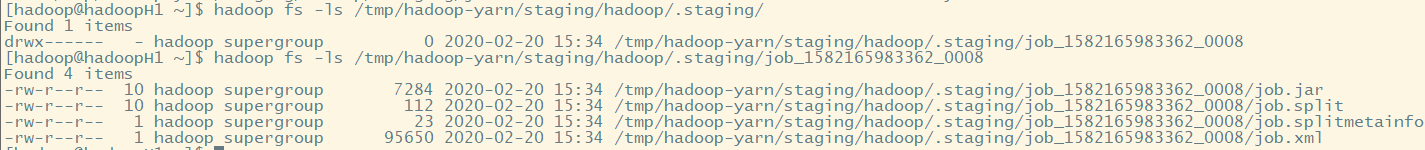

I. The YARN framework: resource scheduling

(1) YARN framework flowchart

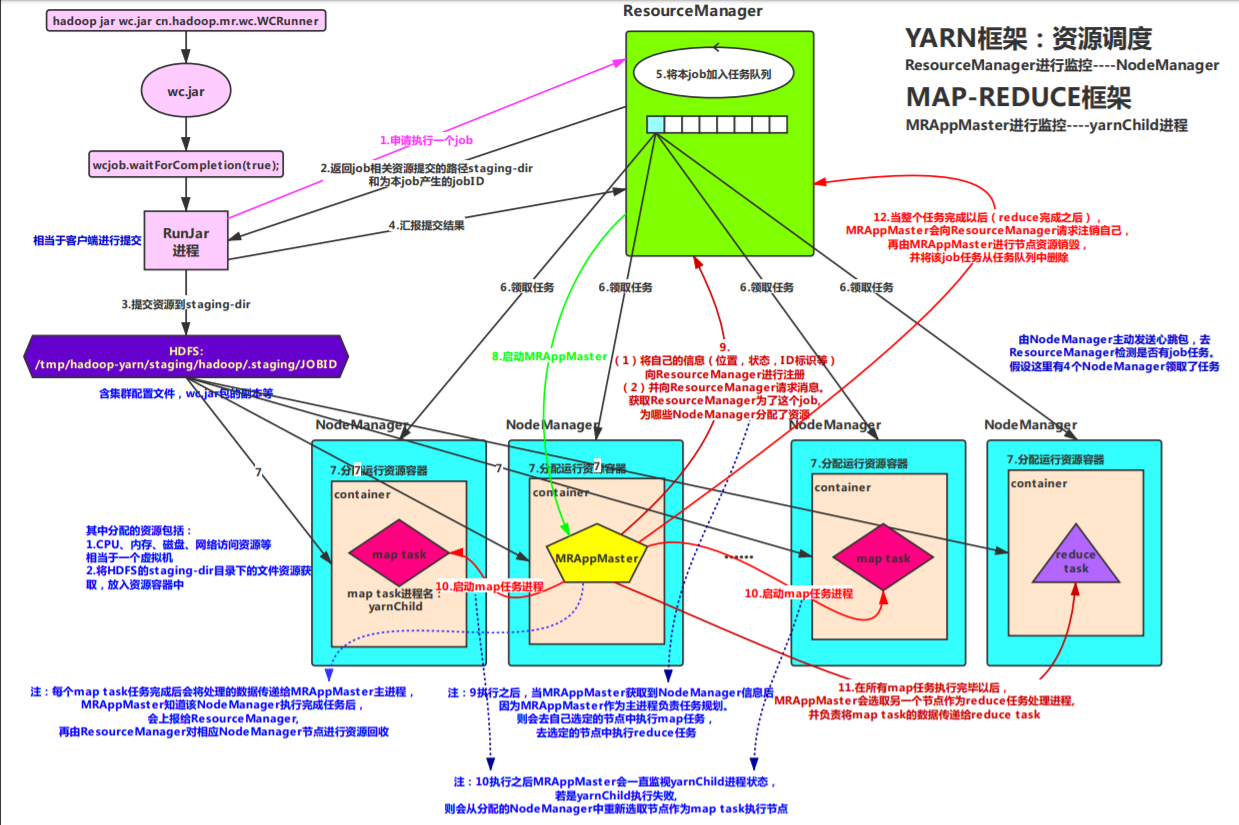

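Note: the YARN framework only manages resources. When a program is to be run, YARN allocates it nodes, memory, CPU and other resources, but it does not manage how the program actually runs, so it knows nothing about MapReduce's execution logic. Because of this loose coupling, YARN is more widely applicable and can host other kinds of programs as well.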

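Supplement: the MapReduce framework does know the execution logic of the map-reduce programs we write. Our own map-reduce code contains no management logic for scheduling and assigning tasks; that logic is encapsulated in the MapReduce framework as the MRAppMaster class, which manages the execution of the whole map-reduce job (it is the manager of the map-reduce program).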
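To make this division of labour concrete, here is a toy Python model, not Hadoop code: a resource manager that hands out generic containers knowing only memory and vcores, and a hypothetical `ToyMRAppMaster` that alone decides which map or reduce task goes into each container. All class names, hostnames (`hadoopH2`, `hadoopH3`) and resource figures are illustrative assumptions; the real MRAppMaster also handles task failures, speculative execution and reduce slow-start, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Container:
    node: str
    memory_mb: int
    vcores: int


class ToyResourceManager:
    """Toy RM: knows only per-node free memory/vcores, never what runs in a container."""

    def __init__(self, free: Dict[str, Tuple[int, int]]):
        self.free = dict(free)

    def allocate(self, memory_mb: int, vcores: int) -> Optional[Container]:
        for node, (mem, cpu) in self.free.items():
            if mem >= memory_mb and cpu >= vcores:
                self.free[node] = (mem - memory_mb, cpu - vcores)
                return Container(node, memory_mb, vcores)
        return None  # no node currently has enough free resources


class ToyMRAppMaster:
    """Hypothetical stand-in for MRAppMaster: it owns the task list and its ordering."""

    def __init__(self, rm: ToyResourceManager, num_maps: int, num_reduces: int):
        self.rm = rm
        self.pending = [f"map-{i}" for i in range(num_maps)]
        self.pending += [f"reduce-{i}" for i in range(num_reduces)]

    def run(self, memory_mb: int = 1024, vcores: int = 1) -> List[Tuple[str, str]]:
        placements = []
        while self.pending:
            container = self.rm.allocate(memory_mb, vcores)
            if container is None:
                break  # a real AM would keep asking on later heartbeats
            task = self.pending.pop(0)  # the AM, not the RM, decides what runs here
            placements.append((task, container.node))
        return placements


if __name__ == "__main__":
    rm = ToyResourceManager({"hadoopH2": (2048, 2), "hadoopH3": (1024, 1)})
    am = ToyMRAppMaster(rm, num_maps=2, num_reduces=1)
    print(am.run())
```

With the example nodes above, `am.run()` returns `[("map-0", "hadoopH2"), ("map-1", "hadoopH2"), ("reduce-0", "hadoopH3")]`; the resource manager never sees the words "map" or "reduce", which is the loose coupling the note describes.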

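Key point: in step 6, the NodeManager actively sends heartbeats to the ResourceManager to check whether there are tasks from a job waiting; a NodeManager (which runs on the same host as a DataNode) picks up a task only when it has the required resources free.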
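A minimal sketch of the heartbeat-driven pull described in step 6, assuming a single FIFO queue of requests sized by memory only. It is a simplification: in real YARN the scheduler allocates containers when a NodeManager heartbeat arrives, and the ApplicationMaster then asks that NodeManager to launch them. Class names, hostnames and sizes here are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Request:
    task: str
    memory_mb: int


class PullResourceManager:
    """Toy RM that only hands out work in response to a heartbeat."""

    def __init__(self) -> None:
        self.pending: Deque[Request] = deque()

    def submit(self, req: Request) -> None:
        self.pending.append(req)

    def on_heartbeat(self, free_mb: int) -> List[Request]:
        """Return the queued requests that fit into the reporting node's free memory."""
        granted: List[Request] = []
        remaining: Deque[Request] = deque()
        while self.pending:
            req = self.pending.popleft()
            if req.memory_mb <= free_mb:
                free_mb -= req.memory_mb
                granted.append(req)
            else:
                remaining.append(req)  # too big for this node; wait for another heartbeat
        self.pending = remaining
        return granted


class ToyNodeManager:
    def __init__(self, name: str, free_mb: int) -> None:
        self.name = name
        self.free_mb = free_mb

    def heartbeat(self, rm: PullResourceManager) -> List[str]:
        # The NodeManager initiates contact and reports its free memory.
        granted = rm.on_heartbeat(self.free_mb)
        for req in granted:
            self.free_mb -= req.memory_mb
        return [req.task for req in granted]


if __name__ == "__main__":
    rm = PullResourceManager()
    for task, mb in [("map-0", 1024), ("map-1", 1024), ("reduce-0", 2048)]:
        rm.submit(Request(task, mb))
    small = ToyNodeManager("hadoopH2", 1536)
    big = ToyNodeManager("hadoopH3", 4096)
    print(small.heartbeat(rm))
    print(big.heartbeat(rm))
```

The first heartbeat from the smaller node takes only `map-0`; `map-1` and `reduce-0` stay queued until a node with enough free memory heartbeats.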

MRAppMaster is launched by the YARN framework dynamically, on a node chosen at run time rather than one fixed in advance.

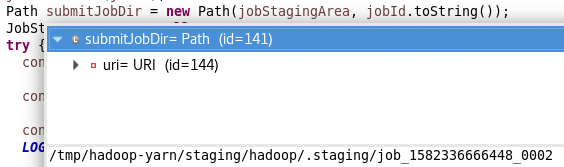

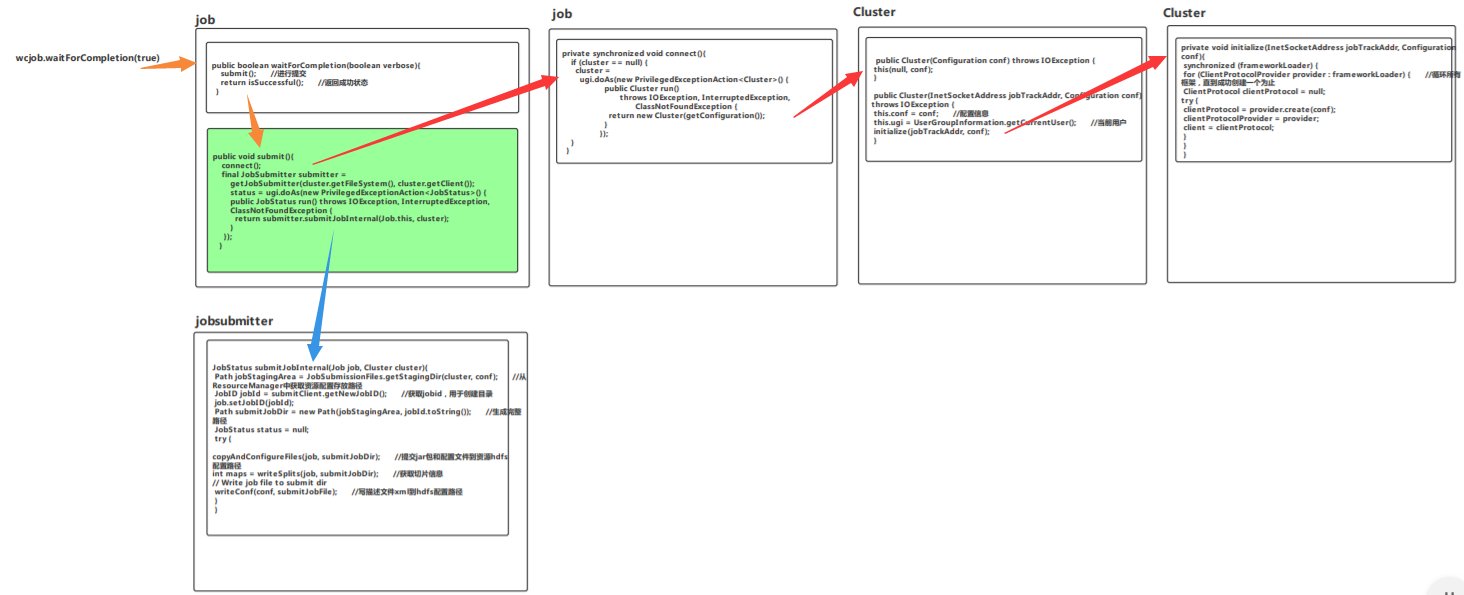

(2) Supplement: the resources RunJar uploads to HDFS

1. job.jar is the wc.jar package we built.

2. job.split contains the following:

SPL/org.apache.hadoop.mapreduce.lib.input.FileSplit(hdfs://hadoopH1:9000/wc/input/wcdata.txt;

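It contains the HDFS path and file name of the input data. (job.split is a binary file; the line above shows only its printable characters.)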

3. job.splitmetainfo contains the following:

META-SPhadoopH1;

After the `META-SP` header, `hadoopH1` is the hostname of the node that holds the split's data.

4. job.xml contains the job's configuration, including the cluster's various configuration settings.
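The odd characters in job.split and job.splitmetainfo come from binary length fields. The sketch below rebuilds both files under my reading of Hadoop's `JobSplitWriter` and `FileSplit` serialization (an assumption, not checked against a specific Hadoop version): a `SPL` header, a 4-byte version, then the class name and the path each prefixed by a one-byte length, then two 8-byte longs for start and length. Keeping only printable bytes reproduces the lines in the note: `/` is byte 47, the length of the class name, and `(` is byte 40, the length of the HDFS path. The trailing `;` (0x3B) is consistent with a split length of 59 bytes, but that figure is inferred from the byte, not stated in the note; the offset 7 used for job.splitmetainfo is likewise an assumption.

```python
import struct
from typing import List


def write_vint_small(n: int) -> bytes:
    # WritableUtils.writeVInt/writeVLong store values in -112..127 as one byte;
    # this sketch only supports that range.
    if not -112 <= n <= 127:
        raise ValueError("sketch only supports single-byte variable-length ints")
    return struct.pack(">b", n)


def write_string(s: str) -> bytes:
    # Text.writeString: variable-length byte count, then the UTF-8 bytes.
    data = s.encode("utf-8")
    return write_vint_small(len(data)) + data


def build_job_split(path: str, start: int, length: int) -> bytes:
    """Assumed layout of job.split holding a single FileSplit."""
    out = b"SPL"                      # split file header
    out += struct.pack(">i", 1)       # split format version (assumed to be 1)
    out += write_string("org.apache.hadoop.mapreduce.lib.input.FileSplit")
    out += write_string(path)         # FileSplit: file path
    out += struct.pack(">q", start)   # FileSplit: start offset (8-byte long)
    out += struct.pack(">q", length)  # FileSplit: length in bytes (8-byte long)
    return out


def build_split_meta(hosts: List[str], start_offset: int, data_length: int) -> bytes:
    """Assumed layout of job.splitmetainfo holding one split's metadata."""
    out = b"META-SP"                        # meta file header
    out += write_vint_small(1)              # meta format version (assumed to be 1)
    out += write_vint_small(1)              # number of splits
    out += write_vint_small(len(hosts))     # number of locations for this split
    for host in hosts:
        out += write_string(host)           # hostname holding the split's data
    out += write_vint_small(start_offset)   # where this split starts inside job.split
    out += write_vint_small(data_length)    # size of the split's input data
    return out


def printable_view(data: bytes) -> str:
    # Mimic a text viewer that silently drops non-printable bytes.
    return "".join(chr(b) for b in data if 0x20 <= b < 0x7F)


if __name__ == "__main__":
    split = build_job_split("hdfs://hadoopH1:9000/wc/input/wcdata.txt", 0, 59)
    meta = build_split_meta(["hadoopH1"], 7, 59)
    print(printable_view(split))
    print(printable_view(meta))
```

Run as a script, the two printed lines match the fragments quoted in items 2 and 3, which supports (but does not prove) the assumed layout.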