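- 1. Production Kubernetes platform architecture

- 2. Three officially supported deployment methods

- 3. Server planning

- 4. System initialization

- 5. Deploying the etcd cluster

- 5.1 Installing the cfssl tools

- 5.2 Generating the etcd certificates

- 5.3 Deploying etcd

- 6. Deploying the Master components

- 7. Deploying the Node components

- 8. Deploying the Web UI (Dashboard)

- 9. Deploying the in-cluster DNS service (CoreDNS)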

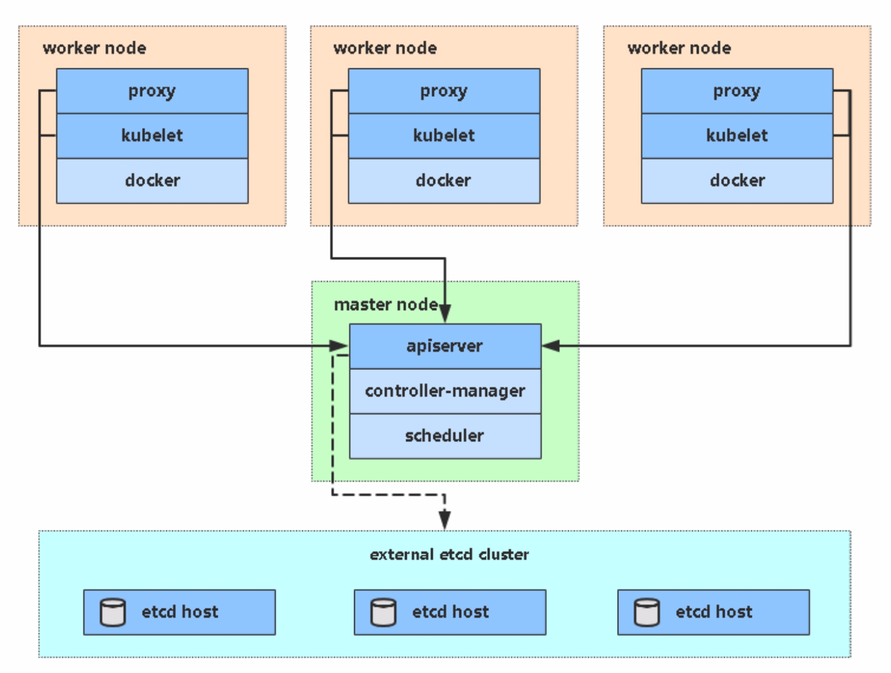

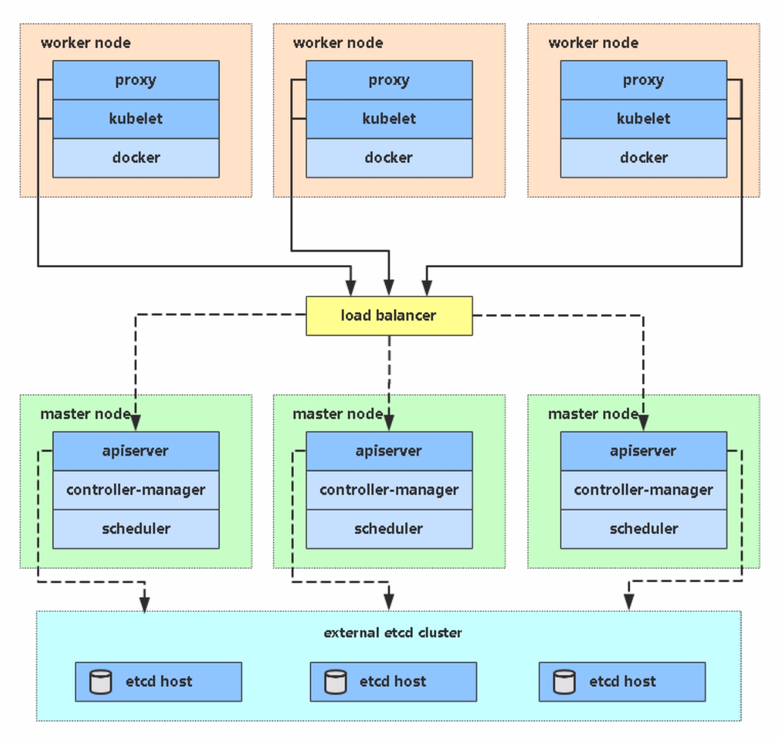

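1. Production Kubernetes platform architecture

- Single-master cluster

- Multi-master cluster (HA)

2. Three officially supported deployment methods

- minikube

Minikube is a tool that quickly runs a single-node Kubernetes cluster locally. It is intended only for trying out Kubernetes or for day-to-day development.

Documentation:

https://kubernetes.io/docs/setup/minikube/

- kubeadm

Kubeadm is also a tool. It provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

Documentation:

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

- Binary packages

Recommended. Download the release binaries from the official repository and deploy each component by hand to assemble a Kubernetes cluster.

Download:

https://github.com/kubernetes/kubernetes/releases

3. Server planning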

| Role | IP | Components |
|---|---|---|
| k8s-master-01 | 192.168.2.10 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| k8s-node-01 | 192.168.2.11 | kubelet, kube-proxy, docker, etcd |
| k8s-node-02 | 192.168.2.12 | kubelet, kube-proxy, docker, etcd |

4. System initialization

- Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

- Disable SELinux:

setenforce 0 # temporary

sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

- Disable swap:

swapoff -a # temporary

vim /etc/fstab # permanent: comment out the swap entry

- Synchronize the system time:

ntpdate time.windows.com

- Add hosts entries:

vim /etc/hosts

192.168.2.10 k8s-master-01

192.168.2.11 k8s-node-01

192.168.2.12 k8s-node-02

- Set the hostname (use the matching name on each machine):

hostnamectl set-hostname k8s-master-01
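The permanent swap step above is a manual edit of /etc/fstab. As a minimal sketch of how that edit could be scripted, the helper below comments out active swap entries. The function name `disable_swap_in_fstab` is hypothetical, and the sketch assumes GNU sed and a standard fstab layout in which the third field is the filesystem type.

```shell
#!/bin/sh
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# The path is a parameter so the function can be tried on a copy first.
disable_swap_in_fstab() {
    fstab="${1:-/etc/fstab}"
    # Match non-comment lines whose third field (filesystem type) is "swap"
    # and prefix them with '#'. Requires GNU sed for -i without a suffix.
    sed -E -i 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}

# Example (run against a copy before touching the real file):
#   cp /etc/fstab /tmp/fstab.test && disable_swap_in_fstab /tmp/fstab.test
```

Running it twice is harmless, because lines that already start with `#` no longer match.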

5. Deploying the etcd cluster

5.1 Installing the cfssl tools

[root@k8s-master-01 ~]# curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

[root@k8s-master-01 ~]# curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

[root@k8s-master-01 ~]# curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

[root@k8s-master-01 ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

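Perform the following steps on any one node (k8s-master-01 in this guide).

5.2 Generating the etcd certificates

[root@k8s-master-01 ~]# mkdir /usr/local/kubernetes/{k8s-cert,etcd-cert} -p

[root@k8s-master-01 ~]# cd /usr/local/kubernetes/etcd-cert/

5.2.1 Create the JSON configuration file used to generate the CA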

[root@k8s-master-01 etcd-cert]# vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

5.2.2 Create the JSON configuration file for the CA certificate signing request (CSR)

[root@k8s-master-01 etcd-cert]# vim ca-csr.json

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

5.2.3 Generate the CA certificate (ca.pem) and key (ca-key.pem)

[root@k8s-master-01 etcd-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2019/10/07 18:14:49 [INFO] generating a new CA key and certificate from CSR

2019/10/07 18:14:49 [INFO] generate received request

2019/10/07 18:14:49 [INFO] received CSR

2019/10/07 18:14:49 [INFO] generating key: rsa-2048

2019/10/07 18:14:50 [INFO] encoded CSR

2019/10/07 18:14:50 [INFO] signed certificate with serial number 241296075377614671289689316194561193913636321821

[root@k8s-master-01 etcd-cert]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

5.2.4 Create the etcd certificate signing request

[root@k8s-master-01 etcd-cert]# vim server-csr.json

{

"CN": "etcd",

"hosts": [

"192.168.2.10",

"192.168.2.11",

"192.168.2.12"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

5.2.5 Generate the etcd certificate and private key

[root@k8s-master-01 etcd-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

2019/10/07 18:18:00 [INFO] generate received request

2019/10/07 18:18:00 [INFO] received CSR

2019/10/07 18:18:00 [INFO] generating key: rsa-2048

2019/10/07 18:18:00 [INFO] encoded CSR

2019/10/07 18:18:00 [INFO] signed certificate with serial number 344196956354486691280839684095587396673015955849

2019/10/07 18:18:00 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master-01 etcd-cert]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem server.csr server-csr.json server-key.pem server.pem

5.3 Deploying etcd

5.3.1 etcd binary package

Download: https://github.com/coreos/etcd/releases

[root@k8s-master-01 etcd-cert]# cd ..

[root@k8s-master-01 kubernetes]# mkdir soft

[root@k8s-master-01 kubernetes]# cd soft/

[root@k8s-master-01 soft]# tar xf etcd-v3.4.1-linux-amd64.tar.gz

[root@k8s-master-01 soft]# mkdir /opt/etcd/{bin,cfg,ssl} -p

[root@k8s-master-01 soft]# mv etcd-v3.4.1-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

5.3.2 Create the etcd configuration file

[root@k8s-master-01 soft]# vim /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.2.10:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.2.10:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.2.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.2.10:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.2.10:2380,etcd-2=https://192.168.2.11:2380,etcd-3=https://192.168.2.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

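Notes:

ETCD_NAME: member name

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the rest of the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; new creates a new cluster, existing joins an existing one

5.3.3 Create the etcd systemd service

[root@k8s-master-01 soft]# vim /usr/lib/systemd/system/etcd.service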

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd \
--name=${ETCD_NAME} \
--data-dir=${ETCD_DATA_DIR} \
--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

5.3.4 Copy the certificates

[root@k8s-master-01 soft]# cp ../etcd-cert/{ca,server-key,server}.pem /opt/etcd/ssl/

5.3.5 Copy the files to the other nodes and adjust the configuration

[root@k8s-master-01 soft]# scp -r /opt/etcd/ root@192.168.2.11:/opt/

[root@k8s-master-01 soft]# scp -r /opt/etcd/ root@192.168.2.12:/opt/

[root@k8s-master-01 soft]# scp /usr/lib/systemd/system/etcd.service root@192.168.2.11:/usr/lib/systemd/system

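[root@k8s-master-01 soft]# scp /usr/lib/systemd/system/etcd.service root@192.168.2.12:/usr/lib/systemd/system

[root@k8s-node-01 ~]# vim /opt/etcd/cfg/etcd.conf

On each node, set ETCD_NAME to that node's member name (etcd-2, etcd-3) and replace the IP address in the listen and advertise URLs with the node's own IP. ETCD_INITIAL_CLUSTER stays the same on every node.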
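The per-node edit above only changes the member name and the node's own IP. A minimal sketch of rendering the file instead of editing it by hand follows; the function name `render_etcd_conf` is hypothetical, and the member list is the one used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: print an etcd.conf for one member.
# Arguments: member name, member IP.
render_etcd_conf() {
    name="$1"
    ip="$2"
    cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.2.10:2380,etcd-2=https://192.168.2.11:2380,etcd-3=https://192.168.2.12:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example: print the file for the second member.
# On that node, redirect the output to /opt/etcd/cfg/etcd.conf.
render_etcd_conf etcd-2 192.168.2.11
```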

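5.3.6 Start and verify

Start etcd on every node and enable it at boot. The first member waits for a second member before it reports ready, so start the nodes close together:

systemctl daemon-reload

systemctl start etcd.service

systemctl enable etcd.service

Check the cluster health. cluster-health and the --ca-file, --cert-file and --key-file flags belong to the etcdctl v2 API, which etcdctl 3.4 only exposes when ETCDCTL_API=2 is set:

[root@k8s-master-01 bin]# ETCDCTL_API=2 /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.10:2379,https://192.168.2.11:2379,https://192.168.2.12:2379" cluster-health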

member 272dad16f2666b47 is healthy: got healthy result from https://192.168.2.11:2379

member 2ab38a249cac7a26 is healthy: got healthy result from https://192.168.2.12:2379

member e99d560084d446c8 is healthy: got healthy result from https://192.168.2.10:2379

cluster is healthy

If the output looks like the above, etcd has been deployed correctly.
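The same health check can also be expressed against the etcdctl v3 API. The sketch below builds the endpoint list from the node IPs and shows the v3 form of the command in a comment; the helper name `etcd_endpoints` is hypothetical, while the certificate paths are the ones used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: join node IPs into a comma-separated list of
# https client endpoints on port 2379.
etcd_endpoints() {
    out=""
    for ip in "$@"; do
        out="${out:+${out},}https://${ip}:2379"
    done
    printf '%s\n' "$out"
}

# v3 API health check (run on a node that has the etcd certificates):
#   ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
#     --cacert=/opt/etcd/ssl/ca.pem \
#     --cert=/opt/etcd/ssl/server.pem \
#     --key=/opt/etcd/ssl/server-key.pem \
#     --endpoints="$(etcd_endpoints 192.168.2.10 192.168.2.11 192.168.2.12)" \
#     endpoint health
```

Note that the v3 API uses --cacert, --cert and --key rather than the v2 flag names.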

6. Deploying the Master components

6.1 Generating the certificates

6.1.1 Create the JSON configuration file used to generate the CA

[root@k8s-master-01 k8s-cert]# cd /usr/local/kubernetes/k8s-cert/

[root@k8s-master-01 k8s-cert]# vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

6.1.2 Create the JSON configuration file for the CA certificate signing request (CSR)

[root@k8s-master-01 k8s-cert]# vim ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

6.1.3 Generate the CA certificate (ca.pem) and key (ca-key.pem)

[root@k8s-master-01 k8s-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2019/10/07 19:40:46 [INFO] generating a new CA key and certificate from CSR

2019/10/07 19:40:46 [INFO] generate received request

2019/10/07 19:40:46 [INFO] received CSR

2019/10/07 19:40:46 [INFO] generating key: rsa-2048

2019/10/07 19:40:46 [INFO] encoded CSR

2019/10/07 19:40:46 [INFO] signed certificate with serial number 588664830704961805809033488881742877285758664105

[root@k8s-master-01 k8s-cert]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

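6.1.4 Generate the kube-apiserver certificate

List a few spare addresses in the hosts field of the signing request, so that nodes added later do not force you to reissue the certificate.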

[root@k8s-master-01 k8s-cert]# vim server-csr.json

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"192.168.2.10",

"192.168.2.11",

"192.168.2.12",

"192.168.2.13",

"192.168.2.14",

"192.168.2.15"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

[root@k8s-master-01 k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2019/10/07 19:42:13 [INFO] generate received request

2019/10/07 19:42:13 [INFO] received CSR

2019/10/07 19:42:13 [INFO] generating key: rsa-2048

2019/10/07 19:42:13 [INFO] encoded CSR

2019/10/07 19:42:13 [INFO] signed certificate with serial number 662961272942353092215454648475276017019331528744

2019/10/07 19:42:13 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master-01 k8s-cert]# ls server

server.csr server-csr.json server-key.pem server.pem

6.1.5 Generate the kube-proxy certificate

[root@k8s-master-01 k8s-cert]# vim kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

[root@k8s-master-01 k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2019/10/07 19:43:56 [INFO] generate received request

2019/10/07 19:43:56 [INFO] received CSR

2019/10/07 19:43:56 [INFO] generating key: rsa-2048

2019/10/07 19:43:57 [INFO] encoded CSR

2019/10/07 19:43:57 [INFO] signed certificate with serial number 213756085107124414330670553092451400314905460929

2019/10/07 19:43:57 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master-01 k8s-cert]# ls kube-proxy

kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

6.1.6 Generate the admin certificate

[root@master01 k8s-cert]# vim admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

[root@master01 k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

[root@master01 k8s-cert]# ll admin

admin.csr admin-csr.json admin-key.pem admin.pem

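6.1.7 Copy the certificates

[root@k8s-master-01 k8s-cert]# mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

[root@k8s-master-01 k8s-cert]# cp ca.pem ca-key.pem server.pem server-key.pem /opt/kubernetes/ssl/

6.2 Creating the TLS Bootstrapping token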

[root@master01 k8s-cert]# cd /opt/kubernetes/cfg/

[root@master01 cfg]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

c47ffb939f5ca36231d9e3121a252940

[root@master01 cfg]# vim token.csv

c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
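Each record in token.csv has the form token,user,uid,"group". As a minimal sketch, the two steps above (generate a random token, then write the record) could be combined as follows; the function names are hypothetical, and the user, UID and group values are the ones used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: generate a 32-character hex token from 16 random bytes.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Hypothetical helper: print one token.csv record for the given token.
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}

token="$(new_bootstrap_token)"
# Redirect this output to /opt/kubernetes/cfg/token.csv on the master.
token_csv_line "$token"
```

The same token must later be placed in the kubelet's bootstrap.kubeconfig, so keep the generated value.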

6.3 Preparing the binary packages

Download: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.16.md

[root@master01 ~]# cd /usr/local/kubernetes/soft/

[root@master01 soft]# tar xf kubernetes-server-linux-amd64.tar.gz

[root@master01 soft]# mkdir /opt/kubernetes/{bin,cfg,ssl,logs} -p

[root@master01 soft]# cd kubernetes/server/bin/

[root@master01 bin]# cp kube-apiserver kube-scheduler kube-controller-manager kubectl /opt/kubernetes/bin/

6.4 Deploying kube-apiserver

6.4.1 Create the apiserver configuration file

[root@k8s-master-01 bin]# cd /opt/kubernetes/cfg/

[root@k8s-master-01 cfg]# vim kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--etcd-servers=https://192.168.2.10:2379,https://192.168.2.11:2379,https://192.168.2.12:2379 \
--bind-address=192.168.2.10 \
--secure-port=6443 \
--advertise-address=192.168.2.10 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth=true \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-32767 \
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

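Parameter notes:

--logtostderr: log to stderr; set to false here so that logs are written to files under --log-dir

--v: log verbosity level

--etcd-servers: etcd cluster addresses

--bind-address: listen address

--secure-port: HTTPS port

--advertise-address: address advertised to the rest of the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC and Node authorization

--enable-bootstrap-token-auth: enables TLS bootstrap token authentication (covered later)

--token-auth-file: token file

--service-node-port-range: default port range allocated to NodePort Services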
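Two addresses elsewhere in this guide are derived from --service-cluster-ip-range: the apiserver certificate lists 10.0.0.1 because the built-in kubernetes Service takes the first address of the range, and the kubelet configuration uses 10.0.0.2 for clusterDNS. The sketch below computes the n-th address of a range so the three places can be kept consistent. The function name `service_ip` is hypothetical, and the arithmetic is deliberately naive: it only touches the last octet, which is enough for a range such as 10.0.0.0/24.

```shell
#!/bin/sh
# Hypothetical helper: print the n-th address of a service CIDR.
# Only the last octet is incremented, so this assumes the network address
# ends in a value that leaves room (for example x.x.x.0) and a small n.
service_ip() {
    cidr="$1"
    n="$2"
    base="${cidr%/*}"      # strip the prefix length, e.g. 10.0.0.0
    prefix="${base%.*}"    # first three octets, e.g. 10.0.0
    last="${base##*.}"     # last octet, e.g. 0
    echo "${prefix}.$((last + n))"
}

service_ip 10.0.0.0/24 1   # address of the kubernetes Service
service_ip 10.0.0.0/24 2   # address used for clusterDNS in this guide
```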

6.4.2 Manage the apiserver with systemd

[root@k8s-master-01 cfg]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

6.4.3 Start the apiserver

[root@k8s-master-01 cfg]# systemctl daemon-reload

[root@k8s-master-01 cfg]# systemctl enable kube-apiserver

[root@k8s-master-01 cfg]# systemctl restart kube-apiserver

[root@k8s-master-01 cfg]# systemctl status kube-apiserver

6.5 Deploying kube-controller-manager

6.5.1 Create the controller-manager configuration file

[root@k8s-master-01 cfg]# vim kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect=true \
--master=127.0.0.1:8080 \
--address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
--experimental-cluster-signing-duration=87600h0m0s"

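Parameter notes:

--master: connect to the local apiserver

--leader-elect: elect a leader automatically when several instances of this component run (HA)

6.5.2 Manage the controller-manager with systemd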

[root@k8s-master-01 cfg]# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

6.5.3 Start the controller-manager

[root@master01 cfg]# systemctl daemon-reload

[root@master01 cfg]# systemctl enable kube-controller-manager

[root@master01 cfg]# systemctl restart kube-controller-manager

[root@master01 cfg]# systemctl status kube-controller-manager

6.6 Deploying kube-scheduler

6.6.1 Create the kube-scheduler configuration file

[root@k8s-master-01 cfg]# vim kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect \
--master=127.0.0.1:8080 \
--address=127.0.0.1"

6.6.2 Manage kube-scheduler with systemd

[root@master01 cfg]# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

6.6.3 Start kube-scheduler

[root@master01 cfg]# systemctl daemon-reload

[root@master01 cfg]# systemctl enable kube-scheduler

[root@master01 cfg]# systemctl restart kube-scheduler

[root@master01 cfg]# systemctl status kube-scheduler

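6.7 Authorizing kubelet-bootstrap

Once the Master apiserver has TLS authentication enabled, the kubelet on each Node must present a valid certificate signed by the CA before it can talk to the apiserver. Signing certificates by hand becomes tedious when there are many Nodes, which is why the TLS Bootstrapping mechanism exists: the kubelet connects as a low-privilege user and requests a certificate through the apiserver, and the certificate is signed dynamically on the cluster side with the CA files passed to the controller-manager via --cluster-signing-cert-file and --cluster-signing-key-file.

Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role:

[root@k8s-master-01 cfg]# kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap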

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

6.8 Checking the cluster status

[root@master01 cfg]# cp /opt/kubernetes/bin/kubectl /usr/local/bin/

[root@master01 cfg]# kubectl get cs

NAME AGE

scheduler <unknown>

controller-manager <unknown>

etcd-0 <unknown>

etcd-1 <unknown>

etcd-2 <unknown>

7. Deploying the Node components

7.1 Installing Docker

Docker binary packages: https://download.docker.com/linux/static/stable/x86_64/

[root@k8s-node-01 ~]# tar xf docker-18.09.6.tgz

[root@k8s-node-01 ~]# mv docker/* /usr/bin/

[root@k8s-node-01 ~]# mkdir /etc/docker

[root@k8s-node-01 ~]# cd /etc/docker

[root@k8s-node-01 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["http://bc437cce.m.daocloud.io"]

}

[root@k8s-node-01 ~]# vim /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

[root@k8s-node-01 ~]# systemctl daemon-reload

[root@k8s-node-01 ~]# systemctl enable docker.service

[root@k8s-node-01 ~]# systemctl start docker.service

[root@k8s-node-01 ~]# docker version

Client: Docker Engine - Community

Version: 18.09.6

API version: 1.39

Go version: go1.10.8

Git commit: 481bc77

Built: Sat May 4 02:33:34 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 18.09.6

API version: 1.39 (minimum version 1.12)

Go version: go1.10.8

Git commit: 481bc77

Built: Sat May 4 02:41:08 2019

OS/Arch: linux/amd64

Experimental: false

7.2 Preparing the binary packages

Download: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.16.md

[root@k8s-node-01 ~]# cd /usr/local/kubernetes/soft/

[root@k8s-node-01 soft]# tar xf kubernetes-node-linux-amd64.tar.gz

[root@k8s-node-01 soft]# cd kubernetes/node/bin/

[root@k8s-node-01 soft]# mkdir /opt/kubernetes/{bin,cfg,ssl,logs} -p

[root@k8s-node-01 bin]# cp kubelet kube-proxy /opt/kubernetes/bin/

7.3 Copying the certificates to the Node

[root@k8s-master-01 k8s-cert]# scp ca.pem kube-proxy.pem kube-proxy-key.pem root@192.168.2.11:/opt/kubernetes/ssl/

7.4 Deploying the kubelet

7.4.1 Create the kubelet configuration file

[root@k8s-node-01 opt]# vim /opt/kubernetes/cfg/kubelet.conf

KUBELET_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--hostname-override=k8s-node-01 \
--network-plugin=cni \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet-config.yml \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=mirrorgooglecontainers/pause-amd64:3.0"

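Parameter notes:

--hostname-override: node name shown when the node registers with Kubernetes

--network-plugin: enables the network plugin

--bootstrap-kubeconfig: path to the bootstrap.kubeconfig file (created in 7.4.2)

--cert-dir: directory where issued certificates are stored

--pod-infra-container-image: image of the pause container that holds each Pod's network namespace

7.4.2 Create the bootstrap.kubeconfig file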

[root@k8s-node-01 opt]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://192.168.2.10:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: c47ffb939f5ca36231d9e3121a252940
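Instead of typing the file by hand, an equivalent bootstrap.kubeconfig can be produced with the kubectl config subcommands. The sketch below wraps them in a function; the function name and the KUBECTL variable are hypothetical conveniences, and the cluster, context and user names are the ones used in the file above. It assumes kubectl is available on the machine where it runs (in this guide kubectl is installed on the master, so the resulting file would then be copied to the node).

```shell
#!/bin/sh
# Path or name of the kubectl binary; overridable for dry runs.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: write a bootstrap kubeconfig.
# Arguments: apiserver URL, bootstrap token, output file.
write_bootstrap_kubeconfig() {
    server="$1"
    token="$2"
    out="$3"
    # Cluster entry: CA file and apiserver address.
    "$KUBECTL" config set-cluster kubernetes \
        --certificate-authority=/opt/kubernetes/ssl/ca.pem \
        --server="$server" \
        --kubeconfig="$out"
    # User entry: the token from token.csv.
    "$KUBECTL" config set-credentials kubelet-bootstrap \
        --token="$token" \
        --kubeconfig="$out"
    # Context tying the cluster and the user together.
    "$KUBECTL" config set-context default \
        --cluster=kubernetes \
        --user=kubelet-bootstrap \
        --kubeconfig="$out"
    "$KUBECTL" config use-context default --kubeconfig="$out"
}

# Example:
#   write_bootstrap_kubeconfig https://192.168.2.10:6443 \
#       c47ffb939f5ca36231d9e3121a252940 bootstrap.kubeconfig
```

Setting KUBECTL=echo prints the commands without running them, which is a cheap way to review what would be written.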

7.4.3 Create the kubelet-config.yml file

[root@k8s-node-01 opt]# vim /opt/kubernetes/cfg/kubelet-config.yml

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

7.4.4 Create the kubelet.kubeconfig file

[root@k8s-node-01 cfg]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://192.168.2.10:6443
  name: default-cluster
contexts:
- context:
    cluster: default-cluster
    namespace: default
    user: default-auth
  name: default-context
current-context: default-context
kind: Config
preferences: {}
users:
- name: default-auth
  user:
    client-certificate: /opt/kubernetes/ssl/kubelet-client-current.pem
    client-key: /opt/kubernetes/ssl/kubelet-client-current.pem

7.4.5 Manage the kubelet with systemd

[root@k8s-node-01 ~]# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

7.4.6 Start the service

[root@k8s-node-01 ~]# systemctl daemon-reload

[root@k8s-node-01 ~]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@k8s-node-01 ~]# systemctl start kubelet.service

7.4.7 Approve the Node's certificate request

[root@k8s-master-01 ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-xjZUw-J4jvu4ht0mtt05-lP3hYcQQHt-DEKkm-gRg4Y 80s kubelet-bootstrap Pending

[root@k8s-master-01 ~]# kubectl certificate approve node-csr-xjZUw-J4jvu4ht0mtt05-lP3hYcQQHt-DEKkm-gRg4Y

certificatesigningrequest.certificates.k8s.io/node-csr-xjZUw-J4jvu4ht0mtt05-lP3hYcQQHt-DEKkm-gRg4Y approved

[root@k8s-master-01 ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-xjZUw-J4jvu4ht0mtt05-lP3hYcQQHt-DEKkm-gRg4Y 94s kubelet-bootstrap Approved,Issued

[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node-01 NotReady <none> 7s v1.16.0

[root@k8s-node-01 kubernetes]# ll /opt/kubernetes/ssl/

total 24

-rw-r--r-- 1 root root 1359 Oct 7 23:15 ca.pem

-rw------- 1 root root 1273 Oct 7 23:23 kubelet-client-2019-10-07-23-23-29.pem

lrwxrwxrwx 1 root root 58 Oct 7 23:23 kubelet-client-current.pem -> /opt/kubernetes/ssl/kubelet-client-2019-10-07-23-23-29.pem

-rw-r--r-- 1 root root 2185 Oct 7 23:22 kubelet.crt

-rw------- 1 root root 1675 Oct 7 23:22 kubelet.key

-rw------- 1 root root 1679 Oct 7 23:15 kube-proxy-key.pem

-rw-r--r-- 1 root root 1403 Oct 7 23:15 kube-proxy.pem
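With several nodes joining at once, copying each CSR name by hand gets tedious. The sketch below filters the tabular output of kubectl get csr for requests that are still Pending; the function name `pending_csr_names` is hypothetical, and it assumes the column layout shown above, where CONDITION is the last column.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of requests whose last column (CONDITION) is exactly "Pending".
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example (on the master; xargs -r is a GNU extension that skips the
# command when there is no input):
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```

Approving in bulk skips the manual review of each request, so it is only appropriate when every pending request is known to come from a node you just started.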

7.5 Deploying kube-proxy

7.5.1 Create the kube-proxy configuration file

[root@k8s-node-01 opt]# vim /opt/kubernetes/cfg/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

7.5.2 Create the kube-proxy.kubeconfig file

[root@k8s-node-01 opt]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://192.168.2.10:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-proxy
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-proxy
  user:
    client-certificate: /opt/kubernetes/ssl/kube-proxy.pem
    client-key: /opt/kubernetes/ssl/kube-proxy-key.pem

7.5.3 Create the kube-proxy-config.yml file

[root@k8s-node-01 opt]# vim /opt/kubernetes/cfg/kube-proxy-config.yml

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
address: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-node-01
clusterCIDR: 10.244.0.0/16
mode: ipvs
ipvs:
  scheduler: "rr"
iptables:
  masqueradeAll: true
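The ipvs mode configured above depends on the IPVS kernel modules being loaded on the node; when they are missing, kube-proxy falls back to iptables mode. As a rough sketch, the check below reads lsmod output and reports which of the core IPVS modules are absent. The function name is hypothetical, and the module list is an assumption covering only ip_vs and three common schedulers; the connection-tracking module is left out because its name differs between kernel versions.

```shell
#!/bin/sh
# Hypothetical helper: read `lsmod` output on stdin and print the IPVS
# modules from the list below that are not loaded.
missing_ipvs_modules() {
    # First column of lsmod is the module name; skip the header line.
    loaded="$(awk 'NR > 1 { print $1 }')"
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
        printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
    done
}

# Example (on a node):
#   lsmod | missing_ipvs_modules
# Load anything it reports with modprobe, for instance: modprobe ip_vs_rr
```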

7.5.4 Manage kube-proxy with systemd

[root@k8s-node-01 ~]# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

7.5.5 Start the service

[root@k8s-node-01 kubernetes]# systemctl daemon-reload

[root@k8s-node-01 kubernetes]# systemctl enable kube-proxy.service

[root@k8s-node-01 kubernetes]# systemctl start kube-proxy.service

[root@k8s-node-01 kubernetes]# systemctl status kube-proxy.service

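7.6 Deploying the other node

The steps are omitted here: repeat 7.1 to 7.5 on k8s-node-02, using that node's own hostname.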
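One way to shorten the repetition, sketched here under assumptions, is to copy /opt/kubernetes and the unit files from k8s-node-01 and then rewrite the node name in the copied configuration. The function name `retarget_node_config` is hypothetical. The sketch also assumes that the kubelet certificates issued to the first node (kubelet.kubeconfig and the kubelet* files under ssl/) are deleted on the new node before the kubelet is started, so that it bootstraps its own identity.

```shell
#!/bin/sh
# Hypothetical helper: replace the old node name with the new one in the
# given files. Arguments: old name, new name, then one or more files.
# Requires GNU sed for in-place editing without a backup suffix.
retarget_node_config() {
    old="$1"
    new="$2"
    shift 2
    sed -i "s/${old}/${new}/g" "$@"
}

# Example (on k8s-node-02, after copying the files from k8s-node-01):
#   rm -f /opt/kubernetes/cfg/kubelet.kubeconfig /opt/kubernetes/ssl/kubelet*
#   retarget_node_config k8s-node-01 k8s-node-02 \
#       /opt/kubernetes/cfg/kubelet.conf \
#       /opt/kubernetes/cfg/kube-proxy-config.yml
```

After that, start kubelet and kube-proxy on the new node and approve its certificate request on the master as in 7.4.7.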

7.7 Deploying the CNI network plugin

For background, see: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

7.7.1 Prepare the binary package

Download: https://github.com/containernetworking/plugins/releases

[root@k8s-node-01 ~]# mkdir /opt/cni/bin -p

[root@k8s-node-01 ~]# mkdir /etc/cni/net.d -p

[root@k8s-node-01 soft]# tar xf cni-plugins-linux-amd64-v0.8.2.tgz -C /opt/cni/bin/

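7.7.2 Deploy the cluster network

Download the YAML file from https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Make sure the images referenced in the YAML file can be pulled, and that the Network value in net-conf.json matches the Pod network planned for the cluster (--cluster-cidr, 10.244.0.0/16 in this guide).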
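The second check can be automated. The sketch below extracts the Network value from kube-flannel.yml and the --cluster-cidr value from the controller-manager configuration and compares them. All three function names are hypothetical, and the patterns assume the file layouts shown in this guide.

```shell
#!/bin/sh
# Hypothetical helper: print the "Network" value from a flannel manifest.
flannel_network() {
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: print the --cluster-cidr value from a
# kube-controller-manager options file.
controller_cluster_cidr() {
    sed -n 's/.*--cluster-cidr=\([^[:space:]"\\]*\).*/\1/p' "$1" | head -n 1
}

# Compare the two values; returns non-zero when they differ or are missing.
check_pod_cidr() {
    net="$(flannel_network "$1")"
    cidr="$(controller_cluster_cidr "$2")"
    if [ -n "$net" ] && [ "$net" = "$cidr" ]; then
        echo "ok: $net"
    else
        echo "mismatch: flannel=$net controller-manager=$cidr"
        return 1
    fi
}

# Example (on the master):
#   check_pod_cidr kube-flannel.yml /opt/kubernetes/cfg/kube-controller-manager.conf
```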

[root@k8s-master-01 ~]# cat kube-flannel.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unsed in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

[root@k8s-master-01 ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[root@k8s-master-01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-amd64-6z2f8 1/1 Running 0 32s

kube-flannel-ds-amd64-qwb9h 1/1 Running 0 32s

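Create a cluster role binding that grants the kubernetes user the system:kubelet-api-admin role, which is needed to view pod logs: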

[root@k8s-master-01 ~]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

[root@k8s-master-01 ~]# kubectl -n kube-system logs -f kube-flannel-ds-amd64-6z2f8

7.7.3. Create a test pod and check that it runs

[root@k8s-master-01 ~]# kubectl create deployment test-nginx --image=nginx

deployment.apps/test-nginx created

[root@k8s-master-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test-nginx-7d97ffc85d-gzxrl 0/1 ContainerCreating 0 3s

[root@k8s-master-01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nginx-7d97ffc85d-gzxrl 1/1 Running 0 48s 10.244.1.2 k8s-node-02 <none> <none>

# Check that the cni0 interface has been created on the node running the pod

[root@k8s-node-02 ~]# ifconfig cni0

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.1.1 netmask 255.255.255.0 broadcast 10.244.1.255

inet6 fe80::5c7c:daff:fe3f:a6bd prefixlen 64 scopeid 0x20<link>

ether 5e:7c:da:3f:a6:bd txqueuelen 1000 (Ethernet)

RX packets 1 bytes 28 (28.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8 bytes 656 (656.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
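The pod IP reported by kubectl (10.244.1.2) should sit in the same subnet as the cni0 bridge on its node (10.244.1.1, netmask 255.255.255.0). A minimal sketch of that comparison, assuming the /24 netmask shown above; the helper name same_slash24 is hypothetical and not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if two IPv4 addresses share the same /24 network.
# Assumes the 255.255.255.0 netmask shown in the ifconfig output above.
same_slash24() {
  local a="${1%.*}"   # drop the last octet, e.g. 10.244.1.2 -> 10.244.1
  local b="${2%.*}"
  [ "$a" = "$b" ]
}

if same_slash24 10.244.1.2 10.244.1.1; then
  echo "pod IP is on the cni0 subnet"
else
  echo "pod IP is NOT on the cni0 subnet"
fi
```

If the two addresses do not share a prefix, the pod was probably wired up by a different network configuration than the one flannel wrote on that node.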

# Expose the service

[root@k8s-master-01 ~]# kubectl expose deployment test-nginx --port=80 --type=NodePort

service/test-nginx exposed

[root@k8s-master-01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 15h

test-nginx NodePort 10.0.0.91 <none> 80:32089/TCP 26s

# Check that the service is reachable at nodeip:32089 (nodeip is the IP of any node)
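The node port is the number after the colon in the PORT(S) column (80:32089/TCP). A small sketch of pulling it out of that string and building the test URL; the helper name node_port is hypothetical, and 192.168.2.11 is k8s-node-01 from the server plan:

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the node port from a PORT(S) value such as "80:32089/TCP".
node_port() {
  local ports="$1"
  local after_colon="${ports#*:}"   # "32089/TCP"
  echo "${after_colon%%/*}"         # "32089"
}

port="$(node_port "80:32089/TCP")"
# Print the command to run from any machine that can reach the node.
echo "curl http://192.168.2.11:${port}"
```

On a live cluster the same value can be read directly with kubectl get svc test-nginx -o jsonpath='{.spec.ports[0].nodePort}'.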

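7.8. Authorize the apiserver to access the kubelet

For security, the kubelet rejects anonymous requests, so the apiserver must be explicitly authorized before it can call the kubelet API.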

[root@k8s-master-01 ~]# vim apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

[root@k8s-master-01 ~]# kubectl apply -f apiserver-to-kubelet-rbac.yaml

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

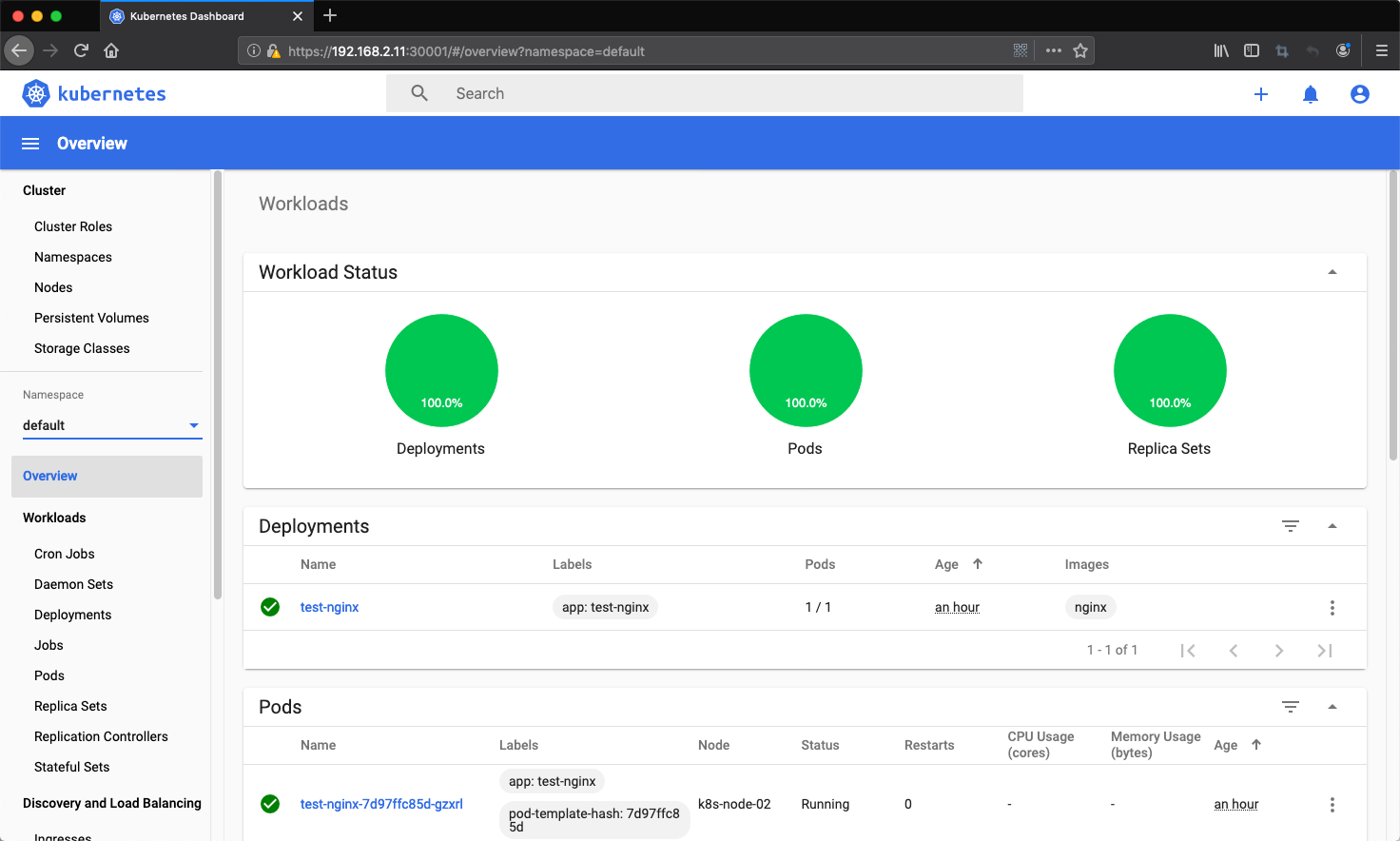

8. Deploy the Web UI (Dashboard)

8.1. Deploy the dashboard

Repository: https://github.com/kubernetes/dashboard

Documentation: https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/

Deploy v2.0.0-beta4, the latest version at the time of writing. Download the YAML:

[root@k8s-master-01 ~]# wget -c https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml

# Change the Service type to NodePort

[root@k8s-master-01 ~]# vim recommended.yaml

...

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard

...

[root@k8s-master-01 ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master-01 ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-566cddb686-trqhd 1/1 Running 0 70s

kubernetes-dashboard-7b5bf5d559-8bzbh 1/1 Running 0 70s

[root@k8s-master-01 ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.0.0.199 <none> 8000/TCP 81s

kubernetes-dashboard NodePort 10.0.0.107 <none> 443:30001/TCP 81s

# Check that the dashboard is reachable at https://nodeip:30001 (nodeip is the IP of any node)

8.2. Create a service account and bind it to the default cluster-admin cluster role

[root@k8s-master-01 ~]# vim dashboard-adminuser.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

[root@k8s-master-01 ~]# kubectl apply -f dashboard-adminuser.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

# Get the token

[root@k8s-master-01 ~]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-24qrg

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 4efbdf22-ef2b-485f-b177-28c085c71dc9

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlRMSFVUZGxOaU1zODRVdVptTkVnOV9wTEUzaGhZZDJVaVNzTndrZU9zUmsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTI0cXJnIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI0ZWZiZGYyMi1lZjJiLTQ4NWYtYjE3Ny0yOGMwODVjNzFkYzkiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.fM9b4zU45ZI1SsWhg9_v3z3-C6Y173Y3d1xUwp9GdFyTN3FSKfxwBMQbo2lC1mDick13dnqnnHoLzE55zTamGmJdWK_9HUbEV3qDgtCNYISujiQazfbAmv-ctWPU7RoWqiJ5MztK5K4g-mFTEJWNrb0KQjs1tCVvC3S3Es2VkCHztBoM0JM3WYrW0lyruQmDf5KeXn3mw_TBlAKd4A-EUYZJ27-eKLbRyGRjbvI4I93DaOlidXbXkRq3dJXKOKjveVUaleY20cog4_hAkiQWIiUFImrHhv23h9AfCO5z7JhgWXNWMKL9aZSf1PXuDutAymdyNjIKqlHwE4tc4EVS1Q
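The token is a JSON Web Token: three base64url segments separated by dots, where the second segment is a JSON payload that names the service account. A minimal sketch for inspecting that payload, assuming GNU coreutils (base64 -d); the helper name jwt_payload is hypothetical, and the sample token is made up rather than a real credential:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
jwt_payload() {
  local seg
  # Take the second dot-separated field and map base64url characters to base64.
  seg="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # base64url omits padding; restore it so that base64 -d accepts the input.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}

# Tiny made-up token whose payload is {"sub":"x"}; not a real credential.
jwt_payload 'h.eyJzdWIiOiJ4In0.s'
echo
```

Passing the real token to jwt_payload should show the namespace and service account name, which is a quick way to confirm that the right secret was copied before pasting it into the login page.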

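8.3. Log in to the dashboard with the token

9. Deploy the in-cluster DNS service (CoreDNS)

The DNS service watches the Kubernetes API and creates a DNS record for every Service so that it can be resolved by name.

A record format for a ClusterIP Service: <service>.<namespace>.svc.<cluster-domain>

Example: my-svc.my-namespace.svc.cluster.local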
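The record name is just the Service name, the namespace, the literal svc, and the cluster domain joined by dots. A small sketch of composing it, assuming the cluster.local domain used in this deployment; the helper name svc_fqdn is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the DNS name of a Service.
# Arguments: service name, namespace, cluster domain (defaults to cluster.local).
svc_fqdn() {
  local svc="$1" ns="$2" domain="${3:-cluster.local}"
  echo "${svc}.${ns}.svc.${domain}"
}

svc_fqdn my-svc my-namespace
svc_fqdn kubernetes default
```

The second call yields the name of the built-in kubernetes Service in the default namespace, which is a convenient target for a first nslookup once CoreDNS is running.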

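To deploy CoreDNS, take the official YAML and change the image address, the cluster domain (cluster.local), and the ClusterIP of the DNS Service (10.0.0.2 here, which must match the cluster DNS address configured for the kubelet):

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns/coredns

9.1. Deploy CoreDNS

Download the YAML and modify the configuration: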

[root@k8s-master-01 ~]# cat coredns.yaml

# Warning: This is a file generated from the base underscore template file: coredns.yaml.base
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      serviceAccountName: coredns
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      containers:
      - name: coredns
        image: lizhenliang/coredns:1.2.2
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

[root@k8s-master-01 ~]# kubectl apply -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

[root@k8s-master-01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d8cfdd59d-czx9w 1/1 Running 0 7s

kube-flannel-ds-amd64-6z2f8 1/1 Running 0 120m

kube-flannel-ds-amd64-qwb9h 1/1 Running 0 120m

9.2. Test that DNS works

[root@k8s-master-01 ~]# vim bs.yaml

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
    - image: busybox:1.28.4
      command:
        - sleep
        - "3600"
      imagePullPolicy: IfNotPresent
      name: busybox
  restartPolicy: Always

[root@k8s-master-01 ~]# kubectl apply -f bs.yaml

pod/busybox created

[root@k8s-master-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 0 16s

[root@k8s-master-01 YAML]# kubectl exec -it busybox -- sh

/ # nslookup kubernetes

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

/ # nslookup kubernetes.default.svc.cluster.local

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default.svc.cluster.local

Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

/ # nslookup www.baidu.com

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: www.baidu.com

Address 1: 220.181.38.150

Address 2: 220.181.38.149

With that, the binary deployment of a Kubernetes 1.16.0 cluster (the latest release at the time of writing) is complete.