Introduction

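Dask essentially consists of two parts: dynamic task scheduling together with cluster management, and high-level collection APIs such as DataFrame. Taken together it is roughly comparable to Spark plus pandas. Dask implements distributed scheduling internally, so users do not have to write complex scheduling logic themselves, and distributed computation is available through simple APIs. It also supports parallel processing for some models (e.g., distributed algorithms such as XGBoost, LR (logistic regression), and scikit-learn). Dask focuses on data science; its DataFrame API is very close to pandas but not fully compatible with it.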
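To illustrate the "simple API, the scheduler does the rest" point, here is a minimal sketch using `dask.delayed`: each decorated call only records a task, and `compute()` hands the resulting task graph to a scheduler (by default a local thread pool). The functions `load`, `summarize` and `combine` are hypothetical placeholders, not part of Dask.

```python
from dask import delayed


@delayed
def load(i: int) -> list:
    # Hypothetical stand-in for reading one chunk of data
    return list(range(i * 10, (i + 1) * 10))


@delayed
def summarize(chunk: list) -> int:
    # Per-chunk work; independent chunks can run in parallel
    return sum(chunk)


@delayed
def combine(partials: list) -> int:
    # Reduce step that depends on every per-chunk result
    return sum(partials)


# Building the graph: nothing is executed yet
parts = [summarize(load(i)) for i in range(4)]
total = combine(parts)

# compute() submits the task graph to the default (threaded) scheduler
result = total.compute()
print(result)  # 780
```

Once a `dask.distributed` `Client` connected to a cluster exists in the session, the same `compute()` call runs on that cluster's workers instead of the local thread pool.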

Cluster setup:

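A Dask cluster involves three roles: client, scheduler, and worker.

- client: the user-side entry point for interacting with the cluster

- scheduler: the master node and the cluster's registry; it manages the tasks submitted by clients and dispatches them to worker nodes according to its scheduling strategies

- worker: a compute node managed by the scheduler; it performs the actual data computation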
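The three roles map onto the `dask.distributed` Python API roughly as sketched below. This sketch uses an in-process `LocalCluster` as a stand-in for a real scheduler plus workers so that it runs on a single machine; against the cluster built in the following steps you would instead connect with the scheduler address, e.g. `Client("tcp://192.168.1.21:8786")`. The `square` function is only an example.

```python
from dask.distributed import Client, LocalCluster


def square(x: int) -> int:
    return x * x


# LocalCluster starts a scheduler and two workers inside this process
# (processes=False), standing in for the dask-scheduler / dask-worker nodes.
with LocalCluster(n_workers=2, threads_per_worker=1, processes=False) as cluster, \
        Client(cluster) as client:
    # The client submits tasks; the scheduler assigns them to workers.
    futures = client.map(square, range(5))
    # Futures passed as arguments are resolved on the cluster before sum runs.
    total_future = client.submit(sum, futures)
    result = total_future.result()

print(result)  # 30
```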

1. Master node (scheduler):

- scheduler: default port 8786

a. Dependencies: dask, distributed

b. Install: pip install dask distributed

c. Start: dask-scheduler

distributed.scheduler - INFO - -----------------------------------------------

distributed.http.proxy - INFO - To route to workers diagnostics web server please install jupyter-server-proxy: python -m pip install jupyter-server-proxy

distributed.scheduler - INFO - -----------------------------------------------

distributed.scheduler - INFO - Clear task state

distributed.scheduler - INFO - Scheduler at: tcp://192.168.1.21:8786

distributed.scheduler - INFO - dashboard at: :8787

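- web UI: default port 8787

a. When you open the web UI, it prompts you to install a dependency (bokeh)

b. Install: pip install "bokeh>=0.13.0" (quote the requirement so the shell does not treat `>` as an output redirect)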

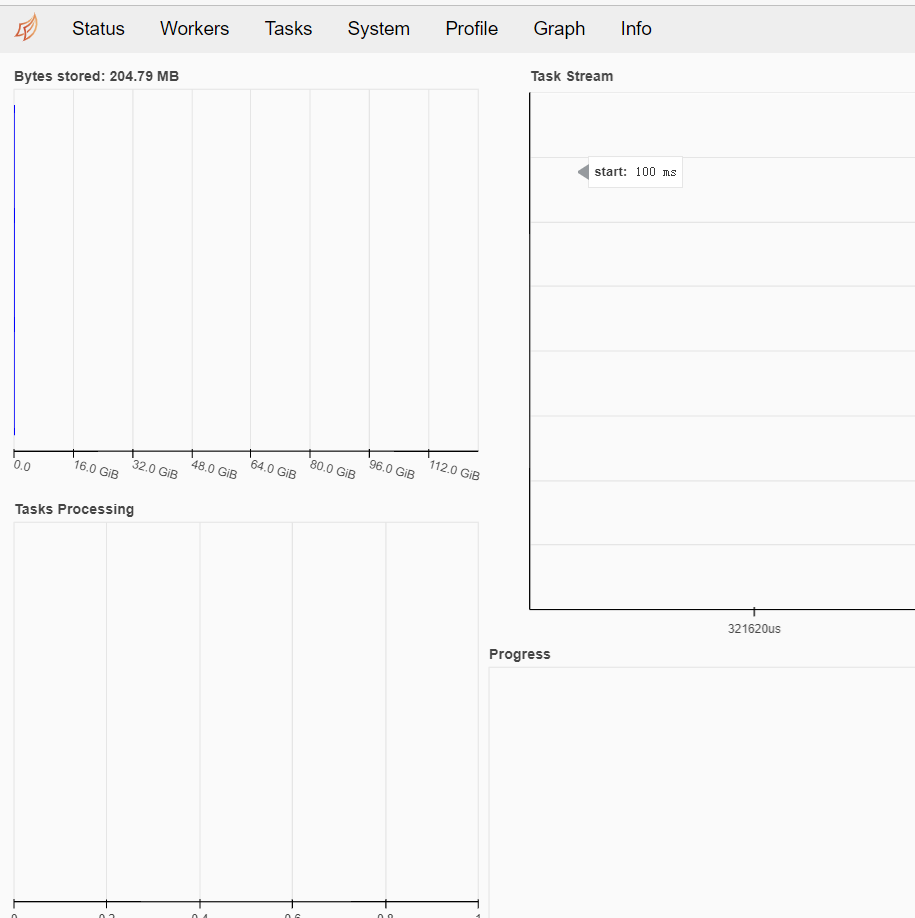

c. 界面效果:
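The dashboard address can also be read from Python. A minimal sketch, again using a local in-process cluster as a stand-in: `importlib.util.find_spec` reports whether bokeh is importable, and `LocalCluster.dashboard_link` gives the dashboard URL. We assume it comes back empty when the dashboard could not start (for example without bokeh); the exact fallback value may differ between versions.

```python
import importlib.util

from dask.distributed import LocalCluster

# bokeh is the dependency the web UI asks for, per the note above
has_bokeh = importlib.util.find_spec("bokeh") is not None

# dashboard_address=":0" asks for any free port instead of the default 8787
with LocalCluster(n_workers=1, processes=False, dashboard_address=":0") as cluster:
    link = cluster.dashboard_link

print("bokeh installed:", has_bokeh)
print("dashboard:", link or "(not available)")
```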

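2. Worker nodes (worker):

a. Dependencies: dask, distributed

b. Install: pip install dask distributed

c. Start: using 192.168.1.22 as an example (192.168.1.23 is set up the same way), point the worker at the scheduler address: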

> dask-worker 192.168.1.21:8786

distributed.nanny - INFO - Start Nanny at: 'tcp://192.168.1.22:36803'

distributed.worker - INFO - Start worker at: tcp://192.168.1.22:37089

distributed.worker - INFO - Listening to: tcp://192.168.1.22:37089

distributed.worker - INFO - dashboard at: 192.168.1.22:36988

distributed.worker - INFO - Waiting to connect to: tcp://192.168.1.21:8786

distributed.worker - INFO - -------------------------------------------------

distributed.worker - INFO - Threads: 24

distributed.worker - INFO - Memory: 33.52 GB

distributed.worker - INFO - Local Directory: /home/binger/dask-server/dask-worker-space/worker-ntrdwzqp

distributed.worker - INFO - -------------------------------------------------

distributed.worker - INFO - Registered to: tcp://192.168.1.21:8786

distributed.worker - INFO - -------------------------------------------------

distributed.core - INFO - Starting established connection

Scheduler log after the worker registers:

distributed.scheduler - INFO - -----------------------------------------------

distributed.scheduler - INFO - -----------------------------------------------

distributed.scheduler - INFO - Clear task state

distributed.scheduler - INFO - Scheduler at: tcp://192.168.1.21:8786

distributed.scheduler - INFO - dashboard at: :8787

distributed.scheduler - INFO - Register worker <Worker 'tcp://192.168.1.22:37089', name: tcp://192.168.1.22:37089, memory: 0, processing: 0>

distributed.scheduler - INFO - Starting worker compute stream, tcp://192.168.1.22:37089

distributed.core - INFO - Starting established connection
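Besides reading the scheduler log, worker registration can be checked from a client. A minimal sketch with a local stand-in cluster: `Client.wait_for_workers` blocks until the given number of workers has joined, and `Client.scheduler_info()["workers"]` maps each worker address to its details. On the real cluster you would connect with `Client("tcp://192.168.1.21:8786")` and expect entries for the workers on 192.168.1.22 and 192.168.1.23.

```python
from dask.distributed import Client, LocalCluster

with LocalCluster(n_workers=2, threads_per_worker=1, processes=False) as cluster, \
        Client(cluster) as client:
    # Block until both workers have registered with the scheduler
    client.wait_for_workers(n_workers=2)
    workers = client.scheduler_info()["workers"]
    for address, info in workers.items():
        # Each entry corresponds to a "Register worker ..." line in the scheduler log
        print(address, "threads:", info.get("nthreads"))
    worker_count = len(workers)

print("registered workers:", worker_count)
```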

3. dask-scheduler fails to start with ValueError: 'default' must be a list when 'multiple' is true.

Traceback (most recent call last):

File "D:\Program Files\Python36\lib\runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "D:\Program Files\Python36\lib\runpy.py", line 85, in _run_code

exec(code, run_globals)

File "E:\workspace\ceshi\venv\Scripts\dask-scheduler.exe\__main__.py", line 4, in <module>

File "e:\workspace\ceshi\venv\lib\site-packages\distributed\cli\dask_scheduler.py", line 122, in <module>

@click.version_option()

File "e:\workspace\ceshi\venv\lib\site-packages\click\decorators.py", line 247, in decorator

_param_memo(f, OptionClass(param_decls, **option_attrs))

File "e:\workspace\ceshi\venv\lib\site-packages\click\core.py", line 2465, in __init__

super().__init__(param_decls, type=type, multiple=multiple, **attrs)

File "e:\workspace\ceshi\venv\lib\site-packages\click\core.py", line 2101, in __init__

) from None

ValueError: 'default' must be a list when 'multiple' is true.

Fix: install a click version below 8.0:

pip install "click>=7,<8"
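To check which click version an environment has before starting `dask-scheduler`, here is a small standard-library sketch. `click_is_compatible` and `installed_click_version` are hypothetical helpers; the first simply encodes the `>=7,<8` range from the pip command above and ignores pre-release suffixes.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def click_is_compatible(ver: str) -> bool:
    """Return True if ver falls in the ">=7,<8" range used in the fix above."""
    major = int(ver.split(".")[0])
    return major == 7


def installed_click_version() -> Optional[str]:
    """Return the installed click version, or None if click is not installed."""
    try:
        return version("click")
    except PackageNotFoundError:
        return None


current = installed_click_version()
if current is None:
    print("click is not installed")
elif click_is_compatible(current):
    print(f"click {current} is within >=7,<8")
else:
    print(f'click {current} is outside >=7,<8; consider: pip install "click>=7,<8"')
```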