Redis Cluster (redis-cluster)

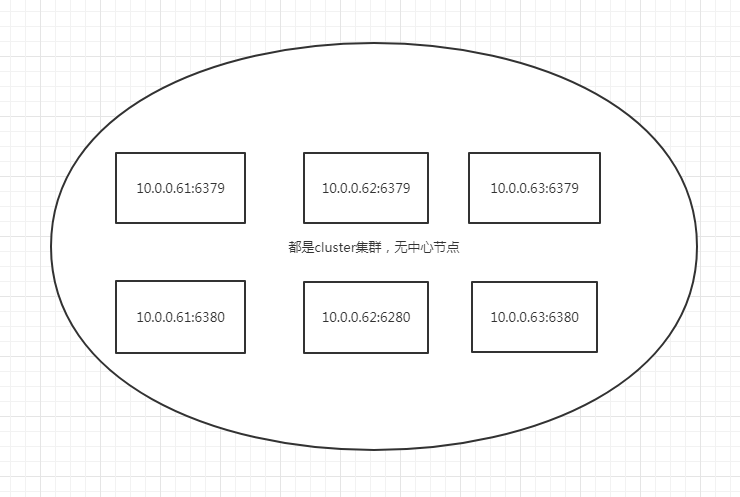

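There are several ways to build a Redis cluster, for example with ZooKeeper, but since version 3.0 Redis supports Redis Cluster natively. Redis Cluster has no central node: every node stores data as well as the state of the whole cluster, and every node is connected to every other node. The architecture is shown in the diagram above.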

Its main characteristics:

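- All Redis nodes are interconnected (PING-PONG mechanism) and use a binary protocol internally to reduce transfer time and bandwidth.

- A node is considered failed only when more than half of the master nodes in the cluster detect that it is unreachable.

- Clients connect directly to Redis nodes; there is no proxy layer in between. A client does not need to connect to every node in the cluster: connecting to any one available node is enough.

- Redis Cluster maps every master node onto part of the slot range [0-16383] (not necessarily evenly), and the cluster maintains the node <-> slot <-> value mapping.

- A Redis cluster has 16384 predefined slots (buckets). To place a key-value pair in the cluster, Redis computes CRC16(key) mod 16384 and stores the key in the slot with that number.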
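The slot formula can be sketched in bash. This is a minimal sketch, assuming single-byte (ASCII) keys; the function name `key_slot` is hypothetical. It uses the CRC16 variant Redis Cluster specifies (CCITT/XMODEM: polynomial 0x1021, initial value 0). It omits the hash-tag rule, under which only the part of a key between `{` and `}` is hashed. On a live cluster, `CLUSTER KEYSLOT <key>` returns the authoritative slot.

```bash
#!/usr/bin/env bash
# key_slot KEY: print CRC16(KEY) mod 16384.
# Assumptions of this sketch: ASCII keys only, no {hash tag} handling.
key_slot() {
  local key=$1 crc=0 byte i j
  for (( i = 0; i < ${#key}; i++ )); do
    # Numeric code of the i-th character (the leading ' makes printf emit it).
    printf -v byte '%d' "'${key:i:1}"
    crc=$(( crc ^ (byte << 8) ))
    # Shift out 8 bits, applying polynomial 0x1021 when the top bit is set.
    for (( j = 0; j < 8; j++ )); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo $(( crc % 16384 ))
}
```

For example, `key_slot foo` prints 12182.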

1.1 Redis Cluster master-replica mode

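- To keep data highly available, Redis Cluster uses a master-replica model in which each master has one or more replicas. The master serves reads and writes, while its replicas copy data from it as backups. When a master goes down, one of its replicas is elected to take over as master, so the cluster keeps working.

- In the example above, the cluster has three masters: A, B and C. Suppose none of them has a replica and B goes down. The whole cluster then becomes unavailable (with the default `cluster-require-full-coverage yes`), and even the slots held by A and C can no longer be accessed.

- That is why every master should get a replica when the cluster is built. For example, with masters A, B, C and replicas A1, B1, C1, the system keeps working correctly even if B fails.

- B1 replicates B, so when B fails the cluster promotes B1 to master and keeps serving requests correctly. When B comes back, it rejoins as a replica of B1.

- Note, however, that if B and B1 fail at the same time, Redis Cluster can no longer serve requests correctly.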
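The A/B/C example can be expressed as a toy bash model. The node names come from the text; `SHARDS` and `can_serve` are hypothetical. The model only checks whether every master's slots still have a live copy. It ignores the fact that promoting a replica also needs a majority of masters to be reachable.

```bash
#!/usr/bin/env bash
# Toy model: each entry is "master replica...", using the labels from the text.
SHARDS=( "A A1" "B B1" "C C1" )

# can_serve DOWN_NODE...: print "ok" if every master's slots still have at
# least one live node (the master or a replica that could be promoted);
# otherwise report the first shard whose slots are lost and return 1.
# Simplification: failover voting by a majority of masters is not modelled.
can_serve() {
  local down=" $* " shard node alive
  for shard in "${SHARDS[@]}"; do
    alive=0
    for node in $shard; do
      if [[ $down != *" $node "* ]]; then
        alive=1
      fi
    done
    if (( alive == 0 )); then
      echo "down: slots of ${shard%% *}"
      return 1
    fi
  done
  echo "ok"
}
```

`can_serve B` prints `ok`, while `can_serve B B1` reports that B's slots are lost. Redefining `SHARDS=( "A" "B" "C" )` models the no-replica case, in which losing B alone is already fatal.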

The deployment used in this guide:

- 10.0.0.61 Redis01

- 10.0.0.62 Redis02

- 10.0.0.63 Redis03

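- Run two Redis instances (ports 6379 and 6380) on each node, and install the Ruby environment on any one node.

- Remember to disable the firewall or open the required ports: the client ports 6379/6380 and the cluster bus ports 16379/16380.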
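Each cluster node needs two ports open: the client port and the cluster bus port. The bus port is the client port plus 10000, and the `netstat` output in the startup step shows both. The sketch below assumes CentOS/RHEL 7 with firewalld. The function names are hypothetical, and by default the script only prints the commands.

```bash
#!/usr/bin/env bash
# cluster_ports PORT...: print the client port and the cluster bus port
# (client port + 10000) for each Redis instance.
cluster_ports() {
  local port
  for port in "$@"; do
    echo "${port}/tcp"
    echo "$((port + 10000))/tcp"
  done
}

# open_ports: open the ports of the two instances on this host.
# Assumption: firewalld is in use. Prints the commands unless APPLY=1.
open_ports() {
  local p
  for p in $(cluster_ports 6379 6380); do
    if [ "${APPLY:-0}" = 1 ]; then
      firewall-cmd --permanent --add-port="$p"
    else
      echo "firewall-cmd --permanent --add-port=$p"
    fi
  done
  if [ "${APPLY:-0}" = 1 ]; then
    firewall-cmd --reload
  else
    echo "firewall-cmd --reload"
  fi
}
```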

1.2 Install Redis on every node

```
[root@redis01 ~]# cd /usr/local/src/
[root@redis01 src]# wget http://download.redis.io/releases/redis-3.2.8.tar.gz
--2017-12-26 18:27:29--  http://download.redis.io/releases/redis-3.2.8.tar.gz
Resolving download.redis.io (download.redis.io)... 109.74.203.151
Connecting to download.redis.io (download.redis.io)|109.74.203.151|:80... connected.
HTTP request sent, awaiting response... 200 OK
Length: 1547237 (1.5M) [application/x-gzip]
Saving to: ‘redis-3.2.8.tar.gz’

100%[==================================================>] 1,547,237   32.3KB/s   in 23s

2017-12-26 18:27:53 (64.7 KB/s) - ‘redis-3.2.8.tar.gz’ saved [1547237/1547237]

[root@redis01 src]# tar xf redis-3.2.8.tar.gz
[root@redis01 src]# cd redis-3.2.8/
[root@redis01 redis-3.2.8]# make
[root@redis01 redis-3.2.8]# make PREFIX=/usr/local/redis_m install
# Single quotes keep $PATH unexpanded, so /etc/profile gets the literal variable
[root@redis01 ~]# echo 'export PATH=/usr/local/redis_m/bin:$PATH' >>/etc/profile
[root@redis01 ~]# source /etc/profile
[root@redis01 ~]# cd /usr/local/redis_m/
[root@redis01 redis_m]# mkdir -p conf
[root@redis01 redis_m]# cp /usr/local/src/redis-3.2.8/redis.conf conf/
```

- Edit redis.conf for redis_m:

```
# bind: this host's own IP (10.0.0.62 / 10.0.0.63 on the other nodes)
bind 10.0.0.61
protected-mode yes
port 6379
tcp-backlog 511
timeout 0
tcp-keepalive 300
cluster-enabled yes
# nodes_6379.conf is generated automatically on first startup
cluster-config-file nodes_6379.conf
# in milliseconds
cluster-node-timeout 15000
daemonize yes
supervised no
pidfile "/var/run/redis_6379.pid"
loglevel notice
logfile ""
databases 16
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error yes
rdbcompression yes
rdbchecksum yes
dbfilename "dump.rdb"
dir "/usr/local/redis_m/conf"
slave-serve-stale-data yes
slave-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5
repl-disable-tcp-nodelay no
slave-priority 100
appendonly no
appendfilename "appendonly.aof"
appendfsync everysec
no-appendfsync-on-rewrite no
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
aof-load-truncated yes
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
latency-monitor-threshold 0
notify-keyspace-events ""
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
hz 10
aof-rewrite-incremental-fsync yes
```

Set up the second Redis instance on the same host:

```
[root@redis01 conf]# cd /usr/local/
[root@redis01 local]# cp -r redis_m redis_s
```

Edit the config file of instance 2 (redis_s/conf/redis.conf). All settings stay the same as in redis_m except these four:

```
port 6380
cluster-config-file nodes_6380.conf
pidfile "/var/run/redis_6380.pid"
dir "/usr/local/redis_s/conf"
```


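Repeat the steps above on every machine, so that each one runs two Redis instances. On 10.0.0.62 and 10.0.0.63, set `bind` to that machine's own IP.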
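Instead of editing the copied file by hand, the four settings that differ between redis_m and redis_s can be rewritten with `sed`. This is a minimal sketch. `derive_conf` is a hypothetical helper, and it assumes each setting starts at the beginning of its line, as in the config above. SRC and DST must be different files.

```bash
#!/usr/bin/env bash
# derive_conf SRC DST PORT DIR [BIND_IP]
# Write DST as a copy of SRC with the per-instance settings rewritten:
# port, cluster-config-file, pidfile and dir. If BIND_IP is given, bind is
# rewritten too (needed when reusing a config on another host).
derive_conf() {
  local src=$1 dst=$2 port=$3 dir=$4 ip=${5:-}
  local args=(
    -e "s|^port .*|port ${port}|"
    -e "s|^cluster-config-file .*|cluster-config-file nodes_${port}.conf|"
    -e "s|^pidfile .*|pidfile \"/var/run/redis_${port}.pid\"|"
    -e "s|^dir .*|dir \"${dir}\"|"
  )
  if [ -n "$ip" ]; then
    args+=( -e "s|^bind .*|bind ${ip}|" )
  fi
  sed "${args[@]}" "$src" > "$dst"
}
```

Run it after the `cp -r` step, for example `derive_conf /usr/local/redis_m/conf/redis.conf /usr/local/redis_s/conf/redis.conf 6380 /usr/local/redis_s/conf`. On the other hosts, pass the host's IP as the fifth argument so that `bind` is rewritten as well.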

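- Once the instances are deployed, install the Ruby environment on any one node. The cluster is created with redis-trib.rb, a Ruby script shipped in the Redis source tree that needs the `redis` gem: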

```
[root@redis01 src]# pwd
/usr/local/src/redis-3.2.8/src
[root@redis01 src]# cp redis-trib.rb /usr/local/bin/
[root@redis01 src]# yum -y install ruby ruby-devel rubygems rpm-build
[root@redis01 src]# gem install redis --version 3.0.0
Fetching: redis-3.0.0.gem (100%)
Successfully installed redis-3.0.0
Parsing documentation for redis-3.0.0
Installing ri documentation for redis-3.0.0
1 gem installed
```

Start Redis (both instances, on every node):

```
[root@redis01 ~]# redis-server /usr/local/redis_m/conf/redis.conf
[root@redis01 ~]# redis-server /usr/local/redis_s/conf/redis.conf
[root@redis01 ~]# netstat -lntup|grep redis
tcp        0      0 10.0.0.61:6379          0.0.0.0:*               LISTEN      7226/redis-server 1
tcp        0      0 10.0.0.61:6380          0.0.0.0:*               LISTEN      7230/redis-server 1
tcp        0      0 10.0.0.61:16379         0.0.0.0:*               LISTEN      7226/redis-server 1
tcp        0      0 10.0.0.61:16380         0.0.0.0:*               LISTEN      7230/redis-server 1
```
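To confirm that both instances opened their client and cluster bus ports, the `netstat` output can be checked with a small function. In this sketch, `missing_ports` is a hypothetical helper that assumes the Linux `netstat -lntup` column layout shown above.

```bash
#!/usr/bin/env bash
# missing_ports PORT...: read `netstat -lntup` output on stdin and print each
# expected port (every client port plus its +10000 cluster bus port) that has
# no LISTEN socket. Prints nothing when everything is up.
missing_ports() {
  local listening port want
  # Column 6 is the state and column 4 is address:port; keep the port part.
  listening=$(awk '$6 == "LISTEN" { n = split($4, a, ":"); print a[n] }')
  for port in "$@"; do
    for want in "$port" "$((port + 10000))"; do
      if ! grep -qx "$want" <<< "$listening"; then
        echo "$want"
      fi
    done
  done
}
```

`netstat -lntup | missing_ports 6379 6380` prints nothing when all four ports are listening.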

2.1 Create the cluster

Create the cluster from any one node. `--replicas 1` gives each master one replica:

```
[root@redis01 ~]# redis-trib.rb create --replicas 1 10.0.0.61:6379 10.0.0.62:6379 10.0.0.63:6379 10.0.0.61:6380 10.0.0.62:6380 10.0.0.63:6380
>>> Creating cluster
## (output omitted)
M: 7876243e1de6e81316ff299d0cbd9f50dee6a6a6 10.0.0.61:6379
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
M: 98b24b670b08b6f87c805d7225738b2119d1c1f3 10.0.0.63:6379
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: 2f8dde5cfb03dd02331abf0560c3ab2e6fcfee0b 10.0.0.63:6380
   slots: (0 slots) slave
   replicates 98b24b670b08b6f87c805d7225738b2119d1c1f3
S: a4212518794b6afacd9dc703a061bcba2ecd9683 10.0.0.62:6380
   slots: (0 slots) slave
   replicates 7876243e1de6e81316ff299d0cbd9f50dee6a6a6
S: ce3105e44480c1c312918b01f6415767adc1e253 10.0.0.61:6380
   slots: (0 slots) slave
   replicates c3d233cd5aa613b271e117c43a8d2a3f7702cdb5
M: c3d233cd5aa613b271e117c43a8d2a3f7702cdb5 10.0.0.62:6379
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```
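The three ranges above (5461, 5462 and 5461 slots) come from splitting 16384 slots as evenly as possible across three masters. The arithmetic can be reproduced in bash. `even_slot_ranges` is a hypothetical helper and is not claimed to be redis-trib.rb's exact code.

```bash
#!/usr/bin/env bash
# even_slot_ranges N: split slots 0..16383 into N contiguous ranges whose
# sizes differ by at most one. Boundary i is i*16384/N rounded to the
# nearest integer, computed with integer arithmetic as (2*i*16384 + N) / (2*N).
even_slot_ranges() {
  local n=$1 i start end
  for (( i = 0; i < n; i++ )); do
    start=$(( (2 * i * 16384 + n) / (2 * n) ))
    end=$(( (2 * (i + 1) * 16384 + n) / (2 * n) - 1 ))
    echo "${start}-${end} ($(( end - start + 1 )) slots)"
  done
}
```

`even_slot_ranges 3` prints the same three ranges that redis-trib.rb assigned above.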

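Connect to the cluster. The `-c` option enables cluster mode, so redis-cli follows the redirection a node returns when a key lives on another node: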

```
[root@redis01 ~]# redis-cli -h 10.0.0.61 -c -p 6379
```
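Which master a redirected command lands on follows from the slot layout in the cluster-creation output. Below is a sketch of that lookup. `SLOT_MAP` and `owner_of` are hypothetical names, and the table is a snapshot that goes stale after a failover or resharding. The slot of a key can be obtained with `CLUSTER KEYSLOT <key>` in redis-cli.

```bash
#!/usr/bin/env bash
# Snapshot of the slot layout from the redis-trib.rb create output:
# "first_slot last_slot master".
SLOT_MAP=(
  "0 5460 10.0.0.61:6379"
  "5461 10922 10.0.0.62:6379"
  "10923 16383 10.0.0.63:6379"
)

# owner_of SLOT: print the master serving SLOT in this snapshot,
# or return 1 if no range covers it.
owner_of() {
  local slot=$1 entry lo hi node
  for entry in "${SLOT_MAP[@]}"; do
    read -r lo hi node <<< "$entry"
    if (( slot >= lo && slot <= hi )); then
      echo "$node"
      return 0
    fi
  done
  return 1
}
```

For example, the key `foo` hashes to slot 12182, and `owner_of 12182` prints `10.0.0.63:6379`.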

Check the cluster:

```
[root@redis01 ~]# redis-trib.rb check 10.0.0.61:6379
```
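In the cluster-creation output, 10.0.0.63:6380 replicates 10.0.0.63:6379. That master and its only replica therefore sit on the same machine, and losing 10.0.0.63 would take down a master and its replica at the same time. The sketch below checks for this by reading the output of `redis-cli -h 10.0.0.61 -p 6379 cluster nodes`. `same_host_replicas` is a hypothetical helper. It assumes the CLUSTER NODES field order: node ID, ip:port (newer Redis versions append `@busport`), flags, master ID.

```bash
#!/usr/bin/env bash
# same_host_replicas: read CLUSTER NODES output on stdin and print every
# replica whose IP matches the IP of the master it replicates.
same_host_replicas() {
  awk '
    {
      addr = $2
      sub(/@.*/, "", addr)          # drop an @busport suffix if present
      ip = addr
      sub(/:[0-9]+$/, "", ip)       # keep only the IP part
      host[$1] = ip
      name[$1] = addr
      if ($3 ~ /slave/) { master[$1] = $4 }
    }
    END {
      for (id in master) {
        if (host[id] == host[master[id]]) {
          print name[id] " replicates " name[master[id]]
        }
      }
    }
  '
}
```

`redis-cli -h 10.0.0.61 -p 6379 cluster nodes | same_host_replicas` prints one line per replica that shares a host with its master.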