Contents

- What is EPOLL

- EPOLL interfaces

- EPOLL mechanism

- Two diagrams

What is EPOLL

Excerpted from the man page:

man:epoll(7) epoll(4)

epoll is a variant of poll(2) that can be used either as an edge-triggered or a level-triggered interface and scales well to large numbers of watched file descriptors.

EPOLL interfaces

epoll_create (or epoll_create1)

epoll_create opens an epoll file descriptor by requesting the kernel to allocate an event backing store dimensioned for size descriptors.

epoll_ctl

epoll_ctl() adds, modifies, or removes the entry for the target file descriptor fd in the interest list of the epoll instance referred to by epfd, as selected by the op argument.

epoll_wait

The epoll_wait() system call waits for events on the epoll file descriptor epfd for a maximum time of timeout milliseconds.
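The three calls above can be tried from user space with a small sketch. It assumes a Linux system; the helper name wait_for_pipe_readable and the use of a pipe as the monitored file are choices made for this illustration, not something taken from the man page.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/epoll.h>
#include <unistd.h>

/*
 * Hypothetical helper (illustration only): registers the read end of a
 * pipe with a new epoll instance, makes it readable, and returns the
 * number of ready descriptors reported by epoll_wait(), or -1 on error.
 */
int wait_for_pipe_readable(void)
{
    int pipefd[2];
    struct epoll_event ev, out;
    int epfd, n = -1;

    if (pipe(pipefd) == -1)
        return -1;

    epfd = epoll_create1(0);                /* create the epoll instance */
    if (epfd == -1)
        goto close_pipe;

    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN;                    /* level-triggered by default */
    ev.data.fd = pipefd[0];
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, pipefd[0], &ev) == -1)
        goto close_epoll;

    if (write(pipefd[1], "x", 1) != 1)      /* make the read end readable */
        goto close_epoll;

    n = epoll_wait(epfd, &out, 1, 1000);    /* wait at most 1000 ms */
    if (n == 1 && (out.data.fd != pipefd[0] || !(out.events & EPOLLIN)))
        n = -1;                             /* not the event we expected */

close_epoll:
    close(epfd);
close_pipe:
    close(pipefd[0]);
    close(pipefd[1]);
    return n;
}
```

Calling wait_for_pipe_readable() should report one ready descriptor, because the byte is written before epoll_wait is entered.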

EPOLL implementation in the Linux kernel

Key data structures:

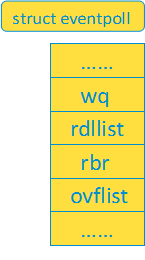

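struct eventpoll

Every epoll file has one struct eventpoll, stored in the private_data field of the epoll file's struct file. Its main members are shown in the figure below:

wait_queue_head_t wq: the wait queue used by sys_epoll_wait

struct list_head rdllist: the list of ready files

struct rb_root rbr: root of the red-black tree that stores the monitored fds

struct epitem *ovflist: a singly linked list; while ready events are being transferred to user space, events that occur in the meantime are chained onto this list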

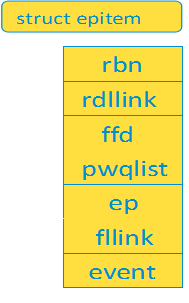

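struct epitem

Every monitored file has a corresponding struct epitem. Its members are shown in the figure below:

struct rb_node rbn: the node that links the item into the eventpoll red-black tree

struct list_head rdllink: links the item into the eventpoll ready list, i.e. rdllist

struct epoll_filefd ffd: information about the monitored file, consisting of *file and fd

struct list_head pwqlist: the list of poll wait queues of this item

struct eventpoll *ep: points to the eventpoll that owns this item

struct list_head fllink: links the item into the f_ep_links list of the monitored (target) file

struct epoll_event event: describes the events of interest and the source fd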

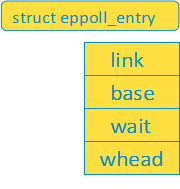

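struct eppoll_entry

struct eppoll_entry is the hook used by the poll function of a socket. Each entry is bound to the struct epitem of one monitored file (through its base pointer). ep_ptable_queue_proc uses this structure to add the epoll wait-queue entry to the wakeup queue of the target file (the monitored socket file).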

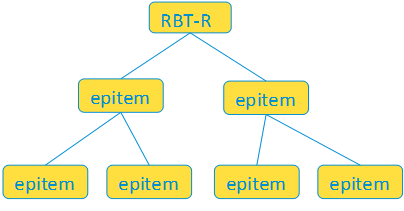

Red-black tree structure:

The red-black tree stores and organizes the struct epitem structures that represent the monitored files.

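Kernel implementation of the Linux EPOLL interfaces

Analysis of epoll_create

1) ep_alloc

Creates a new struct eventpoll.

2) get_unused_fd_flags

Obtains an unused file descriptor (fd).

3) anon_inode_getfile

Creates a new struct file instance and attaches it to an anonymous inode;

the struct eventpoll is assigned to struct file->private_data of the epoll file.

4) fd_install

Installs the struct file into the file array of the process.

5) struct eventpoll->file = the struct file of the epoll file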
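The anonymous inode from step 3 is visible from user space. The following sketch assumes a Linux system with /proc mounted; the helper name epoll_fd_link_target is made up for this illustration.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/epoll.h>
#include <unistd.h>

/*
 * Hypothetical helper (illustration only): creates an epoll instance and
 * copies the symlink target of /proc/self/fd/<epfd> into buf.
 * Returns 0 on success, -1 on error.
 */
int epoll_fd_link_target(char *buf, size_t buflen)
{
    char path[64];
    ssize_t len;
    int epfd;

    if (buflen == 0)
        return -1;

    epfd = epoll_create1(EPOLL_CLOEXEC);
    if (epfd == -1)
        return -1;

    snprintf(path, sizeof(path), "/proc/self/fd/%d", epfd);
    len = readlink(path, buf, buflen - 1);  /* readlink does not NUL-terminate */
    close(epfd);
    if (len < 0)
        return -1;

    buf[len] = '\0';
    return 0;
}
```

On the systems this was written against, the link target reads anon_inode:[eventpoll], which matches the anon_inode_getfile step: the epoll file has no path in any mounted filesystem.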

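Analysis of epoll_ctl

The handler functions involved are ep_insert, ep_remove and ep_modify, which serve EPOLL_CTL_ADD, EPOLL_CTL_DEL and EPOLL_CTL_MOD respectively.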
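Seen from user space, the three operations behave as the epoll_ctl man page documents: adding an fd twice fails with EEXIST, and modifying an fd that is not registered fails with ENOENT. A minimal sketch, assuming a Linux system; the helper names ctl and epoll_ctl_sequence are hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical helper: one epoll_ctl() call, returns 0 or the errno value. */
static int ctl(int epfd, int op, int fd, uint32_t events)
{
    struct epoll_event ev;

    memset(&ev, 0, sizeof(ev));
    ev.events = events;
    ev.data.fd = fd;
    return epoll_ctl(epfd, op, fd, &ev) == -1 ? errno : 0;
}

/*
 * Hypothetical helper: runs ADD, ADD, MOD, DEL, MOD on the read end of a
 * pipe and stores each result in results[0..4].
 * Returns 0 if the setup worked, -1 otherwise.
 */
int epoll_ctl_sequence(int results[5])
{
    int pipefd[2], epfd;

    if (pipe(pipefd) == -1)
        return -1;
    epfd = epoll_create1(0);
    if (epfd == -1) {
        close(pipefd[0]);
        close(pipefd[1]);
        return -1;
    }

    results[0] = ctl(epfd, EPOLL_CTL_ADD, pipefd[0], EPOLLIN);            /* new item */
    results[1] = ctl(epfd, EPOLL_CTL_ADD, pipefd[0], EPOLLIN);            /* already registered */
    results[2] = ctl(epfd, EPOLL_CTL_MOD, pipefd[0], EPOLLIN | EPOLLET);  /* change the event mask */
    results[3] = ctl(epfd, EPOLL_CTL_DEL, pipefd[0], 0);                  /* remove the item */
    results[4] = ctl(epfd, EPOLL_CTL_MOD, pipefd[0], EPOLLIN);            /* no longer registered */

    close(epfd);
    close(pipefd[0]);
    close(pipefd[1]);
    return 0;
}
```

The expected results are 0, EEXIST, 0, 0 and ENOENT, in that order.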

(1) ep_insert:

Excerpt from ep_insert:

    struct ep_pqueue epq;

    epq.epi = epi;

    /* Initialize the proc and key fields of epq.pt for the poll call below */
    init_poll_funcptr(&epq.pt, ep_ptable_queue_proc);

    /*
     * The poll file operation of the target file is sock_poll
     * (socket_file_ops: .poll = sock_poll), which does:
     *     sock = file->private_data;
     *     return sock->ops->poll(file, sock, wait);
     * i.e. tcp_poll for inet_stream_ops, or udp_poll for inet_dgram_ops.
     * Both functions contain the same statement:
     *     sock_poll_wait(file, sk_sleep(sk), wait);
     *       --> poll_wait(filp, wait_address, p);   adds pwq to the socket's sk_wq
     *       --> p->qproc(filp, wait_address, p);    i.e. calls ep_ptable_queue_proc
     */
    revents = tfile->f_op->poll(tfile, &epq.pt);

    ……

    /* Insert epi into the red-black tree of ep */
    ep_rbtree_insert(ep, epi);

    /*
     * If a monitored event has already occurred and the item is not yet on
     * ep->rdllist, add the epitem to ep->rdllist
     */
    if ((revents & event->events) && !ep_is_linked(&epi->rdllink)) {
        list_add_tail(&epi->rdllink, &ep->rdllist);

        /* Notify the waiting tasks that an event is available */
        if (waitqueue_active(&ep->wq))
            wake_up_locked(&ep->wq);
        if (waitqueue_active(&ep->poll_wait))
            pwake++;
    }

    ……

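(2) Analysis of ep_ptable_queue_proc:

What the function does:

1) Installs the event callback ep_poll_callback; the enclosing poll call then returns the current events

2) Adds the struct eppoll_entry, i.e. the wait-queue entry, to the wait queue head returned by sk_sleep(sk)

Processing flow (as seen from ep_insert):

1) The wait-queue entry of the epitem is added to the socket's sk_wq, and the currently available events are returned

2) epi->fllink is added to tfile->f_ep_links

3) epi (the event poll item) is added to the eventpoll that belongs to the epoll fd

4) If the returned events include a requested event and epi->rdllink is not yet linked, epi is added to ep->rdllist

5) ep->wq is woken up (tasks blocked in sys_epoll_wait sleep on it)

6) ep->poll_wait is woken up (tasks that called file->poll on the epoll file sleep on it)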

    struct ep_pqueue {
        poll_table pt;          /* poll table */
        struct epitem *epi;     /* item that describes the monitored file */
    };

Code analysis:

ep_ptable_queue_proc

--> create struct eppoll_entry *pwq

--> initialize pwq->wait.func = ep_poll_callback (registers the wakeup callback for the socket wait queue)

--> pwq->whead = whead (whead = sk_sleep(sock->sk))

--> pwq->base = epi (the event poll item)

--> add_wait_queue: pwq->wait is added to whead

--> list_add_tail: pwq->llink is added to epi->pwqlist

    static void ep_ptable_queue_proc(struct file *file, wait_queue_head_t *whead,
                                     poll_table *pt)
    {
        struct epitem *epi = ep_item_from_epqueue(pt);
        struct eppoll_entry *pwq;

        if (epi->nwait >= 0 && (pwq = kmem_cache_alloc(pwq_cache, GFP_KERNEL))) {
            /* Set ep_poll_callback as the wakeup callback of the wait entry */
            init_waitqueue_func_entry(&pwq->wait, ep_poll_callback);
            pwq->whead = whead;     /* i.e. sock->sk_wq->wait */
            pwq->base = epi;        /* epitem of the monitored file */
            /* Add the entry to the wait queue of the socket */
            add_wait_queue(whead, &pwq->wait);
            /* Add the entry to the epitem's list of poll wait queues */
            list_add_tail(&pwq->llink, &epi->pwqlist);
            epi->nwait++;
        } else {
            /* We have to signal that an error occurred */
            epi->nwait = -1;
        }
    }

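(3) ep_poll_callback

What the function does:

This callback is invoked by the wait-queue wakeup mechanism. When a monitored file descriptor has an event to report, the code that owns that file descriptor calls it.

1) Handles the ep->ovflist list

If a new event occurs while the application interface is copying events that have already occurred, the new event is chained onto ovflist

2) If epi->rdllink is not yet linked, it is added to the ep->rdllist list

3) If tasks are waiting on ep->wq (that is, blocked in epoll_wait), the waiting user-space tasks are woken up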
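The choice between ovflist and rdllist in steps 1) and 2) can be modelled in a few lines of user-space C. This is a simplified sketch, not kernel code: every name starting with model_ or MODEL_ is hypothetical, locking and task wakeups are left out, and the ready list is not moved to a temporary list during a scan as the kernel does.

```c
#include <assert.h>
#include <stddef.h>

struct model_item {
    int on_ready;                   /* nonzero while linked on the ready list */
    struct model_item *ready_next;
    struct model_item *ovf_next;    /* MODEL_UNACTIVE while not on ovflist */
};

/* Plays the role of EP_UNACTIVE_PTR: "ovflist is not in use". */
#define MODEL_UNACTIVE ((struct model_item *)-1L)

struct model_ep {
    struct model_item *ready_head;  /* stands in for rdllist */
    struct model_item *ready_tail;
    struct model_item *ovflist;     /* MODEL_UNACTIVE, or the overflow chain */
};

void model_ep_init(struct model_ep *ep)
{
    ep->ready_head = ep->ready_tail = NULL;
    ep->ovflist = MODEL_UNACTIVE;
}

void model_item_init(struct model_item *it)
{
    it->on_ready = 0;
    it->ready_next = NULL;
    it->ovf_next = MODEL_UNACTIVE;
}

/* Append to the ready list unless the item is already linked there. */
static void model_ready_add(struct model_ep *ep, struct model_item *it)
{
    if (it->on_ready)
        return;
    it->on_ready = 1;
    it->ready_next = NULL;
    if (ep->ready_tail)
        ep->ready_tail->ready_next = it;
    else
        ep->ready_head = it;
    ep->ready_tail = it;
}

/* Model of the callback: overflow chain during a scan, ready list otherwise. */
void model_poll_callback(struct model_ep *ep, struct model_item *it)
{
    if (ep->ovflist != MODEL_UNACTIVE) {
        if (it->ovf_next == MODEL_UNACTIVE) {   /* chain each item only once */
            it->ovf_next = ep->ovflist;
            ep->ovflist = it;
        }
        return;
    }
    model_ready_add(ep, it);
}

/* A scan starts: from now on the callback has to use ovflist. */
void model_scan_begin(struct model_ep *ep)
{
    ep->ovflist = NULL;
}

/* A scan ends (call only after model_scan_begin): drain ovflist, deactivate it. */
void model_scan_end(struct model_ep *ep)
{
    struct model_item *it, *next;

    for (it = ep->ovflist; it != NULL; it = next) {
        next = it->ovf_next;
        it->ovf_next = MODEL_UNACTIVE;
        model_ready_add(ep, it);
    }
    ep->ovflist = MODEL_UNACTIVE;
}

int model_ready_count(const struct model_ep *ep)
{
    const struct model_item *it;
    int n = 0;

    for (it = ep->ready_head; it != NULL; it = it->ready_next)
        n++;
    return n;
}
```

In this model, an event reported between model_scan_begin and model_scan_end does not touch the ready list; it only appears there once model_scan_end has drained the overflow chain.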

Analysis of epoll_wait

In the kernel, epoll_wait is handled by ep_poll, which does three main things:

(1) Timeout handling

    if (timeout > 0) {
        struct timespec end_time = ep_set_mstimeout(timeout);

        slack = select_estimate_accuracy(&end_time);
        to = &expires;
        *to = timespec_to_ktime(end_time);
    } else if (timeout == 0) {
        /*
         * Avoid the unnecessary trip to the wait queue loop, if the
         * caller specified a non blocking operation.
         */
        timed_out = 1;
        spin_lock_irqsave(&ep->lock, flags);
        goto check_events;
    }

1) If the timeout is greater than 0, an end time of type struct timespec is computed and converted to ktime_t;

2) If the timeout equals 0, timed_out is set to 1 and execution jumps straight to the event-checking code.
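Both timeout branches can be observed from user space on an epoll instance that monitors nothing. The sketch below assumes a Linux system; the helper name timed_empty_wait is hypothetical, and the measured time depends on scheduling, so it is only a lower-bound check.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sys/epoll.h>
#include <time.h>
#include <unistd.h>

/*
 * Hypothetical helper (illustration only): calls epoll_wait() on an empty
 * epoll instance and stores the elapsed time in milliseconds in
 * *elapsed_ms. Returns the epoll_wait() result, or -1 on setup error.
 */
int timed_empty_wait(int timeout_ms, long *elapsed_ms)
{
    struct epoll_event out;
    struct timespec t0, t1;
    int epfd, n;

    epfd = epoll_create1(0);
    if (epfd == -1)
        return -1;

    clock_gettime(CLOCK_MONOTONIC, &t0);
    n = epoll_wait(epfd, &out, 1, timeout_ms);  /* nothing is registered */
    clock_gettime(CLOCK_MONOTONIC, &t1);
    close(epfd);

    *elapsed_ms = (t1.tv_sec - t0.tv_sec) * 1000L +
                  (t1.tv_nsec - t0.tv_nsec) / 1000000L;
    return n;
}
```

With timeout_ms set to 0 the call returns 0 without sleeping; with a positive value it returns 0 only after roughly that many milliseconds have passed.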

(2) Waiting for event notification

If the timeout is not 0 (positive, or negative to wait indefinitely), ep_poll enters the event-fetching flow.

    fetch_events:
        spin_lock_irqsave(&ep->lock, flags);    /* take ep->lock */

        /* If events are already available, skip the wait and fall through to check_events */
        if (!ep_events_available(ep)) {
            /*
             * We don't have any available event to return to the caller.
             * We need to sleep here, and we will be wake up by
             * ep_poll_callback() when events will become available.
             */
            /* Initialize the wait entry and add it to the epoll wait queue ep->wq */
            init_waitqueue_entry(&wait, current);
            __add_wait_queue_exclusive(&ep->wq, &wait);

            for (;;) {
                /*
                 * We don't want to sleep if the ep_poll_callback() sends us
                 * a wakeup in between. That's why we set the task state
                 * to TASK_INTERRUPTIBLE before doing the checks.
                 */
                /* Mark the current task as interruptible */
                set_current_state(TASK_INTERRUPTIBLE);
                /* Leave the loop if events are available or the timeout has expired */
                if (ep_events_available(ep) || timed_out)
                    break;

                /* Leave the loop with -EINTR if the current task has a pending signal */
                if (signal_pending(current)) {
                    res = -EINTR;
                    break;
                }
                /* Release the ep->lock spinlock and sleep until woken up or until the timeout expires */
                spin_unlock_irqrestore(&ep->lock, flags);
                if (!schedule_hrtimeout_range(to, slack, HRTIMER_MODE_ABS))
                    timed_out = 1;

                spin_lock_irqsave(&ep->lock, flags);
            }

            /*
             * Once the sleep is over (timeout, event or signal), remove the
             * task from the ep->wq wait queue and set it back to TASK_RUNNING
             */
            __remove_wait_queue(&ep->wq, &wait);

            set_current_state(TASK_RUNNING);
        }

(3) Handling triggered events

    check_events:
        /* Is it worth to try to dig for events ? */
        eavail = ep_events_available(ep);

        spin_unlock_irqrestore(&ep->lock, flags);

        /*
         * Try to transfer events to user space. In case we get 0 events and
         * there's still timeout left over, we go trying again in search of
         * more luck.
         */
        /* If res is 0 and events are available, copy them to user space */
        if (!res && eavail &&
            !(res = ep_send_events(ep, events, maxevents)) && !timed_out)
            goto fetch_events;

        return res;

(4) ep_send_events

Calls ep_scan_ready_list to scan the rdllist of the epoll instance and copy the events to user space.

The actual call to ep_scan_ready_list:

    return ep_scan_ready_list(ep, ep_send_events_proc, &esed, 0, false);

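(5) ep_scan_ready_list

1) Take over the rdllist of the epoll instance:

    spin_lock_irqsave(&ep->lock, flags);
    /* Move the whole rdllist onto the local txlist */
    list_splice_init(&ep->rdllist, &txlist);
    /*
     * Set ovflist to NULL (empty, but in use). The singly linked ovflist
     * tells ep_poll_callback that a task is currently copying events, so
     * any new event has to be placed on this list.
     */
    ep->ovflist = NULL;
    spin_unlock_irqrestore(&ep->lock, flags);

2) Call the event callback to copy the events to user space

    error = (*sproc)(ep, &txlist, priv);

i.e. ep_send_events_proc is called.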

3) Process the ep->ovflist list

If new events were triggered while events were being copied, they have to be linked into the rdllist of the epoll instance.

    /*
     * During the time we spent inside the "sproc" callback, some
     * other events might have been queued by the poll callback.
     * We re-insert them inside the main ready-list here.
     */
    for (nepi = ep->ovflist; (epi = nepi) != NULL;
         nepi = epi->next, epi->next = EP_UNACTIVE_PTR) {
        /*
         * We need to check if the item is already in the list.
         * During the "sproc" callback execution time, items are
         * queued into ->ovflist but the "txlist" might already
         * contain them, and the list_splice() below takes care of them.
         */
        if (!ep_is_linked(&epi->rdllink))
            list_add_tail(&epi->rdllink, &ep->rdllist);
    }
    /*
     * We need to set back ep->ovflist to EP_UNACTIVE_PTR, so that after
     * releasing the lock, events will be queued in the normal way inside
     * ep->rdllist.
     */
    ep->ovflist = EP_UNACTIVE_PTR;

    /*
     * Quickly re-inject items left on "txlist".
     */
    list_splice(&txlist, &ep->rdllist);

4) If the ready list is not empty and tasks are still waiting on the epoll instance, wake them up

    if (!list_empty(&ep->rdllist)) {
        /*
         * Wake up (if active) both the eventpoll wait list and
         * the ->poll() wait list (delayed after we release the lock).
         */
        if (waitqueue_active(&ep->wq))
            wake_up_locked(&ep->wq);
        if (waitqueue_active(&ep->poll_wait))
            pwake++;
    }

(6) ep_send_events_proc

    /* Walk the list of triggered events that was taken over */
    for (eventcnt = 0, uevent = esed->events;
         !list_empty(head) && eventcnt < esed->maxevents;) {
        epi = list_first_entry(head, struct epitem, rdllink);

        list_del_init(&epi->rdllink);

        /*
         * Call the poll function of the monitored file, e.g. tcp_poll or
         * udp_poll.
         * Note: the second argument, poll_table *wait, is NULL here.
         * ep_insert has already hooked the wait entry onto the socket's
         * sk_sleep queue, so nothing has to be registered again.
         */
        revents = epi->ffd.file->f_op->poll(epi->ffd.file, NULL) &
                  epi->event.events;

        /*
         * If the event mask intersect the caller-requested one,
         * deliver the event to userspace. Again, ep_scan_ready_list()
         * is holding "mtx", so no operations coming from userspace
         * can change the item.
         */
        /* If events were triggered, copy them to user space */
        if (revents) {
            if (__put_user(revents, &uevent->events) ||
                __put_user(epi->event.data, &uevent->data)) {
                list_add(&epi->rdllink, head);
                return eventcnt ? eventcnt : -EFAULT;
            }
            eventcnt++;
            uevent++;
            if (epi->event.events & EPOLLONESHOT)
                epi->event.events &= EP_PRIVATE_BITS;
            else if (!(epi->event.events & EPOLLET)) {
                /*
                 * Level-triggered path (EPOLLET is not set): link the
                 * triggered epi back into the rdllist of the epoll instance.
                 */
                /*
                 * If this file has been added with Level
                 * Trigger mode, we need to insert back inside
                 * the ready list, so that the next call to
                 * epoll_wait() will check again the events
                 * availability. At this point, no one can insert
                 * into ep->rdllist besides us. The epoll_ctl()
                 * callers are locked out by
                 * ep_scan_ready_list() holding "mtx" and the
                 * poll callback will queue them in ep->ovflist.
                 */
                list_add_tail(&epi->rdllink, &ep->rdllist);
            }
        }
    }
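The effect of the EPOLLONESHOT and EPOLLET branches above is visible from user space: if the data is never read, a level-triggered item is reported by every epoll_wait call, while an edge-triggered or one-shot item is reported only once. A minimal sketch, assuming a Linux system; the helper name count_reports is hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <unistd.h>

/*
 * Hypothetical helper (illustration only): writes one byte into a pipe,
 * never reads it, and counts how many of `rounds` non-blocking
 * epoll_wait() calls report the read end. `extra` is OR-ed into EPOLLIN
 * (0, EPOLLET or EPOLLONESHOT). Returns the count, or -1 on error.
 */
int count_reports(uint32_t extra, int rounds)
{
    int pipefd[2], epfd, i, n, count = -1;
    struct epoll_event ev, out;

    if (pipe(pipefd) == -1)
        return -1;
    epfd = epoll_create1(0);
    if (epfd == -1)
        goto close_pipe;

    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN | extra;
    ev.data.fd = pipefd[0];
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, pipefd[0], &ev) == -1)
        goto close_epoll;
    if (write(pipefd[1], "x", 1) != 1)      /* one readiness edge */
        goto close_epoll;

    count = 0;
    for (i = 0; i < rounds; i++) {
        n = epoll_wait(epfd, &out, 1, 0);   /* timeout 0: do not block */
        if (n == -1) {
            count = -1;
            break;
        }
        count += n;
    }

close_epoll:
    close(epfd);
close_pipe:
    close(pipefd[0]);
    close(pipefd[1]);
    return count;
}
```

With three rounds, the level-triggered registration is reported three times because the item is put back on rdllist after each delivery; the other two modes are reported once.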

Socket event notification

    inet_create
    --> sock_init_data
        --> sk->sk_state_change = sock_def_wakeup;
            sk->sk_data_ready   = sock_def_readable;      /* readable, POLLIN: wakes tasks monitoring readable events */
            sk->sk_write_space  = sock_def_write_space;   /* writable, POLLOUT: wakes tasks monitoring writable events */
            sk->sk_error_report = sock_def_error_report;  /* error, POLLERR: wakes tasks monitoring error events */
            sk->sk_destruct     = sock_def_destruct;      /* frees the sock */

Example:

Analysis of sock_def_readable (function body):

    {
        struct socket_wq *wq;

        rcu_read_lock();
        wq = rcu_dereference(sk->sk_wq);    /* get the socket's wait queue */
        if (wq_has_sleeper(wq))             /* does sk->sk_wq->wait have waiters? */
            /*
             * __wake_up_sync_key --> __wake_up_common (with the
             * wait_queue_head q->lock held), which ends up calling
             * ep_poll_callback
             */
            wake_up_interruptible_sync_poll(&wq->wait, POLLIN | POLLPRI |
                                            POLLRDNORM | POLLRDBAND);
        sk_wake_async(sk, SOCK_WAKE_WAITD, POLL_IN);
        rcu_read_unlock();
    }
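The readable and writable notifications can be observed from user space with a socket pair. This sketch assumes a Linux system and uses an AF_UNIX pair instead of the inet sockets discussed above, purely to keep the example self-contained; the helper name socket_events is hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/*
 * Hypothetical helper (illustration only): watches one end of a socket
 * pair for EPOLLIN and EPOLLOUT. If send_first is nonzero, one byte is
 * written on the peer end before waiting. Returns the reported event
 * mask, or 0 if nothing was reported or an error occurred.
 */
uint32_t socket_events(int send_first)
{
    int sv[2], epfd;
    struct epoll_event ev, out;
    uint32_t mask = 0;

    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == -1)
        return 0;
    epfd = epoll_create1(0);
    if (epfd == -1)
        goto close_sockets;

    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN | EPOLLOUT;
    ev.data.fd = sv[0];
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, sv[0], &ev) == -1)
        goto close_epoll;

    /* Data sent by the peer makes sv[0] readable */
    if (send_first && write(sv[1], "x", 1) != 1)
        goto close_epoll;

    if (epoll_wait(epfd, &out, 1, 1000) == 1)
        mask = out.events;

close_epoll:
    close(epfd);
close_sockets:
    close(sv[0]);
    close(sv[1]);
    return mask;
}
```

A fresh socket has send-buffer space, so it reports EPOLLOUT alone; once the peer has written a byte, EPOLLIN is reported as well.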

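Data structure relationship diagram

Q&A:

Q1: Which user-space programming interfaces does epoll commonly offer?

A1: epoll_create, epoll_ctl and epoll_wait

Q2: What is EPOLL?

A2: EPOLL is an I/O event notification mechanism

Q3: Which event triggering modes does EPOLL have?

A3: Level-triggered and edge-triggered

Q4: Which values can the op argument of epoll_ctl take?

A4: EPOLL_CTL_ADD, EPOLL_CTL_MOD, EPOLL_CTL_DEL

Q5: Which events can the EPOLL interfaces monitor?

A5: EPOLLIN, EPOLLOUT, EPOLLERR and others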