一、Flume安装

二、Sqoop安装

参考:Sqoop简介与安装

三、Flume和Sqoop结合使用案例

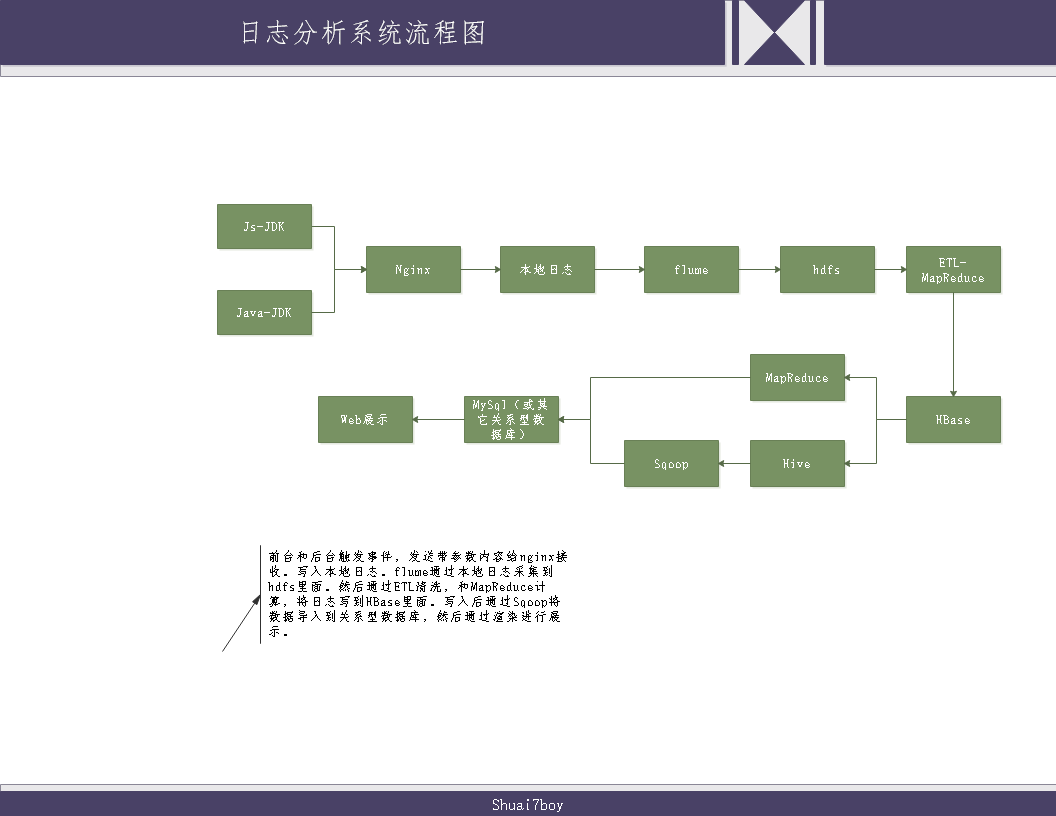

日志分析系统整体架构图:

3.1配置nginx环境

请参考菜鸟教程: https://www.runoob.com/linux/nginx-install-setup.html

按照上述步骤安装完后,需要对nginx配置下访问日志格式:

编辑nginx.conf,默认安装路径在/etc/nginx下

cd /etc/nginx

vim nginx.conf

在http模块下面添加:

解析:(以^A为日志分隔符,remote_addr代表远程地址,msec代表访问时间,http_host代表访问主机名,request_uri代表访问资源)

log_format my_format '$remote_addr^A$msec^A$http_host^A$request_uri';

在server模块下面添加:

解析:(访问地址 域名/log.gif,请求格式是image,存放地址是/opt/data/access.log )

location =/log.gif {

default_type image/gif;

access_log /opt/data/access.log my_format;

}

这样访问nginx的时候就会生成类似下面的内容:

192.168.40.1^A1577365502.563^Atuge1^A/log.gif?en=e_crt&oid=123456&on=%E6%B5%8B%E8%AF%95%E8%AE%A2%E5%8D%95123456&cua=524.01&cut=RMB&pt=alipay&ver=1&pl=website&sdk=js&u_ud=039F6588-ED65-4187-87CF-9DBBC9F19645&u_mid=zhangsan&u_sd=605DECAA-93C0-46B7-AC47-7B1898DBD6BC&c_time=1577365502881&l=zh-CN&b_iev=Mozilla%2F5.0%20(Windows%20NT%2010.0%3B%20WOW64)%20AppleWebKit%2F537.36%20(KHTML%2C%20like%20Gecko)%20Chrome%2F78.0.3904.97%20Safari%2F537.36&b_rst=1536*86

3.2编写触发事件传输代码

代码思路:

- 前端通过触发事件,生成image格式数据发送。

- 后端通过API接口直接发送Get请求。

代码连接: https://gitee.com/shuai7boy/BIG_DATA_LOG

3.3运行Flume,将本地日志写入HDFS

编写flume代码,参考官方案例: http://flume.apache.org/releases/content/1.6.0/FlumeUserGuide.html

在安装Linux的服务器上,创建一个监控文件(姑且取名optionHdfs.conf):

# example.conf: A single-node Flume configuration

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /opt/data/access.log --监控文件路径,新增加内容就会往hdfs里面写。

# Describe the sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path =hdfs://tuge2:9000/flume/webdata/%Y-%m-%d --填写active NameNode

a1.sinks.k1.hdfs.useLocalTimeStamp = true

a1.sinks.k1.hdfs.filePrefix = events-

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 10

a1.sinks.k1.hdfs.roundUnit = minute

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

运行flume,同样参考官方来就行:flume-ng agent --conf conf --conf-file optionHdfs.conf --name a1 -Dflume.root.logger=INFO,console

运行后就按照文件规则将日志里面的内容导入hdfs里了。

3.4编写ETL代码,将HDFS内容导入到HBase里面

代码思路:

- 使用Map/Reduce将hdfs中的内容提取出来进行分割处理,然后Map到HBase里面。

代码连接:https://gitee.com/shuai7boy/BIG_DATA_ETL

第一种分析方案:

3.5使用Map/Reduce将HBase数据分析处理后导入到MySql

数据和维度:

- 用户某段时间活跃量

- 用户某段时间基于某个浏览器的活跃量

- 用户某段时间新增人数

- 用户某段时间基于某个浏览器的新增人数

主要拿用户时间活跃量和用户某段时间基于某个浏览器的活跃量来讨论。

代码思路:

-

定义来源:

在Runner类里面定义来源为HBase。

-

定义维度

将基于用户活跃度,基于某平台的用户活跃度,新增用户数,基于某平台的新增用户数等维度进行设定类。

-

进行Map:

继承TableMapper

根据查询的数据来源,映射成 维度+用户信息。

-

进行Reduce:

继承Reducer

计算成 维度+用户去重。

-

进行To MySql:

继承OutputFormat重写getRecordWriter,checkOutputSpecs,getOutputCommitter

继承RecordWriter重写write和close

继承IDimensionConverter重写getDimensionIdByValue,executeSql

在Runner里面定义写入MySql。SQL语句都定义在了配置文件里面,根据维度进行调用。

首先判断各个维度是否存在,不存在先写入维度信息。 然后就是写入更新统计信息(每映射10条更新一次。)

第二种分析方案:

3.6在Hive里面创建数据表进行存数据

HBase表结构:Row,Name(列族+限定符),timestamp,Value

- 在hive中创建hbase的eventlog对应表,并进行hive表和hbase表关联

hive和hbase表关联官方文档: https://cwiki.apache.org/confluence/display/Hive/HBaseIntegration

CREATE EXTERNAL TABLE event_logs(

key string, pl string, en string, s_time bigint, p_url string, u_ud string, u_sd string

) ROW FORMAT SERDE 'org.apache.hadoop.hive.hbase.HBaseSerDe'

STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties('hbase.columns.mapping'=':key,log:pl,log:en,log:s_time,log:p_url,log:u_ud,log:u_sd')

tblproperties('hbase.table.name'='eventlog');

- 创建一个中间表(PS:要和MySql表结构保持一致,计算结果存放此表并同步MySql)

CREATE TABLE stats_view_depth (

platform_dimension_id bigint ,

data_dimension_id bigint ,

kpi_dimension_id bigint ,

pv1 bigint ,

pv2 bigint ,

pv3 bigint ,

pv4 bigint ,

pv5_10 bigint ,

pv10_30 bigint ,

pv30_60 bigint ,

pv60_plus bigint ,

created string

) row format delimited fields terminated by ' ';

- 创建一个临时表(PS:存放中间结果)

CREATE TABLE stats_view_depth_tmp(pl string, date string, col string, ct bigint);

3.7编写platformdimension和datedimension(日期维度处理类)

注:要继承udf

/**

* 操作日期dimension 相关的udf

*

* @author root

*

*/

public class DateDimensionUDF extends UDF {

private IDimensionConverter converter = new DimensionConverterImpl();

/**

* 根据给定的日期(格式为:yyyy-MM-dd)至返回id

*

* @param day

* @return

*/

public IntWritable evaluate(Text day) {

DateDimension dimension = DateDimension.buildDate(TimeUtil.parseString2Long(day.toString()), DateEnum.DAY);

try {

//根据日期查找时间维度表,如果有数据则返回表ID,没有数据,则插入后返回表ID。

int id = this.converter.getDimensionIdByValue(dimension);

return new IntWritable(id);

} catch (IOException e) {

throw new RuntimeException("获取id异常");

}

}

}

将编写的内容进行打包上传到linux服务器

3.8创建hive的function

create function date_convert as 'shuai7boy.vip.transformer.hive.DateDimensionUDF' using jar 'hdfs://tuge2:9000/transform/transform-0.0.1.jar';

其中一开始没加端口号报错:java.lang.IllegalArgumentException: java.net.UnknownHostException: transform ,然后参考博文 https://blog.csdn.net/heming621/article/details/53317562 解决了。

3.9编写HQL语句进行计算

- 根据用户的角度统计每个页面的浏览量

上面我们将HDFS数据导入到了HBase里面,并且做了Hive表和HBase表同步,又因为Hive表支持HQL语句。所在在Hive里面使用HQL语句就能进行分析。

计算用户的浏览深度

from (

select

pl, from_unixtime(cast(s_time/1000 as bigint),'yyyy-MM-dd') as day, u_ud,

(case when count(p_url) = 1 then "pv1"

when count(p_url) = 2 then "pv2"

when count(p_url) = 3 then "pv3"

when count(p_url) = 4 then "pv4"

when count(p_url) >= 5 and count(p_url) <10 then "pv5_10"

when count(p_url) >= 10 and count(p_url) <30 then "pv10_30"

when count(p_url) >=30 and count(p_url) <60 then "pv30_60"

else 'pv60_plus' end) as pv

from event_logs

where

en='e_pv'

and p_url is not null

and pl is not null

and s_time >= unix_timestamp('2016-06-08','yyyy-MM-dd')*1000

and s_time < unix_timestamp('2016-06-09','yyyy-MM-dd')*1000

group by

pl, from_unixtime(cast(s_time/1000 as bigint),'yyyy-MM-dd'), u_ud

) as tmp

insert overwrite table stats_view_depth_tmp

select pl,day,pv,count(distinct u_ud) as ct where u_ud is not null group by pl,day,pv;

--将行转列

with tmp as

(

select pl,date as date1,ct as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv1' union all

select pl,date as date1,0 as pv1,ct as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv2' union all

select pl,date as date1,0 as pv1,0 as pv2,ct as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv3' union all

select pl,date as date1,0 as pv1,0 as pv2,0 as pv3,ct as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv4' union all

select pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,ct as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv5_10' union all

select pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,ct as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv10_30' union all

select pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,ct as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv30_60' union all

select pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,ct as pv60_plus from stats_view_depth_tmp where col='pv60_plus' union all

select 'all' as pl,date as date1,ct as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv1' union all

select 'all' as pl,date as date1,0 as pv1,ct as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv2' union all

select 'all' as pl,date as date1,0 as pv1,0 as pv2,ct as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv3' union all

select 'all' as pl,date as date1,0 as pv1,0 as pv2,0 as pv3,ct as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv4' union all

select 'all' as pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,ct as pv5_10,0 as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv5_10' union all

select 'all' as pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,ct as pv10_30,0 as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv10_30' union all

select 'all' as pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,ct as pv30_60,0 as pv60_plus from stats_view_depth_tmp where col='pv30_60' union all

select 'all' as pl,date as date1,0 as pv1,0 as pv2,0 as pv3,0 as pv4,0 as pv5_10,0 as pv10_30,0 as pv30_60,ct as pv60_plus from stats_view_depth_tmp where col='pv60_plus'

)

from tmp

insert overwrite table stats_view_depth

select 2,date_convert(date1),6,sum(pv1),sum(pv2),sum(pv3),sum(pv4),sum(pv5_10),sum(pv10_30),sum(pv30_60),sum(pv60_plus),'2017-01-10' group by pl,date1;

执行可能出现异常:Failed to recognize predicate 'xxx'. Failed rule: 'identifier' in column specification。

解决方案:主要原因是使用了date关键字导致的,弃用保留关键字即可。

在hive-site.xml里面添加如下命令:

<property>

<name>hive.support.sql11.reserved.keywords</name>

<value>false</value>

</property>

参考博客: https://blog.csdn.net/sjf0115/article/details/73244762

4.0使用Sqoop将hive中的数据同步到MySql里面

退出hive命令,执行以下语句:

sqoop export --connect jdbc:mysql://tuge1:3306/result_db --username root --password 123456 --table stats_view_depth --export-dir /user/hive/warehouse/stats_view_depth/* --input-fields-terminated-by "\t" --update-mode allowinsert --update-key platform_dimension_id,data_dimension_id,kpi_dimension_id;

然后就能在MySql里面看到数据了,以后的事情就是把数据在平台渲染下,这里就不演示了。