1. Create the Scrapy project

In a command prompt (DOS window), enter:

```
scrapy startproject images360
cd images360
```

2. Write items.py (this works like a template: the data to be scraped is defined here)

```python
import scrapy


class Images360Item(scrapy.Item):
    # define the fields for your item here like:
    # Image ID
    image_id = scrapy.Field()
    # Image URL
    url = scrapy.Field()
    # Title
    title = scrapy.Field()
    # Thumbnail URL
    thumb = scrapy.Field()
```
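An Item works as a template because it behaves like a dict that only accepts its declared fields: assigning a misspelled field raises `KeyError` instead of silently storing it. The class below is a simplified, hypothetical stand-in written in plain Python (no Scrapy required) to illustrate that behaviour; it is not Scrapy's actual implementation.

```python
class FieldCheckedItem(dict):
    """Simplified stand-in for scrapy.Item: only declared fields may be set.

    Note: only item[key] = value is checked here; dict.update() and the
    dict constructor bypass __setitem__ in this sketch.
    """

    allowed_fields = frozenset()

    def __setitem__(self, key, value):
        if key not in self.allowed_fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class Images360ItemSketch(FieldCheckedItem):
    # Same four fields as Images360Item in items.py
    allowed_fields = frozenset({"image_id", "url", "title", "thumb"})


item = Images360ItemSketch()
item["image_id"] = "42"
print(dict(item))  # {'image_id': '42'}
try:
    item["titel"] = "typo"  # misspelled field name is rejected
except KeyError as exc:
    print("rejected:", exc)
```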

3. Create the spider file

In a command prompt (DOS window), enter:

```
scrapy genspider myspider image.so.com
```
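The spider written in the next step requests the 360 Images Ajax endpoint page by page, passing the channel, list type and an `sn` value of `page * 30` as query parameters. A minimal sketch of how those 50 request URLs are assembled with `urllib.parse.urlencode`, assuming the endpoint is `https://image.so.com/zj?` (the site may have changed it since); `build_page_urls` is a hypothetical helper name:

```python
from urllib.parse import urlencode

# Assumed Ajax endpoint; the trailing "?" is followed directly by the query string
BASE_URL = "https://image.so.com/zj?"


def build_page_urls(first_page=1, last_page=50, per_page=30):
    """Return one request URL per page, with sn = page * per_page."""
    urls = []
    for page in range(first_page, last_page + 1):
        params = {"ch": "beauty", "listtype": "new", "sn": page * per_page}
        urls.append(BASE_URL + urlencode(params))
    return urls


urls = build_page_urls()
print(len(urls))  # 50
print(urls[0])    # https://image.so.com/zj?ch=beauty&listtype=new&sn=30
```

`urlencode` keeps the dict's insertion order, so the parameters appear in the URL in the order they were added.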

4. Write myspider.py (receives the responses and processes the data)

```python
# -*- coding: utf-8 -*-
import json
from urllib.parse import urlencode

import scrapy

from images360.items import Images360Item


class MyspiderSpider(scrapy.Spider):
    name = 'myspider'
    # The Ajax endpoint is on image.so.com (not images.so.com)
    allowed_domains = ['image.so.com']
    base_url = 'https://image.so.com/zj?'

    def start_requests(self):
        # Query parameters observed for this channel:
        # ch: beauty, sn: 120, listtype: new, temp: 1
        # sn = page * 30, so pages 1..50 give sn = 30..1500
        data = {'ch': 'beauty', 'listtype': 'new'}
        for page in range(1, 51):
            data['sn'] = page * 30
            url = self.base_url + urlencode(data)
            yield scrapy.Request(url, callback=self.parse)

    def parse(self, response):
        # The endpoint returns JSON, not HTML
        result = json.loads(response.text)
        # "or []" avoids a TypeError if 'list' is missing or null
        for each in result.get('list') or []:
            item = Images360Item()
            item['image_id'] = each.get('imageid')
            item['url'] = each.get('qhimg_url')
            item['title'] = each.get('group_title')
            item['thumb'] = each.get('qhimg_thumb_url')
            yield item
```
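The parsing logic in `parse` does not depend on Scrapy itself, so it can be checked offline against a saved response body. A minimal sketch, assuming the response is a JSON object whose `list` array holds records with the four keys the spider reads; `parse_images` and the sample body are hypothetical, for illustration only:

```python
import json


def parse_images(text):
    """Turn one JSON response body into a list of item dicts, like parse()."""
    result = json.loads(text)
    items = []
    # A missing or null 'list' yields no items instead of raising TypeError
    for each in result.get("list") or []:
        items.append({
            "image_id": each.get("imageid"),
            "url": each.get("qhimg_url"),
            "title": each.get("group_title"),
            "thumb": each.get("qhimg_thumb_url"),
        })
    return items


# Hypothetical response body, trimmed to the keys parse() reads
body = ('{"list": [{"imageid": "42", "qhimg_url": "https://example.com/42.jpg", '
        '"group_title": "demo", "qhimg_thumb_url": "https://example.com/42_t.jpg"}]}')
print(parse_images(body)[0]["title"])  # demo
print(parse_images("{}"))              # []
```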

5. Write pipelines.py (stores the data)

```python
import pymysql


class Images360Pipeline(object):
    def __init__(self):
        # Connect to the local MySQL database "quotes"; the table
        # images360(image_id, url, title, thumb) must already exist
        self.connect = pymysql.connect(
            host='localhost',
            user='root',
            password='',
            database='quotes',
            charset='utf8',
        )
        self.cursor = self.connect.cursor()

    def process_item(self, item, spider):
        item = dict(item)
        # Parameterized query: pymysql escapes the values for the %s placeholders
        sql = 'insert into images360(image_id,url,title,thumb) values(%s,%s,%s,%s)'
        self.cursor.execute(sql, (item['image_id'], item['url'], item['title'], item['thumb']))
        self.connect.commit()
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes
        self.cursor.close()
        self.connect.close()
```
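To try the storage step without a MySQL server, the same pipeline lifecycle (open a connection, insert one row per item with a parameterized query, commit, close in `close_spider`) can be sketched with Python's built-in `sqlite3` module in place of pymysql. The database swap is only for illustration, and the `CREATE TABLE` statement with TEXT columns is an assumption, since the post does not show the MySQL table definition; `SQLiteImagesPipeline` is a hypothetical name.

```python
import sqlite3


class SQLiteImagesPipeline:
    """Same lifecycle as Images360Pipeline, using sqlite3 instead of MySQL."""

    def __init__(self, path=":memory:"):
        self.connect = sqlite3.connect(path)
        self.cursor = self.connect.cursor()
        # Assumed schema: four TEXT columns matching the item fields
        self.cursor.execute(
            "CREATE TABLE IF NOT EXISTS images360 "
            "(image_id TEXT, url TEXT, title TEXT, thumb TEXT)"
        )

    def process_item(self, item, spider=None):
        item = dict(item)
        # sqlite3 uses ? placeholders where pymysql uses %s
        sql = "INSERT INTO images360 (image_id, url, title, thumb) VALUES (?, ?, ?, ?)"
        self.cursor.execute(sql, (item["image_id"], item["url"], item["title"], item["thumb"]))
        self.connect.commit()
        return item

    def close_spider(self, spider=None):
        self.cursor.close()
        self.connect.close()


pipeline = SQLiteImagesPipeline()
pipeline.process_item({"image_id": "42", "url": "u", "title": "demo", "thumb": "t"})
print(pipeline.cursor.execute("SELECT COUNT(*) FROM images360").fetchone()[0])  # 1
pipeline.close_spider()
```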

6. Edit settings.py (configure headers, pipelines, etc.)

robots.txt protocol

```python
# Obey robots.txt rules
ROBOTSTXT_OBEY = False
```

Request headers

```python
DEFAULT_REQUEST_HEADERS = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    # 'Accept-Language': 'en',
}
```

Item pipelines (the module path must match the project name, images360)

```python
ITEM_PIPELINES = {
    'images360.pipelines.Images360Pipeline': 300,
}
```

7. Run the spider

In a command prompt (DOS window), enter:

```
scrapy crawl myspider
```

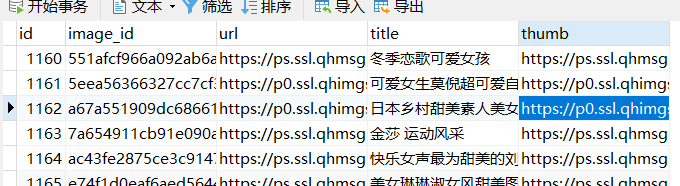

Result of the run