1 引言

DSSM,业内也叫做双塔模型,2013年微软发出来是为了解决NLP领域中计算语义相似度任务,即如何让搜索引擎在大规模web文件中基于query给出最相似的docment。因为语义匹配本身是一种排序问题,和推荐场景不谋而合,所以 DSSM 模型被自然的引入到推荐领域中。因为效果不错并且对工业界十分友好,所以被各大厂广泛应用于推荐系统中。

2 DSSM结构图

如上图所示,示意图下,就是给定一堆doc的基础上,给一个query,想要知道哪个doc最接近;其中就是n+1个塔,一个query塔和n个docment塔:

- 输入中每个doc部分是一个高维度的向量,一个没有归一化的docment(如词袋形式);query部分就是一个onehot形式;

- 输出是一个低维语义特征空间中的概念向量;

然后:

- 将每个输入向量都映射成对应的语义概念向量,就图中的128维度;

- 计算一个文档和一个query各自的语义概念向量之间的cos值,其中n可以选择10,然后包含6个点击的正样本和4个随机选择的负样本;

其中目标函数是:

- \(D\)包含\(D^+\)和\(D^-\),其中\(D^+\)为点击过的doc,\(D^-\)为随机选取的未点击doc,最终loss是最小化下面的式子

- 先计算query和每个doc的cos值

- 再基于cos计算query和每个doc的softmax值

- 然后基于最开始的loss函数计算loss值

在大规模的web搜索中,往往是先训练完整个模型,然后对每个docment都先执行一次得到每个概念向量,提前保存下来;

然后再等query来,过一遍获取其对应的概念向量,然后直接通过诸如faiss等等向量引擎找到最匹配的那个docment概念向量即可

3 word hashing

但是如上图所示,因为输入其实就是onehot和multihot,但是随着整个网站规模变大,比如谷歌,千万亿的量,那么将其放入dnn,几乎没法训练,所以需要对输入层进行瘦身,因此文中提出 word hashing,举例good这个单词

- 左右加一个# 符号;

- 以n-grams进行切分,这里n=3,则分为#go, goo, ood, od#;

- 将所有单词都这么切,然后来个词袋模型,如good原来是onehot,000001000;现在就成了010100101类似样子了(其中的1只是刚好出现一次而已)

其实就是类似词根为桶,有点布隆过滤器的意思,这样将onehot就直接降维了,

4 推荐中召回的使用DSSM

如果将图中最左侧看作一个塔,并称为用户塔;那右侧就可以称为物料塔(或者物料塔1,2,…,n),双塔,即在写网络结构时,一个DNN表示用户塔,一个DNN表示物料塔,互相独立,这里有网络结构的讲解,并且有代码;

如DSSM双塔模型原理及在推荐系统中的应用中提到的,实际上使用DSSM解决不同的问题,我们通常使用不同的loss函数,双塔模型通过使用不同的label构造不同的模型,比如点击率模型采用用户向量和文章向量内积结果过sigmoid作为预估值,用到的损失函数为logloss,时长模型直接使用用户向量和文章向量的内积作为预估值,损失函数为mse。

用户侧和Item侧分别构建多层NN模型,最后分别输出一个多维embedding,分别作为该用户和Item的低维语义表征,然后通过相似度函数如余弦相似度来计算两者相关性,通过计算与实际label如是否点击、阅读时长等的损失,进行后向传播优化网络参数。

在实际工程中,

- Item Embeding会通过持续调用模型Item侧网络,将结果保存起来,如放到Faiss中;

- User Embedding在线上Serving时需要通过调用模型用户侧网络进行计算,获得一个向量,然后将其基于faiss找到最相似的那些item

5 基于pytorch的代码实现

这里基于DSSM双塔模型及pytorch实现的代码进行学习dssm的过程

5.1 数据展示及其预处理

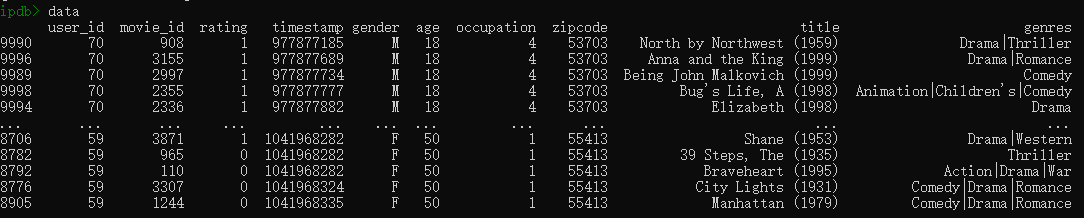

我们给一个观影者给电影评分的数据集,其实就类似电商的购买者买了啥或者看了啥的数据集,列名为

def data_process(data_path='movielens.txt', samp_rows=10000):

'''按时间升序,将最后的20%作为测试集 '''

data = pd.read_csv(data_path, nrows=samp_rows)

data['rating'] = data['rating'].apply(lambda x: 1 if x > 3 else 0) # 投票大于3星的为正类,其余的为负类

data = data.sort_values(by='timestamp', ascending=True)

train = data.iloc[:int(len(data)*0.8)].copy()

test = data.iloc[int(len(data)*0.8):].copy()

return train, test, data

train, test, data = data_process(data_path, samp_rows=10000)

pandas读取数据时如上图

转换后data如上图

5.2 特征处理

5.2.1 计算每个user的推荐正类物料特征,计算每个item的平均打分特征

接着获取user特征和item特征

def get_user_feature(data):

'''针对每个user,将其标记为正样本的收集起来,计算其user_hist即历史访问;以及user_mean_rating '''

data_group = data[data['rating'] == 1]

data_group = data_group[['user_id', 'movie_id']].groupby('user_id').agg(list).reset_index()

data_group['user_hist'] = data_group['movie_id'].apply(lambda x: '|'.join([str(i) for i in x]))

data = pd.merge(data_group.drop('movie_id', axis=1), data, on='user_id')

data_group = data[['user_id', 'rating']].groupby('user_id').agg('mean').reset_index()

data_group.rename(columns={'rating': 'user_mean_rating'}, inplace=True)

data = pd.merge(data_group, data, on='user_id')

return data

def get_item_feature(data):

'''计算每个item的,所有打分的均值 '''

data_group = data[['movie_id', 'rating']].groupby('movie_id').agg('mean').reset_index()

data_group.rename(columns={'rating': 'item_mean_rating'}, inplace=True)

data = pd.merge(data_group, data, on='movie_id')

return data

train = get_user_feature(train)

train = get_item_feature(train)

经过get_user_feature处理,每个用户新增user_hist和user_mean_rating列

经过get_item_feature的处理,将每个item为基,计算所有该item的得分,并计算其均值

5.2.2 区分稀疏特征和密集特征,并进行归一化等处理

当前列一共有13列

movie_id item_mean_rating user_id user_mean_rating

user_hist rating timestamp gender

age occupation zipcode title

genres

将其分为稀疏特征和密集特征

# 先标明哪些是稀疏特征,哪些是密集特征

sparse_features = ['user_id', 'movie_id', 'gender', 'age', 'occupation']

dense_features = ['user_mean_rating', 'item_mean_rating']

target = ['rating']

user_sparse_features, user_dense_features = ['user_id', 'gender', 'age', 'occupation'], ['user_mean_rating']

item_sparse_features, item_dense_features = ['movie_id', ], ['item_mean_rating']

#==================================

# 1.Label Encoding for sparse features,and process sequence features

# 即对稀疏特征进行索引化,即将其标记为字典的序列索引,

from sklearn.preprocessing import LabelEncoder, MinMaxScaler

for feat in sparse_features:

lbe = LabelEncoder()

lbe.fit(data[feat])

train[feat] = lbe.transform(train[feat])

test[feat] = lbe.transform(test[feat])

mms = MinMaxScaler(feature_range=(0, 1))

mms.fit(train[dense_features])

train[dense_features] = mms.transform(train[dense_features])

经过LabeEncoder之后,即将对应的稀疏特征进行index索引化

经过MinMaxScaler之后,即将对应的密集特征进行归一化到[0-1]之间

5.2.3 几个预处理函数

from collections import OrderedDict, namedtuple, defaultdict

DEFAULT_GROUP_NAME = "default_group"

class SparseFeat(namedtuple('SparseFeat', ['name', 'vocabulary_size', 'embedding_dim', 'use_hash', 'dtype',

'embedding_name', 'group_name'])):

def __new__(cls, name, vocabulary_size, embedding_dim=4, use_hash=False, dtype='int32', embedding_name=None,

group_name=DEFAULT_GROUP_NAME):

if embedding_name is None:

embedding_name = name

if embedding_dim == 'auto':

embedding_dim = 6 * int(pow(vocabulary_size, 0.25))

if use_hash:

print("Notice! Feature Hashing on the fly currently!")

return super(SparseFeat, cls).__new__(cls, name, vocabulary_size, embedding_dim, use_hash, dtype,

embedding_name, group_name)

def __hash__(self):

return self.name.__hash__()

class VarLenSparseFeat(namedtuple('VarLenSparseFeat', ['sparsefeat', 'maxlen', 'combiner', 'length_name'])):

def __new__(cls, sparsefeat, maxlen, combiner='mean', length_name=None):

return super(VarLenSparseFeat, cls).__new__(cls, sparsefeat, maxlen, combiner, length_name)

@property

def name(self):

return self.sparsefeat.name

@property

def vocabulary_size(self):

return self.sparsefeat.vocabulary_size

@property

def embedding_dim(self):

return self.sparsefeat.embedding_dim

@property

def dtype(self):

return self.sparsefeat.dtype

@property

def embedding_name(self):

return self.sparsefeat.embedding_name

@property

def group_name(self):

return self.sparsefeat.group_name

def __hash__(self):

return self.name.__hash__()

class DenseFeat(namedtuple('DenseFeat', ['name', 'dimension', 'dtype'])):

def __new__(cls, name, dimension=1, dtype="float32"):

return super(DenseFeat, cls).__new__(cls, name, dimension, dtype)

def __hash__(self):

return self.name.__hash__()

5.2.3 处理序列特征,并将它们进行索引化

在前面的data中,序列特征主要是电影的标签(动作,爱情等等)和每个user的观影历史

from keras.preprocessing.sequence import pad_sequences

from preprocessing.inputs import SparseFeat, DenseFeat, VarLenSparseFeat

def get_var_feature(data, col):

key2index = {}

def split(x):

'''将电影的标签进行独立,并对其赋予索引,key2index就是为了不断地计算索引值 '''

key_ans = x.split('|')

for key in key_ans:

if key not in key2index:

# Notice : input value 0 is a special "padding",\

# so we do not use 0 to encode valid feature for sequence input

key2index[key] = len(key2index) + 1

return list(map(lambda x: key2index[x], key_ans))

var_feature = list(map(split, data[col].values))

var_feature_length = np.array(list(map(len, var_feature)))

max_len = max(var_feature_length)

var_feature = pad_sequences(var_feature, maxlen=max_len, padding='post', )

return key2index, var_feature, max_len

#=====================================

# 2.preprocess the sequence feature

genres_key2index, train_genres_list, genres_maxlen = get_var_feature(train, 'genres')

user_key2index, train_user_hist, user_maxlen = get_var_feature(train, 'user_hist')

# 处理 ['user_id', 'gender', 'age', 'occupation'], ['user_mean_rating']

user_feature_columns = [SparseFeat(feat, data[feat].nunique(), embedding_dim=4)

for i, feat in enumerate(user_sparse_features)] + \

[DenseFeat(feat, 1, ) for feat in user_dense_features]

# 处理 ['movie_id', ], ['item_mean_rating']

item_feature_columns = [SparseFeat(feat, data[feat].nunique(), embedding_dim=4)

for i, feat in enumerate(item_sparse_features)] + \

[DenseFeat(feat, 1, ) for feat in item_dense_features]

# 处理 genres

item_varlen_feature_columns = [VarLenSparseFeat(SparseFeat('genres', vocabulary_size=1000, embedding_dim=4),

maxlen=genres_maxlen, combiner='mean', length_name=None)]

#处理 user_hist

user_varlen_feature_columns = [VarLenSparseFeat(SparseFeat('user_hist', vocabulary_size=3470, embedding_dim=4),

maxlen=user_maxlen, combiner='mean', length_name=None)]

# 3.generate input data for model

user_feature_columns += user_varlen_feature_columns

item_feature_columns += item_varlen_feature_columns

# add user history as user_varlen_feature_columns

train_model_input = {name: train[name] for name in sparse_features + dense_features}

train_model_input["genres"] = train_genres_list

train_model_input["user_hist"] = train_user_hist

其中pad_sequences函数的作用是将:

进行矩阵化并填充

user_feature_columns:

item_feature_columns:

item_varlen_feature_columns:

user_varlen_feature_columns:

train_genres_list和train_user_hist

最后train_model_input:

5.3 模型构建

从外到里的方式阅读代码,会容易理解的多

from model.dssm import DSSM

# 4.Define Model,train,predict and evaluate

device = 'cpu'

use_cuda = True

if use_cuda and torch.cuda.is_available():

print('cuda ready...')

device = 'cuda:0'

# -------------------------------------------------------

model = DSSM(user_feature_columns, item_feature_columns, task='binary', device=device)

5.3.1 DSS网络结构

# DSSM的网络结构,其中作者为了方便扩展到其他诸如dssm,esmm的模型扩展,先抽取出基类 BaseTower

from model.base_tower import BaseTower

from preprocessing.inputs import combined_dnn_input, compute_input_dim

from layers.core import DNN

from preprocessing.utils import Cosine_Similarity

class DSSM(BaseTower):

"""DSSM双塔模型"""

def __init__(self, user_dnn_feature_columns, item_dnn_feature_columns, gamma=1, dnn_use_bn=True,

dnn_hidden_units=(300, 300, 128), dnn_activation='relu', l2_reg_dnn=0,

l2_reg_embedding=1e-6, dnn_dropout=0, init_std=0.0001, seed=1024, task='binary',

device='cpu', gpus=None):

super(DSSM, self).__init__(user_dnn_feature_columns, item_dnn_feature_columns,

l2_reg_embedding=l2_reg_embedding, init_std=init_std, seed=seed,

task=task, device=device, gpus=gpus)

if len(user_dnn_feature_columns) > 0:

# 建立一个DNN结构,隐藏神经元(300,300,128),输入compute_input_dim(user_dnn_feature_columns)

self.user_dnn = DNN(compute_input_dim(user_dnn_feature_columns), dnn_hidden_units,

activation=dnn_activation, l2_reg=l2_reg_dnn, dropout_rate=dnn_dropout,

use_bn=dnn_use_bn, init_std=init_std, device=device)

self.user_dnn_embedding = None

if len(item_dnn_feature_columns) > 0:

# 建立一个DNN结构,隐藏神经元(300,300,128),输入compute_input_dim(item_dnn_feature_columns)

self.item_dnn = DNN(compute_input_dim(item_dnn_feature_columns), dnn_hidden_units,

activation=dnn_activation, l2_reg=l2_reg_dnn, dropout_rate=dnn_dropout,

use_bn=dnn_use_bn, init_std=init_std, device=device)

self.item_dnn_embedding = None

self.gamma = gamma

self.l2_reg_embedding = l2_reg_embedding

self.seed = seed

self.task = task

self.device = device

self.gpus = gpus

def forward(self, inputs):

if len(self.user_dnn_feature_columns) > 0:

# 获取user稀疏向量的embedding, 密集向量的

# input_from_feature_columns(); user_embedding_dict都在基类中

user_sparse_embedding_list, user_dense_value_list = \

self.input_from_feature_columns(inputs, self.user_dnn_feature_columns,

self.user_embedding_dict)

# 计算获取user dnn的输入,并传递给之前创建好的user的DNN结构,获取输出,将其视为user的embedding

user_dnn_input = combined_dnn_input(user_sparse_embedding_list, user_dense_value_list)

self.user_dnn_embedding = self.user_dnn(user_dnn_input)

if len(self.item_dnn_feature_columns) > 0:

# 获取item稀疏向量的embedding, 密集向量的

# input_from_feature_columns(); item_embedding_dict都在基类中

item_sparse_embedding_list, item_dense_value_list = \

self.input_from_feature_columns(inputs, self.item_dnn_feature_columns,

self.item_embedding_dict)

# 计算获取item dnn的输入,并传递给之前创建好的item的DNN结构,获取输出,将其视为item的embedding

item_dnn_input = combined_dnn_input(item_sparse_embedding_list, item_dense_value_list)

self.item_dnn_embedding = self.item_dnn(item_dnn_input)

if len(self.user_dnn_feature_columns) > 0 and len(self.item_dnn_feature_columns) > 0:

# 计算user 和item对应 dnn输出embedding 之间的相似性,

# self.out 为PredictionLayer('binary')(score),即将其+1然后进行sigmoid输出

score = Cosine_Similarity(self.user_dnn_embedding, self.item_dnn_embedding, gamma=self.gamma)

output = self.out(score)

return output

elif len(self.user_dnn_feature_columns) > 0:

return self.user_dnn_embedding

elif len(self.item_dnn_feature_columns) > 0:

return self.item_dnn_embedding

else:

raise Exception("input Error! user and item feature columns are empty.")

5.3.2 DSSM 其辅助函数

------------------------------preprocessing.input------------------------------

import numpy as np

import torch

import torch.nn as nn

from collections import OrderedDict, namedtuple

from layers.sequence import SequencePoolingLayer

from collections import OrderedDict, namedtuple, defaultdict

from itertools import chain

DEFAULT_GROUP_NAME = "default_group"

def concat_fun(inputs, axis=-1):

# concat的功能

if len(inputs) == 1:

return inputs[0]

else:

return torch.cat(inputs, dim=axis)

def compute_input_dim(feature_columns, include_sparse=True, include_dense=True, feature_group=False):

# 该函数是计算将要输入到DNN时,输入层的维度大小,主要是稀疏特征+密集特征的维度

# 如果feature_columns存在,则过滤出其中(SparseFeat, VarLenSparseFeat)

sparse_feature_columns = list(

filter(lambda x: isinstance(x, (SparseFeat, VarLenSparseFeat)), feature_columns))

if len(feature_columns) else []

# 如果feature_columns存在,则过滤出其中(DenseFeat)

dense_feature_columns = list(

filter(lambda x: isinstance(x, DenseFeat), feature_columns)) if len(feature_columns) else []

# 获取密集特征concat后的维度

dense_input_dim = sum(map(lambda x: x.dimension, dense_feature_columns))

# 获取稀疏特征concat后的维度

if feature_group:

sparse_input_dim = len(sparse_feature_columns)

else:

sparse_input_dim = sum(feat.embedding_dim for feat in sparse_feature_columns)

# input_dim 为稀疏+密集特征 concat后的维度大小

input_dim = 0

if include_sparse:

input_dim += sparse_input_dim

if include_dense:

input_dim += dense_input_dim

return input_dim

def combined_dnn_input(sparse_embedding_list, dense_value_list):

if len(sparse_embedding_list) > 0 and len(dense_value_list) > 0:

# 稀疏特征和密集特征先concat,然后去除多余的轴,然后两者concat

sparse_dnn_input = torch.flatten(

torch.cat(sparse_embedding_list, dim=-1), start_dim=1)

dense_dnn_input = torch.flatten(

torch.cat(dense_value_list, dim=-1), start_dim=1)

return concat_fun([sparse_dnn_input, dense_dnn_input])

elif len(sparse_embedding_list) > 0:

return torch.flatten(torch.cat(sparse_embedding_list, dim=-1), start_dim=1)

elif len(dense_value_list) > 0:

return torch.flatten(torch.cat(dense_value_list, dim=-1), start_dim=1)

else:

raise NotImplementedError

------------------------------layers.core------------------------------

import torch

import torch.nn as nn

class Identity(nn.Module):

def __init__(self, **kwargs):

super(Identity, self).__init__()

def forward(self, X):

return X

def activation_layer(act_name, hidden_size=None, dice_dim=2):

if isinstance(act_name, str):

if act_name.lower() == 'sigmoid':

act_layer = nn.Sigmoid()

elif act_name.lower() == 'linear':

act_layer = Identity()

elif act_name.lower() == 'relu':

act_layer = nn.ReLU(inplace=True)

elif act_name.lower() == 'dice':

assert dice_dim

# act_layer = Dice(hidden_size, dice_dim)

elif act_name.lower() == 'prelu':

act_layer = nn.PReLU()

elif issubclass(act_name, nn.Module):

act_layer = act_name()

else:

raise NotImplementedError

return act_layer

class DNN(nn.Module):

def __init__(self, inputs_dim, hidden_units, activation='relu', l2_reg=0, dropout_rate=0, use_bn=False,

init_std=0.0001, dice_dim=3, seed=1024, device='cpu'):

super(DNN, self).__init__()

self.dropout_rate = dropout_rate

self.dropout = nn.Dropout(dropout_rate)

self.seed = seed

self.l2_reg = l2_reg

self.use_bn = use_bn

if len(hidden_units) == 0:

raise ValueError("hidden_units is empty!!")

if inputs_dim > 0:

hidden_units = [inputs_dim] + list(hidden_units)

else:

hidden_units = list(hidden_units)

# 建立DNN的网络结构

self.linears = nn.ModuleList(

[nn.Linear(hidden_units[i], hidden_units[i+1]) for i in range(len(hidden_units) - 1)])

# 准备好len(DNN)个bn层

if self.use_bn:

self.bn = nn.ModuleList(

[nn.BatchNorm1d(hidden_units[i+1]) for i in range(len(hidden_units) - 1)])

# 准备好len(DNN)个激活函数层

self.activation_layers = nn.ModuleList(

[activation_layer(activation, hidden_units[i+1], dice_dim)

for i in range(len(hidden_units) - 1)])

# 初始化DNN每一层的权重参数

for name, tensor in self.linears.named_parameters():

if 'weight' in name:

nn.init.normal_(tensor, mean=0, std=init_std)

self.to(device)

def forward(self, inputs):

deep_input = inputs

# 将__init__中准备好的全连接层,bn层,激活函数层,以及dropout层进行组装成完整的DNN网络

# 为什么前面几个都需要ModuleList保存,比如bn层,而dropout不需要;

# 因为linears和bn需要设置不同的参数,而dropout可以使用同一个参数,本质上两者均可用来构建网络结构

# 构建类之后,实例调用__call__ 内部就是调用forward函数

for i in range(len(self.linears)):

fc = self.linears[i](deep_input)

if self.use_bn:

fc = self.bn[i](fc)

fc = self.activation_layers[i](fc)

fc = self.dropout(fc)

deep_input = fc

return deep_input

------------------------------preprocessing.utils------------------------------

import numpy as np

import torch

def Cosine_Similarity(query, candidate, gamma=1, dim=-1):

# 实现cos公式先2个向量内积,再除以向量的模(加上epsilon平滑系数)

query_norm = torch.norm(query, dim=dim)

candidate_norm = torch.norm(candidate, dim=dim)

cosine_score = torch.sum(torch.multiply(query, candidate), dim=-1)

cosine_score = torch.div(cosine_score, query_norm*candidate_norm+1e-8)

cosine_score = torch.clamp(cosine_score, -1, 1.0)*gamma

return cosine_score

5.3.3 BaseTower

可以看到,整体网络构建为DSSM, 主要是先构建一个BaseTower类

from __future__ import print_function

import time

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.data as Data

from sklearn.metrics import *

from torch.utils.data import DataLoader

from tqdm import tqdm

from preprocessing.inputs import SparseFeat, DenseFeat, VarLenSparseFeat, create_embedding_matrix, \

get_varlen_pooling_list, build_input_features

from layers.core import PredictionLayer

from preprocessing.utils import slice_arrays

class BaseTower(nn.Module):

def __init__(self, user_dnn_feature_columns, item_dnn_feature_columns, l2_reg_embedding=1e-5,

init_std=0.0001, seed=1024, task='binary', device='cpu', gpus=None):

super(BaseTower, self).__init__()

torch.manual_seed(seed)

self.reg_loss = torch.zeros((1,), device=device)

self.aux_loss = torch.zeros((1,), device=device)

self.device = device

self.gpus = gpus

if self.gpus and str(self.gpus[0]) not in self.device:

raise ValueError("`gpus[0]` should be the same gpu with `device`")

# 基于build_input_features 处理user特征和item特征

self.feature_index = build_input_features(user_dnn_feature_columns + item_dnn_feature_columns)

# user特征

self.user_dnn_feature_columns = user_dnn_feature_columns

self.user_embedding_dict = create_embedding_matrix(self.user_dnn_feature_columns, init_std,

sparse=False, device=device)

# item特征

self.item_dnn_feature_columns = item_dnn_feature_columns

self.item_embedding_dict = create_embedding_matrix(self.item_dnn_feature_columns, init_std,

sparse=False, device=device)

# 需要做正则化的参数

self.regularization_weight = []

self.add_regularization_weight(self.user_embedding_dict.parameters(), l2=l2_reg_embedding)

self.add_regularization_weight(self.item_embedding_dict.parameters(), l2=l2_reg_embedding)

self.out = PredictionLayer(task,)

self.to(device)

# parameters of callbacks

self._is_graph_network = True # used for ModelCheckpoint

self.stop_training = False # used for EarlyStopping

def fit(self, x=None, y=None, batch_size=None, epochs=1, verbose=1, initial_epoch=0, validation_split=0.,

validation_data=None, shuffle=True, callbacks=None):

if isinstance(x, dict):

x = [x[feature] for feature in self.feature_index]

do_validation = False

if validation_data:

do_validation = True

if len(validation_data) == 2:

val_x, val_y = validation_data

val_sample_weight = None

elif len(validation_data) == 3:

val_x, val_y, val_sample_weight = validation_data

else:

raise ValueError(

'When passing a `validation_data` argument, '

'it must contain either 2 items (x_val, y_val), '

'or 3 items (x_val, y_val, val_sample_weights), '

'or alternatively it could be a dataset or a '

'dataset or a dataset iterator. '

'However we received `validation_data=%s`' % validation_data)

if isinstance(val_x, dict):

val_x = [val_x[feature] for feature in self.feature_index]

elif validation_split and 0 < validation_split < 1.:

do_validation = True

if hasattr(x[0], 'shape'):

split_at = int(x[0].shape[0] * (1. - validation_split))

else:

split_at = int(len(x[0]) * (1. - validation_split))

x, val_x = (slice_arrays(x, 0, split_at),

slice_arrays(x, split_at))

y, val_y = (slice_arrays(y, 0, split_at),

slice_arrays(y, split_at))

else:

val_x = []

val_y = []

for i in range(len(x)):

if len(x[i].shape) == 1:

x[i] = np.expand_dims(x[i], axis=1)

train_tensor_data = Data.TensorDataset(torch.from_numpy(

np.concatenate(x, axis=-1)), torch.from_numpy(y))

if batch_size is None:

batch_size = 256

'''获取训练好的模型; loss函数; 迭代器 '''

model = self.train()

loss_func = self.loss_func

optim = self.optim

if self.gpus:

print('parallel running on these gpus:', self.gpus)

model = torch.nn.DataParallel(model, device_ids=self.gpus)

batch_size *= len(self.gpus) # input `batch_size` is batch_size per gpu

else:

print(self.device)

train_loader = DataLoader(dataset=train_tensor_data, shuffle=shuffle, batch_size=batch_size)

sample_num = len(train_tensor_data)

steps_per_epoch = (sample_num - 1) // batch_size + 1

# train

print("Train on {0} samples, validate on {1} samples, {2} steps per epoch".format(

len(train_tensor_data), len(val_y), steps_per_epoch))

for epoch in range(initial_epoch, epochs):

epoch_logs = {}

start_time = time.time()

loss_epoch = 0

total_loss_epoch = 0

train_result = {}

with tqdm(enumerate(train_loader), disable=verbose != 1) as t:

for _, (x_train, y_train) in t:

x = x_train.to(self.device).float()

y = y_train.to(self.device).float()

y_pred = model(x).squeeze()

optim.zero_grad()

loss = loss_func(y_pred, y.squeeze(), reduction='sum')

reg_loss = self.get_regularization_loss()

total_loss = loss + reg_loss + self.aux_loss

loss_epoch += loss.item()

total_loss_epoch += total_loss.item()

total_loss.backward()

optim.step()

if verbose > 0:

for name, metric_fun in self.metrics.items():

if name not in train_result:

train_result[name] = []

train_result[name].append(metric_fun(

y.cpu().data.numpy(), y_pred.cpu().data.numpy().astype('float64') ))

# add epoch_logs

epoch_logs["loss"] = total_loss_epoch / sample_num

for name, result in train_result.items():

epoch_logs[name] = np.sum(result) / steps_per_epoch

if do_validation:

eval_result = self.evaluate(val_x, val_y, batch_size)

for name, result in eval_result.items():

epoch_logs["val_" + name] = result

if verbose > 0:

epoch_time = int(time.time() - start_time)

print('Epoch {0}/{1}'.format(epoch + 1, epochs))

eval_str = "{0}s - loss: {1: .4f}".format(epoch_time, epoch_logs["loss"])

for name in self.metrics:

eval_str += " - " + name + ": {0: .4f} ".format(epoch_logs[name]) + " - " + \

"val_" + name + ": {0: .4f}".format(epoch_logs["val_" + name])

print(eval_str)

if self.stop_training:

break

def evaluate(self, x, y, batch_size=256):

pred_ans = self.predict(x, batch_size)

eval_result = {}

for name, metric_fun in self.metrics.items():

eval_result[name] = metric_fun(y, pred_ans)

return eval_result

def predict(self, x, batch_size=256):

model = self.eval()

if isinstance(x, dict):

x = [x[feature] for feature in self.feature_index]

for i in range(len(x)):

if len(x[i].shape) == 1:

x[i] = np.expand_dims(x[i], axis=1)

tensor_data = Data.TensorDataset(

torch.from_numpy(np.concatenate(x, axis=-1)) )

test_loader = DataLoader(

dataset=tensor_data, shuffle=False, batch_size=batch_size )

pred_ans = []

with torch.no_grad():

for _, x_test in enumerate(test_loader):

x = x_test[0].to(self.device).float()

y_pred = model(x).cpu().data.numpy()

pred_ans.append(y_pred)

return np.concatenate(pred_ans).astype("float64")

def input_from_feature_columns(self, X, feature_columns, embedding_dict, support_dense=True):

sparse_feature_columns = list(

filter(lambda x: isinstance(x, SparseFeat), feature_columns)) if len(feature_columns) else []

dense_feature_columns = list(

filter(lambda x: isinstance(x, DenseFeat), feature_columns)) if len(feature_columns) else []

varlen_sparse_feature_columns = list(

filter(lambda x: isinstance(x, VarLenSparseFeat), feature_columns)) if feature_columns else []

if not support_dense and len(dense_feature_columns) > 0:

raise ValueError(

"DenseFeat is not supported in dnn_feature_columns")

sparse_embedding_list = [

embedding_dict[feat.embedding_name](

X[:, self.feature_index[feat.name][0]:self.feature_index[feat.name][1]].long()

) for feat in sparse_feature_columns ]

varlen_sparse_embedding_list = get_varlen_pooling_list(embedding_dict, X, self.feature_index,

varlen_sparse_feature_columns, self.device)

dense_value_list = [ X[:, self.feature_index[feat.name][0]:self.feature_index[feat.name][1]]

for feat in dense_feature_columns]

return sparse_embedding_list + varlen_sparse_embedding_list, dense_value_list

def compute_input_dim(self, feature_columns, include_sparse=True,

include_dense=True, feature_group=False):

sparse_feature_columns = list(

filter( lambda x: isinstance(x, (SparseFeat, VarLenSparseFeat)), feature_columns) )

if len(feature_columns) else []

dense_feature_columns = list(

filter(lambda x: isinstance(x, DenseFeat), feature_columns)) if len(feature_columns) else []

dense_input_dim = sum(

map(lambda x: x.dimension, dense_feature_columns))

if feature_group:

sparse_input_dim = len(sparse_feature_columns)

else:

sparse_input_dim = sum(feat.embedding_dim for feat in sparse_feature_columns)

input_dim = 0

if include_sparse:

input_dim += sparse_input_dim

if include_dense:

input_dim += dense_input_dim

return input_dim

def add_regularization_weight(self, weight_list, l1=0.0, l2=0.0):

if isinstance(weight_list, torch.nn.parameter.Parameter):

weight_list = [weight_list]

else:

weight_list = list(weight_list)

self.regularization_weight.append((weight_list, l1, l2))

def get_regularization_loss(self):

total_reg_loss = torch.zeros((1,), device=self.device)

for weight_list, l1, l2 in self.regularization_weight:

for w in weight_list:

if isinstance(w, tuple):

parameter = w[1] # named_parameters

else:

parameter = w

if l1 > 0:

total_reg_loss += torch.sum(l1 * torch.abs(parameter))

if l2 > 0:

try:

total_reg_loss += torch.sum(l2 * torch.square(parameter))

except AttributeError:

total_reg_loss += torch.sum(l2 * parameter * parameter)

return total_reg_loss

def add_auxiliary_loss(self, aux_loss, alpha):

self.aux_loss = aux_loss * alpha

def compile(self, optimizer, loss=None, metrics=None):

self.metrics_names = ["loss"]

self.optim = self._get_optim(optimizer)

self.loss_func = self._get_loss_func(loss)

self.metrics = self._get_metrics(metrics)

def _get_optim(self, optimizer):

if isinstance(optimizer, str):

if optimizer == "sgd":

optim = torch.optim.SGD(self.parameters(), lr=0.01)

elif optimizer == "adam":

optim = torch.optim.Adam(self.parameters()) # 0.001

elif optimizer == "adagrad":

optim = torch.optim.Adagrad(self.parameters()) # 0.01

elif optimizer == "rmsprop":

optim = torch.optim.RMSprop(self.parameters())

else:

raise NotImplementedError

else:

optim = optimizer

return optim

def _get_loss_func(self, loss):

if isinstance(loss, str):

if loss == "binary_crossentropy":

loss_func = F.binary_cross_entropy

elif loss == "mse":

loss_func = F.mse_loss

elif loss == "mae":

loss_func = F.l1_loss

else:

raise NotImplementedError

else:

loss_func = loss

return loss_func

def _log_loss(self, y_true, y_pred, eps=1e-7, normalize=True, sample_weight=None, labels=None):

# change eps to improve calculation accuracy

return log_loss(y_true,

y_pred,

eps,

normalize,

sample_weight,

labels)

def _get_metrics(self, metrics, set_eps=False):

metrics_ = {}

if metrics:

for metric in metrics:

if metric == "binary_crossentropy" or metric == "logloss":

if set_eps:

metrics_[metric] = self._log_loss

else:

metrics_[metric] = log_loss

if metric == "auc":

metrics_[metric] = roc_auc_score

if metric == "mse":

metrics_[metric] = mean_squared_error

if metric == "accuracy" or metric == "acc":

metrics_[metric] = lambda y_true, y_pred: accuracy_score(

y_true, np.where(y_pred > 0.5, 1, 0))

self.metrics_names.append(metric)

return metrics_

@property

def embedding_size(self):

feature_columns = self.dnn_feature_columns

sparse_feature_columns = list(

filter(lambda x: isinstance(x, (SparseFeat, VarLenSparseFeat)), feature_columns)) if len(

feature_columns) else []

embedding_size_set = set([feat.embedding_dim for feat in sparse_feature_columns])

if len(embedding_size_set) > 1:

raise ValueError("embedding_dim of SparseFeat and VarlenSparseFeat must be same in this model!")

return list(embedding_size_set)[0]

5.3.4 BaseTower 其辅助函数和类

下面是为了构建BaseTower所需要的几个辅助函数和类

------------------------------preorcessing.input------------------------------

import numpy as np

import torch

import torch.nn as nn

from collections import OrderedDict, namedtuple

from layers.sequence import SequencePoolingLayer

from collections import OrderedDict, namedtuple, defaultdict

from itertools import chain

DEFAULT_GROUP_NAME = "default_group"

def create_embedding_matrix(feature_columns, init_std=0.0001, linear=False, sparse=False, device='cpu'):

sparse_feature_columns = list(

filter(lambda x: isinstance(x, SparseFeat), feature_columns)) if len(feature_columns) else []

varlen_sparse_feature_columns = list(

filter(lambda x: isinstance(x, VarLenSparseFeat), feature_columns)) if len(feature_columns) else []

embedding_dict = nn.ModuleDict({feat.embedding_name: nn.Embedding(feat.vocabulary_size,

feat.embedding_dim if not linear else 1)

for feat in sparse_feature_columns + varlen_sparse_feature_columns})

for tensor in embedding_dict.values():

nn.init.normal_(tensor.weight, mean=0, std=init_std)

return embedding_dict.to(device)

def get_varlen_pooling_list(embedding_dict, features, feature_index, varlen_sparse_feature_columns, device):

varlen_sparse_embedding_list = []

for feat in varlen_sparse_feature_columns:

seq_emb = embedding_dict[feat.embedding_name](

features[:, feature_index[feat.name][0]:feature_index[feat.name][1]].long())

if feat.length_name is None:

seq_mask = features[:, feature_index[feat.name][0]:feature_index[feat.name][1]].long() != 0

emb = SequencePoolingLayer(mode=feat.combiner, support_masking=True, device=device)(

[seq_emb, seq_mask])

else:

seq_length = features[:, feature_index[feat.length_name][0]:feature_index[feat.length_name][1]

].long()

emb = SequencePoolingLayer(mode=feat.combiner, support_masking=False, device=device)(

[seq_emb, seq_length])

varlen_sparse_embedding_list.append(emb)

return varlen_sparse_embedding_list

def build_input_features(feature_columns):

features = OrderedDict()

start = 0

for feat in feature_columns:

feat_name = feat.name

if feat_name in features:

continue

if isinstance(feat, SparseFeat):

features[feat_name] = (start, start + 1)

start += 1

elif isinstance(feat, DenseFeat):

features[feat_name] = (start, start + feat.dimension)

start += feat.dimension

elif isinstance(feat, VarLenSparseFeat):

features[feat_name] = (start, start + feat.maxlen)

start += feat.maxlen

if feat.length_name is not None and feat.length_name not in features:

features[feat.length_name] = (start, start+1)

start += 1

else:

raise TypeError("Invalid feature column type,got", type(feat))

return features

------------------------------layers.core------------------------------

import torch

import torch.nn as nn

# 如果一切都是默认值,则是输出结果为=sigmoid(score+bias),其中bias可训练

class PredictionLayer(nn.Module):

def __init__(self, task='binary', use_bias=True, **kwargs):

if task not in ["binary", "multiclass", "regression"]:

raise ValueError("task must be binary,multiclass or regression")

super(PredictionLayer, self).__init__()

self.use_bias = use_bias

self.task = task

if self.use_bias:

self.bias = nn.Parameter(torch.zeros((1,)))

def forward(self, X):

output = X

if self.use_bias:

output += self.bias

if self.task == "binary":

output = torch.sigmoid(X)

return output

------------------------------preprocessing.utils------------------------------

import numpy as np

import torch

def slice_arrays(arrays, start=None, stop=None):

if arrays is None:

return [None]

if isinstance(arrays, np.ndarray):

arrays = [arrays]

if isinstance(start, list) and stop is not None:

raise ValueError('The stop argument has to be None if the value of start '

'is a list.')

elif isinstance(arrays, list):

if hasattr(start, '__len__'):

# hdf5 datasets only support list objects as indices

if hasattr(start, 'shape'):

start = start.tolist()

return [None if x is None else x[start] for x in arrays]

else:

if len(arrays) == 1:

return arrays[0][start:stop]

return [None if x is None else x[start:stop] for x in arrays]

else:

if hasattr(start, '__len__'):

if hasattr(start, 'shape'):

start = start.tolist()

return arrays[start]

elif hasattr(start, '__getitem__'):

return arrays[start:stop]

else:

return [None]

5.4 模型训练

这部分还是挺简单的

model.compile("adam", "binary_crossentropy", metrics=['auc', 'accuracy'])

# %%

model.fit(train_model_input, train[target].values, batch_size=256, epochs=10, verbose=2, validation_split=0.2)

参考文献:

DSSM

双塔的前世今生(Deep Structured Semantic Models)

DSSM原理解读与工程实践

DSSM双塔模型及pytorch实现