- Preparing the environment

  - Prepare a ZooKeeper environment (zookeeper-3.4.6)
  - Download the Kafka package (kafka_2.12-2.0.0)
  - Upload it to the server
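Before installing Kafka it can help to confirm that every ZooKeeper node is actually accepting connections. The following is a minimal sketch, not part of the original procedure: `port_open` and `check_zk_nodes` are hypothetical helper names, the check relies on bash's `/dev/tcp` and the coreutils `timeout` command, and it assumes a plain `host:port` list without a chroot suffix.

```shell
# port_open HOST PORT: succeed if a TCP connection opens within 3 seconds.
port_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# check_zk_nodes "host1:2181,host2:2181,...": report each node,
# return non-zero if any node is unreachable.
check_zk_nodes() {
  local connect="$1" node host port failed=0
  local IFS=','
  for node in $connect; do
    host="${node%%:*}"
    # Fall back to ZooKeeper's default client port when none is given.
    if [ "$node" = "$host" ]; then port=2181; else port="${node##*:}"; fi
    if port_open "$host" "$port"; then
      echo "reachable:   $host:$port"
    else
      echo "unreachable: $host:$port"
      failed=1
    fi
  done
  return "$failed"
}
```

For the three nodes used in this walkthrough the call would be `check_zk_nodes "192.168.147.102:2181,192.168.147.103:2181,192.168.147.104:2181"`.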

- Setting up Kafka

    # Extract the Kafka package into /usr/local
    tar -zxvf kafka_2.12-2.0.0.tgz -C /usr/local/
    # Rename the Kafka directory
    cd /usr/local/
    mv kafka_2.12-2.0.0/ kafka_2.12
    # Edit server.properties
    vi /usr/local/kafka_2.12/config/server.properties

    # (1) broker ID
    broker.id=0
    # (2) add the port
    port=9092
    # (3) add host.name
    host.name=192.168.147.102
    # (4) number of partitions
    num.partitions=5
    # (5) directory for the log (message) data
    log.dirs=/usr/local/kafka_2.12/kafka-logs
    # (6) ZooKeeper cluster addresses
    zookeeper.connect=192.168.147.102:2181,192.168.147.103:2181,192.168.147.104:2181
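The six settings above can also be applied without opening an editor. This is a sketch only: `set_prop` is a hypothetical helper, not something shipped with Kafka. It drops any existing active line for the key and appends the new value, leaving commented-out lines and similarly named keys (for example `advertised.host.name`) untouched.

```shell
# set_prop FILE KEY VALUE: set KEY=VALUE in a Java-style properties file.
set_prop() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp) || return 1
  # Keep every line that does not start with "KEY=".
  awk -v k="$key" 'index($0, k "=") != 1 { print }' "$file" > "$tmp"
  # Append the new assignment.
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Write back in place so the file keeps its owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

With the paths from this walkthrough, a call would look like `set_prop /usr/local/kafka_2.12/config/server.properties num.partitions 5`, repeated once per setting.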

- Create the directory where Kafka stores its messages (log data)

    [root@bigdata01 config]# mkdir -p /usr/local/kafka_2.12/kafka-logs
    [root@bigdata01 config]# cd /usr/local/kafka_2.12/
    [root@bigdata01 kafka_2.12]# ls
    bin  config  kafka-logs  libs  LICENSE  NOTICE  site-docs
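To keep the directory in step with the configuration, the path can be read from `log.dirs` instead of being typed twice. A small sketch, assuming `log.dirs` holds a comma-separated list of directories; `ensure_log_dirs` is a hypothetical helper name.

```shell
# ensure_log_dirs FILE: create every directory listed in log.dirs of FILE.
ensure_log_dirs() {
  local file="$1" dirs dir
  # Use the last active log.dirs line; later entries win in a properties file.
  dirs=$(grep '^log\.dirs=' "$file" | tail -n 1 | cut -d= -f2-)
  [ -n "$dirs" ] || { echo "log.dirs is not set in $file" >&2; return 1; }
  local IFS=','
  for dir in $dirs; do
    mkdir -p "$dir" || return 1
    echo "ok: $dir"
  done
}
```

Here the call would be `ensure_log_dirs /usr/local/kafka_2.12/config/server.properties`.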

- Start Kafka

    /usr/local/kafka_2.12/bin/kafka-server-start.sh /usr/local/kafka_2.12/config/server.properties &

    [2021-05-17 22:29:27,916] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)
    [2021-05-17 22:29:27,916] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)
    [2021-05-17 22:29:27,916] INFO Client environment:os.version=3.10.0-1160.el7.x86_64 (org.apache.zookeeper.ZooKeeper)
    [2021-05-17 22:29:27,916] INFO Client environment:user.name=root (org.apache.zookeeper.ZooKeeper)
    [2021-05-17 22:29:27,916] INFO Client environment:user.home=/root (org.apache.zookeeper.ZooKeeper)
    [2021-05-17 22:29:27,917] INFO Client environment:user.dir=/usr/local/kafka_2.12 (org.apache.zookeeper.ZooKeeper)
    [2021-05-17 22:29:27,918] INFO Initiating client connection, connectString=192.168.147.102:2181,192.168.147.103:2181,192.168.147.104 sessionTimeout=6000 watcher=kafka.zookeeper.ZooKeeperClient$ZooKeeperClientWatcher$@4386f16 (org.apache.zookeeper.ZooKeeper)
    [2021-05-17 22:29:27,947] INFO [ZooKeeperClient] Waiting until connected. (kafka.zookeeper.ZooKeeperClient)
    [2021-05-17 22:29:27,947] INFO Opening socket connection to server bigdata03/192.168.147.104:2181. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)
    [2021-05-17 22:29:27,968] INFO Socket error occurred: bigdata03/192.168.147.104:2181: Connection refused (org.apache.zookeeper.ClientCnxn)
    [2021-05-17 22:29:28,072] INFO Opening socket connection to server bigdata02/192.168.147.103:2181. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)
    [2021-05-17 22:29:28,073] INFO Socket connection established to bigdata02/192.168.147.103:2181, initiating session (org.apache.zookeeper.ClientCnxn)
    [2021-05-17 22:29:28,107] INFO Session establishment complete on server bigdata02/192.168.147.103:2181, sessionid = 0x1797aaf01bb0000, negotiated timeout = 6000 (org.apache.zookeeper.ClientCnxn)
    [2021-05-17 22:29:28,114] INFO [ZooKeeperClient] Connected. (kafka.zookeeper.ZooKeeperClient)
    [2021-05-17 22:29:28,707] INFO Cluster ID = 6vIlYDZhSDyFBAKl_N6Brg (kafka.server.KafkaServer)
    [2021-05-17 22:29:28,755] WARN No meta.properties file under dir /usr/local/kafka_2.12/kafka-logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)
    [2021-05-17 22:29:28,946] INFO KafkaConfig values:
        advertised.host.name = null
        advertised.listeners = null
        advertised.port = null
        alter.config.policy.class.name = null
        alter.log.dirs.replication.quota.window.num = 11
        alter.log.dirs.replication.quota.window.size.seconds = 1
        authorizer.class.name =
        auto.create.topics.enable = true
        auto.leader.rebalance.enable = true
        background.threads = 10
        broker.id = 0
        broker.id.generation.enable = true
        broker.rack = null
        client.quota.callback.class = null
        compression.type = producer
        connections.max.idle.ms = 600000
        controlled.shutdown.enable = true
        controlled.shutdown.max.retries = 3
        controlled.shutdown.retry.backoff.ms = 5000
        controller.socket.timeout.ms = 30000
        create.topic.policy.class.name = null
        default.replication.factor = 1
        delegation.token.expiry.check.interval.ms = 3600000
        delegation.token.expiry.time.ms = 86400000
        delegation.token.master.key = null
        delegation.token.max.lifetime.ms = 604800000
        delete.records.purgatory.purge.interval.requests = 1
        delete.topic.enable = true
        fetch.purgatory.purge.interval.requests = 1000
        group.initial.rebalance.delay.ms = 0
        group.max.session.timeout.ms = 300000
        group.min.session.timeout.ms = 6000
        host.name = 192.168.147.102
        inter.broker.listener.name = null
        inter.broker.protocol.version = 2.0-IV1
        leader.imbalance.check.interval.seconds = 300
        leader.imbalance.per.broker.percentage = 10
        listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL
        listeners = null
        log.cleaner.backoff.ms = 15000
        log.cleaner.dedupe.buffer.size = 134217728
        log.cleaner.delete.retention.ms = 86400000
        log.cleaner.enable = true
        log.cleaner.io.buffer.load.factor = 0.9
        log.cleaner.io.buffer.size = 524288
        log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308
        log.cleaner.min.cleanable.ratio = 0.5
        log.cleaner.min.compaction.lag.ms = 0
        log.cleaner.threads = 1
        log.cleanup.policy = [delete]
        log.dir = /tmp/kafka-logs
        log.dirs = /usr/local/kafka_2.12/kafka-logs
        log.flush.interval.messages = 9223372036854775807
        log.flush.interval.ms = null
        log.flush.offset.checkpoint.interval.ms = 60000
        log.flush.scheduler.interval.ms = 9223372036854775807
        log.flush.start.offset.checkpoint.interval.ms = 60000
        log.index.interval.bytes = 4096
        log.index.size.max.bytes = 10485760
        log.message.downconversion.enable = true
        log.message.format.version = 2.0-IV1
        log.message.timestamp.difference.max.ms = 9223372036854775807
        log.message.timestamp.type = CreateTime
        log.preallocate = false
        log.retention.bytes = -1
        log.retention.check.interval.ms = 300000
        log.retention.hours = 168
        log.retention.minutes = null
        log.retention.ms = null
        log.roll.hours = 168
        log.roll.jitter.hours = 0
        log.roll.jitter.ms = null
        log.roll.ms = null
        log.segment.bytes = 1073741824
        log.segment.delete.delay.ms = 60000
        max.connections.per.ip = 2147483647
        max.connections.per.ip.overrides =
        max.incremental.fetch.session.cache.slots = 1000
        message.max.bytes = 1000012
        metric.reporters = []
        metrics.num.samples = 2
        metrics.recording.level = INFO
        metrics.sample.window.ms = 30000
        min.insync.replicas = 1
        num.io.threads = 8
        num.network.threads = 3
        num.partitions = 5
        num.recovery.threads.per.data.dir = 1
        num.replica.alter.log.dirs.threads = null
        num.replica.fetchers = 1
        offset.metadata.max.bytes = 4096
        offsets.commit.required.acks = -1
        offsets.commit.timeout.ms = 5000
        offsets.load.buffer.size = 5242880
        offsets.retention.check.interval.ms = 600000
        offsets.retention.minutes = 10080
        offsets.topic.compression.codec = 0
        offsets.topic.num.partitions = 50
        offsets.topic.replication.factor = 1
        offsets.topic.segment.bytes = 104857600
        password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding
        password.encoder.iterations = 4096
        password.encoder.key.length = 128
        password.encoder.keyfactory.algorithm = null
        password.encoder.old.secret = null
        password.encoder.secret = null
        port = 9092
        principal.builder.class = null
        producer.purgatory.purge.interval.requests = 1000
        queued.max.request.bytes = -1
        queued.max.requests = 500
        quota.consumer.default = 9223372036854775807
        quota.producer.default = 9223372036854775807
        quota.window.num = 11
        quota.window.size.seconds = 1
        replica.fetch.backoff.ms = 1000
        replica.fetch.max.bytes = 1048576
        replica.fetch.min.bytes = 1
        replica.fetch.response.max.bytes = 10485760
        replica.fetch.wait.max.ms = 500
        replica.high.watermark.checkpoint.interval.ms = 5000
        replica.lag.time.max.ms = 10000
        replica.socket.receive.buffer.bytes = 65536
        replica.socket.timeout.ms = 30000
        replication.quota.window.num = 11
        replication.quota.window.size.seconds = 1
        request.timeout.ms = 30000
        reserved.broker.max.id = 1000
        sasl.client.callback.handler.class = null
        sasl.enabled.mechanisms = [GSSAPI]
        sasl.jaas.config = null
        sasl.kerberos.kinit.cmd = /usr/bin/kinit
        sasl.kerberos.min.time.before.relogin = 60000
        sasl.kerberos.principal.to.local.rules = [DEFAULT]
        sasl.kerberos.service.name = null
        sasl.kerberos.ticket.renew.jitter = 0.05
        sasl.kerberos.ticket.renew.window.factor = 0.8
        sasl.login.callback.handler.class = null
        sasl.login.class = null
        sasl.login.refresh.buffer.seconds = 300
        sasl.login.refresh.min.period.seconds = 60
        sasl.login.refresh.window.factor = 0.8
        sasl.login.refresh.window.jitter = 0.05
        sasl.mechanism.inter.broker.protocol = GSSAPI
        sasl.server.callback.handler.class = null
        security.inter.broker.protocol = PLAINTEXT
        socket.receive.buffer.bytes = 102400
        socket.request.max.bytes = 104857600
        socket.send.buffer.bytes = 102400
        ssl.cipher.suites = []
        ssl.client.auth = none
        ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]
        ssl.endpoint.identification.algorithm = https
        ssl.key.password = null
        ssl.keymanager.algorithm = SunX509
        ssl.keystore.location = null
        ssl.keystore.password = null
        ssl.keystore.type = JKS
        ssl.protocol = TLS
        ssl.provider = null
        ssl.secure.random.implementation = null
        ssl.trustmanager.algorithm = PKIX
        ssl.truststore.location = null
        ssl.truststore.password = null
        ssl.truststore.type = JKS
        transaction.abort.timed.out.transaction.cleanup.interval.ms = 60000
        transaction.max.timeout.ms = 900000
        transaction.remove.expired.transaction.cleanup.interval.ms = 3600000
        transaction.state.log.load.buffer.size = 5242880
        transaction.state.log.min.isr = 1
        transaction.state.log.num.partitions = 50
        transaction.state.log.replication.factor = 1
        transaction.state.log.segment.bytes = 104857600
        transactional.id.expiration.ms = 604800000
        unclean.leader.election.enable = false
        zookeeper.connect = 192.168.147.102:2181,192.168.147.103:2181,192.168.147.104
        zookeeper.connection.timeout.ms = 6000
        zookeeper.max.in.flight.requests = 10
        zookeeper.session.timeout.ms = 6000
        zookeeper.set.acl = false
        zookeeper.sync.time.ms = 2000
     (kafka.server.KafkaConfig)
    [2021-05-17 22:29:28,968] INFO KafkaConfig values:
        ... (identical to the values listed above)
     (kafka.server.KafkaConfig)
    [2021-05-17 22:29:29,025] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)
    [2021-05-17 22:29:29,027] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)
    [2021-05-17 22:29:29,029] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)
    [2021-05-17 22:29:29,109] INFO Loading logs. (kafka.log.LogManager)
    [2021-05-17 22:29:29,125] INFO Logs loading complete in 16 ms. (kafka.log.LogManager)
    [2021-05-17 22:29:29,146] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)
    [2021-05-17 22:29:29,153] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)
    [2021-05-17 22:29:30,308] INFO Awaiting socket connections on 192.168.147.102:9092. (kafka.network.Acceptor)
    [2021-05-17 22:29:30,359] INFO [SocketServer brokerId=0] Started 1 acceptor threads (kafka.network.SocketServer)
    [2021-05-17 22:29:30,402] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
    [2021-05-17 22:29:30,405] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
    [2021-05-17 22:29:30,413] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
    [2021-05-17 22:29:30,435] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)
    [2021-05-17 22:29:30,474] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)
    [2021-05-17 22:29:30,479] INFO Result of znode creation at /brokers/ids/0 is: OK (kafka.zk.KafkaZkClient)
    [2021-05-17 22:29:30,480] INFO Registered broker 0 at path /brokers/ids/0 with addresses: ArrayBuffer(EndPoint(192.168.147.102,9092,ListenerName(PLAINTEXT),PLAINTEXT)) (kafka.zk.KafkaZkClient)
    [2021-05-17 22:29:30,483] WARN No meta.properties file under dir /usr/local/kafka_2.12/kafka-logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)
    [2021-05-17 22:29:30,589] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
    [2021-05-17 22:29:30,599] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
    [2021-05-17 22:29:30,599] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
    [2021-05-17 22:29:30,613] INFO Creating /controller (is it secure? false) (kafka.zk.KafkaZkClient)
    [2021-05-17 22:29:30,623] INFO Result of znode creation at /controller is: OK (kafka.zk.KafkaZkClient)
    [2021-05-17 22:29:30,639] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)
    [2021-05-17 22:29:30,644] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)
    [2021-05-17 22:29:30,655] INFO [GroupMetadataManager brokerId=0] Removed 0 expired offsets in 10 milliseconds. (kafka.coordinator.group.GroupMetadataManager)
    [2021-05-17 22:29:30,677] INFO [ProducerId Manager 0]: Acquired new producerId block (brokerId:0,blockStartProducerId:0,blockEndProducerId:999) by writing to Zk with path version 1 (kafka.coordinator.transaction.ProducerIdManager)
    [2021-05-17 22:29:30,715] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)
    [2021-05-17 22:29:30,718] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)
    [2021-05-17 22:29:30,789] INFO [Transaction Marker Channel Manager 0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)
    [2021-05-17 22:29:30,913] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)
    [2021-05-17 22:29:31,005] INFO [SocketServer brokerId=0] Started processors for 1 acceptors (kafka.network.SocketServer)
    [2021-05-17 22:29:31,020] INFO Kafka version : 2.0.0 (org.apache.kafka.common.utils.AppInfoParser)
    [2021-05-17 22:29:31,020] INFO Kafka commitId : 3402a8361b734732 (org.apache.kafka.common.utils.AppInfoParser)
    [2021-05-17 22:29:31,022] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)
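The last line of the startup output, `[KafkaServer id=0] started`, is the one that signals a successful start. When the output is redirected to a file, a script can wait for that line instead of a person reading the log. The sketch below assumes the output was captured in a file of your choosing; `broker_started` and `wait_for_broker` are hypothetical helper names.

```shell
# broker_started LOGFILE BROKER_ID: succeed once the "started" line is present.
broker_started() {
  grep -q -F "[KafkaServer id=$2] started" "$1" 2>/dev/null
}

# wait_for_broker LOGFILE BROKER_ID [TIMEOUT_SECONDS]: poll once per second.
wait_for_broker() {
  local log="$1" id="$2" timeout="${3:-30}" waited=0
  until broker_started "$log" "$id"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "broker $id did not report startup within ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

For example, start the broker with its output sent to a file such as `/tmp/kafka-start.log` (an arbitrary path chosen for illustration), then run `wait_for_broker /tmp/kafka-start.log 0`.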

- Install kafka-manager

    # Extract kafka-manager
    unzip kafka-manager-2.0.0.2.zip -d /usr/local/
    # Edit the configuration file
    cd /usr/local/kafka-manager-2.0.0.2/conf
    vi application.conf
    kafka-manager.zkhosts="192.168.147.102:2181,192.168.147.103:2181,192.168.147.104:2181"

- Start the kafka-manager console

    nohup /usr/local/kafka-manager-2.0.0.2/bin/kafka-manager &

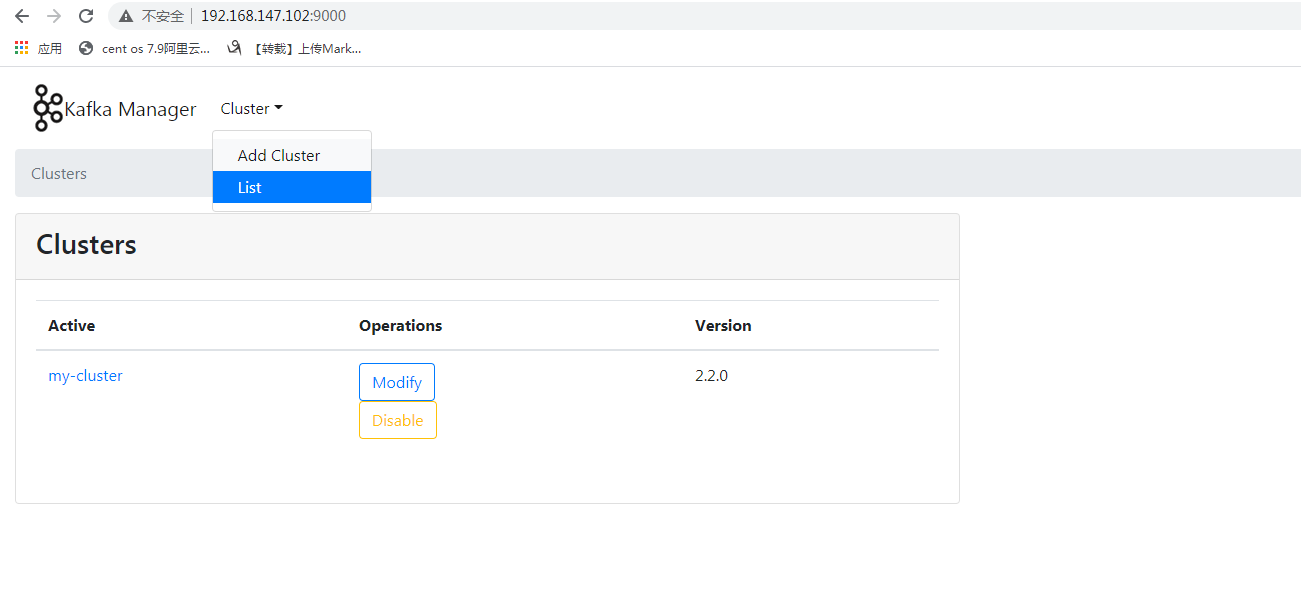

Open the console in a browser; the default port is 9000.

- Add a cluster

- Verify the cluster

    # In the console, create a topic named 'myTest' with 2 partitions and 1 replica
    cd /usr/local/kafka_2.12/bin
    # Start the script that sends messages
    ./kafka-console-producer.sh --broker-list 192.168.147.102:9092 --topic myTest
    # Start the script that receives messages
    ./kafka-console-consumer.sh --bootstrap-server 192.168.147.102:9092 --topic myTest
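The same check can be run unattended: send one uniquely tagged message and confirm it comes back. This is a sketch under assumptions, not the original procedure: `smoke_test` is a hypothetical helper, `KAFKA_BIN` and `BROKER` default to the paths and address used in this walkthrough, and the consumer is run with `--from-beginning` and `--timeout-ms` so that it exits on its own.

```shell
KAFKA_BIN="${KAFKA_BIN:-/usr/local/kafka_2.12/bin}"
BROKER="${BROKER:-192.168.147.102:9092}"

# smoke_test TOPIC: produce one tagged message and check the consumer sees it.
smoke_test() {
  local topic="$1" msg received
  msg="smoke-$(date +%s)-$$"
  # The console producer reads messages from stdin, one per line.
  printf '%s\n' "$msg" | "$KAFKA_BIN/kafka-console-producer.sh" \
    --broker-list "$BROKER" --topic "$topic" >/dev/null || return 1
  # Read the topic from the start; give up after 10 s without new messages.
  received=$("$KAFKA_BIN/kafka-console-consumer.sh" \
    --bootstrap-server "$BROKER" --topic "$topic" \
    --from-beginning --timeout-ms 10000 2>/dev/null)
  # Succeed only if the exact message appears as a whole line.
  printf '%s\n' "$received" | grep -q -x -F "$msg"
}
```

Running `smoke_test myTest` returns 0 when the message makes the round trip and non-zero otherwise.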