1. Definition of health checks (probes)

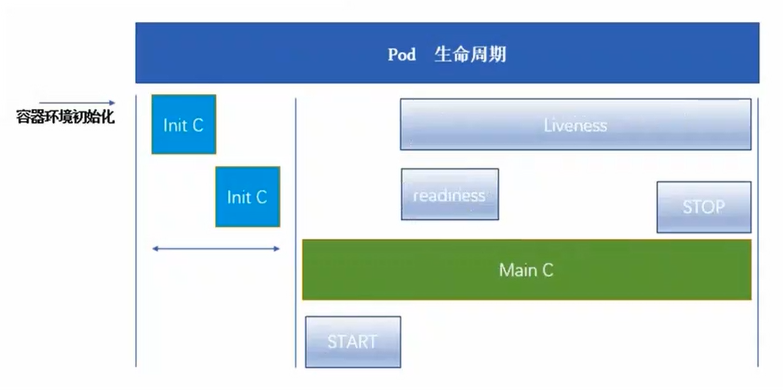

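Building on Docker, k8s gives applications a complete set of features: deploying and managing containers across multiple server hosts, service discovery, load balancing, and dynamic scaling. This makes large container clusters easy to manage. While a cloud application is running, uncertain factors (for example a momentarily unreachable network, a configuration error, or an internal program error) often cause abnormal conditions. To handle them, k8s provides a mechanism for checking container health. A health check, also called a probe, is a diagnostic that the kubelet runs periodically against a container.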

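2. Types of probes

2.1 Liveness check (livenessProbe, liveness probe)

Determines whether the container is running. If the probe fails, the kubelet kills the container, and the Pod's restartPolicy decides what happens to the container next. If a container does not define a liveness probe, its default state is Success.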
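As a minimal sketch of how restartPolicy interacts with a liveness probe, the manifest below sets the policy explicitly. The Pod name, container name, and timings are illustrative assumptions and are not part of the walkthrough later in this article.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-restart-policy   # hypothetical name
spec:
  # Always is the default and would restart the container after the kubelet kills it.
  # With Never, a container killed after failed liveness probes is not started again.
  restartPolicy: Never
  containers:
  - name: app                     # hypothetical name
    image: busybox
    command: ["/bin/sh", "-c", "touch /tmp/live; sleep 30; rm -f /tmp/live; sleep 600"]
    livenessProbe:
      exec:
        command: ["test", "-e", "/tmp/live"]
      initialDelaySeconds: 1
      periodSeconds: 3
```

Switching restartPolicy back to Always should reproduce the repeated restarts shown in the exec example in section 5.1.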

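2.2 Readiness check (readinessProbe, readiness probe, service probe)

Determines whether the container is ready to accept requests. If the probe fails, the endpoints controller removes the Pod's IP address from the endpoints of every Service that matches the Pod, so the failing Pod no longer receives traffic through those Services. Before the initial delay has elapsed, the readiness state defaults to Failure. If a container does not define a readiness probe, its default state is Success.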

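2.3 Startup check (startupProbe, startup probe, introduced as alpha in v1.16 and stable since v1.20)

Determines whether the application inside the container has started. It is mainly meant for applications whose startup time cannot be predicted. If a startupProbe is configured, all other probes are disabled until it succeeds, and only then do they take effect. If the startupProbe fails, the kubelet kills the container, and the container is restarted according to restartPolicy. If a container does not define a startupProbe, its default state is Success.

If all three probes are defined together, the liveness and readiness probes only begin after the startupProbe succeeds, and the Pod does not become Ready until the readinessProbe succeeds.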
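The article has no startupProbe example, so here is a hedged sketch of one that combines all three probes. The Pod and container names are hypothetical, and nginx stands in for a slow-starting application. The thresholds are assumptions chosen so that the arithmetic is easy to follow.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: startup-demo            # hypothetical name
spec:
  containers:
  - name: slow-app              # hypothetical name
    image: nginx                # stand-in for an application that starts slowly
    ports:
    - name: http
      containerPort: 80
    startupProbe:
      httpGet:
        path: /index.html
        port: http
      periodSeconds: 10
      failureThreshold: 30      # allows up to 30 * 10 = 300 seconds for startup
    livenessProbe:              # held back until the startupProbe has succeeded
      httpGet:
        path: /index.html
        port: http
      periodSeconds: 3
    readinessProbe:             # held back until the startupProbe has succeeded
      httpGet:
        path: /index.html
        port: http
      periodSeconds: 3
```

The design idea is that the startupProbe absorbs the long, unpredictable startup window, so the liveness probe can keep a short period without killing a container that is merely still starting.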

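3. The three check methods supported by probes

3.1 exec

Runs the specified command inside the container. If the command exits with 0, the container is considered healthy; if the command exits with a nonzero value, the container is considered unhealthy. The command is executed directly rather than through a shell, so shell syntax only works if a shell such as /bin/sh -c is invoked explicitly.

3.2 tcpSocket

Opens a TCP connection to the specified port of the container. If the connection can be established, the kubelet considers the container healthy; if it cannot, the kubelet considers the container unhealthy.

3.3 httpGet

Sends an HTTP GET request to the specified URI. If the response has a success status code (2xx or 3xx, that is, at least 200 and below 400), the kubelet considers the container healthy; any other status code makes the kubelet consider the container unhealthy.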

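Each probe produces one of three results:

● Success: the container passed the diagnostic
● Failure: the container failed the diagnostic
● Unknown: the diagnostic itself failed, so no action is taken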

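4. Optional parameters

| Field | Default | Minimum | Notes |
|---|---|---|---|
| initialDelaySeconds | 0 s | 0 s | Delay between container start and the first probe. |
| timeoutSeconds | 1 s | 1 s | Time after which a single probe attempt times out. |
| periodSeconds | 10 s | 1 s | Probe interval. Probing too often adds noticeable overhead to the Pod; probing too rarely delays the detection of container errors. |
| failureThreshold | 3 | 1 | Number of consecutive failures, starting from a success state, after which the probe is considered failed. |
| successThreshold | 1 | 1 | Number of consecutive successes, starting from a failure state, after which the probe is considered successful. Must be 1 for liveness and startup probes. |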
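To make the table concrete, the following sketch sets all five fields on a readiness probe. The Pod name, container name, and values are assumptions picked for illustration; the comments give rough timings rather than exact guarantees.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-params-demo     # hypothetical name
spec:
  containers:
  - name: web                 # hypothetical name
    image: nginx
    ports:
    - containerPort: 80
    readinessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 5  # first probe about 5 s after the container starts
      periodSeconds: 10       # then one probe every 10 s
      timeoutSeconds: 2       # an attempt with no answer within 2 s counts as a failure
      failureThreshold: 3     # marked not ready after 3 consecutive failures, roughly 3 * 10 = 30 s
      successThreshold: 2     # marked ready again after 2 consecutive successes
```

A successThreshold above 1 is only accepted on readiness probes, which is why the sketch uses readinessProbe rather than livenessProbe.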

5. Probe examples

5.1 exec

Official example

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  containers:
  - name: liveness
    image: k8s.gcr.io/busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5

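In this manifest the Pod has a single container. The periodSeconds field tells the kubelet to run a liveness probe every 5 seconds. The initialDelaySeconds field tells the kubelet to wait 5 seconds before the first probe. To probe, the kubelet runs the command cat /tmp/healthy inside the container. If the command succeeds and returns 0, the kubelet considers the container alive and healthy. If the command returns a nonzero value, the kubelet kills the container and restarts it.

Write the YAML manifest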

[root@master ~]#vim exec.yaml

[root@master ~]#cat exec.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec
  namespace: default
spec:
  containers:
  - name: liveness-exec-container
    image: busybox
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","touch /tmp/live; sleep 30; rm -rf /tmp/live; sleep 600"]
    livenessProbe:
      exec:
        command: ["test","-e","/tmp/live"]
      initialDelaySeconds: 1
      periodSeconds: 3

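In this manifest the Pod has a single container.

The container's command field creates the file /tmp/live, sleeps for 30 seconds, deletes the file, and then sleeps for 10 minutes.

Only a livenessProbe is used. It uses the exec method to check that the file /tmp/live exists.

The initialDelaySeconds field tells the kubelet to wait 1 second before the first probe.

The periodSeconds field tells the kubelet to run a liveness probe every 3 seconds.

Create the resource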

[root@master ~]#kubectl create -f exec.yaml

pod/liveness-exec created

Watch the Pod status

[root@master ~]#kubectl get pod -o wide -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-exec 1/1 Running 0 36s 10.244.1.15 node01 <none> <none>

liveness-exec 1/1 Running 1 (1s ago) 74s 10.244.1.15 node01 <none> <none>

liveness-exec 1/1 Running 2 (0s ago) 2m22s 10.244.1.15 node01 <none> <none>

liveness-exec 1/1 Running 3 (1s ago) 3m32s 10.244.1.15 node01 <none> <none>

liveness-exec 1/1 Running 4 (0s ago) 4m40s 10.244.1.15 node01 <none> <none>

liveness-exec 1/1 Running 5 (0s ago) 5m49s 10.244.1.15 node01 <none> <none>

liveness-exec 0/1 CrashLoopBackOff 5 (0s ago) 6m58s 10.244.1.15 node01 <none> <none>

liveness-exec 1/1 Running 6 (82s ago) 8m20s 10.244.1.15 node01 <none> <none>

liveness-exec 0/1 CrashLoopBackOff 6 (0s ago) 9m28s 10.244.1.15 node01 <none> <none>

liveness-exec 1/1 Running 7 (2m41s ago) 12m 10.244.1.15 node01 <none> <none>

liveness-exec 0/1 CrashLoopBackOff 7 (1s ago) 13m 10.244.1.15 node01 <none> <none>

......

View the Pod events

[root@master ~]#kubectl describe pod liveness-exec

Name: liveness-exec

Namespace: default

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 16m default-scheduler Successfully assigned default/liveness-exec to node01

Normal Pulling 16m kubelet Pulling image "busybox"

Normal Pulled 16m kubelet Successfully pulled image "busybox" in 2.316123738s

Normal Killing 13m (x3 over 15m) kubelet Container liveness-exec-container failed liveness probe, will be restarted

Normal Created 12m (x4 over 16m) kubelet Created container liveness-exec-container

Normal Started 12m (x4 over 16m) kubelet Started container liveness-exec-container

Normal Pulled 12m (x3 over 14m) kubelet Container image "busybox" already present on machine

Warning Unhealthy 11m (x13 over 15m) kubelet Liveness probe failed:

Warning BackOff 66s (x30 over 9m14s) kubelet Back-off restarting failed container

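Once the liveness probe has failed failureThreshold times in a row (3 by default), the kubelet kills the container and creates a new one. Because imagePullPolicy is IfNotPresent, the image already on the node is reused instead of being pulled again, as the "already present on machine" event shows. After repeated restarts the Pod enters CrashLoopBackOff and the kubelet waits longer between restarts.

5.2 httpGet

Official example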

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-http
spec:
  containers:
  - name: liveness
    image: k8s.gcr.io/liveness
    args:
    - /server
    livenessProbe:
      httpGet:
        path: /healthz
        port: 8080
        httpHeaders:
        - name: Custom-Header
          value: Awesome
      initialDelaySeconds: 3
      periodSeconds: 3

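In this manifest the Pod has a single container. The initialDelaySeconds field tells the kubelet to wait 3 seconds before the first probe. The periodSeconds field tells the kubelet to run a liveness probe every 3 seconds. To probe, the kubelet sends an HTTP GET request to the server running in the container, which listens on port 8080. If the handler for the server's /healthz path returns a success code, the kubelet considers the container alive and healthy. If the handler returns a failure code, the kubelet kills the container and restarts it.

Any return code greater than or equal to 200 and less than 400 indicates success; any other code indicates failure.

Write the YAML manifest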

[root@master ~]#cat httpget.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget
  namespace: default
spec:
  containers:
  - name: liveness-httpget-container
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: nginx
      containerPort: 80
    livenessProbe:
      httpGet:
        port: nginx
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 10

Create the resource

[root@master ~]#kubectl create -f httpget.yaml

pod/liveness-httpget created

[root@master ~]#kubectl get pod

NAME READY STATUS RESTARTS AGE

liveness-httpget 1/1 Running 0 59s

Delete the index.html file inside the Pod

[root@master ~]#kubectl exec -it liveness-httpget -- rm -rf /usr/share/nginx/html/index.html

Check the Pod status

[root@master ~]#kubectl get pod -w

NAME READY STATUS RESTARTS AGE

liveness-httpget 1/1 Running 1 (18s ago) 2m6s

......

View the container events

[root@master ~]#kubectl describe pod liveness-httpget

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 4m22s default-scheduler Successfully assigned default/liveness-httpget to node02

Normal Pulling 4m21s kubelet Pulling image "nginx"

Normal Pulled 3m25s kubelet Successfully pulled image "nginx" in 55.685103435s

Normal Created 2m34s (x2 over 3m25s) kubelet Created container liveness-httpget-container

Warning Unhealthy 2m34s (x3 over 2m40s) kubelet Liveness probe failed: HTTP probe failed with statuscode: 404

Normal Killing 2m34s kubelet Container liveness-httpget-container failed liveness probe, will be restarted

Normal Pulled 2m34s kubelet Container image "nginx" already present on machine

Normal Started 2m33s (x2 over 3m25s) kubelet Started container liveness-httpget-container

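The container was restarted because the HTTP probe received status code 404: HTTP probe failed with statuscode: 404.

After that restart it is not restarted again. The new container is created from the nginx image, which is already present on the node and therefore not pulled again, and that image contains index.html.

5.3 tcpSocket

Official example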

apiVersion: v1
kind: Pod
metadata:
  name: goproxy
  labels:
    app: goproxy
spec:
  containers:
  - name: goproxy
    image: k8s.gcr.io/goproxy:0.1
    ports:
    - containerPort: 8080
    readinessProbe:
      tcpSocket:
        port: 8080
      initialDelaySeconds: 5
      periodSeconds: 10
    livenessProbe:
      tcpSocket:
        port: 8080
      initialDelaySeconds: 15
      periodSeconds: 20

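This example uses both a readinessProbe and a livenessProbe. The kubelet sends the first readiness probe 5 seconds after the container starts by trying to connect to port 8080 of the goproxy container. If the probe succeeds, the Pod is marked ready, and the kubelet keeps running the check every 10 seconds. In addition to the readinessProbe, the configuration includes a livenessProbe. The kubelet runs the first liveness probe 15 seconds after the container starts. Like the readinessProbe, it tries to connect to port 8080 of the goproxy container. If the livenessProbe fails, the container is restarted.

Write the YAML manifest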

[root@master ~]#vim tcpsocket.yaml

[root@master ~]#cat tcpsocket.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-tcpsocket
spec:
  containers:
  - name: liveness-tcpsocket-container
    image: nginx
    livenessProbe:
      initialDelaySeconds: 5
      timeoutSeconds: 1
      tcpSocket:
        port: 8080
      periodSeconds: 3

Create the resource

[root@master ~]#kubectl apply -f tcpsocket.yaml

pod/liveness-tcpsocket created

Watch the Pod status

[root@master ~]#kubectl get pod -w

NAME READY STATUS RESTARTS AGE

liveness-tcpsocket 1/1 Running 0 31s

liveness-tcpsocket 1/1 Running 1 (16s ago) 43s

liveness-tcpsocket 1/1 Running 2 (17s ago) 71s

liveness-tcpsocket 1/1 Running 3 (2s ago) 83s

liveness-tcpsocket 0/1 CrashLoopBackOff 3 (1s ago) 94s

liveness-tcpsocket 1/1 Running 4 (28s ago) 2m1s

......

View the Pod events

[root@master ~]#kubectl describe pod liveness-tcpsocket

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m44s default-scheduler Successfully assigned default/liveness-tcpsocket to node02

Normal Pulled 2m28s kubelet Successfully pulled image "nginx" in 15.610378425s

Normal Pulled 2m1s kubelet Successfully pulled image "nginx" in 15.598030812s

Normal Created 94s (x3 over 2m28s) kubelet Created container liveness-tcpsocket-container

Normal Started 94s (x3 over 2m28s) kubelet Started container liveness-tcpsocket-container

Normal Pulled 94s kubelet Successfully pulled image "nginx" in 15.553201391s

Warning Unhealthy 83s (x9 over 2m23s) kubelet Liveness probe failed: dial tcp 10.244.2.18:8080: connect: connection refused

Normal Killing 83s (x3 over 2m17s) kubelet Container liveness-tcpsocket-container failed liveness probe, will be restarted

Normal Pulling 83s (x4 over 2m43s) kubelet Pulling image "nginx"

The restarts happen because nginx listens on port 80 by default, so the health check's connection to port 8080 is refused.

Delete the Pod

[root@master ~]#kubectl delete -f tcpsocket.yaml

pod "liveness-tcpsocket" deleted

Change the tcpSocket port

[root@master ~]#vim tcpsocket.yaml

[root@master ~]#cat tcpsocket.yaml

apiVersion: v1
kind: Pod
metadata:
  name: liveness-tcpsocket
spec:
  containers:
  - name: liveness-tcpsocket-container
    image: nginx
    livenessProbe:
      initialDelaySeconds: 5
      timeoutSeconds: 1
      tcpSocket:
        port: 80 # changed to port 80
      periodSeconds: 3

Create the resource and check it

[root@master ~]#kubectl apply -f tcpsocket.yaml

pod/liveness-tcpsocket created

[root@master ~]#kubectl get pod -o wide -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

liveness-tcpsocket 0/1 ContainerCreating 0 5s <none> node02 <none> <none>

liveness-tcpsocket 1/1 Running 0 17s 10.244.2.19 node02 <none> <none>

......

View the Pod events

[root@master ~]#kubectl describe pod liveness-tcpsocket

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 85s default-scheduler Successfully assigned default/liveness-tcpsocket to node02

Normal Pulling 85s kubelet Pulling image "nginx"

Normal Pulled 69s kubelet Successfully pulled image "nginx" in 15.532244594s

Normal Created 69s kubelet Created container liveness-tcpsocket-container

Normal Started 69s kubelet Started container liveness-tcpsocket-container

The Pod starts normally.

5.4 readinessProbe example 1

Write the YAML manifest

[root@master ~]#vim readiness-httpget.yaml

[root@master ~]#cat readiness-httpget.yaml

apiVersion: v1
kind: Pod
metadata:
  name: readiness-httpget
  namespace: default
spec:
  containers:
  - name: readiness-httpget-container
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    readinessProbe:
      httpGet:
        port: 80
        path: /index1.html # note: this path is deliberately wrong
      initialDelaySeconds: 1
      periodSeconds: 3
    livenessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 10

Create the resource and check the Pod status

[root@master ~]#kubectl apply -f readiness-httpget.yaml

pod/readiness-httpget created

[root@master ~]#kubectl get pod

NAME READY STATUS RESTARTS AGE

readiness-httpget 0/1 Running 0 8s

STATUS is Running, but the Pod never becomes READY.

View the Pod events

[root@master ~]#kubectl describe pod readiness-httpget

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 43s default-scheduler Successfully assigned default/readiness-httpget to node02

Normal Pulled 42s kubelet Container image "nginx" already present on machine

Normal Created 42s kubelet Created container readiness-httpget-container

Normal Started 42s kubelet Started container readiness-httpget-container

Warning Unhealthy 1s (x17 over 41s) kubelet Readiness probe failed: HTTP probe failed with statuscode: 404

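The cause is that the readinessProbe receives status code 404, so the kubelet keeps the Pod from becoming READY. The livenessProbe on /index.html still succeeds, which is why the container is not restarted.

View the logs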

[root@master ~]#kubectl logs readiness-httpget

......

2022/07/03 06:03:18 [error] 33#33: *65 open() "/usr/share/nginx/html/index1.html" failed (2: No such file or directory), client: 10.244.2.1, server: localhost, request: "GET /index1.html HTTP/1.1", host: "10.244.2.20:80"

2022/07/03 06:03:21 [error] 33#33: *67 open() "/usr/share/nginx/html/index1.html" failed (2: No such file or directory), client: 10.244.2.1, server: localhost, request: "GET /index1.html HTTP/1.1", host: "10.244.2.20:80"

10.244.2.1 - - [03/Jul/2022:06:03:21 +0000] "GET /index1.html HTTP/1.1" 404 153 "-" "kube-probe/1.22" "-"

10.244.2.1 - - [03/Jul/2022:06:03:21 +0000] "GET /index.html HTTP/1.1" 200 615 "-" "kube-probe/1.22" "-"

Create index1.html in the container

[root@master ~]#kubectl exec -it readiness-httpget -- touch /usr/share/nginx/html/index1.html

[root@master ~]#kubectl get pod # back to normal

NAME READY STATUS RESTARTS AGE

readiness-httpget 1/1 Running 0 2m52s

5.5 readinessProbe example 2

Write the YAML manifest

[root@master ~]#vim readiness-multi-nginx.yaml

[root@master ~]#cat readiness-multi-nginx.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx1
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    readinessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 5
      periodSeconds: 5
      timeoutSeconds: 10
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx2
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    readinessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 5
      periodSeconds: 5
      timeoutSeconds: 10
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx3
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    readinessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 5
      periodSeconds: 5
      timeoutSeconds: 10
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  # the Service binds to the nginx Pods through this selector
  selector:
    app: nginx
  type: ClusterIP
  ports:
  - name: http
    port: 80
    targetPort: 80

Create the resources

[root@master ~]#kubectl apply -f readiness-multi-nginx.yaml

pod/nginx1 created

pod/nginx2 created

pod/nginx3 created

service/nginx-svc created

......

[root@master ~]#kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx1 1/1 Running 0 88s 10.244.1.16 node01 <none> <none>

pod/nginx2 1/1 Running 0 88s 10.244.1.17 node01 <none> <none>

pod/nginx3 1/1 Running 0 88s 10.244.2.21 node02 <none> <none>

pod/readiness-httpget 1/1 Running 0 50m 10.244.2.20 node02 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6d19h <none>

service/nginx-svc ClusterIP 10.102.12.150 <none> 80/TCP 88s app=nginx

Delete index.html in nginx1

[root@master ~]#kubectl exec -it nginx1 -- rm -rf /usr/share/nginx/html/index.html

[root@master ~]#kubectl get pod -o wide -w # READY for nginx1 changes to 0/1

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx1 1/1 Running 0 3m5s 10.244.1.16 node01 <none> <none>

nginx2 1/1 Running 0 3m5s 10.244.1.17 node01 <none> <none>

nginx3 1/1 Running 0 3m5s 10.244.2.21 node02 <none> <none>

readiness-httpget 1/1 Running 0 52m 10.244.2.20 node02 <none> <none>

nginx1 0/1 Running 0 3m10s 10.244.1.16 node01 <none> <none>

......

View the Pod events

[root@master ~]#kubectl describe pod nginx1

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 4m6s default-scheduler Successfully assigned default/nginx1 to node01

Normal Pulling 4m5s kubelet Pulling image "nginx"

Normal Pulled 3m19s kubelet Successfully pulled image "nginx" in 46.172728026s

Normal Created 3m19s kubelet Created container nginx

Normal Started 3m18s kubelet Started container nginx

Warning Unhealthy 1s (x15 over 66s) kubelet Readiness probe failed: HTTP probe failed with statuscode: 404

Because httpGet receives status code 404, the readinessProbe fails and the kubelet marks the Pod as not ready.

View the Service details

[root@master ~]#kubectl describe svc nginx-svc # nginx1 has been removed from the Service's endpoints list

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.102.12.150

IPs: 10.102.12.150

Port: http 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.17:80,10.244.2.21:80

Session Affinity: None

Events: <none>

View the endpoints

[root@master ~]#kubectl get endpoints

NAME ENDPOINTS AGE

kubernetes 192.168.10.20:6443 6d19h

nginx-svc 10.244.1.17:80,10.244.2.21:80 6m43s

6. Startup and shutdown actions (postStart, preStop)

Write the YAML manifest

[root@master]#vim post.yaml

[root@master]#cat post.yaml

apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-test
spec:
  containers:
  - name: lifecycle-test-container
    image: nginx
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh","-c","echo Hello from the postStart handler >> /var/log/nginx/message"]
      preStop:
        exec:
          # the message text says "postStop", but this command runs in the preStop hook
          command: ["/bin/sh","-c","echo Hello from the postStop handler >> /var/log/nginx/message"]
    volumeMounts:
    - name: message-log
      mountPath: /var/log/nginx/
      readOnly: false
  initContainers:
  - name: init-nginx
    image: nginx
    command: ["/bin/sh","-c","echo 'Hello initContainers' >> /var/log/nginx/message"]
    volumeMounts:
    - name: message-log
      mountPath: /var/log/nginx/
      readOnly: false
  volumes:
  - name: message-log
    hostPath:
      path: /data/volumes/nginx/log/
      type: DirectoryOrCreate

Create the resource

[root@master ~]#kubectl apply -f post.yaml

pod/lifecycle-test created

Watch the Pod status

[root@master]#kubectl get pod -o wide -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

lifecycle-test 0/1 Init:0/1 0 5s <none> node01 <none> <none>

lifecycle-test 0/1 PodInitializing 0 17s 10.244.1.73 node01 <none> <none>

lifecycle-test 1/1 Running 0 19s 10.244.1.73 node01 <none> <none>

View the Pod events

[root@master]#kubectl describe po lifecycle-test

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 46s default-scheduler Successfully assigned default/lifecycle-test to node01

Normal Pulling 45s kubelet, node01 Pulling image "nginx"

Normal Pulled 30s kubelet, node01 Successfully pulled image "nginx"

Normal Created 30s kubelet, node01 Created container init-nginx

Normal Started 30s kubelet, node01 Started container init-nginx

Normal Pulling 29s kubelet, node01 Pulling image "nginx"

Normal Pulled 27s kubelet, node01 Successfully pulled image "nginx"

Normal Created 27s kubelet, node01 Created container lifecycle-test-container

Normal Started 27s kubelet, node01 Started container lifecycle-test-container

View the log file inside the container

[root@master]#kubectl exec -it lifecycle-test -- cat /var/log/nginx/message

Hello initContainers

Hello from the postStart handler

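The init container runs first. Then, as soon as the main container has been created, Kubernetes triggers the postStart hook.

After deleting the Pod, check the mounted file on the node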

[root@master]#kubectl delete -f post.yaml

pod "lifecycle-test" deleted

[root@node01 ~]#cat /data/volumes/nginx/log/message

Hello initContainers

Hello from the postStart handler

Hello from the postStop handler

As the output shows, Kubernetes triggers the preStop hook just before the container is terminated.
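A preStop hook is commonly used to let an application shut down cleanly. The sketch below is an illustration under stated assumptions, not part of the walkthrough above: the Pod name, container name, 5-second pause, and 30-second grace period are hypothetical values. It relies on the hook having to finish within terminationGracePeriodSeconds (30 seconds by default); a container that is still running when the grace period ends is forcibly terminated.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: prestop-demo                 # hypothetical name
spec:
  terminationGracePeriodSeconds: 30  # the default value, written out for clarity
  containers:
  - name: web                        # hypothetical name
    image: nginx
    lifecycle:
      preStop:
        exec:
          # pause briefly, then ask nginx to finish in-flight requests and exit
          command: ["/bin/sh", "-c", "sleep 5; nginx -s quit"]
```

The time spent in the hook counts against the grace period, so the pause should stay well below terminationGracePeriodSeconds.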

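Recreate the resource and view the log file inside the container. Because the file lives on a hostPath volume, the lines written by the previous Pod are still there.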

[root@master]#kubectl apply -f post.yaml

pod/lifecycle-test created

[root@master]#kubectl exec -it lifecycle-test -- cat /var/log/nginx/message

Hello initContainers

Hello from the postStart handler

Hello from the postStop handler

Hello initContainers

Hello from the postStart handler

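7. Summary

7.1 Probes

There are three kinds of probes:

- livenessProbe (liveness probe): determines whether the container is running properly. On failure the kubelet kills the container (not the Pod), and the restart policy decides whether the container is restarted.
- readinessProbe (readiness probe): determines whether the container can become ready. On failure the Pod is marked not ready and its IP is removed from the endpoints of the matching Services.
- startupProbe (startup probe): determines whether the application in the container has started. Until it succeeds, all other probes are disabled.

7.2 Check methods

There are three check methods:

- exec: runs the command given in the command field inside the container. An exit code of 0 means the probe succeeded.
- httpGet: sends an HTTP GET request to the specified port and URL path. A status code of at least 200 and below 400 means the probe succeeded.
- tcpSocket: opens a TCP connection to the Pod IP on the specified port. If the connection is established, the probe succeeded.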

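7.3 Common optional probe parameters

| Field | Default | Minimum | Notes |
|---|---|---|---|
| initialDelaySeconds | 0 s | 0 s | Delay between container start and the first probe. |
| timeoutSeconds | 1 s | 1 s | Time after which a single probe attempt times out. |
| periodSeconds | 10 s | 1 s | Probe interval. Probing too often adds noticeable overhead to the Pod; probing too rarely delays the detection of container errors. |
| failureThreshold | 3 | 1 | Number of consecutive failures, starting from a success state, after which the probe is considered failed. |
| successThreshold | 1 | 1 | Number of consecutive successes, starting from a failure state, after which the probe is considered successful. Must be 1 for liveness and startup probes. |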
